Audio Features for Isadora: What Do You Want?

-

I would think that panning is not a necessary feature to include inside actors. Presumably a panning effect can be achieved anyway by patching the matrix together with separate level controls for each channel. If your approach to sound routing never mentions Left and Right, it doesn't limit your setup to pannable stereo.

Ableton Live has a feature where you can assign A or B labels to different tracks and use a crossfader between the two groups; unassigned tracks are unaffected.
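A minimal sketch of that A/B idea, assuming an equal-power crossfade curve and a hypothetical per-track group label. This is not a description of how Live actually computes its crossfader; it only shows the grouping logic (A fades out as B fades in, unassigned tracks stay at unity).

```python
import math
from typing import Optional


def crossfader_gain(group: Optional[str], position: float) -> float:
    """Gain for one track given its crossfader group.

    position: 0.0 = full A, 1.0 = full B (hypothetical convention).
    group: "A", "B", or None for tracks not assigned to the crossfader.
    """
    position = min(max(position, 0.0), 1.0)  # clamp to the fader's range
    if group == "A":
        return math.cos(position * math.pi / 2)  # 1.0 at A, 0.0 at B
    if group == "B":
        return math.sin(position * math.pi / 2)  # 0.0 at A, 1.0 at B
    return 1.0  # unassigned tracks are unaffected


tracks = {"drums": "A", "pads": "B", "vocals": None}
gains = {name: round(crossfader_gain(g, 0.25), 3) for name, g in tracks.items()}
print(gains)  # {'drums': 0.924, 'pads': 0.383, 'vocals': 1.0}
```

The cos/sin pair keeps the summed power of the two groups constant across the fade; a linear curve would be simpler but dips in loudness at the midpoint.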

-

@mark Panning for more than two tracks is pretty irrelevant without some kind of spatial audio engine and an idea of the speaker locations. Systems like Spat, which allow for that, know where the speakers are and use something akin to ray casting to calculate how a multichannel sound rotated in a multi-speaker environment would sound from each speaker. Without all this extra data, this kind of panning is irrelevant. With individual volume controls for each channel, sounds can be rebalanced to suit a speaker setup, re-routed for mismatched channel mappings, or, where the multichannel stream carries sub-mixes or headphone feeds, used to create sends and sub-mixes. This is a pretty big step forward, and when serious audio work in a spatial environment needs to be done, other tools are needed anyway.
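Per-channel level controls plus re-routing can be pictured as a gain matrix: each output channel is a weighted sum of the input channels. A minimal sketch in plain Python, assuming float samples and a hypothetical `route` helper (this is not Isadora's internal design):

```python
from typing import List, Sequence


def route(frame: Sequence[float], matrix: Sequence[Sequence[float]]) -> List[float]:
    """Mix one multichannel frame through a gain matrix.

    matrix[out][inp] is the gain from input channel `inp` to output channel `out`.
    Rebalancing = changing gains; fixing a mismatched channel order = moving
    the 1.0 entries to different columns.
    """
    if any(len(row) != len(frame) for row in matrix):
        raise ValueError("every matrix row needs one gain per input channel")
    return [sum(g * s for g, s in zip(row, frame)) for row in matrix]


# A 4-channel file whose channels 3 and 4 are swapped; channel 1 trimmed ~6 dB.
swap_and_trim = [
    [0.5, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
]

# Stereo fanned out to 8 outputs: left to outputs 1, 3, 5, 7; right to 2, 4, 6, 8.
stereo_to_8 = [[1.0, 0.0] if out % 2 == 0 else [0.0, 1.0] for out in range(8)]

print(route([1.0, 2.0, 3.0, 4.0], swap_and_trim))  # [0.5, 2.0, 4.0, 3.0]
```

The same structure covers the "left to 1, 3, 5, 7" style of fan-out suggested later in the thread without any notion of a pan position.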

-

@mark Panning a stereo file also needs -3 dB at the center position. Panning multichannel audio needs something more complex, so at least for this first iteration it can be left out. Just routing left to 1, 3, 5, 7 and vice versa could be enough.

-

@maximortal said:

panning a stereo file also needs -3 dB at the center position.

Yes, the panning uses the -3 dB "equal power" formulas. There are actually a few panning formulas, but that one is common.
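For reference, one common form of the equal-power law (a sketch; the exact variant Isadora uses isn't stated here): map a pan position p in [-1, 1] to an angle θ = (p + 1)·π/4, then gain_L = cos θ and gain_R = sin θ. At center both gains are cos(π/4) ≈ 0.707, i.e. 20·log10(0.707) ≈ -3.01 dB, and gain_L² + gain_R² = 1 at every position, so total power stays constant across the pan.

```python
import math
from typing import Tuple


def equal_power_pan(pan: float) -> Tuple[float, float]:
    """Return (left_gain, right_gain) for pan in [-1.0 hard left, 1.0 hard right]."""
    pan = min(max(pan, -1.0), 1.0)       # clamp out-of-range input
    theta = (pan + 1.0) * math.pi / 4.0  # 0 .. pi/2
    return math.cos(theta), math.sin(theta)


def gain_to_db(gain: float) -> float:
    """Convert a linear amplitude gain to decibels."""
    return 20.0 * math.log10(gain)


left, right = equal_power_pan(0.0)
print(f"{gain_to_db(left):.2f} dB")  # -3.01 dB
```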

Best Wishes,

Mark

-

@bonemap said:

If stereo pairs are going to be irrelevant to the ‘sound player’, then stereo panning is irrelevant too, I would have thought.

Well, if you're outputting to a pair of channels, then I would expect panning to work, and it does.

It seems like the general consensus is that this is the only situation I should worry about. If you're outputting to more than two channels, I think the pan input will show as "n/a" to indicate it is not applicable.

Best Wishes,

Mark

-

@bonemap said:

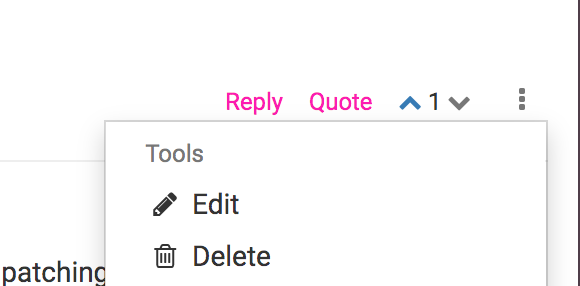

These posts are not user-editable.

You don't get these two options by clicking on the three dots at the bottom right of your comments?

-

@woland said:

You don't get these two options by clicking on the three dots at the bottom right of your comments?

It's because this thread is in Isadora Announcements; I think that category has some limitations for users in terms of editing. We could move the thread to another category, and that would probably solve it.

Best Wishes,

Mark

-

Audio and Timeline

I know this would be a FUTURE request, but for me one of the most important features missing in Isadora is the concept of a timeline and events. Audio and video are, to me, obvious ways to implement this approach in Izzy. I would love to be able to synchronize multiple events (triggers of numerous media, controllers, etc.) in exact relationship to TIME.

If the audio or video had a correlated grid where one could place multiple events, my live performance creations would progress dramatically, with less programming time.

If any of you recall Macromedia Director (long gone), its interface was later absorbed into Flash. This timeline-based software is incredibly powerful but does not have the flexibility and programming possibilities that Izzy has. To me, if this were added to Izzy, it would move Izzy into a new category of usability.

My 2 cents worth :)

-

@kdobbe said:

one of the most important features missing in Isadora is the concept of timeline and events.

Isadora is scene-based, and while it can do linear cueing, it's not timeline-based linear cueing. In turn, this allows for greater flexibility and the possibility of non-linear cueing. "Events", though, can be created with Timer actors, Trigger Delay actors, Clock actors, Comparators, etc.

@kdobbe said:

I would love to be able to synchronize multiple events (triggers of numerous media, controllers, etc) in exact relationship to TIME.

You can build your show to run off of time. It's not a graphical timeline interface, but there's the Timecode Comparator (and the aforementioned actors).
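Running a show "off of time" boils down to comparing a running clock against a list of cue times and firing each cue once when its time is reached, which is roughly the job a set of Timecode Comparators does in a patch. A minimal sketch of that logic in Python, with hypothetical cue names and seconds as the time unit; it is not Isadora's API, and it does not handle the clock jumping backwards.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass(order=True)
class Cue:
    time: float                                        # seconds from show start
    name: str = field(compare=False)
    action: Callable[[], None] = field(compare=False, repr=False)


class CueList:
    """Fires each cue exactly once, in time order, as the clock advances."""

    def __init__(self, cues: List[Cue]) -> None:
        self.cues = sorted(cues)  # sorted by time only
        self.next_index = 0

    def update(self, now: float) -> List[str]:
        """Fire every cue whose time has been reached since the last update."""
        fired = []
        while self.next_index < len(self.cues) and self.cues[self.next_index].time <= now:
            cue = self.cues[self.next_index]
            cue.action()
            fired.append(cue.name)
            self.next_index += 1
        return fired


log: List[str] = []
show = CueList([
    Cue(5.0, "lights_up", lambda: log.append("lights_up")),
    Cue(1.5, "start_audio", lambda: log.append("start_audio")),
])
print(show.update(1.0))   # []
print(show.update(2.0))   # ['start_audio']
print(show.update(10.0))  # ['lights_up']
```

Using "time reached since the last update" rather than an exact match means a cue still fires if the clock skips past it between checks.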

-

Dear All,

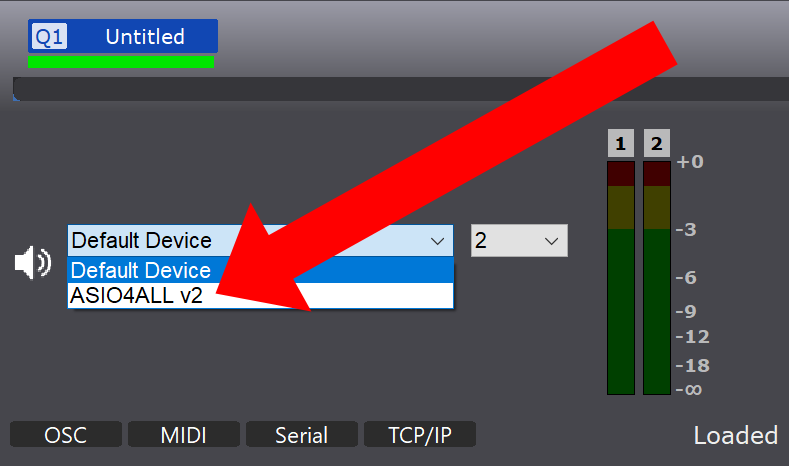

Some of you might be happy to see what I got working in Windows today. ;-)

-


@mark PD implements the Ambisonic system for sound spatialization, but clearly that is not a priority. I am very glad for your fantastic work, best!

-

@mark I want to generate a few tests that relate to some work I was trying to achieve; here is the first:

https://www.dropbox.com/s/wdrx...

It is a 16-channel audio file, WAV format, 24-bit, 48 kHz, interleaved. The channels carry a rising burst of tone, and only one channel has sound at a time; it cycles through the channels, one at a time, a few times. Will this work with the setup you are developing?
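To check a test file like this, it helps to know how interleaved 24-bit PCM is laid out: each frame holds one 3-byte little-endian signed sample per channel, channel 1 first. A minimal sketch in plain Python, assuming the raw bytes of the `data` chunk have already been extracted; `active_channel` is a hypothetical helper that reports which channel peaks highest in a block of frames.

```python
from typing import List

BYTES_PER_SAMPLE = 3  # 24-bit PCM


def decode_24bit(b: bytes) -> int:
    """Little-endian signed 24-bit sample to int."""
    return int.from_bytes(b, "little", signed=True)


def deinterleave(data: bytes, channels: int) -> List[List[int]]:
    """Split interleaved frames into one list of samples per channel."""
    frame_size = channels * BYTES_PER_SAMPLE
    if len(data) % frame_size:
        raise ValueError("data is not a whole number of frames")
    out: List[List[int]] = [[] for _ in range(channels)]
    for f in range(0, len(data), frame_size):
        for ch in range(channels):
            start = f + ch * BYTES_PER_SAMPLE
            out[ch].append(decode_24bit(data[start:start + BYTES_PER_SAMPLE]))
    return out


def active_channel(data: bytes, channels: int) -> int:
    """1-based index of the channel with the highest peak in this block."""
    peaks = [max((abs(s) for s in samples), default=0)
             for samples in deinterleave(data, channels)]
    return peaks.index(max(peaks)) + 1


# Synthetic block: 16 channels, 4 frames, only channel 5 carries signal.
frames = []
for _ in range(4):
    for ch in range(16):
        value = 100_000 if ch == 4 else 0
        frames.append(value.to_bytes(3, "little", signed=True))
block = b"".join(frames)
print(active_channel(block, 16))  # 5
```

Running this over successive blocks of the real file should show the active channel stepping through 1 to 16, which is an easy way to confirm a player kept the channel order intact.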

-

@fred said:

It is a 16-channel audio file, WAV format, 24-bit, 48 kHz, interleaved. The channels carry a rising burst of tone, and only one channel has sound at a time; it cycles through the channels, one at a time, a few times. Will this work with the setup you are developing?

You can find the answer to your question in this video link. ;-) Note that the speed is set to 2x, so the whole sequence goes by faster.

Best Wishes,

Mark

P.S. To be honest, it didn't work until I updated the WAVE parser to understand the WAVEFORMATEXTENSIBLE structure used in this file, but it was only a 10-minute job. I'm glad you sent the test file along. ;-)
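For anyone curious what that parser change involves: in a plain PCM file the `fmt ` chunk's format tag is 1, while a WAVEFORMATEXTENSIBLE file (typically used for more than two channels or more than 16 bits per sample) uses tag 0xFFFE and appends the valid bits per sample, a speaker channel mask, and a 16-byte SubFormat GUID whose first two bytes carry the real format code (1 = PCM, 3 = IEEE float). A minimal sketch of reading that chunk body with Python's `struct` module; it is illustrative only, not Isadora's parser.

```python
import struct
from typing import Dict

WAVE_FORMAT_PCM = 0x0001
WAVE_FORMAT_IEEE_FLOAT = 0x0003
WAVE_FORMAT_EXTENSIBLE = 0xFFFE


def parse_fmt_chunk(body: bytes) -> Dict[str, int]:
    """Parse the body of a RIFF/WAVE 'fmt ' chunk (all fields little-endian)."""
    if len(body) < 16:
        raise ValueError("fmt chunk too short")
    tag, channels, rate, byte_rate, block_align, bits = struct.unpack_from("<HHIIHH", body, 0)
    info = {"format": tag, "channels": channels, "sample_rate": rate,
            "byte_rate": byte_rate, "block_align": block_align, "bits_per_sample": bits}
    if tag == WAVE_FORMAT_EXTENSIBLE:
        if len(body) < 40:
            raise ValueError("extensible fmt chunk too short")
        cb_size, valid_bits, channel_mask = struct.unpack_from("<HHI", body, 16)
        if cb_size < 22:
            raise ValueError("extension size too small for WAVEFORMATEXTENSIBLE")
        sub_format = body[24:40]
        # The first two bytes of the SubFormat GUID hold the actual format code.
        info["format"] = struct.unpack_from("<H", sub_format, 0)[0]
        info["valid_bits"] = valid_bits
        info["channel_mask"] = channel_mask
    return info


# A header describing 16-channel, 24-bit, 48 kHz PCM (channel mask 0 here for
# simplicity; the real test file's mask value is unknown).
pcm_guid = bytes.fromhex("0100000000001000800000aa00389b71")
body = struct.pack("<HHIIHHHHI", WAVE_FORMAT_EXTENSIBLE, 16, 48000,
                   48000 * 48, 48, 24, 22, 24, 0) + pcm_guid
info = parse_fmt_chunk(body)
print(info["format"], info["channels"], info["valid_bits"])  # 1 16 24
```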

-

@mark that's amazing!!

I'm curious about working with video files as well. Do you think it could read the same if it were part of a HAP AVI? I can try to make one tomorrow if it is worth a try. VirtualDub should let me do this pretty easily.

-

@fred said:

I'm curious about working with video files as well. Do you think it could read the same if it were part of a HAP AVI?

As mentioned previously, no work has been done on the video side of this. We're going to get the audio part out to beta testers first, and once we've used that to review the user interface and how it all feels, we'll dig in to the video part.

In theory, yes, it's going to work the same, but you shouldn't expect to try it immediately.

Best Wishes,

Mark

-

Beat Detection

OMG, beat detection!

-

"Network radio/broadcast": I've been using VBAN (Voicemeeter audio network) to create point-to-point audio tunnels, and I think there may be better functionality built into Izzy, so I'm sorry if this is just my lack of knowledge, but...

(Relating to VBAN) I would prefer to be able to broadcast the audio without a static destination and pick it up on other machines without needing to specify the destination at the source (maybe I haven't looked hard enough; this may be a feature already).

-

I don't know if this is exactly an audio feature, but this is my biggest desire in Isadora. *blush* Sure, I know I shouldn't rely on it so much, but oh my, please, Mr. Coniglio:

MilkDrop/AVS + Spout (projectM) support built directly into Izzy (even as a plugin)

- expandable with some of the advanced preset editing options in node format for non-programmer people

  - even rudimentary controls to manipulate waveform size, color, sprites, etc.

Winamp works fine, but it's no longer supported, adds overhead (CPU/GPU) and potential vulnerabilities, and the whole thing could be streamlined without needing another application or a Spout/NDI video connection.

Also: add DYNAMIC PRESET CONTROL right in Izzy 🙏 Having to click on the Winamp visualization window and then hit space/scroll/back just kills me; I've never found a workaround.