Alpha Mask with Live Video

-

@Skulpture I noticed in your video that the mask has a nicely defined, smooth edge compared to what the raw data from the Kinect gives you. Do Gaussian Blur and Motion Blur do that, or NI Mate? What happens without the blur filters?

Thanks. -

Yeah it nearly always needs some blur.

I used to knock old CCTV cameras slightly out of focus to get similar results rather than adding blur in the software. But with ever faster machines I started adding it in the software. Normally a Gaussian Blur of 1 or 2 works nicely. -

Hi Guys,

Thanks to @Skulpture I was able to create this new interactive piece: Indicible Camouflage

I'm presenting it next week at a jazz event, and I've realised that in NI mate only the active user's alpha is active.

Is there a way to capture all alphas, no matter how many people stand in front of the device?

Thank you all for your great knowledge!

David

-

Glad I've helped you, David. Be sure to take some pictures and I will put them on my blog.

:-) -

Thanks @Skulpture, I will do so!!

:-) -

Hello Skulpture, I tried your patch, but instead of the Syphon actors I used the Kinect actor and the Kinect. My goal is to project video onto a dancer. Do you think that's possible with the Kinect? Where can I find the QC Syphon actors? I'm sending you a picture of my patch; just have a look at the right side.

Thank you for all your work.

Best wishes,

Bruno -

It looks like you will need a threshold actor - this will boost the white and make the black/grey more black (if that makes sense?). Put it directly after the output from the Kinect Actor. -
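To illustrate what a threshold does to a greyscale image, here is a minimal Python sketch. It assumes a hard cutoff on 0-255 values; the function name `threshold` and the parameter `cutoff` are made up for the example and are not Isadora's, and the actual actor may behave differently (for instance with a softer transition).

```python
def threshold(image, cutoff=128):
    """Return a hard black/white copy of a greyscale image.

    `image` is a list of rows of 0-255 values. `cutoff` is a hypothetical
    parameter name chosen for this sketch, not taken from Isadora.
    """
    # Pixels at or above the cutoff become pure white, the rest pure black.
    return [[255 if px >= cutoff else 0 for px in row] for row in image]


# A tiny made-up 3x3 "frame" with a mix of dark, grey and bright pixels.
frame = [
    [10, 40, 90],
    [120, 128, 200],
    [250, 60, 130],
]
mask = threshold(frame, cutoff=128)
```

Everything below the cutoff ends up fully black and everything else fully white, which is why the result works better as a mask than the raw greyscale image.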

Hello everybody,

I'm having some trouble using the Kinect with my new MacBook Pro. I have an NI mate license, but it crashes at launch, as does Synapse. I plugged the Kinect into a USB 3 port on my Mac, and I think that's the problem, but I don't have any USB 2 ports. Do you know a solution for that?

Thanks a lot -

I use a Kinect on a new MacBook with only USB3 ports and have no problems.

-

Me too, no problems.

-

It won't be the USB ports. It will be software/driver related.

-

OK, thanks for your answers.

-

@Skulpture

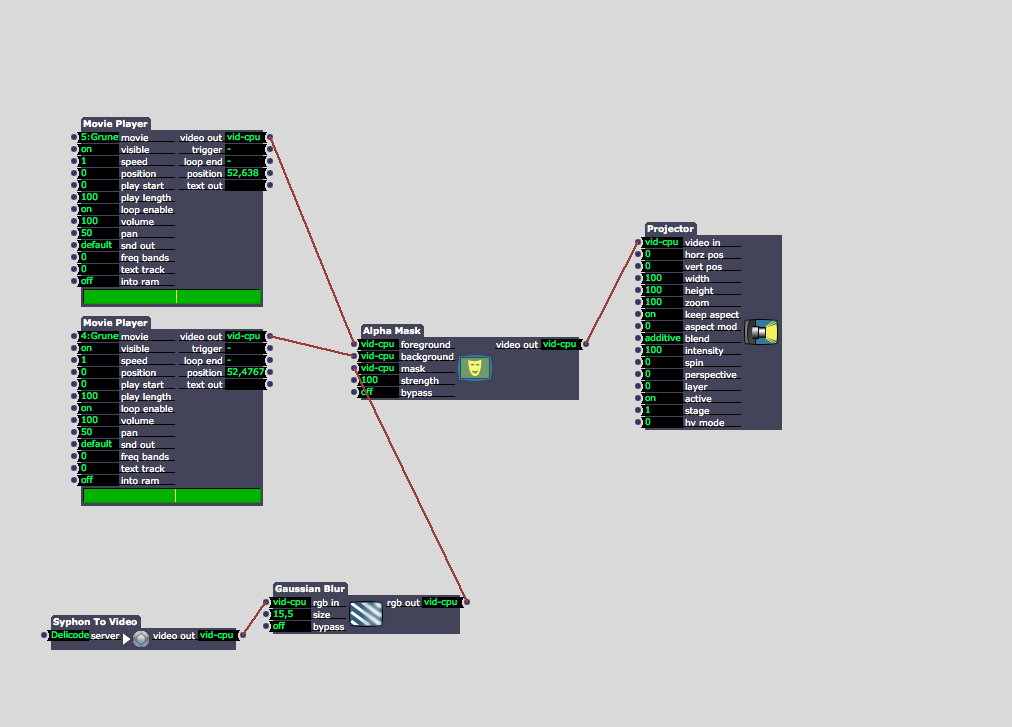

Hi, regarding your screenshot, I wanted to know whether the video actors are GPU based and, if so, whether that is because of the Core Video plugin. I have a similar arrangement with my Kinect, but because it's all vid-cpu, it's obviously very laggy.

It's going to be a big projection, a video installation project, so it's quite crucial that the resolution is as high as possible.

I would probably be able to borrow a new Mac Pro for the exhibition, but it seems weird to me that my computer can only manage 6-10 fps. The Isadora project probably won't exceed these two video layers and the alpha mask, so it's fairly simple. It's just the performance that bothers me.

and second question: is this gone with izzy 2? Because my uni owns the v1 license, and it would probably be worth upgrading for that $125...

Thanks for helping!!!

Leo

I have an early 2011 MBP, i7 2.2 GHz, with an ATI Radeon 6750M 1GB and 16GB RAM.

-

Hi,

Yes, the screenshot of mine from March 2013 is all CPU based. I don't think I even had access to much GPU stuff back then; or if I did, I was under strict orders from the boss not to tell anyone. :-)

You do want to keep as much as possible on the GPU. But it can get complicated, because there is no GPU alpha mask actor just yet, so you'd need to convert back to CPU again.

But the movies themselves could use the new version 2.0 players, which should help frame rates with the right H.264 codec.

I get a lot of questions, emails and PMs about this topic, so it may be time to revisit it and give it a refresh.

I am not sure what you mean by _"and second question: is this gone with izzy 2"_ - is what gone? Sorry, not following.

Hope this helps for now. -

Oh, what I meant is whether version 2 of Isadora (I thought you were all calling it izzy :)) is not going to have this kind of problem, because more actors are GPU based.

so the core video upgrade is good for what exactly? For better hardware acceleration in some specific actors I suppose? -

Two points:

1) In Isadora 2.0, the Core Video and Core Audio upgrades are both included -- all of those features are part of 2.0.

2) The reason the Core Video upgrade is still important is mostly that it allows you to use Quartz Composer patches as Isadora plugins.

Best Wishes,

Mark -

I finally got the Isadora 2 upgrade and, as you mentioned before, since there is no GPU-based alpha effect, I can't get much of a speed advantage out of the new version.

I'll try to find a workaround for mixing those two layers with an alpha layer. -

@mark regarding your point 2): would it be possible to build a GPU-based alpha mask patch in QC and then use it in Isadora?

And is there a list of all the GPU-based actors?

Sorry for my newbie kind of questions. I'm just beginning in this field.

Best wishes,

Leo

-

No need to build it in QC. You can use the Core Image actors. Generally speaking, they all run on the GPU just like FFGL.

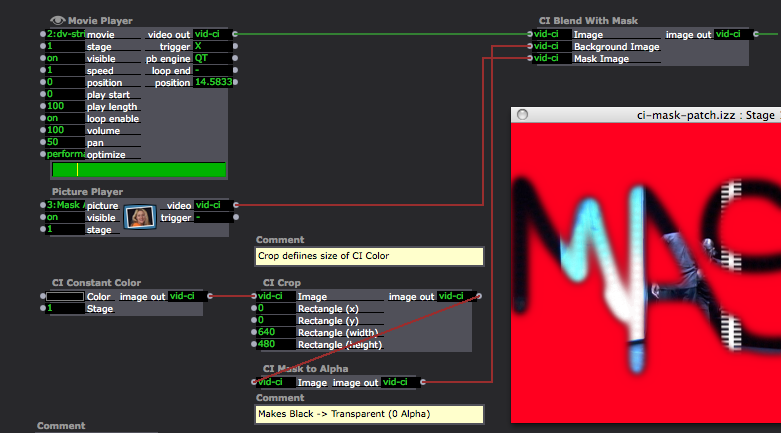

Use CI Blend with Mask to get what you want.

You'll need three images - the foreground, the background, and the mask. The mask image should be black and white (or grey for transparency).

See the snapshot of a patch in action for the arrangement.

Note that the CI Constant Color always needs a CI Crop actor to define its size. I'm also using the CI Mask To Alpha to convert the solid black color to transparent (i.e., 0 alpha).

Hope that helps,

Mark -
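For anyone wondering what a blend-with-mask does per pixel, here is a rough Python sketch of the idea on single-channel 0-255 images. It assumes the usual linear mix (white mask shows the foreground, black shows the background, grey mixes the two); the function name `blend_with_mask` is made up for the example, and the real actor does this on the GPU on full colour images.

```python
def blend_with_mask(foreground, background, mask):
    """Mix two greyscale images using a greyscale mask (all values 0-255).

    White mask pixels show the foreground, black ones show the background,
    and grey values give a proportional mix of the two.
    """
    result = []
    for fg_row, bg_row, mask_row in zip(foreground, background, mask):
        row = []
        for fg, bg, m in zip(fg_row, bg_row, mask_row):
            amount = m / 255.0  # 0.0 = background only, 1.0 = foreground only
            row.append(round(fg * amount + bg * (1.0 - amount)))
        result.append(row)
    return result


# One row of three pixels: mask is white, black, then mid grey.
fg = [[200, 200, 200]]
bg = [[50, 50, 50]]
mk = [[255, 0, 128]]
blended = blend_with_mask(fg, bg, mk)
```

In the example row the first pixel comes entirely from the foreground, the second entirely from the background, and the third lands roughly halfway between the two.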

Just saw this thread

I made a little Processing app way back that makes a black and white image from a certain depth of the Kinect depth image and Syphons it to Isadora. You can just use it with the mask actor. It works with the Kinect 1414.

Set it to 32bit in the info panel and use the up and down arrow keys to find your desired depth.

It also sends you an XY value for the center of the blob over OSC, so you can move your background image with the mask.

F
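The app itself isn't shown in this thread, so the following is only a rough Python sketch of the two ideas described: cutting a black and white mask out of a depth image, and finding the center of the resulting blob. The names `depth_slice`, `blob_center`, `near` and `far` are made up for the example, the depth values are invented, and the Syphon and OSC parts are left out entirely.

```python
def depth_slice(depth, near, far):
    """Return a mask that is white (255) where near <= depth <= far, else black (0)."""
    return [[255 if near <= d <= far else 0 for d in row] for row in depth]


def blob_center(mask):
    """Return the average (x, y) position of all white pixels, or None if there are none."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, px in enumerate(row):
            if px:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


# Invented 4x3 depth frame: a "person" at around 1200 in front of a far wall.
depth = [
    [900, 1200, 1250, 3000],
    [900, 1180, 1220, 3000],
    [900, 900, 3000, 3000],
]
mask = depth_slice(depth, near=1000, far=1500)
center = blob_center(mask)
```

Moving `near` and `far` together plays the same role as the arrow keys in the description above, and `center` is the kind of XY value you could use to make a background image follow the mask.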