@princecarlosthe5 I've achieved this kind of thing with Nintendo Joy-Con controllers, but you can do it quite easily with an iPhone or Android phone using applications like gyrOSC or DATAosc.

You can use Chataigne (an OSC timeline application for PC/Mac/Linux) to turn Joy-Con data natively into OSC messages, which Isadora can then ingest.
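For anyone wanting to test the receiving side before the phone or Joy-Con is set up, here is a rough sketch of what one of those OSC messages looks like on the wire, built by hand with only the Python standard library. The address `/isadora/1`, the port `1234`, and the host `127.0.0.1` are assumptions on my part (they are what I would expect a default Isadora setup to listen for), so check them against your own OSC settings. The helper names are made up for illustration.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate a string and pad it to a multiple of 4 bytes (OSC 1.0 rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)  # big-endian floats
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def send_osc(packet: bytes, host: str = "127.0.0.1", port: int = 1234) -> None:
    """Send one OSC packet over UDP. Host and port are assumed values."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


if __name__ == "__main__":
    # Hypothetical example: send a single tilt value to an assumed channel address.
    send_osc(osc_message("/isadora/1", 0.5))
```

If the value shows up on an OSC Listener actor, the network side is working and any remaining problem is in the app or Chataigne mapping.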

Hi all

I have been toying with Isadora as a game engine for a while now for fun, and I've had a great time over the past couple of weeks making a tank controls user actor. It's surprisingly tricky to get this kind of control of a character model normally. I might make the actor into a macro and allow downloads if anyone is interested.
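For anyone unfamiliar with the term, here is a minimal sketch of the maths behind tank controls in general: one input turns the character on the spot, the other moves it along whatever direction it is currently facing. This is not the user actor itself, just the generic idea written out in Python; the names `Tank` and `step`, the turn rate, and the speed are all hypothetical values picked for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Tank:
    x: float = 0.0            # position on the ground plane
    z: float = 0.0
    heading_deg: float = 0.0  # 0 degrees faces along +z


def step(tank: Tank, turn: float, throttle: float, dt: float,
         turn_rate: float = 90.0, speed: float = 2.0) -> Tank:
    """Advance one frame. turn and throttle are stick values in the range -1..1."""
    # Turning only changes the heading; it never moves the character sideways.
    heading = (tank.heading_deg + turn * turn_rate * dt) % 360.0
    rad = math.radians(heading)
    # Throttle moves along the current heading, not along the screen axes.
    distance = throttle * speed * dt
    return Tank(x=tank.x + math.sin(rad) * distance,
                z=tank.z + math.cos(rad) * distance,
                heading_deg=heading)
```

The tricky part hinted at above is that the movement direction depends on the accumulated heading, so the state has to be carried from frame to frame rather than mapped straight from the stick position.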

I've been pushing Isadora to its limits over the last few years as part of my job at the Academy of Live Technology UK, lecturing in live events technologies. My specialisms are sound and video systems, but I have got very into automation and that kind of thing. I don't know how I will use this yet, but I have been toying with the idea of a video game presentation that takes a person through different interactive game eras in the same experience: 2D into 3D, with probably a healthy dose of plagiarism! (Joke)

I'm looking for a device that I can hold in my hand wirelessly and have its position tracked across a screen (ideally three displays). This device would act like a mouse that can be seen on screen and can click on items. Are there any devices I should look at? Or controllers I can use to make my own and 3D print a case for?

Thanks in advance.

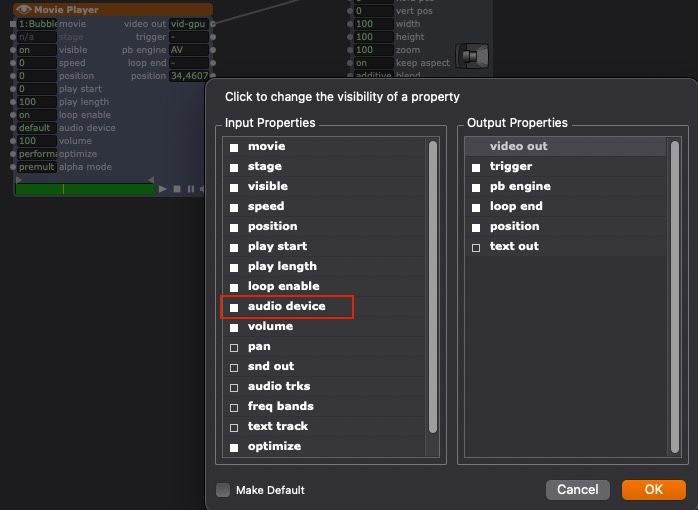

Here is a workaround, as this function no longer works in Movie Player since QuickTime X. You must have BlackHole (or similar) installed, and if your Movie Player does not show "Audio Device", click on the eye to the left of the actor name and activate this input.

Then select "Blackhole" as shown in the picture and you can adjust Pan and Volume in the AUMixer.

Best regards,

Jean-François

Thanks for the quick response, but I don't want to do that, as I am often working with multiple video files at quite short notice, so additional processing is not desirable for the workflow.

The sound actor pan works fine for me, but I really want to pan movie file audio within Isadora.

@notdoc why do you specifically want to use the video player for audio? Maybe convert the video to a .wav file and use the audio player instead, as it may have more useful features?

You could then still sync the play start of both the sound and video player actors?

Let me know how you get on!

I would like to be able to pan movie file output audio within Isadora, but whatever I do the 'pan' is greyed out.

My movie files have 2-channel stereo audio, which I am able to distinguish clearly in external output.

I have tried with multiple output sources; if I play around too long, Isadora crashes.

No project to show, just trying with a Movie Player in a freshly opened project.

Ventura 13.7.2 on M1 Max '21

@dusx Hey Ryan, thank you for getting back to me. Yes, my current setup is as in my signature.

Simon

@kfriedberg said:

In the NVIDIA Control Panel there's a setting for power management, which I set to prefer maximum performance and rebooted.

Interestingly, I took another look, and the performance management utilities on my Lenovo Legion automatically switch this based on my mode. So 'game' mode, which I use for shows, sets this to maximum.