Body tracking with YOLO

-

@n-jones said:

without a need to install python

Well, this code will require some configuration of Pythoner.

By default, Pythoner offers the full standard library included with Python; however, this doesn't include everything we need for this script.

So, a new virtual environment will need to be created, and then MediaPipe, OpenCV, and NumPy will need to be installed.

The module names for installation are:

- opencv-python

- mediapipe

- numpy

This is worth knowing because the names used for installation don't always match the names used to import the modules in Python code. For example, opencv-python is imported with `import cv2`.
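To illustrate the install-name vs. import-name difference, here is a small sketch that checks whether each required package can be imported by the interpreter that runs it. It assumes you run it inside the environment Pythoner is configured to use; the `REQUIRED` mapping and `missing_packages` helper are illustrative names, and the mapping covers only the three packages listed above (mediapipe and numpy happen to use the same name for both).

```python
import importlib.util

# pip install name -> name used in an `import` statement
REQUIRED = {
    "opencv-python": "cv2",
    "mediapipe": "mediapipe",
    "numpy": "numpy",
}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose import name cannot be found."""
    missing = []
    for pip_name, import_name in required.items():
        # find_spec returns None when the top-level module is not importable
        if importlib.util.find_spec(import_name) is None:
            missing.append(pip_name)
    return missing


if __name__ == "__main__":
    not_found = missing_packages()
    if not_found:
        print("Install with pip:", " ".join(not_found))
    else:
        print("All required packages are importable.")
```

The printed list uses the pip names, since those are what you type when installing, even though the check itself looks up the import names.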

You can find available modules at: https://pypi.org/

If you haven't already, I recommend watching "Getting Started With Pythoner" to get an understanding of how the configuration is handled. It really should only take 3-4 minutes to create the environment and install the modules needed, once you know how it works.

I will create a quick-and-dirty video tutorial to walk through this for this specific script/project ASAP.

-

-

@dusx thank you

-

-

I have posted a 'rough cut' of a tutorial video on setting up Pythoner to work with MediaPipe to my personal YouTube channel.

Please let me know if you find any issues or have feedback regarding clarity, etc. I will do a round of updates to the video before making it available officially on the Troikatronix YouTube channel.

Attached here is an example file (used in the video, but now updated).

Again, if you have suggestions to improve this let me know (it is meant as a starting point).

NOTE: Pythoner can use three different configurations for which Python environment is used.

I will make a video to walk through these and their pros and cons soon.

In this video, I focus on the global virtual environment setup Isadora supports.

The other options are a local (to the project root) virtual environment, and the default environment included in the Pythoner actor (the most portable option, but limited in features).

-
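If you're unsure which of these environments a script is actually running in, the standard library can tell you. This is a minimal sketch that assumes Pythoner's interpreter exposes the normal `sys` module; `describe_environment` is a hypothetical helper name. Inside a virtual environment created with `venv`, `sys.prefix` points at the environment while `sys.base_prefix` still points at the base installation.

```python
import sys


def describe_environment():
    """Report the interpreter path and whether a virtual environment is active."""
    # For venv (and modern virtualenv) environments, sys.prefix differs
    # from sys.base_prefix; in the base interpreter they are equal.
    in_venv = sys.prefix != sys.base_prefix
    return {
        "executable": sys.executable,
        "prefix": sys.prefix,
        "in_virtual_env": in_venv,
    }


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```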

@dusx Wow, Ryan, bravo! Fantastic!

-

I have so much to do until the end of the month that I can't try it yet. I'll get back to you as soon as I can. Thank you so much for your fantastic work.

Best regards,

Jean-François

-

-

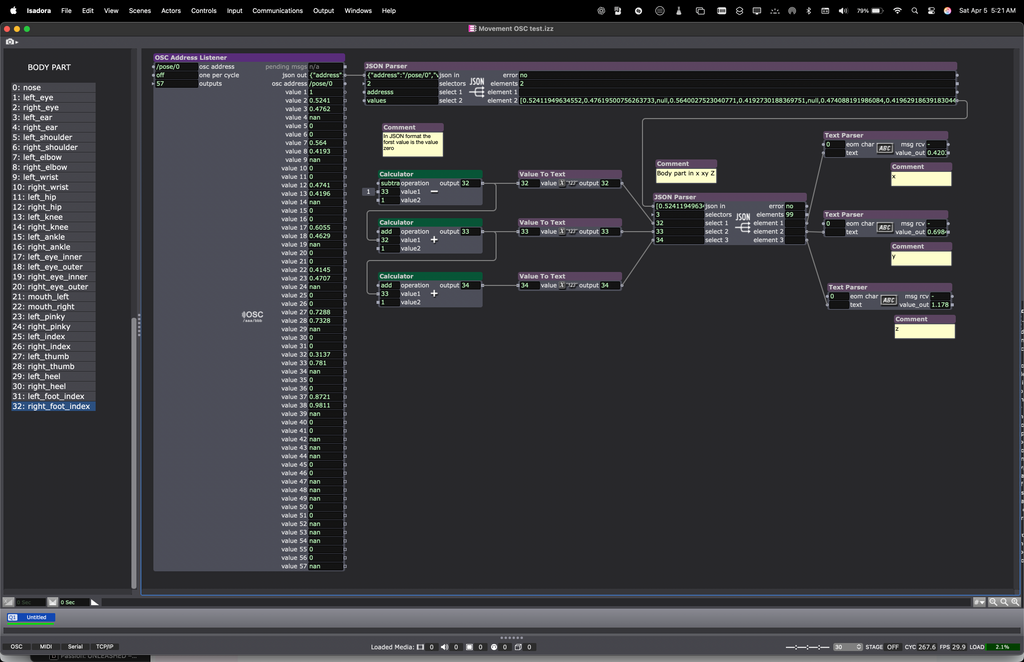

I just found a tool I think you'll love; it was given to me by its author, Colin Clark, an incredible person, a composer and software developer (and much more!) based in Toronto. He created software called MovementOSC (Mac, PC, and Linux) that does pose estimation and sends OSC. It supports both MoveNet and MediaPipe, so you can compare them! The software can format the OSC messages four ways, including bundled messages per axis, messages per axis, and a bundled xyz array. I use just the latter and then parse it in the JSON parser.

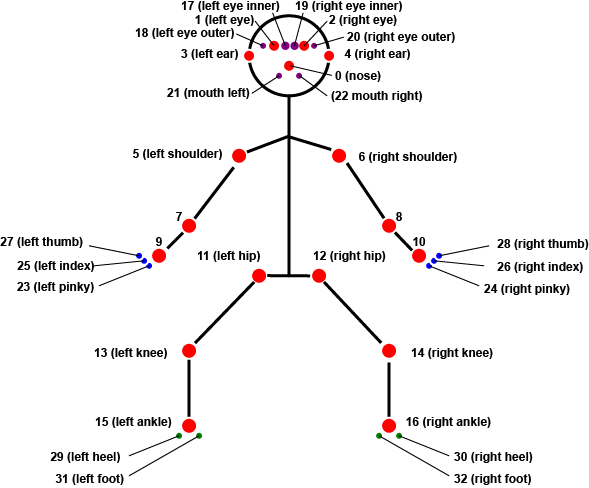

Here are the keypoints and the keypoints diagram

Enjoy !

0: nose

1: left_eye

2: right_eye

3: left_ear

4: right_ear

5: left_shoulder

6: right_shoulder

7: left_elbow

8: right_elbow

9: left_wrist

10: right_wrist

11: left_hip

12: right_hip

13: left_knee

14: right_knee

15: left_ankle

16: right_ankle

17: left_eye_inner

18: left_eye_outer

19: right_eye_inner

20: right_eye_outer

21: mouth_left

22: mouth_right

23: left_pinky

24: right_pinky

25: left_index

26: right_index

27: left_thumb

28: right_thumb

29: left_heel

30: right_heel

31: left_foot_index

32: right_foot_index
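Since the index order above is fixed, a name lookup avoids hard-coding magic numbers when unpacking the data. This is a minimal sketch, assuming the bundled xyz array for one person has already been decoded into a flat list of 33 × 3 numbers in x, y, z order per keypoint. That layout is an assumption, so check it against the messages MovementOSC actually sends; `KEYPOINTS`, `INDEX`, and `to_named_points` are illustrative names.

```python
# Keypoint names in the index order listed above (0..32).
KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
    "left_eye_inner", "left_eye_outer", "right_eye_inner", "right_eye_outer",
    "mouth_left", "mouth_right", "left_pinky", "right_pinky",
    "left_index", "right_index", "left_thumb", "right_thumb",
    "left_heel", "right_heel", "left_foot_index", "right_foot_index",
]

# Reverse lookup: name -> index
INDEX = {name: i for i, name in enumerate(KEYPOINTS)}


def to_named_points(flat):
    """Turn an assumed flat [x0, y0, z0, x1, y1, z1, ...] list into {name: (x, y, z)}."""
    expected = 3 * len(KEYPOINTS)
    if len(flat) != expected:
        raise ValueError(f"expected {expected} values, got {len(flat)}")
    return {
        name: tuple(flat[3 * i:3 * i + 3])
        for i, name in enumerate(KEYPOINTS)
    }
```

For example, `to_named_points(values)["left_wrist"]` reads keypoint 9 without needing to remember its position in the list.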

-

-

A thousand thanks. It works fantastically. Do you have any idea how to change the default port and format? On every start it goes back to 7500 and "bundled message per axis", and I cannot find how to change it.

Again, a thousand thanks for this find.

Best regards,

Jean-François

-

@gibsonmartelli Hello Bruno and Ruth!

-

Here is a test. In fact, the software is apparently even more interesting than I first saw: it is a framework for testing different models, which can run in parallel and be alternated and studied. Enable the developer panel from the menu. Astonishing!

-

I have also made a test and more: I put it in a patch for an installation and it works very well. But for an installation there are two limitations:

- the MovementOSC window has to be active on a screen

- I haven't found a way to save the settings or to change the default settings.

Anyway, it is very interesting software.

Best regards,

Jean-François

-

@jfg Yes, you are right; I also noticed that. Give feedback to Colin. But have you seen the developer mode? I am sure it's possible to correct that from within the software.

-

Hi @jfg, I'm the author of MovementOSC. I'm really glad to hear it's been useful for you!

I haven't noticed the first issue myself, so I'm very interested to learn more and see if I can reproduce it. I recently finished a residency at Jacob's Pillow where we used MovementOSC running in the background while another app (which transformed, visualized, and proxied OSC messages to a robotic system and to Isadora) was running in the foreground. What operating system are you running MovementOSC on, and what do you notice when it's not active on the screen?

As for the second issue, you're right: there's currently no persistence for settings, so it forgets any changes you make to the IP address, port, or model settings. I work on MovementOSC in my spare time, so I'm not exactly sure when I'll be able to add this feature, but I've filed an issue for it and will try to implement something when I can.

I'm excited to see what kinds of things you end up doing with it!

Colin

-

Hi Colin,

First of all, thank you very much for this wonderful software. I have been looking for something like this for a long time to make interactive installations.

In the interactive installation, an image is projected onto three walls (3 projectors), which changes as the audience progresses into the room. One camera is positioned so that the entrance is on the left and the “climax” on the right. It would be ideal if I could capture the audience from above, but I don't think MovementOSC could recognize people from this perspective. I haven't been able to test it yet. I tried filming from above with the Eyes+ actor, but because the ceiling is low I had to use a fisheye camera, and the distortion makes everything very difficult. My first tests with MovementOSC (from the side, as described above, not from above) have been very positive so far, although I have not yet been able to test with several people. I hope to be able to do so in the next few days.

For the installation I use a Mac mini M4 Pro, to which it is possible to connect "only" three screens/projectors, which I need for the three walls. When I use MovementOSC with Isadora, it works well as long as I am in "Stage Preview" mode. But when I put the stages in full-screen mode (Cmd+G), MovementOSC no longer works and the OSC data freezes. If I only needed two projectors and had MovementOSC on the third screen, there would be no problem. As a workaround, I made a fourth virtual screen with the app BetterDisplay and moved MovementOSC there. It seems to work, but it is too unreliable for a museum/gallery installation and must be set up manually every time, which is usually not reasonable to expect of the staff.

I hope I was clear enough and it can help for further development.

I also tried to set the format at startup in the file /Applications/MovementOSC.app/Contents/Resources/app/src/renderer/format-selector.js

I changed the order in "const OSC_FORMATS = [", and in fact after a restart "bundled-xyz-array" is shown immediately, but it does not work. Not even if I change

this.select = new Select(container, [], "bundled-message-per-axis");

to:

this.select = new Select(container, [], "bundled-xyz-array");

Since I'm not a programmer, I gave up before I completely broke something.

I will continue to try with the virtual screen and get back to you when I have tested further.

Edit:

Unfortunately, I have to correct something. I did the test on the Mac with "monitors use different spaces" enabled and only used one monitor. When I switched Isadora to full-screen mode, it worked fine, but in "different spaces" mode, Isadora only recognises one external monitor. When I turned this mode off and consequently had only one space, the virtual monitor was also part of that space, and MovementOSC again became inactive when I switched Isadora to full-screen mode, which is the presentation mode. To get it to work, I have to bring MovementOSC to the foreground, but then I see the menu bar. So this is not a workaround.

Edit 2:

Perhaps there is still a way with the virtual screen if I set it as the main screen, but this will cause other problems. I will try it in the gallery tomorrow.

Best regards,

Jean-François

-

You asked what I was doing with the software. Here is a short video about it:

https://www.guiton.de/seite-2....

It works pretty well when I only have one person in the installation, but as soon as there are several people, the detection keeps popping on and off, even if the people are not covering each other. What can I do about this? Is it a lighting problem, a distance problem, or both?
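One general way to hide short dropouts on the receiving side (a common smoothing technique, not something MovementOSC or Isadora is documented to do) is to hold the last valid position for a few frames before declaring a person lost. This is a minimal sketch, assuming undetected coordinates arrive as NaN, the convention Colin describes later in the thread; `HoldLastValid` and `max_missing` are hypothetical names, and a sensible frame count depends on your frame rate.

```python
import math


class HoldLastValid:
    """Repeat the last valid (x, y) for up to max_missing missing frames."""

    def __init__(self, max_missing=5):
        self.max_missing = max_missing
        self.last = None      # last valid (x, y), or None when lost
        self.missing = 0      # consecutive frames without a valid value

    def update(self, x, y):
        # Treat NaN in either coordinate as "not detected this frame".
        if math.isnan(x) or math.isnan(y):
            self.missing += 1
            if self.missing > self.max_missing:
                self.last = None  # dropout lasted too long: report as lost
            return self.last
        self.missing = 0
        self.last = (x, y)
        return self.last
```

This only masks brief flicker; it does not fix cases where the model swaps pose numbers between people.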

I have several questions:

What does “Minimum score” do?

Is it normal that I don't get z parameters in MoveNet Multi-Pose Lightning mode?

If I select “MoveNet Multi-Pose Lightning” and “bundled xyz array”, I still get 33 keypoints with x, y, and z (z without data). Is there a way to get only the parameters for the bounding box (x and y of the center, width, and height)?

How many people can be recognised at the same time?

Is there a maximum distance?

It seems that the detection depends on lighting, but what about infrared light?

And a completely different question: do you know a method or software to recognise people from above? I tried MovementOSC, and it doesn't work when you only see the head and shoulders from above, which is quite normal.
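As a stopgap for the box question, an approximate box can be derived from whatever keypoints were detected: take the minimum and maximum of their x and y values, skipping NaN entries. This is a sketch of that idea, not the model's own detection box. It only spans the detected joints, so it won't include, for example, the top of the head. `keypoint_box` is a hypothetical helper name.

```python
import math


def keypoint_box(points):
    """Return (center_x, center_y, width, height) spanning all valid points.

    points: iterable of (x, y) or (x, y, z) tuples. Entries whose x or y
    is NaN are treated as undetected and skipped. Returns None if no
    keypoint is valid.
    """
    xs, ys = [], []
    for p in points:
        x, y = p[0], p[1]
        if not (math.isnan(x) or math.isnan(y)):
            xs.append(x)
            ys.append(y)
    if not xs:
        return None
    left, right = min(xs), max(xs)
    top, bottom = min(ys), max(ys)
    return ((left + right) / 2, (top + bottom) / 2, right - left, bottom - top)
```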

Thanks a lot

Best regards,

Jean-François

-

Hi @jfg, your video projections look beautiful! Thank you for sharing. I'll try to answer your questions as best as I can:

1. All the ML models supported by MovementOSC provide a confidence score for each of the keypoints they detect. Increasing the minimum score requires a greater level of confidence before a keypoint is sent as recognized over OSC, so it gives you a way of filtering out less accurate, noisier keypoints by raising the minimum score closer to 1.0. Confidence is expressed as a floating-point number between 0 (all keypoints, no matter what) and 1 (100% confidence).

2. Yes, it's normal that the MoveNet Multi-Pose model does not provide the z axis. Only the MediaPipe BlazePose model provides depth data. As I understand it, BlazePose was trained with synthetic data from 3D-rendered images, so I don't know how accurate that data is in practice. All other models will return NaN for the Z axis.

I've designed the OSC output of MovementOSC to be stable, consistent, and monomorphic across all the models. This makes it more amenable for use in statically-typed languages and environments where having a variable number of keypoints changing on the fly when you select models will cause issues. Regardless of the model, the keypoints are always in the same order, and if a keypoint is not recognized, its x/y/z values will be returned as NaN. Someday I'd really like to create a nice mapping user interface for the software, where you can supply your own format and filter out keypoints as desired, but for now I think this is the least error-prone and most flexible approach.

3. There's no way currently to get the coordinates of the box via OSC, but it's something that makes a lot of sense for me to add. I've filed an issue about this, and will look into it when I have an opportunity.

4. Up to six people can be detected by the multi-pose model. Like you, I've also noticed that this model has some challenges when bodies overlap a lot and in cases where lighting conditions are unusual or less than ideal. For example, dancers would sometimes swap pose numbers when they crossed each other.

5. I don't know if there is a maximum distance; it likely depends on the model used.

6. I've not tried it with infrared light, and I'd be very curious to know how well it works. As far as I understand, the models have been trained on content illuminated with visible light, so their accuracy may degrade on IR images, but it may not. If anyone has the ability to try it out, I'd be very interested to know what the results were.

7. For distinguishing bodies from above without doing any kind of keypoint/pose detection, I wonder if a simpler strategy like blob detection might work reasonably well? I do think there's a huge amount of potential for training or fine-tuning custom models for better results, and it's something I'm planning to explore more, but it's a big project and will take time to fund and coordinate.
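To make the minimum-score idea and the NaN convention concrete, here is a sketch of the same kind of filtering done on the receiving side. The input shape, a list of (x, y, score) tuples, is a hypothetical assumption; this thread doesn't say whether scores are sent over OSC, and MovementOSC already applies its own minimum score. The point is the pattern: low-confidence keypoints become NaN while the list keeps its fixed length and order. `filter_keypoints` and `detected_count` are illustrative names.

```python
import math


def filter_keypoints(keypoints, min_score=0.5):
    """Replace low-confidence keypoints with NaN coordinates.

    keypoints: list of (x, y, score) tuples, score in [0, 1].
    The output keeps the same length and order, mirroring the
    fixed-order, NaN-for-missing output described above.
    """
    nan = float("nan")
    out = []
    for x, y, score in keypoints:
        if score >= min_score:
            out.append((x, y))
        else:
            out.append((nan, nan))  # below threshold: treat as not recognized
    return out


def detected_count(points):
    """Count points whose coordinates are not NaN."""
    return sum(1 for x, y in points if not (math.isnan(x) or math.isnan(y)))
```

Because NaN never compares equal to anything, receiving code should test with `math.isnan` rather than `== float("nan")`.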

Here is some more information about the models used in MovementOSC:

- Google's TensorFlow.js blog about MoveNet: https://blog.tensorflow.org/20...

- The model card for BlazePose: https://developers.google.com/...

I hope this helps,

Colin

-

Thank you for all the information. I am not clear about the confidence score: you say it has a value between 0 and 1, but when I start the software the value is 2.5?

With infrared it seems to work well, but I cannot compare: the room has no light except the video projection, so I cannot tell how it would work in the same configuration (room, camera position) with normal daylight.

Again thanks a lot for all your information and the software.

Best regards,

Jean-François.

The video makes it so much easier for me to understand now.
