Eyes is CPU only?

-

Hi All

I'm trying to set up a patch using multiple Eyes actors with a live USB camera input as a source of triggers. I'm using the latest, greatest Isadora on an older MacBook Pro 5,1 (10.6.8) and am trying to follow the edict to use GPU-based actors. My camera input seems to be CPU, as does Eyes. Any way around this, or should I just get on with it?

Many thanks,
John -

For the moment you will need to use the GPU to CPU Converter actor. We will make this actor fully GPU compatible in the v2.2 release in late December, and an updated version of the plugin will be made available as soon as it is ready.

(Also, please note that the updated plugin will have no advantage over using the GPU to CPU Converter, because the video _must_ be on the CPU to do the analysis; the updated plugin will simply do this for you.)

Best Wishes,
Mark -

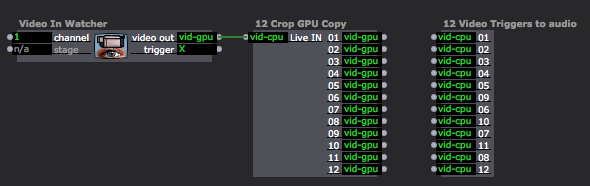

Thanks Mark... a wee bit confused as usual. My live video can, it turns out, come in as GPU. If I connect it to a Crop actor, then the crop is GPU. My current question concerns the mutability of inputs. I made a user actor with 12 crops and a video in. Originally that was a CPU in. If I hook up a GPU input, the crop actors don't all change to GPU. If I try to set the input type inside the actor, Izzy sort of crashes. I thought the inputs might change to match a new input type. I can redo my user actor, but I'd like to understand the workings of mutable inputs better. I am assuming that GPU processing on my old MBP is worth the effort.

Thanks!
John -

Hello again

My little brain is having a hard time with GPU and CPU processing. Here's what I know: my live video input seems to mutate to either GPU or CPU depending on what I hook it up to. Eyes seems to be CPU only, though I made 12 instances last evening as GPU and saved them as a user actor. I thought using GPU for cropping would be the most efficient. Maybe not?

This morning, upon reopening, it is back to CPU. If I feed Eyes a GPU or CPU input, it doesn't change from one to the other. Or, as seen in this screenshot, it seems to say they are both working, but I can't hook up from GPU to CPU. Really confused. On Mark's suggestion, I tried adding a converter actor from Crop (GPU) to Eyes (CPU), but there was a substantial hit on cycles when adding 12 converters.

John

-

This is a bit of a difficult situation that, I think, will be solved as Izzy passes this transition phase. However, something about the way you have approached this rings a few alarm bells.

I would look at using Eyes++, looking at the location of the blobs, and routing and culling data instead of cropping the image (I assume you are cropping into 12 different regions, otherwise you would not have 12 crops).

The blob decoder from Eyes++ tells you where the data is. Ignoring data outside a set range of x and y values will do the same thing as cropping the image, but much more efficiently. As far as I can foresee at the moment, there is no reason to use 12 crop actors when the data can be filtered the same way from the blob decoder. You can set up 24 Inside Range actors (one per axis for each of the 12 regions) to gate the data from the blobs.

I would really look at changing your approach to this to improve efficiency. Isadora is technically a programming environment and lets you connect whatever you want, whether it is a good idea or not. In this case, amazingly, your patch apparently still runs this way, which is a great credit to Izzy (especially seeing as you are using a 7-year-old computer with a tiny onboard GFX card; most phones have more GPU power these days).

If you give a little more detail about what you are doing inside those user actors, I can try to help make it a bit more efficient.

Fred -
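For readers following along, the gating idea can be sketched in JavaScript. This is only an illustration, not Isadora code: `insideRange` and `blobInRegion` are hypothetical helper names, and the blob position is assumed to arrive as percentages (0 to 100) of the frame width and height. One region needs two range checks, which is why 12 regions come to 24 Inside Range actors.

```javascript
// Hypothetical helper that mirrors a single Inside Range check:
// true when value lies between low and high (inclusive).
function insideRange(value, low, high) {
  return value >= low && value <= high;
}

// A blob counts as "in the region" only when both axes pass their range
// check, i.e. one range check for x and one for y.
// Assumption: blob.x and blob.y are percentages (0-100) of the frame.
function blobInRegion(blob, region) {
  return insideRange(blob.x, region.xMin, region.xMax) &&
         insideRange(blob.y, region.yMin, region.yMax);
}

// Example region: the top-left cell of an assumed 4 x 3 layout.
const topLeft = { xMin: 0, xMax: 25, yMin: 0, yMax: 33.3 };

console.log(blobInRegion({ x: 10, y: 20 }, topLeft)); // true
console.log(blobInRegion({ x: 60, y: 20 }, topLeft)); // false
```

The point of the comparison with cropping is that each check here is a couple of number comparisons per blob, rather than a per-pixel image operation per region.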

Thank you, Fred, for such a helpful reply. I started with Eyes++, then abandoned it for no particular reason. I want to build a trigger matrix based on my live manipulation of objects in front of a fixed live video camera. Redoing with Eyes++ and blob decoders!

John -

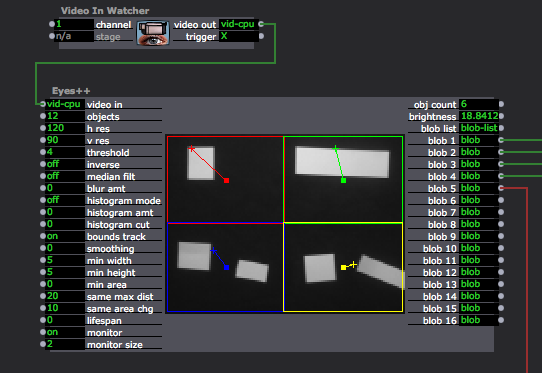

So I'm experimenting with Eyes++ and have two questions. Is it CPU only? And how do I set it up for multiple objects? I'm using a black fabric background with white paper squares. In the attached picture, I've got 5 objects but only four areas. You can see that four blob detectors are working, but not the fifth. I want 12 "regions" which I can use to trigger other events.

Any suggestions would be most welcome!

John

-

Yes, Eyes++ is CPU only. Can you explain your scenario a little more? I think (but it depends on your needs) you can just track the objects and define the regions by filtering the blob centroid output from the blob decoder so that it corresponds to your 12 regions, either with Inside Range actors or, more efficiently, with the JavaScript actor.
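As a rough sketch of the JavaScript route, the function below maps a blob centroid to one of 12 region numbers in a single step. Everything specific here is an assumption for illustration: the name `regionIndex`, the 4 x 3 grid layout, and centroid coordinates expressed as percentages (0 to 100). How the function is wired into the JavaScript actor's inputs and outputs is left out and should be checked against the Isadora documentation.

```javascript
// Map a centroid (x, y) to a region number 0..(cols * rows - 1).
// Assumptions: x and y are percentages (0-100) of the frame, and the
// 12 regions form a regular grid of 4 columns by 3 rows.
function regionIndex(x, y, cols = 4, rows = 3) {
  // Clamp so that edge values such as x = 100 still land in the last cell.
  const col = Math.min(cols - 1, Math.max(0, Math.floor((x / 100) * cols)));
  const row = Math.min(rows - 1, Math.max(0, Math.floor((y / 100) * rows)));
  // Regions are numbered left to right, top to bottom.
  return row * cols + col;
}

console.log(regionIndex(10, 10));   // 0  (top-left cell)
console.log(regionIndex(30, 50));   // 5  (second column, middle row)
console.log(regionIndex(100, 100)); // 11 (bottom-right cell)
```

One calculation replaces the 24 separate range checks, which is where the efficiency gain would come from if the regions really do form a regular grid.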

-

Hi

Just to follow up on this process, not that anyone is interested, but... I ended up using 12 Crops in GPU mode to divide my live camera input into zones. Then I added Calc Brightness to each zone with (right now) 3 levels of brightness, which trigger sounds and video as I make a graphic design. My old MBP 5,1 is running at around 240 cycles, 30 fps @ 33% processor. That's sure to go up as I add details and complexity, but I'm pleased at this point. Thanks to Mark and Fred!

John
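For anyone wanting to reproduce the brightness-level idea outside of a patch, here is a minimal sketch. The thresholds (33 and 66), the 0 to 100 brightness scale, and the names `brightnessLevel` and `makeZoneTrigger` are all assumptions for illustration; the actual levels used in the patch above are not given in the thread.

```javascript
// Classify a zone's brightness into level 0, 1, or 2.
// Assumption: brightness is a percentage (0-100); thresholds are hypothetical.
function brightnessLevel(brightness, thresholds = [33, 66]) {
  let level = 0;
  for (const t of thresholds) {
    if (brightness >= t) level++;
  }
  return level;
}

// Build a per-zone trigger that reports a level only when it changes,
// so a steady image does not retrigger sounds or video every frame.
function makeZoneTrigger() {
  let last = null;
  return function update(brightness) {
    const level = brightnessLevel(brightness);
    const changed = level !== last;
    last = level;
    return changed ? level : null; // null means "no new trigger"
  };
}

const zone = makeZoneTrigger();
console.log(zone(10)); // 0    (first reading always reports)
console.log(zone(20)); // null (still level 0)
console.log(zone(70)); // 2    (jumped to the brightest level)
```

Twelve such triggers, one per zone, would give the same kind of trigger matrix described above.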