[ANSWERED - TICKET] OpenNI & Orbbec crashes

-

Assuming you want to send both skeleton tracking data (OSC) and video data (NDI), you should get a Gigabit Ethernet router and some Gigabit-capable Ethernet cables (one for each computer). You will also need Ethernet adapters for your Macs if they don't have built-in Ethernet ports.

OSC (numbers / text)

You can send the skeleton tracking data via a number of OSC channels, or you can group the values into JSON (using either the JSON Bundler add-on or the Javascript actor) and send each skeleton as a single JSON string. You will then need to use either the JSON Parser add-on or the Javascript actor on the receiving system to unpack the skeleton data values.
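To illustrate the "one JSON string per skeleton" idea outside Isadora, here is a minimal Python sketch of the packing and unpacking steps. It is not what the JSON Bundler, JSON Parser or Javascript actor literally do: the joint names, the 0..1 coordinate range and the message layout are hypothetical assumptions chosen only to show the shape of the data.

```python
import json

# Hypothetical joint names and coordinate ranges; the real OpenNI Tracker
# outputs and the JSON Bundler's layout may differ.
Joints = dict[str, tuple[float, float, float]]


def bundle_skeleton(skeleton_id: int, joints: Joints) -> str:
    """Sender side: pack one skeleton into a single JSON string."""
    payload = {
        "id": skeleton_id,
        "joints": {name: {"x": x, "y": y, "z": z} for name, (x, y, z) in joints.items()},
    }
    # Compact separators keep the string short when it is sent as one OSC string.
    return json.dumps(payload, separators=(",", ":"))


def parse_skeleton(message: str) -> tuple[int, Joints]:
    """Receiver side: unpack the JSON string back into joint values."""
    payload = json.loads(message)
    joints = {name: (j["x"], j["y"], j["z"]) for name, j in payload["joints"].items()}
    return payload["id"], joints


if __name__ == "__main__":
    sent = bundle_skeleton(1, {"left_hand": (0.25, 0.4, 1.8), "right_hand": (0.7, 0.42, 1.75)})
    print(sent)
    print(parse_skeleton(sent))
```

The trade-off is one longer string message per skeleton instead of many single-value OSC channels, which keeps all the joints of a frame together but means the receiver has to parse the string before using any value.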

NDI (video)

Sending the video via NDI can be achieved by creating a Virtual Stage in Stage Setup for each video stream you want to send and checking the NDI output option on the Virtual Stage settings pane. Then send the video from the OpenNI Tracker actor to one or more Projectors with the virtual stage/s set for the stage input.

You will need the NDI watcher actor from the Add-ons page to bring the NDI video stream into your other computer.

-

@vidasonik said:

I believe I need to connect my two Macs via Ethernet? I have to use my older Mac for OpenNI and send data to the new one (M1 Max). Can you advise what cables/router etc. I would need?

@Juriaan Got any specific gear recommendations for this?

-

@dusx said:

OSC (numbers / text)

You can send the skeleton tracking data via a number of OSC channels, or you can group the values into JSON (using either the JSON Bundler add-on or the Javascript actor) and send each skeleton as a single JSON string. You will then need to use either the JSON Parser add-on or the Javascript actor on the receiving system to unpack the skeleton data values.

NDI (video)

Sending the video via NDI can be achieved by creating a Virtual Stage in Stage Setup for each video stream you want to send and checking the NDI output option on the Virtual Stage settings pane. Then send the video from the OpenNI Tracker actor to one or more Projectors with the virtual stage/s set for the stage input.

You will need the NDI watcher actor from the Add-ons page to bring the NDI video stream into your other computer.

I already built this setup for @Vidasonik; the only thing left is sorting out the equipment and settings for a solid network connection.

-

@woland @Vidasonik

I would recommend a Gigabit router with multicast support for OSC communication between two computers. You could simply pick up a gaming router; that would work fine. The UI / settings menu on those devices is also friendlier than on the pro devices that I use in the field.

A Netgear router, for example, is a great option that can be picked up without breaking the bank. Just make sure you are in the medium-to-high-end price range to get proper support for high bandwidth, multicast and VLANs.
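To put "high bandwidth" in perspective, here is a rough back-of-the-envelope estimate of what the skeleton data alone costs on the wire. It is a Python sketch under assumptions that do not come from Isadora or OpenNI documentation: 15 tracked joints with x/y/z each, 30 frames per second, every value sent as its own single-float OSC message of 20 bytes, and only UDP/IPv4 header overhead counted (Ethernet framing ignored).

```python
# Rough estimate of OSC skeleton traffic. All figures are assumptions.
OSC_FLOAT_MESSAGE_BYTES = 20      # e.g. "/isadora/1" padded to 12 + ",f" padded to 4 + 4-byte float
UDP_IPV4_HEADER_BYTES = 8 + 20    # UDP header + minimal IPv4 header


def osc_bits_per_second(joints: int, values_per_joint: int, fps: float,
                        message_bytes: int = OSC_FLOAT_MESSAGE_BYTES) -> float:
    """Bits per second when every value is sent as a separate OSC float message."""
    messages_per_second = joints * values_per_joint * fps
    return messages_per_second * (message_bytes + UDP_IPV4_HEADER_BYTES) * 8


if __name__ == "__main__":
    bps = osc_bits_per_second(joints=15, values_per_joint=3, fps=30)
    print(f"{bps / 1e6:.2f} Mbit/s per tracked skeleton")
```

Under those assumptions the result is roughly half a megabit per second per skeleton, a tiny fraction of a 1000 Mbit/s link, so it is most likely the NDI video rather than the OSC data that benefits from the Gigabit headroom.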

Pro devices that I personally use;

https://eu.store.ui.com/produc... with a dedicated Wi-Fi access point

-

@juriaan Thanks! I'm currently bidding for this on eBay after some advice from Ryan Webber:

NETGEAR Nighthawk R7000 AC1900 Smart WiFi Router

I guess I just need two standard Ethernet cables to connect? Hopefully then I can get some nice smooth curves from the tracked hands of the dancer. I don't think the theatre networks I've used can transfer enough data.

Thanks everyone, David

-

@vidasonik But then, of course, I'll have to get a couple of adapters so I can plug into the USB-C sockets on each Mac!!

-

@juriaan I now have a Netgear Nighthawk as advised, plus Ethernet cables and adapters, but I can't seem to connect the two Macs.

-

You likely need to do this. Unlike the video, you will likely want to disable Wi-Fi.

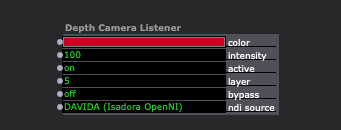

In the "Depth Camera Listener" User Actor I made for you, you might also need to go to the 'ndi source' input and re-select your NDI source.

Best wishes,

Woland

-

@woland Ethernet doesn't appear in my list when I open the Network pane in System Preferences. I've no idea what to do, having spent over £100 on connectors, adapters and the Netgear Nighthawk!

-

I sent you a message through the ticket system. I may be able to help.

-

Finally, I have solved the problem of the depth camera not working on my new Mac and having to send data to a second computer!

I have dispensed with the Netgear Nighthawk (thank god!) and don't need to connect my two Macs at all! I simply connect the Orbbec and webcam to my old Mac and run a duplicate Ableton project on it to trigger effects etc. The sound is only sent to Izzy.

The audio on the new Mac plays out to the PA with live guitar etc. I don't need to send sound to Izzy at the same time because Izzy is now controlled by the old Mac. The only issue is that the live guitar can't be used to trigger effects on the old Mac (which runs the main Izzy file that outputs to the main projector). The new Mac outputs a simple (non-interactive) Izzy file to a separate projector.

All I have to do is press the same key on both Macs at the same time to start the show and proceed through all the scenes together. They then run more or less in sync!

It's taken me about 3 months to reach this position!

I have one question, though: I can't understand why the Orbbec's orientation is the opposite of the webcam's. That is, the dancer's right hand is to screen right with the depth camera (correct) but to screen left with the webcam??

Best wishes, David

-

@vidasonik said:

I have one question, though: I can't understand why the Orbbec's orientation is the opposite of the webcam's. That is, the dancer's right hand is to screen right with the depth camera (correct) but to screen left with the webcam??

Maybe you enabled the 'mirror' input on the OpenNI Tracker actor to flip the depth image?

-

@woland Actually, no, I hadn't. But now that I have mirror selected, it matches the webcam!!

Another question: I am using an outline of the body tracked by the depth camera with the DIFFERENCE actor, but when I select COLOUR I only get a red-outlined figure, even though I change the hue offset with the HSL Adjust actor. Any idea why?

-

@vidasonik said:

Another question: I am using an outline of the body tracked by the depth camera with the DIFFERENCE actor, but when I select COLOUR I only get a red-outlined figure, even though I change the hue offset with the HSL Adjust actor. Any idea why?

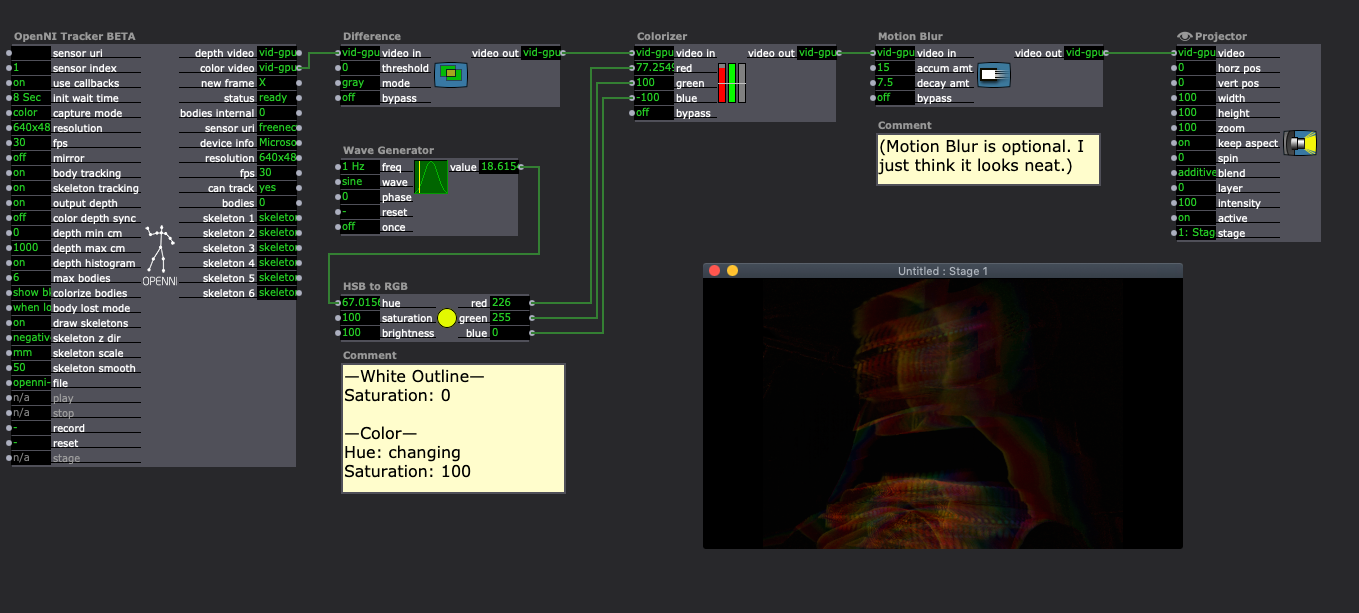

Try this instead

-

@woland wow - thanks so much! Looks good - Can't wait to try

-

@woland For some reason I can't connect to the colour video node (it is faded in grey and says n/a) - only the depth video is accessible?

-

@vidasonik

I was using a Kinect. I think an Orbbec would use the Video In Watcher and Live Capture for RGB video.

Also, I misunderstood. I thought you were trying to use the color video output of the OpenNI Tracker actor.

You can just disconnect the link from 'color video' and link 'depth video' to the difference actor instead.

-

@woland Thanks again! I've used your example and changed my User Actor accordingly. Weirdly, though, I only get colour when I set DIFFERENCE to GREY! When the DIFFERENCE actor is set to COLOUR I just get RED again, as before. But thanks, I've got there in the end. And I love the blur effect...

-

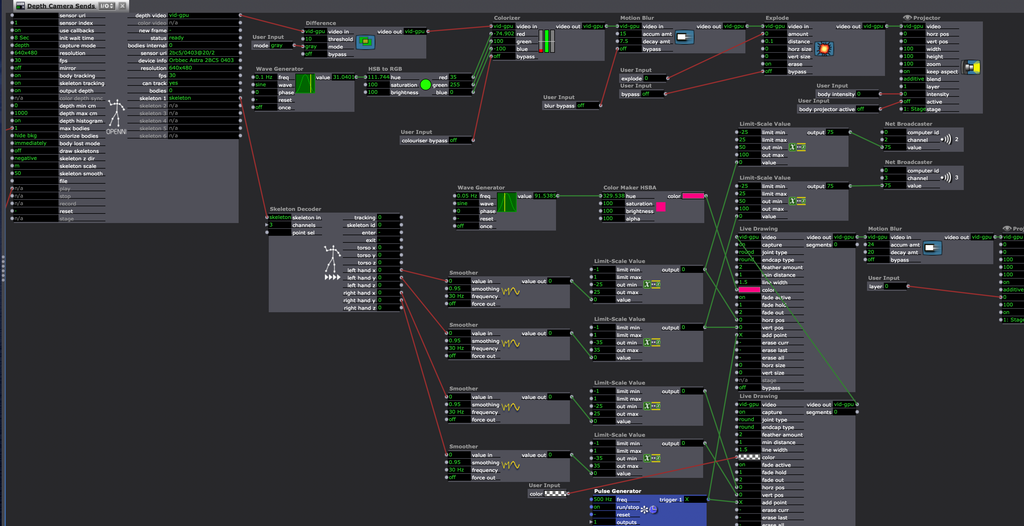

@woland Hi! I'm nearly there with the depth camera now, thanks to all your help. Just fine tuning. One issue that is puzzling me is the distance between the tracked hand and the start of the coloured line. It would be great if the line emanated from the hand immediately rather than there being a delay or gap. I've checked all the settings and can't find where this distance might be adjusted? No big deal anyway. I attach the user actor patch in case you can see anywhere I may have overlooked. Thanks as ever, David

-

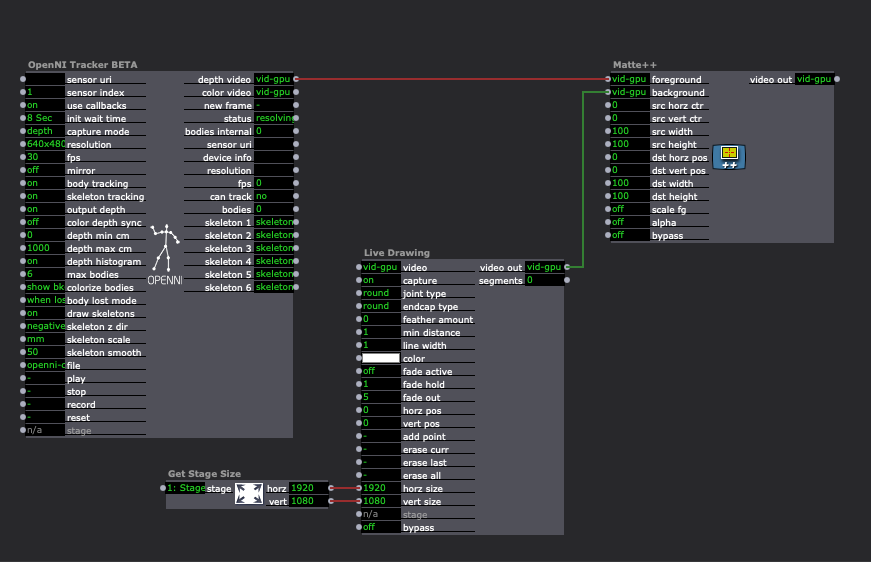

It's likely the difference in aspect ratios and resolutions; at least, that's what I've had to solve before in similar situations. The depth camera has a 4:3 aspect ratio and a different resolution from the output of the Live Drawing actor (which is 16:9 and uses the default video resolution set in the Video tab of Isadora Preferences, unless the Live Drawing actor is given a specific resolution using its 'horz/vert size' inputs).

I think using a Matte++ actor with its 'scale fg' input set to 'off', and feeding it a background the size of the stage (i.e. the output of the Live Drawing actor scaled up to the stage size), can reconcile the resolution difference by putting both images in the same 16:9 frame. However, the OpenNI depth video will still be 4:3, so this doesn't solve the aspect ratio difference.

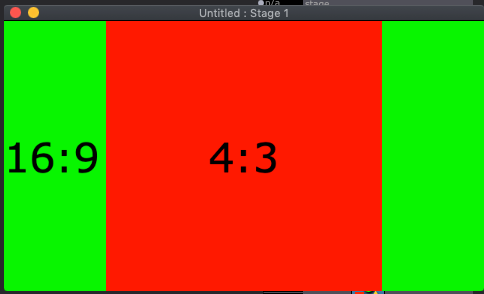

Even after doing that you may need to scale the values sent to the Live Drawing actor by a certain amount to get the drawing point to line up with the tracked body part. Below is a simple representation of the problem. The depth camera's resolution is represented by the red box and the Live Drawing actor's output is represented by the green rectangle (which goes behind the red square). As you can see, the camera will only ever see you moving within the red box, but the Live Drawing actor can draw all the way out into the green areas.

You could solve this by zooming in on the OpenNI depth video so that it fills the screen, but you will lose part of the depth image if you do that.
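If you zoom the depth image until it fills the 16:9 frame, the tracked coordinates need a matching remap, and part of the camera's view falls off the top and bottom. Here is a Python sketch of that arithmetic, assuming the tracker's x/y values have already been normalized to 0..1 across the camera frame (the OpenNI Tracker's actual output range may differ), a 4:3 camera and a 16:9 stage. With those ratios only the middle 75% of the camera's height stays visible, and points outside it return None.

```python
from typing import Optional


def fill_stage(x_cam: float, y_cam: float,
               cam_aspect: float = 4 / 3,
               stage_aspect: float = 16 / 9) -> Optional[tuple[float, float]]:
    """Map normalized (0..1) camera coordinates to normalized stage coordinates
    when the camera image is zoomed until it covers the whole stage.
    Returns None for points that land in the cropped-off part of the image."""
    if cam_aspect <= stage_aspect:
        # Image fills the width; only this fraction of its height stays visible.
        visible = cam_aspect / stage_aspect  # 0.75 for 4:3 in 16:9
        x, y = x_cam, (y_cam - (1.0 - visible) / 2.0) / visible
    else:
        # Image fills the height; only this fraction of its width stays visible.
        visible = stage_aspect / cam_aspect
        x, y = (x_cam - (1.0 - visible) / 2.0) / visible, y_cam
    if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
        return None  # tracked point is outside the visible (cropped) area
    return x, y


if __name__ == "__main__":
    for point in [(0.5, 0.05), (0.5, 0.2), (0.5, 0.5)]:
        print(point, "->", fill_stage(*point))
```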

You could also solve it by scaling the values so that the live drawing is limited to the area of the red box.
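Scaling the values so the drawing stays inside the red box is a simple linear remap. A minimal Python sketch, under the same assumptions as before (tracker x/y normalized to 0..1 across the camera frame, which may not match the OpenNI Tracker's real output range) and with the 4:3 image centred at full height in a 16:9 stage: x is squeezed into the middle 75% of the width, leaving a 12.5% margin on each side, while y passes through unchanged.

```python
def fit_to_stage(x_cam: float, y_cam: float,
                 cam_aspect: float = 4 / 3,
                 stage_aspect: float = 16 / 9) -> tuple[float, float]:
    """Map normalized (0..1) camera coordinates to normalized stage coordinates
    when the whole camera image is shown centred inside the stage
    (the red box inside the green rectangle)."""
    if cam_aspect <= stage_aspect:
        # Camera is narrower than the stage: full height, empty bands left and right.
        width_fraction = cam_aspect / stage_aspect  # 0.75 for 4:3 in 16:9
        margin = (1.0 - width_fraction) / 2.0        # 0.125 on each side
        return margin + x_cam * width_fraction, y_cam
    # Camera is wider than the stage: full width, empty bands top and bottom.
    height_fraction = stage_aspect / cam_aspect
    margin = (1.0 - height_fraction) / 2.0
    return x_cam, margin + y_cam * height_fraction


if __name__ == "__main__":
    for point in [(0.0, 0.5), (0.5, 0.5), (1.0, 0.5)]:
        print(point, "->", fit_to_stage(*point))
```

If the Live Drawing actor expects a different coordinate range than 0..1, one more multiply and offset on the result converts it.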

A third way is not to solve it and just to accept the fact that the live drawing will be further off the body part location the further from the center of the camera's vision you move.

There could be other factors and solutions that I am not remembering right now but hopefully the information I provided is useful.