Alpha Mask with Live Video

-

Ok so I am fairly new to Izzy but so far I am loving it. I have an idea that I have been trying to accomplish and I feel that I am close but just not getting the results I want.

What I am trying to do is use live video of a person (a dancer) as an alpha mask so that the silhouette of the dancer is translucent and the background is opaque (black preferably). Then I want to play content through the mask so that there is video content playing in the form of the live dancer.

I have played with the threshold and alpha mask actors, but because the light levels are uneven and the background is not a solid one-color wall, I am unable to get a clear form of the dancer.

So is there a way to do what I am thinking about? Or does it need to be more involved (tracking with Kinect or something)?

Thanks, guys!

Drew -

I am writing this from my phone so I can't give you a fixed solution, but try using the desaturate actor and the contrast adjust actor; maybe this helps to get a consistent mask. And yes, it would be very easy with a Kinect.

Best, Michel
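To make the desaturate-then-contrast idea a bit more concrete, here is a rough sketch in plain Python of what those two steps do to pixel values. This is not how Isadora's actors are implemented; the function names, the Rec. 601 luma weights, and the `gain` and `pivot` defaults are all assumptions chosen for illustration.

```python
# Hypothetical helpers: names and default values are illustrative only,
# they are not taken from Isadora.

def desaturate(pixel):
    """Convert an (r, g, b) pixel with 0-255 channels to one luminance value."""
    r, g, b = pixel
    # Rec. 601 luma weights, a common choice for greyscale conversion.
    return 0.299 * r + 0.587 * g + 0.114 * b


def adjust_contrast(luma, gain=3.0, pivot=128.0):
    """Push values away from the pivot, then clamp to the 0-255 range."""
    stretched = (luma - pivot) * gain + pivot
    return max(0.0, min(255.0, stretched))


def frame_to_mask(frame, gain=3.0, pivot=128.0):
    """Turn a frame (rows of RGB tuples) into a high-contrast greyscale mask."""
    return [[adjust_contrast(desaturate(p), gain, pivot) for p in row]
            for row in frame]


# A tiny 2x2 "frame": bright, dark, mid-grey, and white pixels.
frame = [
    [(200, 190, 180), (40, 50, 60)],
    [(130, 130, 130), (255, 255, 255)],
]
mask = frame_to_mask(frame)
```

With a high gain, bright pixels saturate to white and dark pixels to black, while values near the pivot stay grey. That is why this step alone cannot separate a dancer from other bright spots in the room: it only looks at luminance.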

-

Well, I'm no expert, but little is "easy" with a Kinect and live video feeds in my experience. It is doable, but I had to use two MacBook Pro computers, Syphon, etc. I fed the Kinect image into Isadora and processed it using color keying to combine the Kinect's "blobby" take on the human body with other footage. Go for it!

John -

I tried the contrast and desaturate actors and it helped a bit more, but since the system of actors still revolves around luminance I am unable to key out other bright spots. I am currently attempting it in my apartment right next to the window, so I imagine that once I'm in an environment with more controlled color and luminance, that will help. I'm essentially looking for the magic lasso tool from Photoshop for live video.

http://notabenevisual.com/?p=443

The link above is to a video of the concept I am trying to achieve. My thinking is that they somehow keyed out the bodies, made a mask from them, and projected their silhouettes directly onto the wall. -

I would go for lighting the screen with IR and using an IR-capable camera, which will see the moving body as a shadow. You may need to filter the IR from the projector.

-

This thread has a lot of information that might help you:

http://troikatronix.com/community/#/discussion/265/tracking-two-dancers

-

THIS YouTube video that Mark posted about the technology used in 16 revolutions can help you as well.

Best,

Michel -

Hi there,

I'm in the same boat, except I am using a Kinect + Isadora. I'm trying to create an effect similar to this one created by Graham:

http://www.youtube.com/watch?v=Spd77d6yZ-s&list=UUhr7aQm3W7xQmn4YblveryA&index=21

Could someone give me a lead please? -

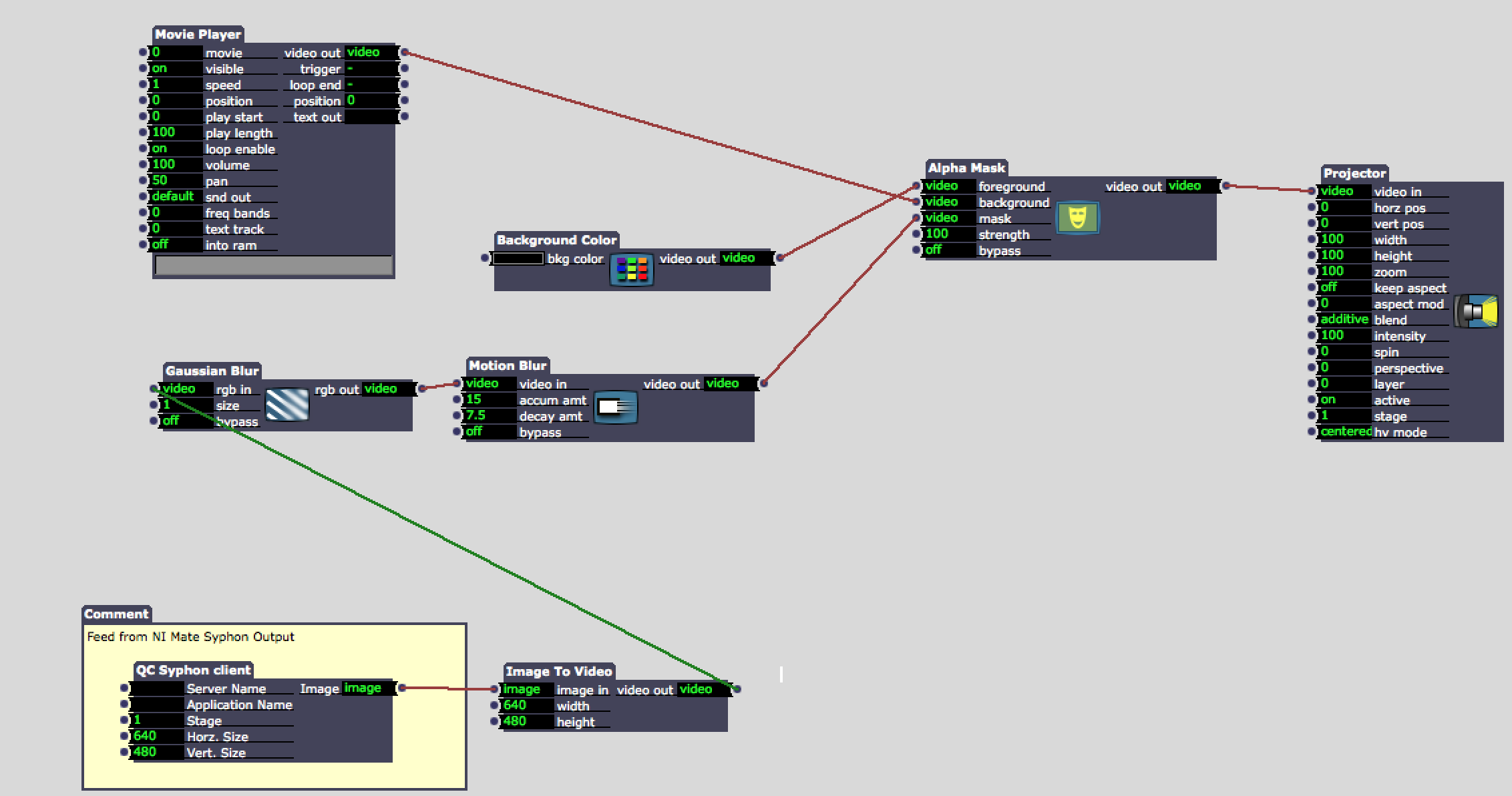

I never saved that patch; it was a quick demo I did for some ex-students, but it was something along these lines... (open in a new tab, it's quite big)

-

@Skulpture I noticed in your video that the mask has a nicely defined, smooth edge compared to what raw data from the Kinect will give you. Do Gaussian Blur and Motion Blur do that, or NI mate? What happens without the blur filters?

Thanks.--8 -

Yeah it nearly always needs some blur.

I used to knock old CCTV cameras slightly out of focus to get similar results rather than adding blur in the software. But with ever faster machines I started adding it in the software. Normally Gaussian Blur on 1 or 2 works nicely. -
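For anyone wondering why a small blur helps, here is a minimal sketch in plain Python. It softens a hard-edged mask with a 1-2-1 kernel (a very small approximation of a Gaussian, not Isadora's Gaussian Blur actor) and then plays content through the mask by multiplying. The function names and the kernel are assumptions made for illustration.

```python
# Hypothetical helpers: a tiny stand-in for a blur followed by an alpha mask.

def blur_row(values):
    """Blur one row with a 1-2-1 kernel, repeating the edge pixels."""
    n = len(values)
    out = []
    for i in range(n):
        left = values[max(i - 1, 0)]
        right = values[min(i + 1, n - 1)]
        out.append((left + 2 * values[i] + right) / 4)
    return out


def blur_mask(mask):
    """Apply the 1-2-1 blur horizontally, then vertically."""
    rows = [blur_row(r) for r in mask]
    cols = [blur_row(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


def composite(content, mask):
    """Show content where the mask is white (255) and black where it is 0."""
    return [[c * m / 255 for c, m in zip(crow, mrow)]
            for crow, mrow in zip(content, mask)]


hard_mask = [[0, 0, 255, 255]]        # hard edge between background and body
content = [[200, 200, 200, 200]]      # flat grey "video" content

soft_mask = blur_mask(hard_mask)      # [[0.0, 63.75, 191.25, 255.0]]
result = composite(content, soft_mask)
```

The hard mask jumps straight from 0 to 255, so any noise along the body outline shows up as a jagged edge. After the blur the edge ramps through intermediate values, and the content fades in across a couple of pixels instead of switching on abruptly.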

Hi Guys,

Thanks to @Skulpture I was able to create this new interactive piece : Indicible Camouflage

I'm presenting it next week at a jazz event, and I have realised that in NI mate only the active user's alpha is active.

Is there a way to capture all alphas no matter how many people stand in front of the device? Thank you all for your great knowledge!

David

-

Glad I've helped you, David. Be sure to take some pictures and I will put them on my blog.

:-) -

Thanx @Skulpture I will do so !!

:-) -

Hello Skulpture, I tried your patch, but instead of the Syphon actors I used the Kinect actor and the Kinect. My goal is to project video onto a dancer. Do you think that's possible with the Kinect? Where can I find the QC Syphon actors? I am sending you a picture of my patch; just have a look at the right side.

Thank you for all your work.

Best wishes,

Bruno -

It looks like you will need a threshold actor. This will boost the white and make the black/grey more black (if that makes sense?). Put it directly after the output from the Kinect actor. -
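As a rough illustration of what a threshold does to a greyscale image such as a Kinect depth or user image, here is a minimal sketch in plain Python. The `threshold` function and its `cutoff` default are hypothetical; Isadora's actor may expose different parameters and behave differently.

```python
# Hypothetical helper: a basic binary threshold, not Isadora's implementation.

def threshold(gray, cutoff=128):
    """Map every value at or above the cutoff to white (255), the rest to black (0)."""
    return [[255 if v >= cutoff else 0 for v in row] for row in gray]


# A small greyscale image: a washed-out body (170-210) on a murky background (30-90).
gray = [
    [30, 60, 170, 210],
    [90, 40, 200, 180],
]
binary = threshold(gray)
```

Everything that was light grey becomes pure white and everything darker becomes pure black, which gives the alpha mask a clean on/off shape to work with.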

Hello everybody.

I am having some trouble using the Kinect with my new MacBook Pro. I have an NI mate license, but it crashes at launch, as does Synapse. I plugged the Kinect into a USB 3 port on my Mac and I think that is the problem, but I don't have any USB 2 ports. Do you know a solution for that? Thanks a lot -

I use a Kinect on a new MacBook with only USB 3 ports and have no problems.

-

Me too, no problems.

-

It won't be the USB ports. It will be software/driver related.