Body Mapping with Isadora

-

Hi,

I've been trying to use Isadora for a body mapping project, where the movements of a choreographer will be captured via a Kinect, with animated content projected onto her (moving) body in real time. The idea is to apply an alpha mask to the live feed from the Kinect, add some effects to the mask, and project it over the moving body via MadMapper (or Isadora itself).

Following several tutorials and pieces of advice, I was able to create a scene that should (logically?) work, but for some reason I'm not getting the result I wanted from it.

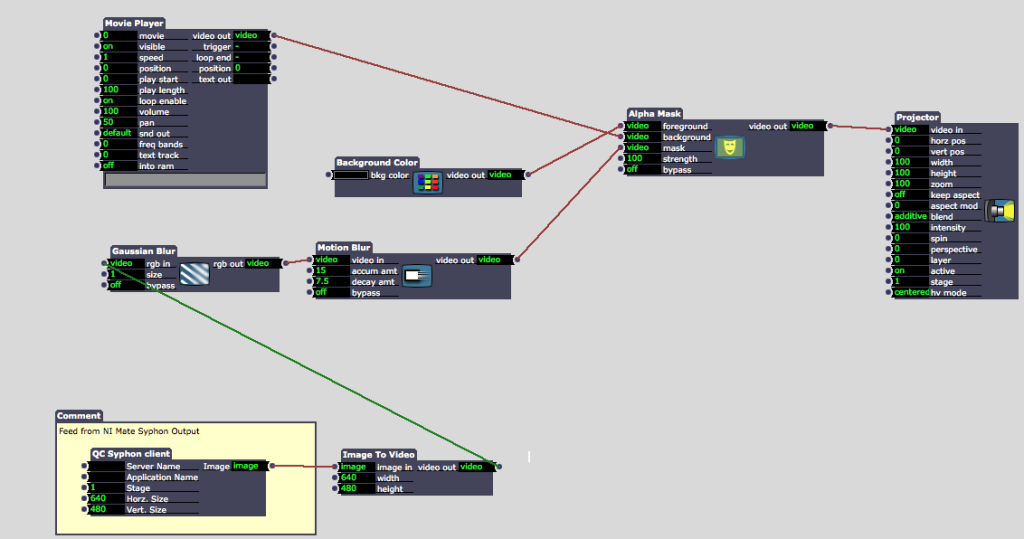

Above is a screenshot of the scene developed so far.

As you can see, I wasn't able to get an alpha mask of the subject in the Stage preview, just a blurred, full-frame capture from the Kinect.

Any advice on how to proceed?

Thanks in advance

-

Hi @The-Symbiosis -

Sounds like you just want the ghost image of the performer's body, yes?

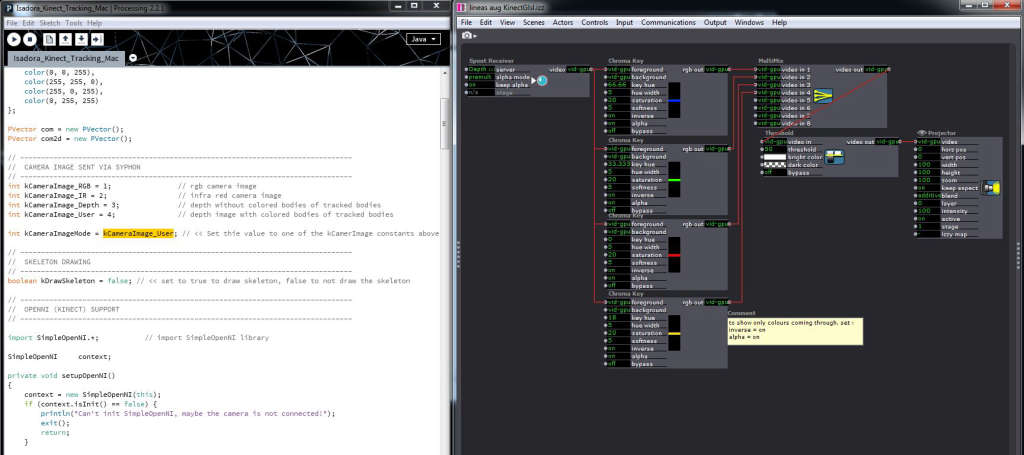

In the Processing file (if it's the one from the tutorials) there is a set of variables that lets you define which image you pass through from the Kinect sensor: the straight RGB camera, the IR camera, depth imagery, or depth imagery with the coloured bodies on top. The variable is kCameraImageMode; I have it set in my Processing patch to send the outline of the figure. Then I turned the skeleton drawing off. These are the lines you want for that:

// --------------------------------------------------------------------------------

// CAMERA IMAGE SENT VIA SYPHON

// --------------------------------------------------------------------------------

int kCameraImage_RGB = 1; // rgb camera image

int kCameraImage_IR = 2; // infra red camera image

int kCameraImage_Depth = 3; // depth without colored bodies of tracked bodies

int kCameraImage_User = 4; // depth image with colored bodies of tracked bodies

int kCameraImageMode = kCameraImage_User; // << Set this value to one of the kCameraImage constants above

// --------------------------------------------------------------------------------

// SKELETON DRAWING

// --------------------------------------------------------------------------------

boolean kDrawSkeleton = false; // << set to true to draw skeleton, false to not draw the skeleton

Then, in Isadora, use chroma keying to select the colours the Kinect uses to show people. This scene is from the tutorial @mark gave at the first Werkstatt:

... the Threshold actor tweaks the sensitivity and strips out the colour information. That should get you started.
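The chroma key step can be pictured as building a black-and-white mask from colour distance. The Java sketch below only illustrates that idea; it is not how Isadora's Chroma Key or Threshold actors are implemented. It assumes packed 0xRRGGBB pixels (any alpha byte is ignored) and a hypothetical `tolerance` measured as plain RGB distance.

```java
// Illustrative colour key producing a binary mask (not Isadora's implementation).
// Pixels are packed 0xRRGGBB ints; the top (alpha) byte, if present, is ignored.
class ColourKeyMask {
    static final int WHITE = 0xFFFFFF;
    static final int BLACK = 0x000000;

    // Squared Euclidean distance between two packed RGB colours.
    static int distSq(int a, int b) {
        int dr = ((a >> 16) & 0xFF) - ((b >> 16) & 0xFF);
        int dg = ((a >> 8) & 0xFF) - ((b >> 8) & 0xFF);
        int db = (a & 0xFF) - (b & 0xFF);
        return dr * dr + dg * dg + db * db;
    }

    // White where a pixel is within `tolerance` of any key colour, black elsewhere.
    static int[] key(int[] pixels, int[] keyColours, int tolerance) {
        int[] mask = new int[pixels.length];
        int tolSq = tolerance * tolerance;
        for (int i = 0; i < pixels.length; i++) {
            mask[i] = BLACK;
            for (int k : keyColours) {
                if (distSq(pixels[i], k) <= tolSq) {
                    mask[i] = WHITE;
                    break;
                }
            }
        }
        return mask;
    }
}
```

Passing several key colours covers the case where the Kinect draws different tracked people in different colours.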

-

In Processing, when the depth image is drawn, it's quite easy to set a threshold range and colour every pixel within that range white and everything else black. This image can be sent to Isadora directly from Processing via Syphon, and there is your live mask. You can also export the sketch as a small app to run in the background.
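A minimal Java sketch of that depth-band threshold, assuming the depth frame arrives as an int array of millimetre values with 0 meaning "no reading"; the names `nearMm` and `farMm` are illustrative, not from the tracker code. In a Processing sketch the result could be copied into a `PImage`'s `pixels[]` and followed by `updatePixels()` before the image is sent out over Syphon.

```java
// Sketch: binary mask from a depth frame.
// Assumes depth values in millimetres, with 0 meaning "no reading".
class DepthThresholdMask {
    static int[] mask(int[] depthMm, int nearMm, int farMm) {
        int[] out = new int[depthMm.length];
        for (int i = 0; i < depthMm.length; i++) {
            int d = depthMm[i];
            // Keep only pixels inside the depth band; missing data (0) stays black.
            boolean inside = d > 0 && d >= nearMm && d <= farMm;
            out[i] = inside ? 0xFFFFFFFF : 0xFF000000; // opaque white / opaque black (ARGB)
        }
        return out;
    }
}
```

Moving `nearMm` and `farMm` is what "tuning the mask for things happening at different depths" amounts to.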

You can use an OSC library for Processing to control the threshold value from Isadora, which lets you tune the mask live for things happening at different depths.

You can also send along other information the Kinect tracker gives you for free, like the blob centre X/Y, so your content stays centred behind your mask.

All of this is just a matter of using and configuring libraries, plus a small tweak to the Kinect tracker code.
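oscP5 is the library usually used for OSC in Processing, and it handles the encoding for you. The sketch below only shows what a single-float OSC 1.0 message looks like on the wire, which can help when debugging why Isadora isn't receiving a value. The address `/mask/threshold` is a hypothetical example; use whatever address your Isadora patch listens for.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Sketch of an OSC 1.0 message carrying one float32 argument.
class OscFloatMessage {
    // OSC strings are ASCII, null-terminated, then zero-padded to a multiple of 4 bytes.
    static void writePaddedString(ByteArrayOutputStream out, String s) {
        byte[] bytes = s.getBytes(StandardCharsets.US_ASCII);
        out.write(bytes, 0, bytes.length);
        int pad = 4 - (bytes.length % 4); // always at least one null terminator
        for (int i = 0; i < pad; i++) {
            out.write(0);
        }
    }

    static byte[] encode(String address, float value) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writePaddedString(out, address);
        writePaddedString(out, ",f"); // type tag string: a single float
        byte[] f = ByteBuffer.allocate(4).putFloat(value).array(); // big-endian by default
        out.write(f, 0, f.length);
        return out.toByteArray();
    }
}
```

To send it, the bytes can be wrapped in a `java.net.DatagramPacket` addressed to the port Isadora is set to receive OSC on.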
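If the tracker's own blob data isn't handy, a simple stand-in for the blob centre is the centroid of the mask's white pixels. This Java sketch assumes a row-major boolean mask of known width; it is not the tracker's blob detection. Dividing the result by the frame width and height gives values from 0 to 1 that are convenient to send over OSC.

```java
// Sketch: centre of mass of the "on" pixels in a row-major binary mask.
// Returns {x, y} in pixel coordinates, or null if the mask is empty.
class MaskCentroid {
    static float[] centroid(boolean[] mask, int width) {
        long sumX = 0, sumY = 0, count = 0;
        for (int i = 0; i < mask.length; i++) {
            if (mask[i]) {
                sumX += i % width; // column
                sumY += i / width; // row
                count++;
            }
        }
        if (count == 0) {
            return null; // nobody inside the depth band
        }
        return new float[] { (float) sumX / count, (float) sumY / count };
    }
}
```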

-

Hi @Plastictaxi and @Fubbi,

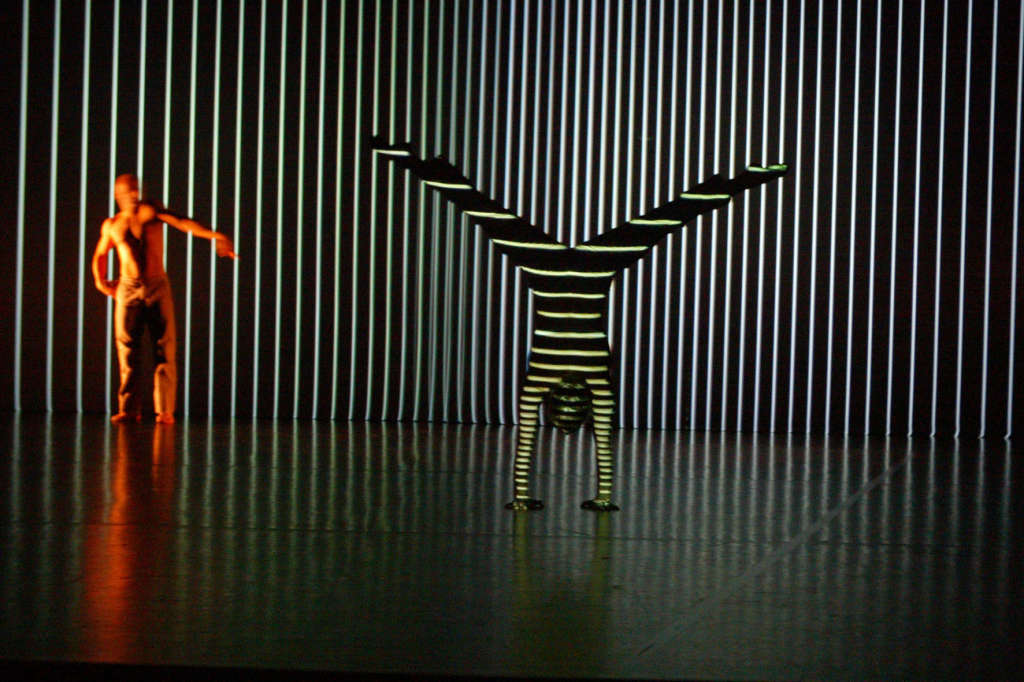

Many thanks for your input! What I'm trying to achieve is something along the lines of Apparition, by Klaus Obermaier & Ars Electronica Futurelab (see the image above).

I'm not very proficient in Processing (hence using the tutorial file...), but from what I've been reading and watching so far, it may be possible to get the result I'm looking for within Isadora alone.

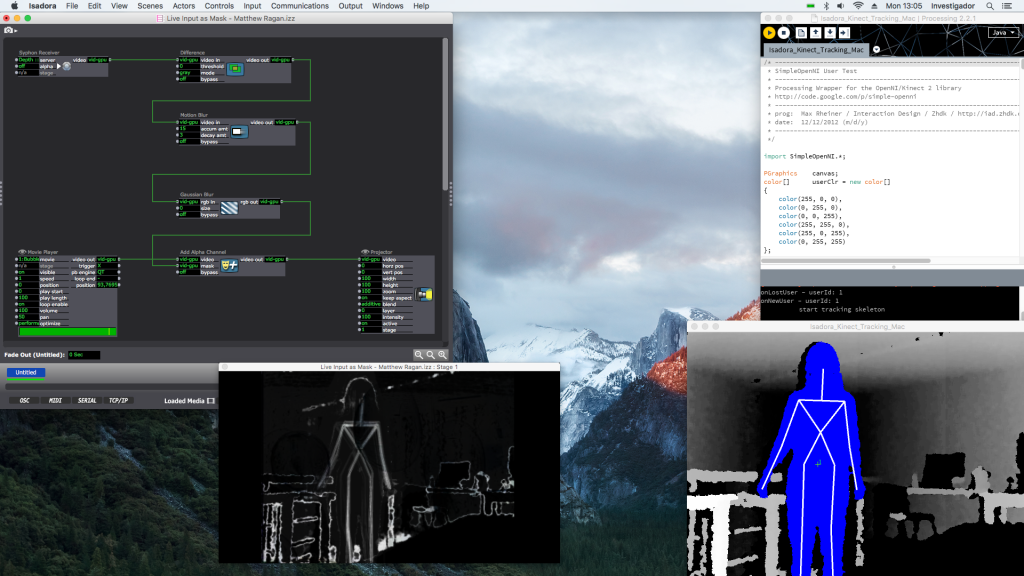

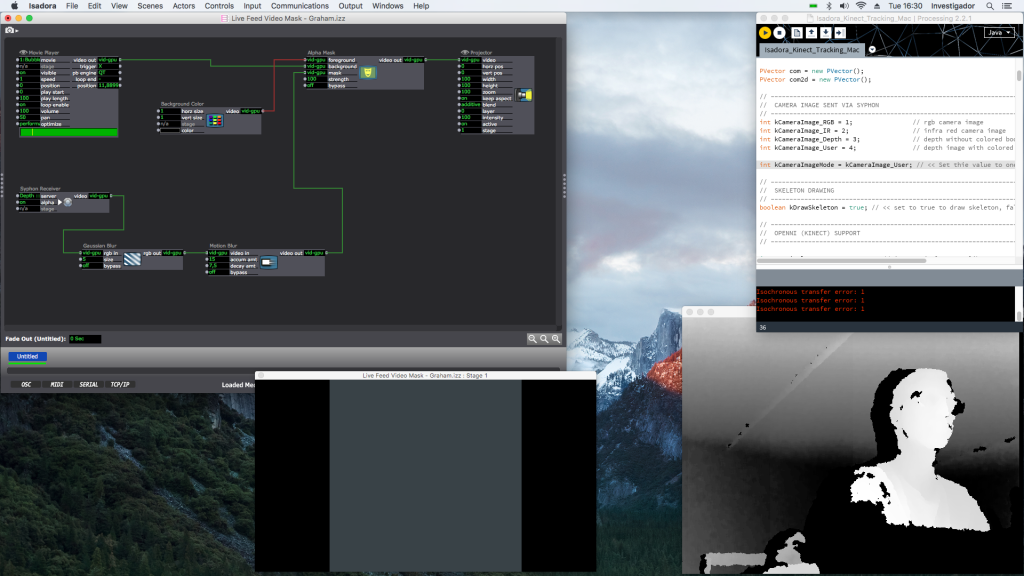

Graham Thorne has a tutorial, Isadora & Live feed Video Mask, which resembles what I'm looking for.

I've tried to rebuild Graham's scene with the input changed to Syphon (Kinect), but couldn't get a proper result. He told me I need to take a depth layer, use it as a mask, and then layer video/media over it, but so far I've got stuck in the process...

Above are images of Graham's scene, followed by a screenshot of the scene I've created.

Do you think I can get there by following this path? Or should I go deeper and work through Processing, as you mentioned earlier?

Thanks for your time, truly appreciated
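Graham's suggestion (use a depth layer as a mask, then layer media over it) comes down to multiplying the content by the mask, pixel by pixel. A Java sketch of that compositing step, assuming both images are the same size and packed 0xRRGGBB, and that the mask's red channel can serve as alpha (true for a greyscale mask):

```java
// Sketch: show content only where the mask is bright, over a black background.
// A greyscale mask acts as a soft alpha: its red channel (0-255) scales the content.
class MaskedLayer {
    static int[] apply(int[] content, int[] mask) {
        int[] out = new int[content.length];
        for (int i = 0; i < content.length; i++) {
            int a = (mask[i] >> 16) & 0xFF; // mask brightness used as alpha
            int r = ((content[i] >> 16) & 0xFF) * a / 255;
            int g = ((content[i] >> 8) & 0xFF) * a / 255;
            int b = (content[i] & 0xFF) * a / 255;
            out[i] = (r << 16) | (g << 8) | b;
        }
        return out;
    }
}
```

Blurring the mask before this step softens the edges, which can make small tracking errors less visible on the body.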

-

A related but slightly off-topic question: how do you deal with the parallax between the Kinect lens and the projector lens?

I've done all the rest many times just using NI mate (which now finally has buy-to-own licenses).

-

Hi @maximortal,

I haven't got that far yet, and to be honest I haven't thought about the parallax between the two lenses.

I'm thinking of using MadMapper for the projection, which could compensate for the difference between the lenses by warping, but I haven't tried it yet.

You mention you've done the rest many times; could you say more about that, please?

-

MadMapper has a beautiful feature: calibration using a Canon camera.

By the way, if you want to use Isadora, here are my setup and my thoughts:

- The Kinect's sensing area is not very big, even on the Kinect One: the effectively usable range is about 5 m from the sensor and no more, and it's not very wide either, around 4 m at a distance of 5 m.

- I place the sensor on the floor and the projector immediately above the Kinect (on a self-made stand); this reduces parallax as much as possible.

- I capture the performer's shape with NI mate and send it to Isadora via Spout/Syphon.

- There is always a delay: try to avoid very rapid movements, or introduce some video effect to mask it.

- Parallax is not linear in this scenario: it follows polar coordinates, so it behaves like a combination of parabolic and linear bias. In Isadora's mapping I create a simple Bezier surface (three sectors wide is enough), then I manually adjust the incoming images to fit the body. Just place the performer inside each sector of the Bezier surface and adjust the corners.

- The Kinect is not a miracle. To reach perfect body mapping you need better equipment or a different setup. There are some applications made with openFrameworks that do lens calibration like MadMapper, but I've never used them.

- Join me in asking Mark for a lens calibration feature in Isadora ;-)
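The Bezier surface described in the list above is a warp driven by control points. As a rough illustration (not Isadora's internal implementation), a single bicubic Bezier patch maps a (u, v) position in the source image to an output position through a 4 x 4 grid of control points using Bernstein weights; several patches side by side would give the "sectors". Dragging a control point pulls the nearby part of the image with it.

```java
// Sketch: evaluate a bicubic Bezier patch, the kind of surface used to warp
// an image by dragging control points. Not Isadora's internal implementation.
class BezierPatch {
    // Cubic Bernstein basis B_i(t), i = 0..3.
    static double bernstein(int i, double t) {
        double s = 1.0 - t;
        switch (i) {
            case 0: return s * s * s;
            case 1: return 3 * t * s * s;
            case 2: return 3 * t * t * s;
            default: return t * t * t;
        }
    }

    // ctrl[row][col] = {x, y}; u runs along columns, v along rows, both in [0, 1].
    static double[] evaluate(double[][][] ctrl, double u, double v) {
        double x = 0, y = 0;
        for (int row = 0; row < 4; row++) {
            for (int col = 0; col < 4; col++) {
                double w = bernstein(row, v) * bernstein(col, u);
                x += w * ctrl[row][col][0];
                y += w * ctrl[row][col][1];
            }
        }
        return new double[] { x, y };
    }

    // Undistorted starting grid: control points evenly spaced over the output.
    static double[][][] identity(double width, double height) {
        double[][][] c = new double[4][4][2];
        for (int row = 0; row < 4; row++) {
            for (int col = 0; col < 4; col++) {
                c[row][col][0] = width * col / 3.0;
                c[row][col][1] = height * row / 3.0;
            }
        }
        return c;
    }
}
```

Starting from `identity(...)` leaves the image unchanged (evenly spaced control points reproduce u and v exactly), so every adjustment made while the performer stands in a sector is a deliberate correction.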

-

How funny, I have just spent the afternoon working with Desiree, the woman doing the handstand in that Klaus Obermaier piece. I saw it live and it was just magical, and I think that it would be very difficult to reproduce the same quality with the Kinect.

I can tell you from snooping about backstage, and from a brief conversation with Obermaier at the time, that the tracking in 'Apparition' was done using a very complex IR system, with a lot of IR emitters and, I believe, several cameras. As @Maximortal says, "Kinect is not a miracle. To reach a perfect body mapping you need better equipment or different setups."

But if you limit your ambition, keep the projection simple, keep the movement slow, try to eliminate the parallax issues, and add a lot of patience, you can get something reasonably convincing. I do use this from time to time, and like Maximortal I use the output via NI mate rather than messing about with Processing.

-

@the-symbiosis said:

I've been trying to use Isadora for a body mapping project, where the movements of a choreographer will be captured via a Kinect, with animated content projected onto her (moving) body in real time. The idea is to apply an alpha mask to the live feed from the Kinect, add some effects to the mask, and project it over the moving body via MadMapper (or Isadora itself).

Keep your eyes peeled for Isadora 3 my friend ;)

-

@mark_m said:

How funny, I have just spent the afternoon working with Desiree, the woman doing the handstand in that Klaus Obermaier piece. I saw it live and it was just magical, and I think that it would be very difficult to reproduce the same quality with the Kinect.

I can tell you from snooping about backstage, and from a brief conversation with Obermaier at the time,

Stop being so cool! You're making the rest of us look bad!

Seriously though, that's amazing. I'm incredibly envious.

-

@woland

I can't help it: I'm just naturally cool

I see that the guys who did the body mapping for Apparition are based in Berlin... maybe you can make friends with Dr. Marcus Doering...

http://www.exile.at/apparition...

Here's a little more info about the system:

https://pmd-art.de/en/about/

-

Hey guys,

Wow, thanks for all the answers.

@maximortal, cheers for the in-depth, step-by-step procedure; I'll definitely try it out!

As for the Kinect's tracking limitations (and the lag that comes with it), we're well aware of them. We do have a Vicon system available at our research centre, but we can only get skeleton information from it (unless someone knows a tweak to get some extra information out of it? ;)

The idea for this installation is to capture a performer in the Vicon room and send their coordinates via OSC to the stage room, where another performer will mimic the choreography while being captured by the Kinect. Now, if anyone knows whether I can do it directly with the Vicon system, that would be another story! :)

-

Hmm, regarding Isadora 3, I can't open the link... :/ Could you post the image (I think it's one) here?

-


@mark_m Truly funny, as Desiree was a dancer in my Aquarium piece and in other pieces I supported technically. I haven't seen her in years. Say hello from Peter/Posthof/Vienna! I really mean it.

-

@mark_m said:

very difficult to reproduce the same quality with the Kinect.

I agree. I was there at the premiere of Apparition at Ars Electronica in 2004, and I know Zach Lieberman (of openFrameworks fame), who implemented the system, so I know quite a bit about what went on technically to make this piece happen.

The biggest problem is that the Kinect's range is so limited. It simply can't get a good image of an entire stage. You really need to switch to an infrared-based system and an infrared camera, which is what they used in Apparition (and what Troika Ranch used in 16 [R]evolutions, and Chunky Move in Glow). Then you have the potential to see a much larger area, albeit without skeleton tracking.
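To put rough numbers on the range problem, here is a worked example using only maximortal's figures from earlier in the thread (about 4 m of usable width at about 5 m). For a sensor with effective horizontal field of view $\theta$ at distance $d$, the covered width $w$ follows from simple trigonometry:

$$
w = 2d\tan\frac{\theta}{2}
\quad\Longrightarrow\quad
\theta = 2\arctan\frac{w}{2d} = 2\arctan\frac{4}{2\cdot 5} = 2\arctan 0.4 \approx 43.6^\circ
$$

Keeping that effective angle, covering a hypothetical 10 m wide stage would require

$$
d = \frac{w}{2\tan(\theta/2)} = \frac{10}{2\cdot 0.4} = 12.5\ \text{m},
$$

far beyond the roughly 5 m of usable depth.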

Another issue is the delay of the camera, the delay of the processing system, and the delay of the projector. The camera is always the worst, but in Apparition they struggled with the fact that no projector they could get their hands on at that time had less than a two-frame (66 ms) delay. This was critical for sections like the one you pictured above (vertical vs. horizontal stripes), because fast movements would immediately show the wrong orientation of stripe (i.e., the horizontal stripes would appear on the background instead of on the dancer). My friend Frieder Weiss, who did all of the technology work on Glow, wrote his own camera drivers to get the delay down to the absolute minimum for that piece: much less delay than any other system I've seen. (No, they're not publicly available.)

(As a total side note: because the two performers were from a group called DV8, which was world-renowned for its fast, extremely physical choreography, I was shocked that the movement in Apparition was relatively slow throughout. I felt fairly certain then (and still do) that this was not a choreographic choice but simply a response to the limitations of the delay.)
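As a back-of-the-envelope check on why the delay forces slower movement: the projected image trails the body by roughly speed times total latency. The Java sketch below only does that arithmetic; the 2 m/s limb speed used in the example is an assumed, illustrative figure, not a measurement. With just the projector's two frames at 30 fps (about 66.7 ms) and a 2 m/s movement, the image is already about 13 cm behind, before adding any camera or processing delay.

```java
// Sketch: how far a moving limb gets ahead of its projected image.
// All inputs are user-supplied estimates; nothing here is measured.
class LatencyLag {
    // Delay in milliseconds for a number of frames at a given frame rate.
    static double frameDelayMs(int frames, double fps) {
        return frames * 1000.0 / fps;
    }

    // Distance in metres covered at speedMps (m/s) during totalDelayMs.
    static double lagMetres(double speedMps, double totalDelayMs) {
        return speedMps * totalDelayMs / 1000.0;
    }
}
```

For example, `LatencyLag.lagMetres(2.0, LatencyLag.frameDelayMs(2, 30))` gives about 0.133 m; adding the camera and processing delays to the total only makes it worse.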

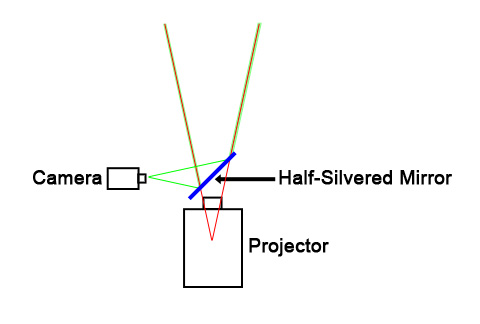

In addition, they had to pay something like 5000 EUR for a half-silvered mirror that allowed the focal point of the camera lens and the focal point of the projector to match as perfectly as possible, so that you wouldn't get shadows on the screen because of parallax problems.

Finally, this amusing fact: to create an infrared field that could evenly span the entire stage, they focused numerous infrared heating lights (like the ones you've seen in restaurants) on the rear-projection surface.

Apparently the temperature backstage was blazingly hot because of this; there was at least some fear they would melt the rear-projection surface as a result. ;-)
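A simplified model shows why matching the two optical centres matters. Assume an idealised pinhole projector at lateral position $0$ and a camera offset sideways by $b$, both facing along $z$, with the camera-to-projector mapping calibrated (e.g. warped by hand) on a plane at depth $z_0$; lens distortion and vertical offsets are ignored. A body point at lateral position $x$ and depth $z$ is seen along the camera ray, which crosses the calibration plane at $x_c$; the projector then fires along the ray through $x_c$, which reaches depth $z$ at $x_p$:

$$
x_c = b + (x - b)\,\frac{z_0}{z},
\qquad
x_p = x_c\,\frac{z}{z_0} = x + b\left(\frac{z}{z_0} - 1\right),
\qquad
e = x_p - x = b\,\frac{z - z_0}{z_0}
$$

With an assumed offset $b = 0.3$ m, calibration at $z_0 = 4$ m and the performer stepping forward to $z = 3$ m, $|e| = 0.3 \cdot \tfrac{1}{4} = 0.075$ m, so the image lands about 7.5 cm off the body. With the mirror, $b = 0$ and $e = 0$ at every depth, which is also why stacking the projector directly above the Kinect (small $b$) helps. Focal lengths do not appear in this model: with coincident optical centres, a difference in field of view is a fixed scaling that a single warp absorbs, while the offset $b$ is what makes the error depend on depth.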

Anyway, watch my tutorial on infrared tracking for techniques and tips on making use of that approach to tracking. It has its own issues and there's a learning curve to make it work, but I can verify that once you get the system down, it's very reliable.

Best Wishes,

Mark

-

@mark I never thought of using a half-silvered mirror; it's brilliant. But from what I understand, the focal distance of the camera and the projector must be the same to avoid problems with polar coordinates, or am I wrong?

-

Hi everyone, and many thanks for all your input, truly appreciated.

I should have mentioned this is a research project, more a case study regarding the bidirectional communication between systems than a performance itself.

The goal is for us to study and understand the possibilities (and “less possibilities”) of using our systems for expressive movement analysis in the performing arts, and the idea of body mapping was just a “small” extra we added to increase our enthusiasm for the experience.

I agree with what @mark_m mentioned: if we limit our ambition and keep the project simple, we’ll be able to get something reasonably convincing.

Also going to watch the tutorial on infrared tracking, thanks for that @mark.

This topic is getting quite interesting, I have to say. Please feel free to keep the discussion going, as new ideas may arise from it.

Regards to you all from sunny Portugal