Evaluating lux vs lumen to decide which projector is best

-

Hi, everyone!

I'm trying to find a simple way to estimate how many lux I need and to use that to make a cost-effective projector choice.

Just to review what lux means, let's imagine a projector with 1200 lumens and an aspect ratio of 4:3, projecting onto a 2 m x 1.5 m screen, so the total projected area is 3 m^2. One lux is equal to one lumen per square metre, so in this case 1200 / 3 = 400 lux. Lux is used as a measure of the intensity, as perceived by the human eye, of light that hits or passes through a surface (Wikipedia).
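The arithmetic above fits in a tiny helper. This is only a sketch: it assumes the projector's full rated flux lands evenly on the screen, which real projectors rarely manage.

```python
def average_illuminance_lux(lumens: float, width_m: float, height_m: float) -> float:
    """Average illuminance on the screen: luminous flux (lm) / projected area (m^2)."""
    area_m2 = width_m * height_m
    return lumens / area_m2


# Example from the post: 1200 lm on a 2 m x 1.5 m (4:3) screen, i.e. 3 m^2
print(average_illuminance_lux(1200, 2.0, 1.5))  # 400.0
```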

The projection is always in competition with the ambient light. Following the previous example, if we have a 400-lux projection in a room where the ambient light hits the wall with 600 lux, you'll basically see nothing.
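One crude way to see why the image washes out is to compare the white and black parts of the picture on the screen. The sketch below assumes ambient light lands equally on bright and dark areas and that the projector's own black level is zero unless given; it ignores the eye's adaptation entirely.

```python
import math


def on_screen_contrast(projector_lux: float, ambient_lux: float,
                       projector_black_lux: float = 0.0) -> float:
    """Ratio of white-area to black-area illuminance on the screen.

    Simplified model: ambient light adds to both white and black areas, and the
    screen reflects all of it the same way. The projector's black level is
    assumed to be 0 lux unless given.
    """
    white = projector_lux + ambient_lux
    black = projector_black_lux + ambient_lux
    if black == 0:
        return math.inf  # ideal projector in a perfectly dark room
    return white / black


# The example above: 400 lux of projection against 600 lux of ambient light
print(f"{on_screen_contrast(400, 600):.2f}:1")  # 1.67:1
```

Under this model the picture has well under 2:1 contrast, which matches the intuition that very little of the image would remain visible.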

Is that right?

Back to the subject: I measure the mean illuminance (lux) at the projection site using a lux meter (an app on my phone) and aim for the projector to deliver at least 50% more than that. Naturally, the higher the better, but high-lumen projectors are not cheap. The 50% rule seemed a good choice when I first faced this situation, but I wanted to know whether any of you have a general or personal criterion for this.

Best wishes

-

Take a look at this tool from Projector Central https://www.projectorcentral.com/Epson-Pro_G7400U-projection-calculator-pro.htm

They use the foot-lambert (fL) as the unit of luminance. Elsewhere I read that 20 to 25 fL is a desirable image brightness.
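Since the thread moves between foot-lamberts and cd/m^2 (nits), a small conversion helper may be handy. It relies only on the definition 1 fL = (1/pi) cd/ft^2 and the exact length of the foot; the function names are just illustrative.

```python
import math

FT2_IN_M2 = 0.3048 ** 2  # 1 ft = 0.3048 m exactly


def fl_to_nits(fl: float) -> float:
    """Foot-lamberts to cd/m^2 (nits), using 1 fL = (1/pi) cd/ft^2."""
    return fl / (math.pi * FT2_IN_M2)


def nits_to_fl(nits: float) -> float:
    """cd/m^2 (nits) to foot-lamberts."""
    return nits * math.pi * FT2_IN_M2


# The 20-25 fL range mentioned above, expressed in cd/m^2
print(f"{fl_to_nits(20):.1f} to {fl_to_nits(25):.1f} cd/m^2")  # 68.5 to 85.7 cd/m^2
```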

-

Hi,

There is a lot of great information and many references here: previous thread

Best wishes

Russell

-

Thanks to bonemap for the link to that previous thread.

A couple of additional things. I think that having merely 50% higher illuminance from the projector than the existing lighting is almost certainly far too low. Due to the inherent nonlinearity of the human visual system (see Aolis' post), an image that is 50% brighter won't actually look 50% brighter; it'll look 20% brighter at best. If you have any degree of control over the lighting in the venue, it's best to let other lights fall on the projection surface as little as possible.

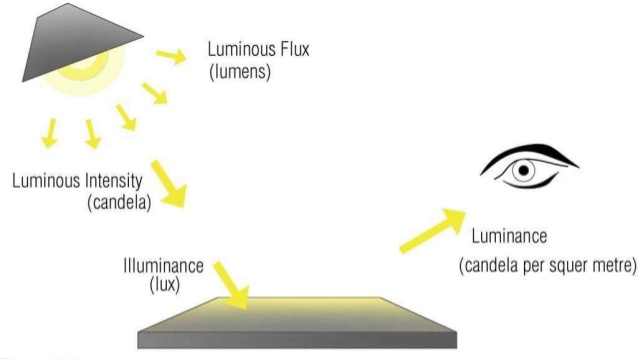
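A rough illustration of that nonlinearity uses Stevens' power law, a model not named in the thread, so treat it as an assumption: perceived brightness grows roughly as luminance raised to an exponent below 1. Exponents of about 0.33 to 0.5 are often quoted for brightness, depending on viewing conditions.

```python
def perceived_brightness_ratio(luminance_ratio: float, exponent: float) -> float:
    """Stevens' power law: perceived brightness grows as luminance ** exponent."""
    return luminance_ratio ** exponent


# Exponents of roughly 0.33 to 0.5 are often quoted for brightness (assumed here)
for exponent in (0.33, 0.5):
    looks = perceived_brightness_ratio(1.5, exponent) - 1
    print(f"exponent {exponent}: 50% more light looks about {looks:.0%} brighter")
```

This prints about 14% and 22% respectively, in line with the "20% brighter at best" estimate.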

Illuminance vs luminance: best not to get those mixed up. Casually speaking, illuminance, measured in lux (SI unit) or foot-candles (imperial), is a measure of the incident light hitting a surface. Luminance, measured in candelas per square metre (SI) or foot-lamberts (imperial), is a measure of the light being reflected or emitted from a given surface. When setting up cinema projection (or calibrating monitors), luminance is key; for example, 2D cinema projection is specified at 48 cd/m^2 or 14 fL. As a super-rough rule of thumb when projecting onto a white surface, if you divide your illuminance in lux by something like 3.5, you get a likely luminance in cd/m^2. (This changes somewhat if you have a dirty or darker surface or, on the other hand, a screen with gain > 1.0.)
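The divisor of 3.5 is consistent with the standard relation for a perfectly diffuse (matte) surface, L = E * rho / pi, if the surface reflects about 90% of the light, since pi / 0.9 is roughly 3.5. The sketch below assumes a matte surface viewed on-axis; the default factor of 0.9 is an assumption, and it does not model how high-gain screens change with viewing angle.

```python
import math


def luminance_from_illuminance(lux: float, gain: float = 0.9) -> float:
    """On-axis luminance (cd/m^2) of a matte surface: L = E * gain / pi.

    gain is the surface's brightness relative to an ideal, perfectly diffuse
    white reflector (1.0). For a matte surface this is roughly its reflectance;
    0.9 is an assumed value for a clean white wall or screen.
    """
    return lux * gain / math.pi


def lux_needed_for(target_nits: float, gain: float = 0.9) -> float:
    """Illuminance (lux) needed to reach a target on-axis luminance."""
    return target_nits * math.pi / gain


print(round(luminance_from_illuminance(400), 1))  # 114.6, vs 400 / 3.5 = 114.3
print(round(lux_needed_for(48)))  # 168 lux for the 48 cd/m^2 cinema target
```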

I've been researching this general question myself recently, looking for a real-life rule of thumb for estimating a projector's illuminance on a given surface from its ANSI lumen rating. As DBO wrote in the other thread, the expected illuminance is the luminous flux (number of lumens) divided by the surface area. This rests on the bold assumption that the projector's published lumen rating is accurate. I've only measured one office-grade projector so far; it's rated at 2000 ANSI lumens but only achieves roughly 1300 without any further correction. When I corrected its (greenish) native white point to something closer to D65, it predictably got worse still, measuring no more than 1100. Based on this single sample, my rule of thumb is something like illuminance = ANSI rating * 0.55 / surface area. I hope to measure more projectors going forward.
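That rule of thumb, and its inverse for sizing a projector, can be sketched as follows. The 0.55 derating is the single-sample figure from this post (1100 measured / 2000 rated), not a general constant, so it is left as a parameter.

```python
def estimated_lux(ansi_lumens: float, area_m2: float, derating: float = 0.55) -> float:
    """Rule-of-thumb illuminance: ANSI rating * derating / screen area."""
    return ansi_lumens * derating / area_m2


def ansi_lumens_needed(target_lux: float, area_m2: float, derating: float = 0.55) -> float:
    """Invert the rule of thumb: rated ANSI lumens needed for a target illuminance."""
    return target_lux * area_m2 / derating


# 2000 ANSI lm projector on a 3 m^2 screen, and the rating needed for 400 lux there
print(round(estimated_lux(2000, 3.0)))      # 367
print(round(ansi_lumens_needed(400, 3.0)))  # 2182
```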

I would be somewhat distrustful of "light meter" apps on phones, though I must admit I haven't tried any. If possible, borrow a genuine light meter from a photographer. If it doesn't give you an actual lux reading but shows EV (exposure value), you can use a chart to estimate the illuminance.
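Instead of a chart, the conversion can be approximated from the incident-light meter equation N^2 / t = E * S / C, with EV = log2(N^2 / t), which gives E = C * 2^EV / S. The calibration constant C = 250 used below is a commonly quoted nominal value; actual meters and receptor types differ, so treat the result as an estimate.

```python
def ev_to_lux(ev: float, iso: float = 100.0, calibration_c: float = 250.0) -> float:
    """Approximate incident illuminance (lux) from an incident-light EV reading.

    From the incident-meter equation N^2 / t = E * S / C with EV = log2(N^2 / t),
    so E = C * 2**EV / S. C = 250 is a common nominal value; real meters vary.
    """
    return calibration_c * 2 ** ev / iso


for ev in (6, 8, 10):
    print(f"EV {ev} at ISO 100 is roughly {ev_to_lux(ev):.0f} lux")
```

At ISO 100 this reduces to the familiar E = 2.5 * 2^EV, giving 160, 640 and 2560 lux for EV 6, 8 and 10.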

https://www.sekonic.com/united...

-

Thank you all for the information! I think it's not easy to define a good rule of thumb for this because of all the variables involved. Summarizing, I would say:

- The published lumen rating of a projector is not reliable. I'll start using the 0.55 factor, thank you @3perf. I have some experience with this: I once compared a new 5000 lumen projector to an old 75000 lumen projector, and side by side the new one was much better. Lamps age, and rental companies don't always replace them.

- The difference between illuminance and luminance is roughly a consequence of the screen gain. Estimating the screen gain alone would be a headache, right? Fabrics may be easy to evaluate, but how do you evaluate the gain of a colored wall?

- The website projectorcentral.com gives a reference based on nits:
  - 51 or less: too low
  - 52 to 171: good for low ambient light
  - 172 and above: good for high ambient light

- Still, it depends on the ambient light. Maybe it's best to run some tests, even though that's costly.
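Chaining the thread's two rough factors (0.55 for rated vs. measured lumens, and lux / 3.5 for luminance on a white surface) with the Projector Central bands gives a quick first guess. This is only a sketch: both factors were flagged in the thread as rough, and treating the gap between 51 and 52 nits as a single cut at 52 is an assumption.

```python
def screen_nits_estimate(ansi_lumens: float, area_m2: float,
                         derating: float = 0.55, lux_per_nit: float = 3.5) -> float:
    """Very rough on-screen luminance from the thread's two rules of thumb."""
    lux = ansi_lumens * derating / area_m2
    return lux / lux_per_nit


def projectorcentral_band(nits: float) -> str:
    """Bands as summarized above; the cut at 52 nits is an assumption."""
    if nits < 52:
        return "too low"
    if nits < 172:
        return "good for low ambient light"
    return "good for high ambient light"


nits = screen_nits_estimate(2000, 3.0)
print(f"{nits:.0f} nits: {projectorcentral_band(nits)}")
```

For a 2000 ANSI lm projector on a 3 m^2 screen this lands at about 105 nits, in the "low ambient light" band; a test with the actual venue lighting is still the better check.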

-

If you're talking about coloured walls, I don't think it's useful to think in terms of a screen's gain. A screen with a gain of 1.0 ideally reflects the light in a completely diffuse manner: whether you look at it perpendicularly or from an angle, you see the same luminance. On a screen with a higher gain, you would see a BRIGHTER image when viewing perpendicularly, but a DARKER image when viewing at an angle. In fact, even when you're viewing from the centre, you often see a pronounced hotspot in the middle (independent of the projector's own hotspot), and the image rapidly darkens towards the sides. This is a common annoyance, for example, with the silver screens designed for polarized 3D stereoscopic projection.

I think that, if you have a dark or coloured wall, it may be *technically* correct to speak of gain, but it's a bit misleading, because gain is usually a property consciously designed into a projection surface, and it describes HOW the surface reflects the light. Think more in terms of absorbed and reflected light.

As for the difference between luminance and illuminance: you can't easily compare them, and it's more complicated than throwing in a screen gain. They are two completely different quantities, like volts and amps, or litres and grams. I did my best to describe the difference in my earlier post, but throwing in that factor of 3.5 was a gross oversimplification on my part (because it throws all the math overboard), and maybe I shouldn't have done that.

If you're serious about predicting light levels for projectors and the surfaces they project onto, ideally you'd get yourself both a luminance and an illuminance meter, or a device which has both functions.

Are you sure your older reference projector had 75000 lumens? Because that would have been a massive beast, probably designed to fill the very largest of cinema screens. ;-)

-

There are a lot of projectors on the market, which makes it quite difficult to find the best projector for business or personal use. You can check the list of best projectors available.