Seeking Beta Testers: Rokoko Smartsuit Pro and Isadora

-

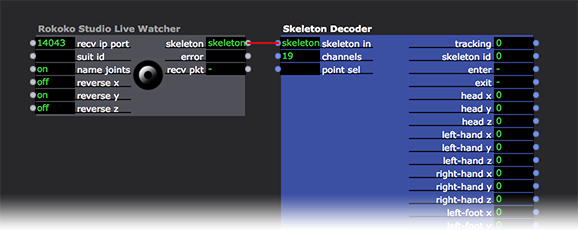

I am happy to announce "Rokoko Studio Live Watcher," a new actor that allows Isadora to receive live motion capture data from the Rokoko Smartsuit Pro, an affordable, wireless, markerless motion capture suit.

If you don't know the Smartsuit Pro, I suggest you take a look at this video to see it in action and this page to learn more about the system itself. In short, the Smartsuit Pro uses 19 six-degree-of-freedom sensors that sense the movement of a performer. Used in coordination with our Rokoko Studio Live Watcher actor, this gives you an equal number of x/y/z position outputs, each reporting the position of one sensor in space. Because the data is sent to attached computers via WiFi, you can track the performer over a much larger area than you could with traditional camera tracking.

We've now formed a relationship with Rokoko, who will be adding an Isadora preset to the output options in their software Rokoko Studio Live, which handles the communication between the Smartsuit and third-party applications like Isadora. This will ensure fast and easy setup when integrating their system with ours.

Luckily, one major choreographer has already contacted me about using this plugin in a performance next April. If this works out, their show will serve as a great testbed to ensure this plugin is robust and reliable.

But for sure, "the more the merrier." If you have a Smartsuit Pro and would like to participate in the beta test phase for this actor, please open a ticket and let us know. We'll hook you up with the beta version of this plugin.

Sincerely,

Mark Coniglio

-

Awesome! I've been talking to the guys at Rokoko for a couple of years, trying to borrow a Smartsuit for Oulu Dance Hack. Maybe this year we can get one, now that the Isadora link is in place...... fingers crossed.

-

@dbini said:

Maybe this year we can get one, now that the Isadora link is in place...... fingers crossed.

If that doesn't work out, maybe my partner @enibrandner and I can come, and she'll bring her suit. ;-)

Best Wishes,

Mark

-

well that would be lovely :) you are very welcome. we've got some awesome artists this year, and some streetlights to hack. I'm really looking forward to it. https://taikabox.com/hack/

-

OOOh! WoW! This looks exciting!

-

Hi,

It would be great to see a screen capture demonstrating the Smartsuit running through the Isadora plugin. Some evidence of the system's capability would help us assess and evaluate its effectiveness.

Best wishes

Russell

-

Hi there,

In the next week I'm going to experiment with the suit with my colleagues at the HKU. I will make screen recordings and record the movement.

-

I am interested in exploring this with a local dance troupe. A couple of quick questions: (1) In terms of live integration, would the input data coming from the suit be able to go to 3D objects to say animate an Unreal Engine Model? (2) What are the anticipated data sets designed to integrate to in Izzy? (3) What is the current latency?

Looking forward to exploring the possibilities.

-

As a follow-up: is there any investigation into using Izzy and Unreal Engine 4 in this capacity via their development of Live Link, as discussed in this video at about 17:47? UE4 mentioned they are looking for software partners. Izzy, Rokoko, and UE4 would be a spectacular interconnection of arts technology with an amazing amount of creative possibilities. I hope this is or can be integrated sometime down the line.

-

Here is that link from Unreal Engine:

-

@kdobbe said:

(1) In terms of live integration, would the input data coming from the suit be able to go to 3D objects to say animate an Unreal Engine Model?

Wow! That is an ambitious expectation from Isadora. In the screen grab provided at the beginning of this thread we see the data module output to the Skeleton Decoder. This is also implemented with the beta OpenNi plugin, so we can anticipate that the output is a series of xyz floats for each joint. Unfortunately, the 3D Player in Isadora does not pass grouped geometry or implement any hinged constraints. You would have to use a 3D Player module for each bone and apply your own math to the transforms of each bone, and that is not a great workflow IMHO. It would seem more efficient to stream real-time rendering into Isadora from a 3D animation engine using NDI, Syphon, or Spout. Well, that is my guess based on working with 3D models in Isadora over the last couple of years.
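To give a feel for the per-bone math mentioned above, here is a minimal sketch in Python. It is not Isadora code, and nothing in it comes from the actor itself: the names `BoneTransform` and `bone_transform` are hypothetical, and the rotation convention (a bone model that points along +Z at rest, yaw about the Y axis, pitch toward +Y) is an assumption that may not match how the 3D Player orders its rotations. It only shows that each bone needs a position, a length, and an orientation derived from two joint positions.

```python
import math
from typing import NamedTuple, Tuple

Vec3 = Tuple[float, float, float]


class BoneTransform(NamedTuple):
    position: Vec3    # midpoint between the two joints
    length: float     # distance between the joints (could drive a scale input)
    yaw_deg: float    # rotation about the Y axis (assumed convention)
    pitch_deg: float  # elevation toward +Y (assumed convention)


def bone_transform(parent: Vec3, child: Vec3) -> BoneTransform:
    """Derive a placement for one bone from its two joint positions.

    Assumes the bone model points along +Z when unrotated.
    """
    dx, dy, dz = (c - p for p, c in zip(parent, child))
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0.0:
        raise ValueError("joints coincide; bone direction is undefined")

    position = tuple((p + c) / 2.0 for p, c in zip(parent, child))
    yaw = math.degrees(math.atan2(dx, dz))
    # Clamp to guard against tiny floating-point overshoot before asin.
    pitch = math.degrees(math.asin(max(-1.0, min(1.0, dy / length))))
    return BoneTransform(position, length, yaw, pitch)
```

You would need one such calculation per bone on every frame, which is where the workflow starts to feel heavy compared with streaming rendered video from a dedicated 3D engine.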

Where I would be excited to work with the moCap data is through the 3D Model Particles module in Isadora because it does pass grouped geometry. It is not too difficult to imagine some credible real-time effects that take advantage of the dynamics associated with the Isadora 3D particle system.

I would be happy to hear of future developments with the 3D geometry line up in Isadora.

Best Wishes

Russell

-

@kdobbe said:

A couple of quick questions: (1) In terms of live integration, would the input data coming from the suit be able to go to 3D objects to say animate an Unreal Engine Model? (2) What are the anticipated data sets designed to integrate to in Izzy? (3) What is the current latency?

As @bonemap correctly indicated, what you're going to get in Isadora is a list of X/Y/Z points, one for each of the 19 sensors on the Smartsuit Pro. Isadora's 3D capabilities are relatively basic -- and certainly very basic when compared to something as comprehensive as Unreal Engine. It is not our intention to try to build something comparable to Unreal Engine in Isadora -- that simply isn't realistic and that functionality has been deeply addressed by software programs that focus on doing so. In short, you shouldn't expect to see the ability to rig up animated character skeletons coming in Isadora any time soon.
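For anyone who wants to picture that data outside Isadora, here is a small Python sketch of one frame as a mapping from sensor name to an X/Y/Z point. The sensor names are the ones listed later in this thread. Everything else is an assumption made for illustration: the helper `frame_from_flat` is hypothetical, and the idea that the values arrive as one flat list in this particular order is not something the actor is documented to do.

```python
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# The 19 sensor names given later in this thread.
SENSOR_NAMES = (
    "head", "neck",
    "left-shoulder", "left-upper-arm", "left-lower-arm", "left-hand",
    "right-shoulder", "right-upper-arm", "right-lower-arm", "right-hand",
    "stomach", "chest", "hip",
    "left-upper-leg", "left-lower-leg", "left-foot",
    "right-upper-leg", "right-lower-leg", "right-foot",
)


def frame_from_flat(values: Sequence[float]) -> Dict[str, Vec3]:
    """Group a flat [x0, y0, z0, x1, y1, z1, ...] list by sensor name.

    The flat layout and its ordering are assumptions for this sketch.
    """
    expected = 3 * len(SENSOR_NAMES)
    if len(values) != expected:
        raise ValueError(f"expected {expected} values, got {len(values)}")
    return {
        name: (values[3 * i], values[3 * i + 1], values[3 * i + 2])
        for i, name in enumerate(SENSOR_NAMES)
    }
```

With a frame in this form, looking up `frame["left-hand"]` gives the three coordinates for that sensor, which is roughly what you would wire from the actor's outputs to whatever you want to control.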

There is already a link between Rokoko and Unreal -- you can check out this video tutorial below to see it in action. That would seem to be a far more profitable route to go if you wish to do animated avatars in real time.

In terms of latency, I haven't measured it. But my personal experience is that it's pretty fast -- certainly as fast as any camera tracking system I've used.

One limitation of all systems that rely on a combination of gyroscopic, acceleration and magnetic measurement (e.g., XSens, Perception Neuron and Rokoko) is that the sensors are sensitive to magnetic interference. That means that if there's a lot of ferrous metal (steel, iron) in your environment, you can run into problems. How well such systems perform in traditional theaters is something I cannot yet address, because I've not used the suit in such an environment. But for sure, it is a topic you should research carefully before investing in a suit that uses this kind of sensor technology.

I hope that helps.

Best Wishes,

Mark

-

hi,

The OpenNi tracking skeleton has up to 15 points. Could anyone clarify what the 19 points of this Smartsuit are, or just the differences? For example, does it add wrists and ankles?

Best wishes

Russell

-

@bonemap said:

The OpenNi tracking skeleton has up to 15 points. Could anyone clarify what the 19 points of this Smartsuit are, or just the differences? For example, does it add wrists and ankles?

It's kind of a different setup entirely. The names they give the sensor points are as follows:

"head"

"neck"

"left-shoulder"

"left-upper-arm"

"left-lower-arm"

"left-hand"

"right-shoulder"

"right-upper-arm"

"right-lower-arm"

"right-hand"

"stomach"

"chest"

"hip"

"left-upper-leg"

"left-lower-leg"

"left-foot"

"right-upper-leg"

"right-lower-leg"

"right-foot"

Best Wishes,

Mark

-

I was just looking at the XSENS website and they claim that their system is immune to magnetic interference...maybe a new development?

@mark, do you know anything about that system, and if it outputs data that can stream into Isadora similar to a Kinect sensor, or would it need its own custom Actor?

John

-

According to the Rokoko people, the claim about being immune is not very well proven -- but of course they would be inclined to take this position. I know for a fact that one performance I attended that used Perception Neuron suffered numerous failures because it was in an underground location surrounded by tons of metal. But in the end I can say neither yes nor no to XSens's claim.

Regarding input from XSens: unless they offer OSC, it would probably take another custom actor. I've contacted them to find out if this exists.

Best Wishes,

Mark

-

@mark Does Rokoko output OSC?

-

@jtoenjes said:

Does Rokoko output OSC?

No, which is why I made the actor to allow a user to get the data from the suit. It is certainly possible, however, to use this actor to convert the Rokoko values to OSC in Isadora and send them on somewhere else.

Best Wishes,

Mark

-

@mark Hi Mark, Kathleen from the NYC workshop here. I was wondering if I would be able to get the Rokoko actor, as I will have access to a suit in a few weeks. And please put me on a list (if you have one) to test out the Perception Neuron actor if you end up making one. Cheers-k

-

Please open a support ticket using the link in my signature and we can get started on the process of adding you to the beta-testing program.