video image processing / scaling - pixel to pixel possibility?

-

hej isadora community,

working on a new production with the fantastic isadora 3 on stage in berlin, I have run into an old question again: is there a way to render a video stream to an output / stage in its original size, without any scaling? two examples to explain what I mean:

I have a 4k video projector / screen with 3840x2160 pixels as output / stage and a live camera with a resolution of 1280x720 pixels as input. now I want to place the camera stream on the 4k output so that it does not fill the whole image (as would usually happen in isadora) but appears in its actual pixel size, taking up only 1280 of the 3840 pixels in width and 720 of the 2160 pixels in height, and so that I can move it to any area of the output image without losing its original resolution. if I just zoom down the automatically scaled-to-fill image with the zoomer actor, its resolution gets very low, as it seems that this procedure takes the original size (1280x720) as the starting point and then zooms down from there. the only way I found to achieve what I want is to scale the video to 3840x2160 with the scaler actor before I zoom it down with the zoomer actor. but this is quite performance hungry.

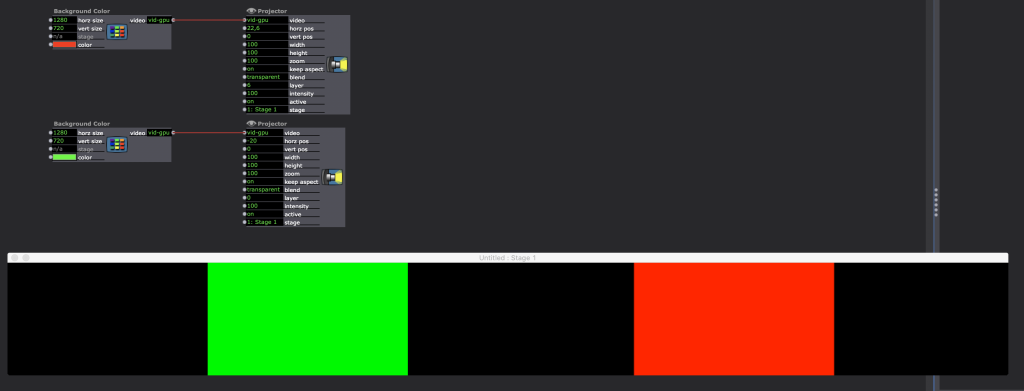
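To put numbers on the first example, here is a minimal sketch of the arithmetic only. It does not use any Isadora API; the function and variable names are made up for illustration, and the pixel-count ratio is only a rough proxy for why pre-scaling to 4k might be expensive.

```python
def native_fraction(source_px, stage_px):
    """Fraction of the stage a source covers when 1 source pixel = 1 stage pixel."""
    return source_px / stage_px

stage_w, stage_h = 3840, 2160   # 4k output from the post
cam_w, cam_h = 1280, 720        # live camera from the post

frac_w = native_fraction(cam_w, stage_w)
frac_h = native_fraction(cam_h, stage_h)

# Pre-scaling the camera to the stage size multiplies the number of
# pixels every later actor has to handle by this factor.
prescale_cost = (stage_w * stage_h) / (cam_w * cam_h)

print(f"width:  {frac_w:.2%} of the stage")
print(f"height: {frac_h:.2%} of the stage")
print(f"pixels after pre-scaling: {prescale_cost:.0f}x the original")
```

So an unscaled 1280x720 image covers one third of the 4k stage in each direction, while a 3840x2160 pre-scaled copy carries nine times as many pixels.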

another example with an at least related problem: for my current work I use a panoramic stage that combines five stages (for five projectors with a resolution of 1280x720 pixels each) in the great new stage setup, which gives one stage with a resolution of 6400x720 pixels. again I place a 1280x720 pixel live camera image on that stage. it is rendered in the middle of the stage in the right aspect ratio and - as stage and image height are the same here - actually matches the real resolution of the input. but if I now try to move this image to the left or right across the panoramic stage, it just disappears at the edge of its starting position, as if it were sliding under a layer that covers it. the only way I found to move the image visibly across the whole stage is an old workaround that I used in former projects to combine images with different aspect ratios and sizes: I send the 1280x720 live camera stream through a video mixer actor and connect a background color actor to its second video input. in the background color actor I set "horz size" to 6400 and "vert size" to 720 so that it matches the resolution of my panoramic stage. in the video mixer actor I set "frame scale" to "largest" and leave "mix amount" at 0. if I now place a zoomer actor between the output of the video mixer actor and the projector actor, I can move the image using "horz center" and "vert center". but this solution is also quite performance hungry.
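The layout of the panoramic stage can be sketched as plain arithmetic. This is only an illustration of the pixel ranges involved, not of how Isadora renders the stage; `projectors_covered` is a hypothetical helper, and it assumes the five projectors sit side by side without overlap or edge blending.

```python
PROJECTOR_W = 1280
PROJECTOR_COUNT = 5
STAGE_W = PROJECTOR_W * PROJECTOR_COUNT  # combined stage width: 6400

def projectors_covered(left_px, image_w=1280):
    """Return the 1-based projector numbers an unscaled image overlaps."""
    right_px = left_px + image_w
    covered = []
    for i in range(PROJECTOR_COUNT):
        start = i * PROJECTOR_W
        end = start + PROJECTOR_W
        # The image overlaps this projector if the two pixel ranges intersect.
        if left_px < end and right_px > start:
            covered.append(i + 1)
    return covered

centered_left = (STAGE_W - 1280) // 2     # left edge when centered: 2560

print(projectors_covered(centered_left))  # centered image
print(projectors_covered(0))              # pushed to the far left
print(projectors_covered(640))            # straddling two projectors
```

A centered image sits exactly on the third projector, so any horizontal move has to carry it across a projector boundary.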

are there any other, more elegant and less gpu- (or cpu?)-intensive methods or workarounds to do this?

I hope my explanations are comprehensible and I'm looking forward to every hint.

thanks and best wishes from berlin,

benjamin

-

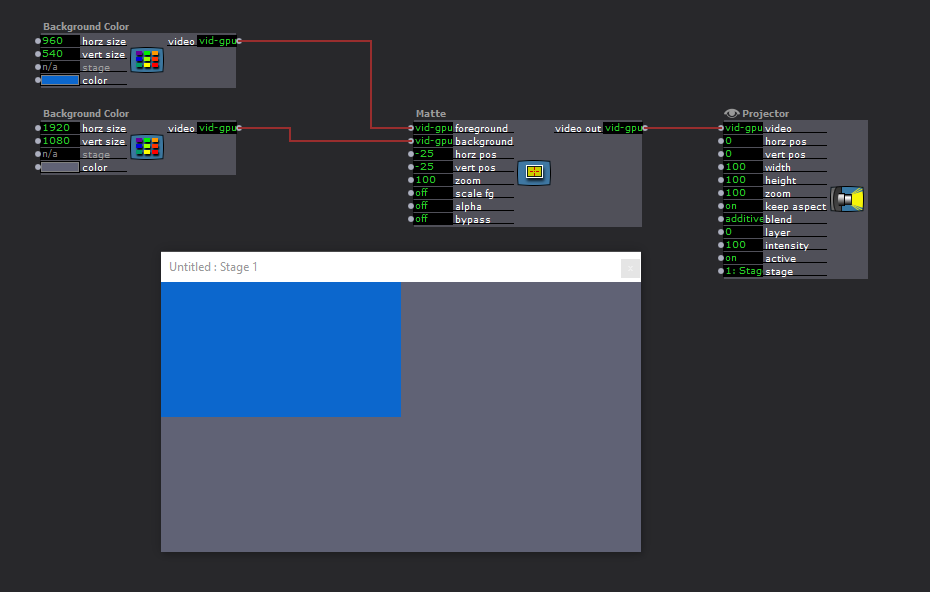

Yes, there are a few ways to do this, but probably the easiest, especially for the camera on the 4k output, is to use the Matte actor.

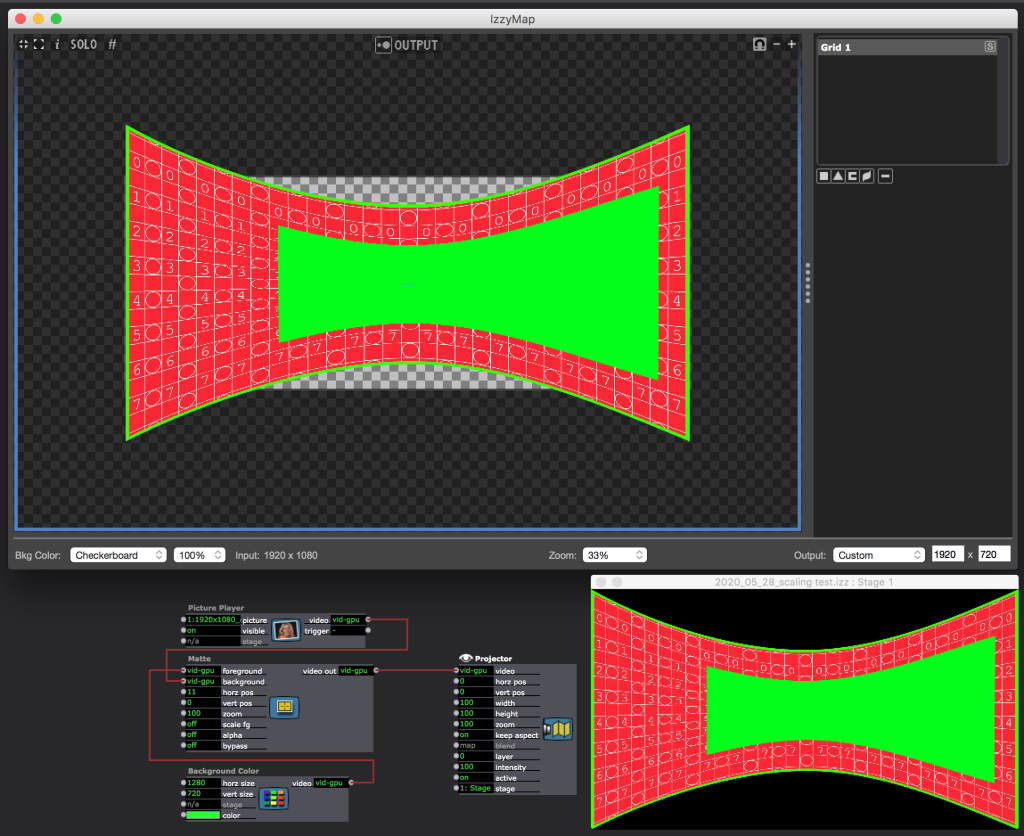

In the image above, the foreground (your webcam input) is placed on the Background input without scaling.

This is done by setting the 'scale fg' input to OFF.

I have placed the foreground video in the top left corner by setting the horz and vert pos inputs to -25; by default it is centered.

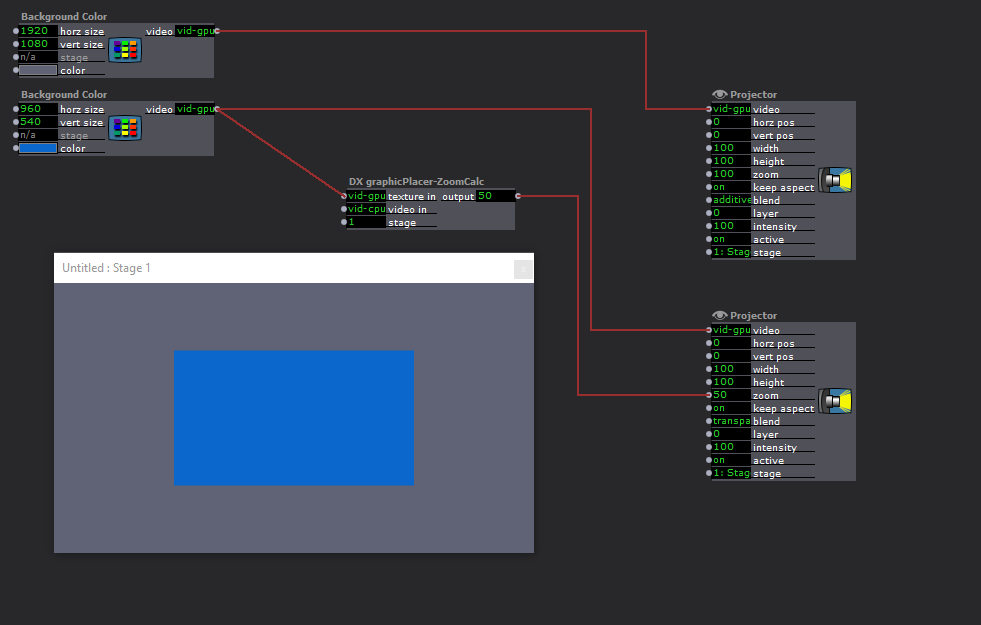

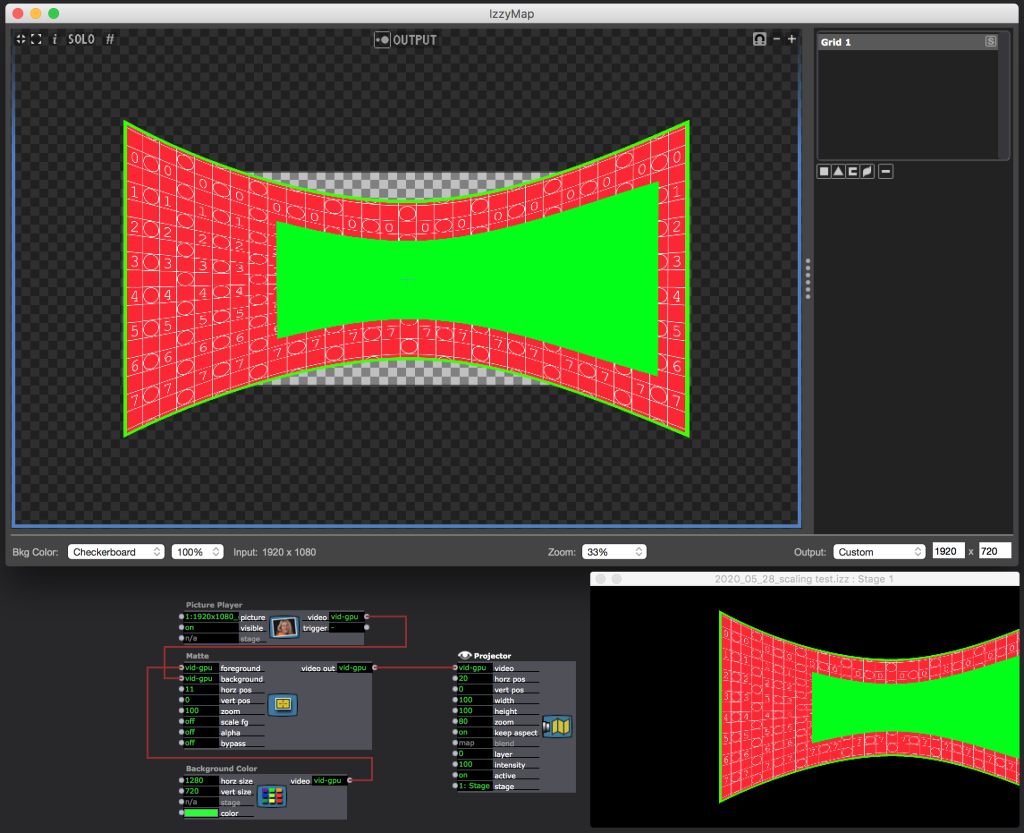

Another method, which may be more useful in other cases, is to use the Projector's Zoom input to set the size so that the input is shown unscaled.

Basically, the zoom needs to be calculated based on the dimensions of the stage that the input is going to be placed on.

I have created a User Actor that works for most cases. It looks at the stage number and the input size, and outputs the zoom required for the Projector.

Remember that the Projector's 'blend' will need to be changed to Transparent.

The User Actor I have been using: DX graphicPlacer-ZoomCalc.iua
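The zoom calculation described above could be sketched roughly as follows. This is not the content of the User Actor, which is not shown in the thread. It assumes that a Projector zoom of 100 scales the image to fit the stage while keeping its aspect ratio; `unscaled_zoom_percent` is a hypothetical name.

```python
def unscaled_zoom_percent(src_w, src_h, stage_w, stage_h):
    """Zoom value at which one source pixel lands on one stage pixel.

    Assumption: at zoom 100 the image is scaled to fit the stage
    (aspect ratio preserved), so the limiting dimension decides the scale.
    """
    fit_scale = min(stage_w / src_w, stage_h / src_h)
    return 100.0 / fit_scale

# 1280x720 camera on the 3840x2160 stage from the first example
zoom_4k = unscaled_zoom_percent(1280, 720, 3840, 2160)

# 1280x720 camera on the 6400x720 panoramic stage from the second example
zoom_pano = unscaled_zoom_percent(1280, 720, 6400, 720)

print(round(zoom_4k, 2), zoom_pano)
```

Under that assumption the 4k case needs a zoom of about 33.33, and the panoramic case needs 100, which agrees with the observation that the camera image already appears at its real resolution there.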

-

thank you, ryan, for these fast answers. both methods are quite interesting - for my purposes especially the first one, as it will also work on a mapped stage. it's just a pity that it is not possible to zoom in beyond 100 % in the matte actor; that would give more flexibility when using this actor to animate size and position on a bigger background. I'm looking forward to testing this performance-wise against my solution with the video mixer.

the second example with your user actor is also good, but as the zoom of the projector (unfortunately) also zooms the whole mapping, it probably only works on monitors or unmapped rectangular screens, right?

-

If zooming is holding you back from using these solutions, you can always throw one or more Zoomer actors or FFGLPanSpinZoom actors between the Matte actor and the Projector.

Best wishes,

Woland

-

thanks for the hint, woland, that's also the way I did it. I just have quite a big project with six hd-sdi live inputs and 13 outputs - monitors and projectors - and I'll play and stream (and move) quite a few different sources at the same time, so I'm looking for every possibility to avoid more actors in the stream, simply because I assume that every additional actor costs a bit of performance - which might be too simple a view. therefore I wanted to check if there is any way to do all this at the very end of the signal chain, like - I'm fantasizing - an input on the projector actor that turns all scaling off and renders the incoming image in its real pixel size on the mapped stage, plus some separate inputs to move this image horizontally and vertically on the mapped stage and also zoom it there, unlike the current "horz pos", "vert pos" and "zoom" inputs, which all move the whole mapping and not the input on the mapping. but that's probably rather a feature request :-)

-

If you need your foreground to be able to zoom to a larger size, you could simply use a 'Scaler' actor to resize it larger before placing it on the background. This will then allow it to zoom up to this new, larger 100% size, giving you more flexibility.

-

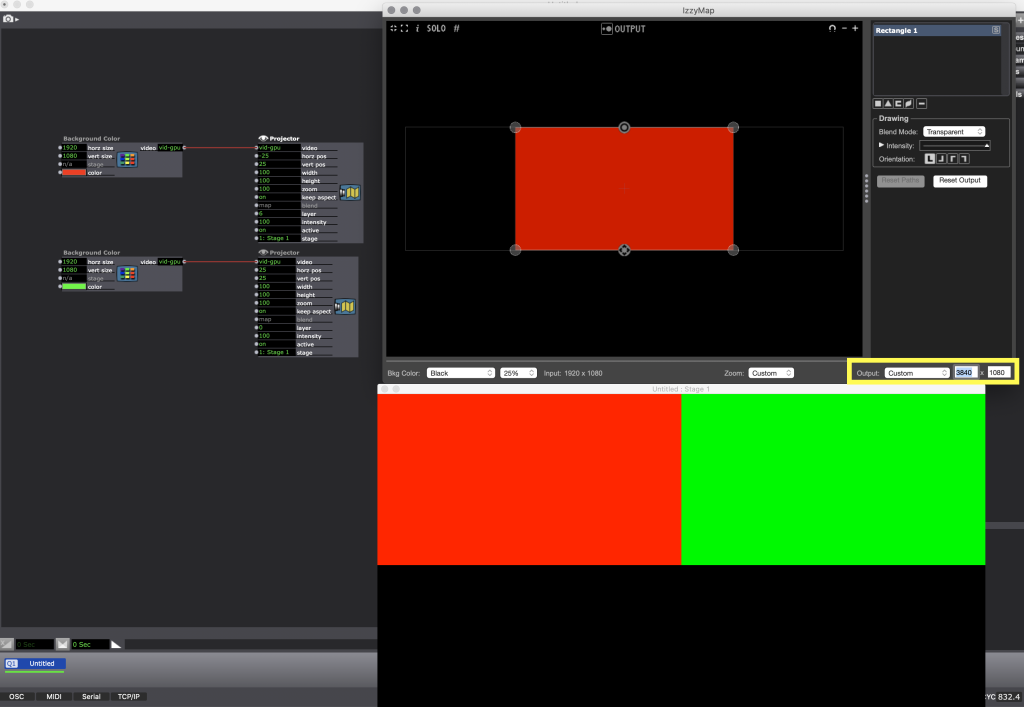

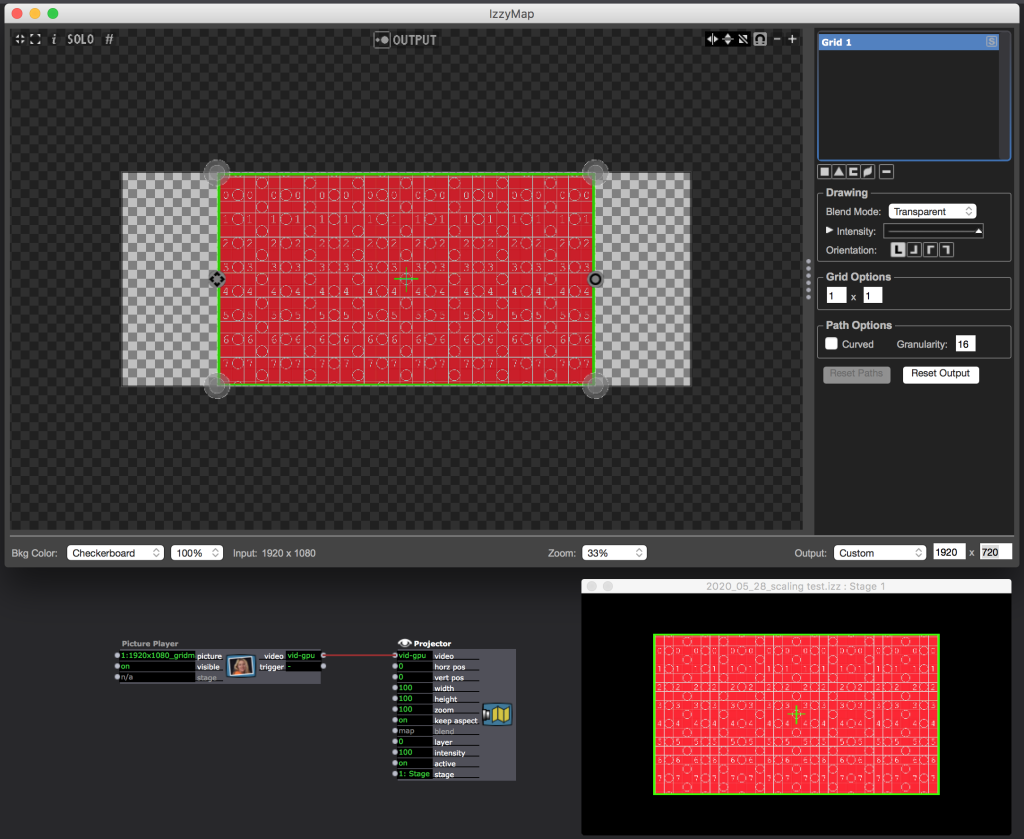

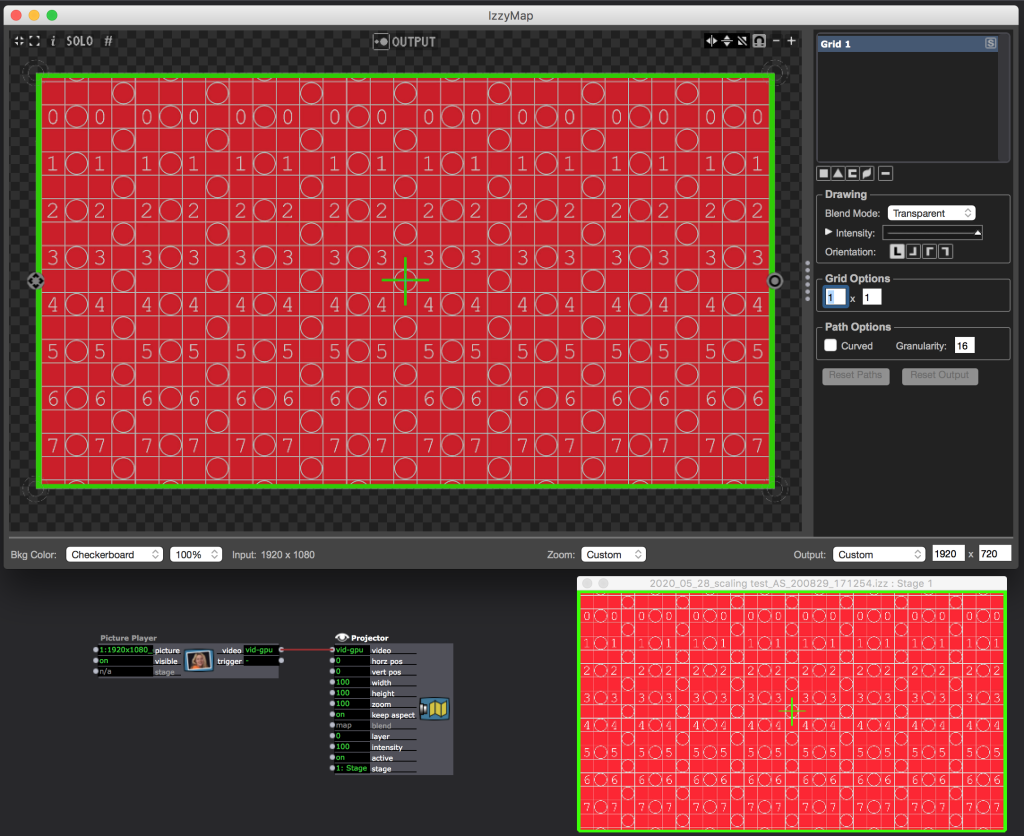

Actually, you can let IzzyMap do it for you easily. Set the output setting (see yellow rectangle) to custom, the width to the actual stage width and the height to the height of the source. This way the source will always be scaled to its original size, but you can use the Projector actor to position and zoom it on the stage.

Regarding the second issue, I actually can't reproduce it. The positioning of the picture works just fine with the projector actors.

Best

Dill

-

thanks, dill, that's a good hint. I actually experimented with the custom output setting of the mapper already and you are right, this is a great solution if you are working either on monitors or on rectangular projection surfaces to which you can keystone your output through the simplified mapping options in the stage setup and leave the mapper unused.

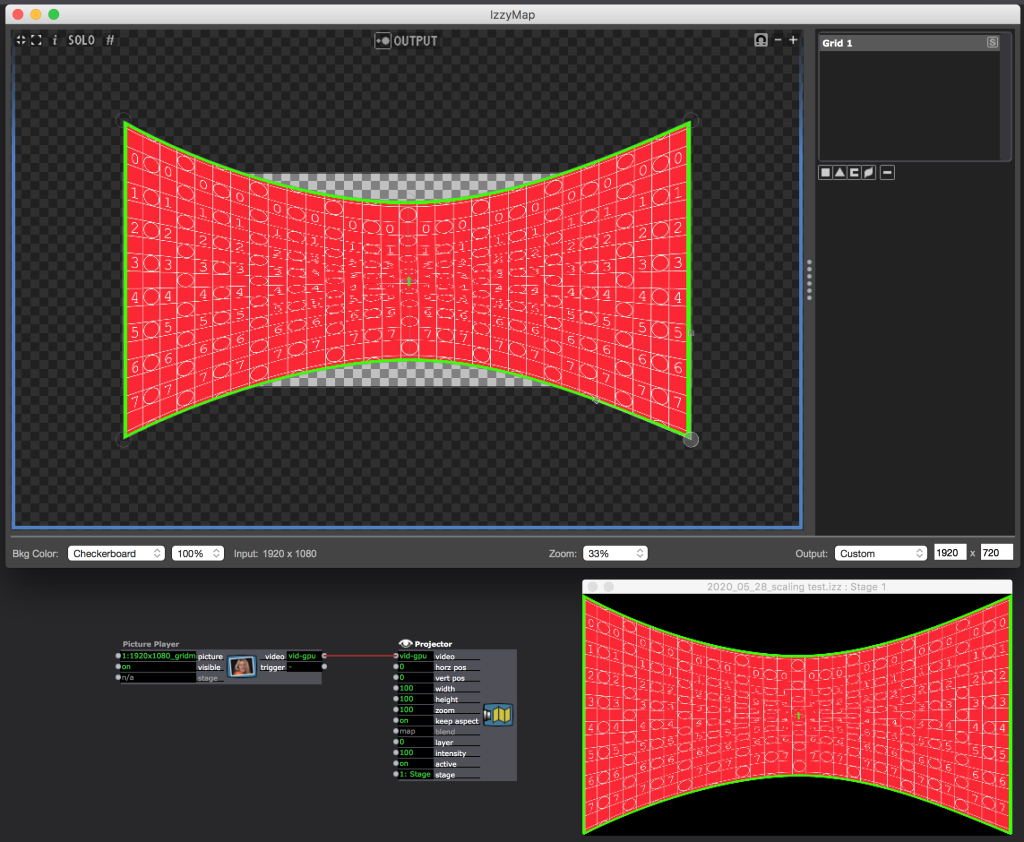

but as far as I tested it, and as far as I understand the logic of the projector actor in combination with the mapper, this no longer works as soon as you want to do a more complex mapping, for example on a curved wall or other architecture. let's take the example of the curved screen: let's assume I want to use the whole projection size of a 1920x1080 video projector to map an image onto a curved wall. then I would like to show several live streams with a resolution of 1280x720 in their real resolution on this mapping and move them around. following your advice I'd set the mapper output to custom and 1920x720. if I do that, the grid (or image) that I will use for mapping gets smaller on the stage / in my projection, as it now takes up only 720 of the projector's 1080 pixels in height.

that's fine so far, but as I want to use the whole 1920x1080 pixels of the video projector, I have to enlarge my grid in the output until it fills the whole image again, so that I can do the mapping.

but by doing this I lose the effect of the correct pixel height, which works perfectly on a clean rectangular surface. if I now send some 1280x720 material to the input, it fills the whole mapped area again.
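As a rough illustration of why the effect is lost, here is the arithmetic for the simplest case, assuming the grid is stretched only vertically and uniformly (a real curved mapping distorts unevenly, so this is just the average effect). The variable names are made up for this sketch.

```python
projector_h = 1080   # full projector height from the example
custom_h = 720       # custom mapper output height, matched to the source
source_h = 720       # height of the live stream

# Enlarging the grid from 720 back to 1080 rows stretches everything on it.
stretch_y = projector_h / custom_h

# Height the 720-row source ends up with on the projector after the stretch.
displayed_h = source_h * stretch_y

print(stretch_y, displayed_h)
```

With a stretch of 1.5 the 720-pixel source ends up 1080 projector pixels tall, so it is no longer shown pixel to pixel.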

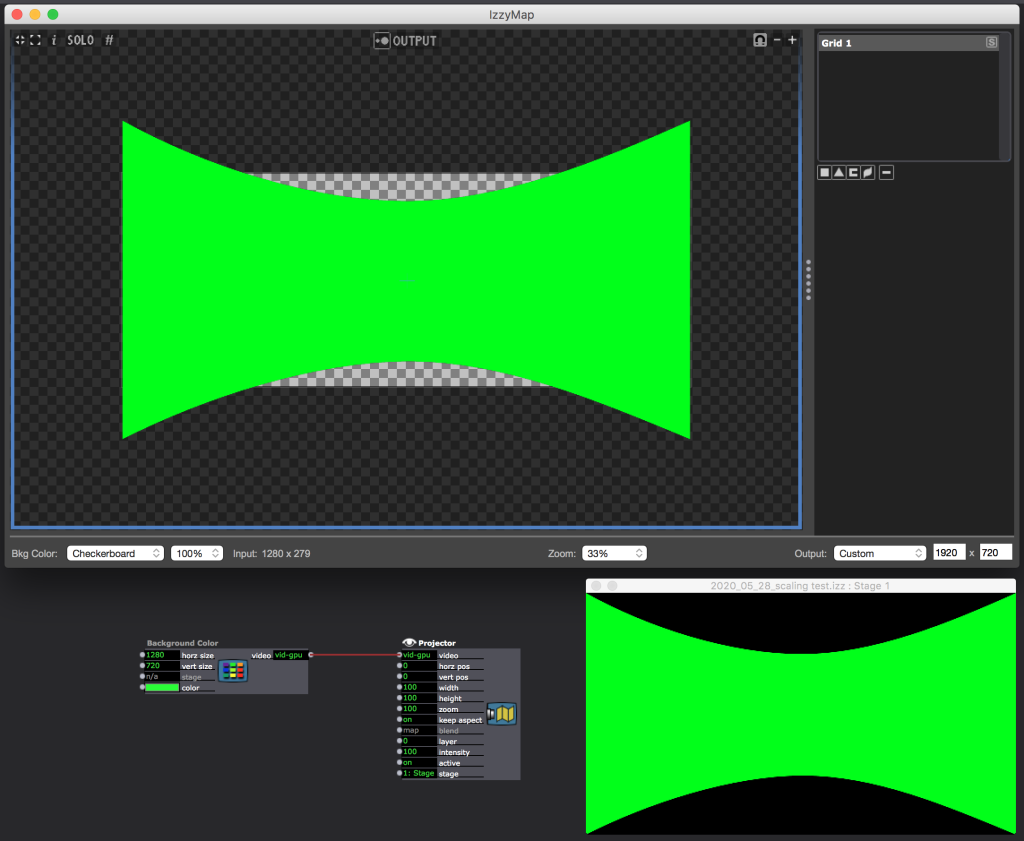

I know that the idea of "pixel to pixel" is not really precise or right in the case of a projection surface that is distorted this much; it's rather about the right relation between the grid that I'm mapping, which has the physical resolution of the video projector, and the stream I want to render on it. I hope that's comprehensible. I used ryan's nice workaround to try to put that in an image (the strangely distorted aspect ratio can be ignored, as this is only a sketchy fantasy mapping).

the other thing, positioning through the projector, also works perfectly fine on a rectangular, non-mapped screen or monitor. but as soon as you have a more complex mapping, you move the whole mapping and not the input stream inside the mapping, which does not help when you map onto architecture that does not move.

please correct me if I'm wrong or just missed something here. anyway, I think the general idea is good, as I can imagine using it as the first of two steps: output it unmapped to a virtual stage and then use the "get stage image" actor to send it to a second projector on which I can do the actual mapping on architecture - even though I don't know what that will mean performance-wise...

best ~ ben

-

thanks, ryan, a good hint again. I also used the scaler actor in this project before I posted my question; the problem is again performance, as I need to be able to zoom from very small images to super big ones (with the zoomer actor sometimes 1000 %), and as I work with up to 8 or even more streams, scaling all of them brings the performance way down. but anyway, I got a lot of good answers, hints and workarounds in this thread and will try to test them all. I just wanted to be sure that I don't miss a possibility (like my fantasies from my last answer to woland) to keep the resolution of the streams at 1280x720 for as long as possible - for performance reasons.

but in general I also want to say that I'm really impressed how stable and powerful isadora 3 performs with a late 2013 trashcan-macpro with 4 matrox triplehead cards connected and six hd-sdi inputs via black syphon from a blackmagic decklink quad and a blackmagic decklink duo, both in a sonnet echo express SE II thunderbolt 2 expansion chassis - so thanks to mark and the whole team for the great work!

-

@ben said:

to use it in a first step of two, output it unmapped to a virtual stage and then use the "get stage image" actor to send it to a second projector on which I can do the actual mapping on architecture - even though I don't know what that will mean performance-wise...

Yes, that would be the way I'd go. I think this is the option with the least performance impact you can get.