Best Motion Tracking Software?

-

Hi,

Arguably the best Isadora tracking option for multiple performers is the OpenNI Tracker BETA plugin:

Isadora beta testing OpenNI Tracker plugin from Bonemap on Vimeo.

Three performers tracked simultaneously with the OpenNI plugin, with two depth sensors placed at 180° on either side of the performers, producing data for six skeletons made using OpenNI Line Puppet. In addition, two Luminance Key modules take the depth image and movie player video to produce performer silhouettes at defined distances from the sensors. On top of that, two Shape modules are connected to Measure Color modules to produce circles at defined distances from the sensors.
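The Luminance Key trick works because a depth image encodes distance as brightness, so keying a band of luminance keeps only what sits in a band of distance from the sensor. Below is a minimal sketch of that idea in plain Python; it is not Isadora's actual Luminance Key. It assumes raw per-pixel depth in millimetres with 0 meaning "no reading" (a common depth-sensor convention), and `depth_band_mask` and its parameters are hypothetical names.

```python
def depth_band_mask(depth_mm, near_mm, far_mm):
    """Return a silhouette mask: 255 where near_mm <= depth < far_mm, else 0.

    depth_mm is a list of rows of per-pixel distances in millimetres
    (an assumption for this sketch). Pixels with depth 0 ("no reading")
    are never kept.
    """
    return [
        [255 if d > 0 and near_mm <= d < far_mm else 0 for d in row]
        for row in depth_mm
    ]


if __name__ == "__main__":
    # Tiny 2 x 4 frame: a performer at about 2 m in front of a wall at about 4.5 m.
    frame = [
        [4500, 2000, 2050, 4500],
        [0, 1980, 2100, 4480],
    ]
    # Keep only what lies between 1.5 m and 3 m from the sensor.
    for row in depth_band_mask(frame, 1500, 3000):
        print(row)  # [0, 255, 255, 0] on both rows
```

Two masks with different near/far values would give silhouettes at two distances, in the spirit of the two Luminance Key modules described above.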

OpenNI x 5 sensors from Bonemap on Vimeo.

-

Thanks Bonemap, this is really helpful, and I have used the Kinect before. The only issue is range: we have a 10m x 10m performance space viewed by an audience from all sides, and I don't think the Kinect reaches more than about 4m from the subject. Do you know any way round this? Also, if I place a depth sensor perpendicular to the performers, it might pick up the audience behind them. A camera pointing down from the rig seemed a better option.

-

@nic said:

A camera pointing down from the rig seemed a better option

If you are considering pointing a camera down, you may want to consider pointing a few down and matting them together into a single image.
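Matting four top-down feeds together amounts to placing each camera's frame in one quadrant of a larger image. The sketch below shows only that layout step in plain Python, with frames as lists of pixel rows; `tile_2x2` is a hypothetical helper, not an Isadora function. In practice the cameras would also need to be aimed so their floor footprints meet (or slightly overlap and are cropped) for the mosaic to line up.

```python
def tile_2x2(top_left, top_right, bottom_left, bottom_right):
    """Combine four equally sized frames (lists of pixel rows) into one 2 x 2 mosaic."""
    frames = (top_left, top_right, bottom_left, bottom_right)
    height, width = len(top_left), len(top_left[0])
    for frame in frames:
        if len(frame) != height or any(len(row) != width for row in frame):
            raise ValueError("all four frames must have the same size")
    # Join rows side by side for the upper half, then for the lower half.
    upper = [left + right for left, right in zip(top_left, top_right)]
    lower = [left + right for left, right in zip(bottom_left, bottom_right)]
    return upper + lower


if __name__ == "__main__":
    a = [[1, 1], [1, 1]]
    b = [[2, 2], [2, 2]]
    c = [[3, 3], [3, 3]]
    d = [[4, 4], [4, 4]]
    for row in tile_2x2(a, b, c, d):
        print(row)
```

Each camera keeps its own quadrant, so a blob's position in the mosaic still maps back to a known area of the floor.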

Matting the feeds into one image may allow you to use Eyes++ to do the tracking. Using all 4 of Isadora's camera inputs to create a single image could, if done carefully, provide rather nice tracking.

-

@nic said:

A camera pointing down from the rig seemed a better option.

Hi Nic,

This is a great topic, and one that comes up a fair bit. It is also interesting for me because it prompts me to reflect on all of the tracking projects I have attempted and where they might lead next. The top-down approach has its nuances too: there are many variables to consider when using a camera that depends on reflected light and on the lens ratio. So assume you have a wide-angle lens on a camera mounted in the grid, or an array of cameras as Dusx suggests, and a consistent lighting state that captures an image with clear tracking ‘blobs’. Your question about the deficiencies of Eyes++ will then depend on the whole hardware, camera, lens and rigging configuration that you have or intend to set up.

You can simulate the optimum blob tracking image and then test the Eyes++ module against the tracking outcome you want for your project. Here is an example of a simulation I put together last year to test and calibrate Eyes++: blob tracking screen capture

The critical thing for me in the simulation was calibrating Eyes++ for blob occlusion and for the persistence of tracking individual ‘blobs’ as they came together or crossed paths.

Running the simulation led me to some conclusions. Firstly, while I could control the simulation image, could I be confident of capturing a comparable image of people/performers moving around within the range of the camera? That question comes down to consistent lighting: for example, a static lighting state that allows Eyes++ to be finely calibrated so that blob tracking works at its best in terms of occlusion and persistence. In the past I played with infrared lighting ‘hacks’, which use an infrared filter over the camera lens and dedicated infrared lights. I then realised that a dedicated thermal infrared camera (such as a FLIR) could simplify this by removing the need for infrared lighting instruments. This is the system I currently use for blob tracking with Eyes++. Its limitation is a fixed lens that gives a tracking area of approx. 6 x 4 m from a 6 m grid rig mount.
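With a thermal camera in a white-hot palette, performers show up as bright regions against a cooler background, so a single brightness threshold followed by connected-region labelling is often enough to produce blobs. The sketch below illustrates that general technique in plain Python on 8-bit grayscale frames (an assumption); it is not how Eyes++ is implemented, and `find_blobs`, `threshold` and `min_area` are hypothetical names.

```python
from collections import deque


def find_blobs(frame, threshold, min_area=1):
    """Label 4-connected regions brighter than `threshold` and return their
    centroids and areas as (x, y, area) tuples, largest first."""
    h, w = len(frame), len(frame[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y in range(h):
        for x in range(w):
            if seen[y][x] or frame[y][x] <= threshold:
                continue
            # Flood-fill this region with a breadth-first search.
            queue = deque([(x, y)])
            seen[y][x] = True
            sum_x = sum_y = area = 0
            while queue:
                cx, cy = queue.popleft()
                sum_x += cx
                sum_y += cy
                area += 1
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < w and 0 <= ny < h
                            and not seen[ny][nx] and frame[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            # Ignore specks smaller than min_area (e.g. sensor noise).
            if area >= min_area:
                blobs.append((sum_x / area, sum_y / area, area))
    return sorted(blobs, key=lambda b: b[2], reverse=True)
```

Raising `min_area` plays a similar role to a minimum object size: small warm spots such as lamps or hands become less likely to register as extra performers.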

I found that Eyes++ requires fine, nuanced calibration, and that it worked better to have all of the blobs assigned, so that blob persistence and blob instances could begin to stabilise. There is probably some further investigation to do.
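Blob persistence means deciding, frame by frame, which new blob is the same person as an old one. A common simple approach is greedy nearest-neighbour matching, sketched below in plain Python; it is not necessarily what Eyes++ does internally, and `BlobTracker` and `max_jump` are hypothetical names. It also shows why crossing paths is hard: when two blobs come within `max_jump` of each other's previous positions, the closest-first rule can swap their IDs.

```python
import math
from itertools import count


class BlobTracker:
    """Keep stable IDs for blob centroids across frames using greedy
    nearest-neighbour matching within `max_jump` pixels."""

    def __init__(self, max_jump):
        self.max_jump = max_jump
        self.tracks = {}  # track id -> (x, y) from the previous frame
        self._ids = count(1)

    def update(self, centroids):
        # All candidate (distance, track_id, index) pairs within range, closest first.
        pairs = sorted(
            (math.dist(pos, c), tid, i)
            for tid, pos in self.tracks.items()
            for i, c in enumerate(centroids)
            if math.dist(pos, c) <= self.max_jump
        )
        used_tracks, used_points, new_tracks = set(), set(), {}
        for _, tid, i in pairs:
            if tid in used_tracks or i in used_points:
                continue
            used_tracks.add(tid)
            used_points.add(i)
            new_tracks[tid] = centroids[i]
        # Unmatched centroids start new tracks. Unmatched old tracks are dropped
        # immediately; a real tracker would usually keep them for a few frames
        # to ride out brief occlusions.
        for i, c in enumerate(centroids):
            if i not in used_points:
                new_tracks[next(self._ids)] = c
        self.tracks = new_tracks
        return dict(new_tracks)
```

Feeding it the centroids from each frame keeps IDs stable as long as performers move less than `max_jump` between frames and stay apart.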

In terms of using an RGB camera for tracking, another option is to consider tracking by color, although this brings its own nuances and compromises.
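Colour tracking usually works on hue rather than raw RGB, because hue changes less than brightness as a performer moves through light and shade. A minimal sketch in plain Python using the standard `colorsys` module; `hue_mask` and its thresholds are hypothetical, and coloured stage lighting will still shift hues, which is one of the compromises mentioned above.

```python
import colorsys


def hue_mask(pixels, hue_min, hue_max, min_sat=0.4, min_val=0.3):
    """Return rows of 0/255 marking RGB pixels (0-255 per channel) whose hue,
    in degrees, lies between hue_min and hue_max and that are saturated and
    bright enough. If hue_min > hue_max the range wraps through 0 degrees (reds)."""
    mask = []
    for row in pixels:
        out_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            hue = h * 360
            if hue_min <= hue_max:
                in_range = hue_min <= hue <= hue_max
            else:
                # Wrapped range, e.g. 340..20 degrees for red.
                in_range = hue >= hue_min or hue <= hue_max
            # Greys and near-black pixels have unreliable hue, so require saturation and value.
            out_row.append(255 if in_range and s >= min_sat and v >= min_val else 0)
        mask.append(out_row)
    return mask
```

The resulting mask could then be passed to a blob finder in the same way as a thresholded thermal image.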

Best Wishes

Russell

-

Hi Russell,

Many thanks for taking the time to detail this. Yes, of course; I'd completely forgotten about thermal imaging cameras. Annoyingly, I've just forked out for a Kurokesu C920 adaptor kit with my mind set on the IR option. A Flir sounds like the best solution, but I couldn't see any way of getting the signal into my Mac with the models on their site. Any suggestions as to which Flir model outputs USB or SPI (which I can plug into my Blackmagic)?

Nic

-

@nic said:

Any suggestions as to which Flir model

I use a FLIR VSR6, a model no longer in production. It has an analogue BNC output, so it is not difficult to use with an analogue-to-digital converter. Looking at what is available online, new thermal cameras are very expensive, and unfortunately the second-hand market doesn't offer much choice.

On another note, I have been investigating tracking options using IR LEDs like this one:

It is impressive how well the light source from this tiny IR LED can be tracked using the Eyes++ module in Isadora. It gave a clean tracking output in Eyes++ from the other side of the room (approx. 5 m). Below is a snapshot of the camera I used to test the IR LED, a Logitech 920 with an IR pass filter sheet taped over the lens. I haven't opted for the case mod yet, as you have, but the ability to use a lens with a wider field of view would be useful.

There is a lot of potential in this tracking method. However, IR LEDs are made for remote control devices, so their emission angle is generally narrow. From what I can find from electronics sellers, they emit over angles from 3° to around 50°, which is not enough for tracking performers. We would want to put an array of IR LEDs together to create a 360° emitting angle for optimum tracking with Eyes++. I have spotted some IR emitters with a 130° angle; they are not in the regular LED form factor, but they are cheap enough to order some to try out.
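The 360° arithmetic is simple: divide the circle by each emitter's usable beam angle and round up. A minimal sketch, assuming the emitters sit in a single horizontal ring and treating the quoted angle as the full beam width; datasheet viewing angles are often given at half intensity, so some overlap between neighbours is advisable. `leds_for_full_circle` and `overlap_deg` are hypothetical names.

```python
import math


def leds_for_full_circle(beam_angle_deg, overlap_deg=0.0):
    """Minimum number of emitters, evenly spaced in a ring, whose beams
    (each beam_angle_deg wide, overlapping neighbours by overlap_deg)
    cover a full 360 degrees."""
    effective = beam_angle_deg - overlap_deg
    if effective <= 0:
        raise ValueError("overlap must be smaller than the beam angle")
    return math.ceil(360 / effective)


if __name__ == "__main__":
    for angle in (3, 50, 130):
        print(angle, leds_for_full_circle(angle))
```

With the figures above, 50° LEDs need 8 per ring and 130° emitters need 3, while 3° LEDs would need 120, which is why the wide-angle parts look attractive for costume integration.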

Of course this is still prone to occlusion as a performer moves, but careful placement of the IR LED array in a costume or integrated into hair and makeup could be a cheap and efficient system. This replaces the need for IR lighting in the grid by placing the light source on the performer.

Best Wishes

Russell

-

I wonder if there would be a way to adapt this to work? Screen mirroring, perhaps?

https://www.flir.ca/products/f...

Cheers,

Hugh

-

Thanks Russell and Hugh,

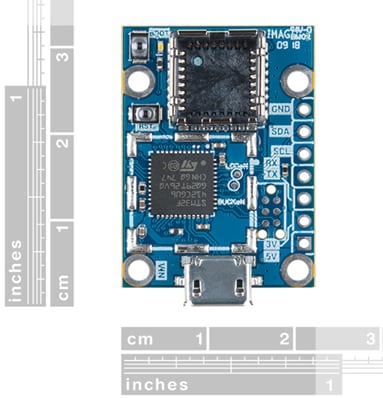

These are all very helpful. I think I'll try to string a few Kinects together and also try top-down motion tracking with the C920. I did notice the FLIR iPhone unit but was a little stuck on how to send its screen to my Mac; screen mirroring is a good idea. FLIR also produces this, which seems to give USB output, though it's not guaranteed Isadora will read it. I wonder if anyone has tried it:

and the development board 'seems' to suggest a plug-and-play USB interface:

However, the issue I have with all thermal imaging is that the lens view angle is fixed at around 50 degrees. In my situation, viewing a 10m x 10m dance floor from above, that means the camera would need to be an unrealistic 10m above the stage. The same is true, I think, for the iPhone FLIR. Multiple cameras could be stitched together, but thermal cameras aren't cheap, so this would add to the cost. Perhaps it is a good option though? I fear Isadora will struggle with 4 camera inputs and live processing output?
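The height problem follows from simple lens geometry: a camera pointing straight down with field of view θ covers a floor width of 2 · h · tan(θ/2) along that axis. The sketch below checks the figures in plain Python, assuming an ideal (rectilinear) lens, a camera aimed straight down, and that the 50° applies to the axis in question (horizontal and vertical angles usually differ); the function names are hypothetical and overlap for stitching is ignored.

```python
import math


def footprint_m(height_m, fov_deg):
    """Floor width seen along one lens axis by a camera pointing straight down."""
    return 2 * height_m * math.tan(math.radians(fov_deg) / 2)


def height_needed_m(width_m, fov_deg):
    """Mounting height at which a straight-down camera just covers width_m."""
    return (width_m / 2) / math.tan(math.radians(fov_deg) / 2)


def cameras_per_side(floor_m, height_m, fov_deg):
    """Cameras needed along one side of a square floor, ignoring overlap."""
    return math.ceil(floor_m / footprint_m(height_m, fov_deg))


if __name__ == "__main__":
    print(f"height for 10 m at 50 deg: {height_needed_m(10, 50):.2f} m")
    print(f"footprint from 6 m at 50 deg: {footprint_m(6, 50):.2f} m")
    print(f"cameras per side from 6 m: {cameras_per_side(10, 6, 50)}")
```

With a 50° lens, covering 10 m needs roughly 10.7 m of height. From a 6 m grid each camera sees about 5.6 m, so a 2 x 2 array of four cameras would cover the floor before allowing for overlap.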

If we go down the IR tracking road, there are also issues, because our production uses tungsten stage lights. On one hand this is good, as they will help produce IR light, but it also means any head-mounted LED might be washed out.

I'll report back after our research next week!

-

@nic said:

I fear Isadora will struggle with 4 camera input and live processing output?

It's not Isadora that would struggle; how much your computer can handle is the determining factor. That being said, there are devices that stitch together multiple camera feeds into a single feed (mostly purposed for security camera setups), which would allow you to combine your four video feeds into a single video feed.

-

Maybe one could try these clip-on mobile phone lenses for a wider angle. Some DIY would be necessary, though.