[LOGGED] Keying Head & Shoulders like in Zoom & Skype

-

Hi, curious if this exists in Isadora, or if not, it would be great for live virtual theater. The ability to recognize & key out the background of talking head feeds imported live from Skype - like the virtual backgrounds in Zoom & Skype, but to replace the background with alpha. Thanks.

-

If you have your performers in front of a green screen, you can use the Chromakey actor; otherwise, no, this feature does not yet exist in Isadora.

-

@liannemua said:

Hi, curious if this exists in Isadora, or if not, it would be great for live virtual theater. The ability to recognize & key out the background of talking head feeds imported live from Skype - like the virtual backgrounds in Zoom & Skype, but to replace the background with alpha. Thanks.

Give this a try: https://www.chromacam.me/

-

While we're on the topic, does anyone know of any open-source, cross-platform tools for this? Having open-source code as a starting point would both decrease the difficulty and increase the likelihood of being able to incorporate this as a native Isadora feature.

Also, I've logged this as a feature request.

-

@Woland said:

Also, I've logged this as a feature request.

I couldn't agree more: I find it a "must-have" in Corona times. I am just checking out Skulpture's tip: https://www.chromacam.me/. 30 bucks for the full version and a very slow download are not very promising ;o(

-

Just tried out the Chromacam - got it working in just a few moments. May be worth the cost if needed.

late 2012 MacPro - Mojave

-

@liannemua If you get your remote performers to set their virtual backgrounds to a pure green image, then the keying in Isadora works perfectly. Even if their local laptops aren't up to it and they have to tick the 'I have a greenscreen' option in Zoom, you can get really good results that way. It's how we made Airlock, and we are using it as a technique on other projects.

To be honest, I actually prefer the results you get with the 'I have a greenscreen' option to the 'head and shoulders recognition' option. Zoom seems to do a pretty good job of keying out imperfect (or imperfectly lit) greenscreens (or green sheets, or blue walls, or whatever your performers can get in front of). If you then set their virtual background to an all-green image, it's really easy to get the settings just right in Isadora. It also means you don't get hands, arms, hats, props, or whole performers occasionally disappearing.
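To see why an all-green virtual background makes the key so easy to dial in, here is a minimal color-difference keyer in Python. It is an illustrative sketch only, not the algorithm behind Isadora's Chromakey actor; the function names and the `low`/`high` thresholds are assumptions. Each pixel is keyed out according to how far its green channel exceeds the larger of its red and blue channels, with a linear ramp between the two thresholds for soft edges.

```python
def green_key_alpha(r, g, b, low=0.15, high=0.35):
    """Return alpha (1.0 = keep, 0.0 = keyed out) for an RGB pixel with
    channels in [0, 1]. Hypothetical color-difference key, not Isadora's."""
    # How much green exceeds the stronger of the other two channels.
    greenness = g - max(r, b)
    if greenness <= low:
        return 1.0  # not green enough: fully opaque foreground
    if greenness >= high:
        return 0.0  # clearly green background: fully transparent
    # Linear ramp between the two thresholds gives a soft edge.
    return 1.0 - (greenness - low) / (high - low)


def key_frame(pixels, low=0.15, high=0.35):
    """Convert a list of (r, g, b) pixels into (r, g, b, alpha) pixels."""
    return [(r, g, b, green_key_alpha(r, g, b, low, high)) for r, g, b in pixels]
```

With a flat, pure green background every background pixel lands far above `high`, so the thresholds can be set generously without eating into skin tones or clothing. A real, unevenly lit green sheet spreads background pixels across the ramp, which is where keys get fiddly. For a blue wall, the same idea applies with `b - max(r, g)`.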

-

@woland said:

While we're on the topic, does anyone know of any open-source, cross-platform tools for this?

I did some poking around. The algorithms to remove an arbitrary background all require training artificial intelligence systems using datasets of people in front of web cams to work. You can get a sense of the complexity by looking at this Background Matting GitHub project or this article where this person implements the background removal using Python and Tensorflow (AI) tools.

So, what I'm trying to say here is that this is a major project that would require my entire attention. If the program mentioned above works for $30, I'd say that's a reasonable cost given how much work it would be to implement such a feature. I wish we had unlimited programming resources to take this on, but it's not realistic for us to do so at the moment.

Best Wishes,

Mark

P.S. One further note:

This article from our friends at TouchDesigner describes how you can use the Photo app in iOS to remove the background and send the image to a desktop computer. (The example is for Windows, but there is a "For Mac Users" section. They mention using CamTwist, but you should use our free Syphon Virtual Webcam to get Isadora's Syphon output into Zoom.)

However, this example uses NDI to capture the iPhone screen, so there is going to be a substantial delay.

Best Wishes,

Mark

-

@mark said:

I did some poking around. The algorithms to remove an arbitrary background all require training artificial intelligence systems using datasets of people in front of web cams to work. You can get a sense of the complexity by looking at this Background Matting GitHub project (https://github.com/senguptaumd...) or this article (https://elder.dev/posts/open-s...) where this person implements the background removal using Python and Tensorflow (AI) tools.

In furtherance of this subject, I did come across an interesting project using TensorFlow BodyPix, which is a popular framework for this type of work but not super helpful for us Izzy users. If I get some time, I am going to bolt Spout/Syphon onto it, try to make it cross-platform, and maybe speed it up if I can. I'm imagining you'd output a stage to the shared memory buffer, select that stage in a console app, and it would then post an alpha mask to another shared buffer.
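The stage-in, mask-out architecture imagined above can be sketched as a single processing step. This is a hypothetical sketch: `SharedBufferBus` is an in-memory stand-in for a Spout/Syphon sender/receiver pair (it is not either library's API), the sender names are made up, and `segment` stands for whatever model (BodyPix, DeepLab, or similar) produces the mask. The placeholder `brightness_segmenter` exists only so the sketch runs.

```python
from typing import Callable, Dict, List, Optional, Tuple

Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]  # rows of RGB pixels, 0-255
Mask = List[List[int]]     # rows of alpha values, 0-255


class SharedBufferBus:
    """Hypothetical in-memory stand-in for Spout/Syphon: each named sender
    holds only its most recent image, which any receiver can read."""

    def __init__(self) -> None:
        self._latest: Dict[str, object] = {}

    def publish(self, sender_name: str, image: object) -> None:
        # Overwrite rather than queue: a live pipeline only wants the newest frame.
        self._latest[sender_name] = image

    def receive(self, sender_name: str) -> Optional[object]:
        return self._latest.get(sender_name)

    def senders(self) -> List[str]:
        # What the console app would list so the user can pick a stage.
        return sorted(self._latest)


def process_once(bus: SharedBufferBus, stage_sender: str, mask_sender: str,
                 segment: Callable[[Frame], Mask]) -> bool:
    """Read the latest stage frame, segment it, and publish the mask.
    Returns False if the stage sender has not published anything yet."""
    frame = bus.receive(stage_sender)
    if frame is None:
        return False
    bus.publish(mask_sender, segment(frame))
    return True


def brightness_segmenter(frame: Frame) -> Mask:
    # Placeholder for a real model: treats bright pixels as "person".
    return [[255 if sum(px) > 384 else 0 for px in row] for row in frame]
```

A real console app would call `process_once` in a loop, once per incoming frame, while Isadora receives the mask sender and feeds it to the Alpha Mask actor. Keeping only the latest frame per sender avoids building up latency when the model runs slower than the stage.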

Would love to discuss this further as I do see it being helpful for online work. I worked a fair amount on soft body projection mapping / masking in pre-covid times, using pixel energy functions as heuristics to accelerate these detection algorithms. I find that for virtual shows, I am pulling out / modding more GLSL shaders than I normally do and thinking critically about compositing, and background segmentation is often a key part of that :)

Somehow, it all comes together.

-

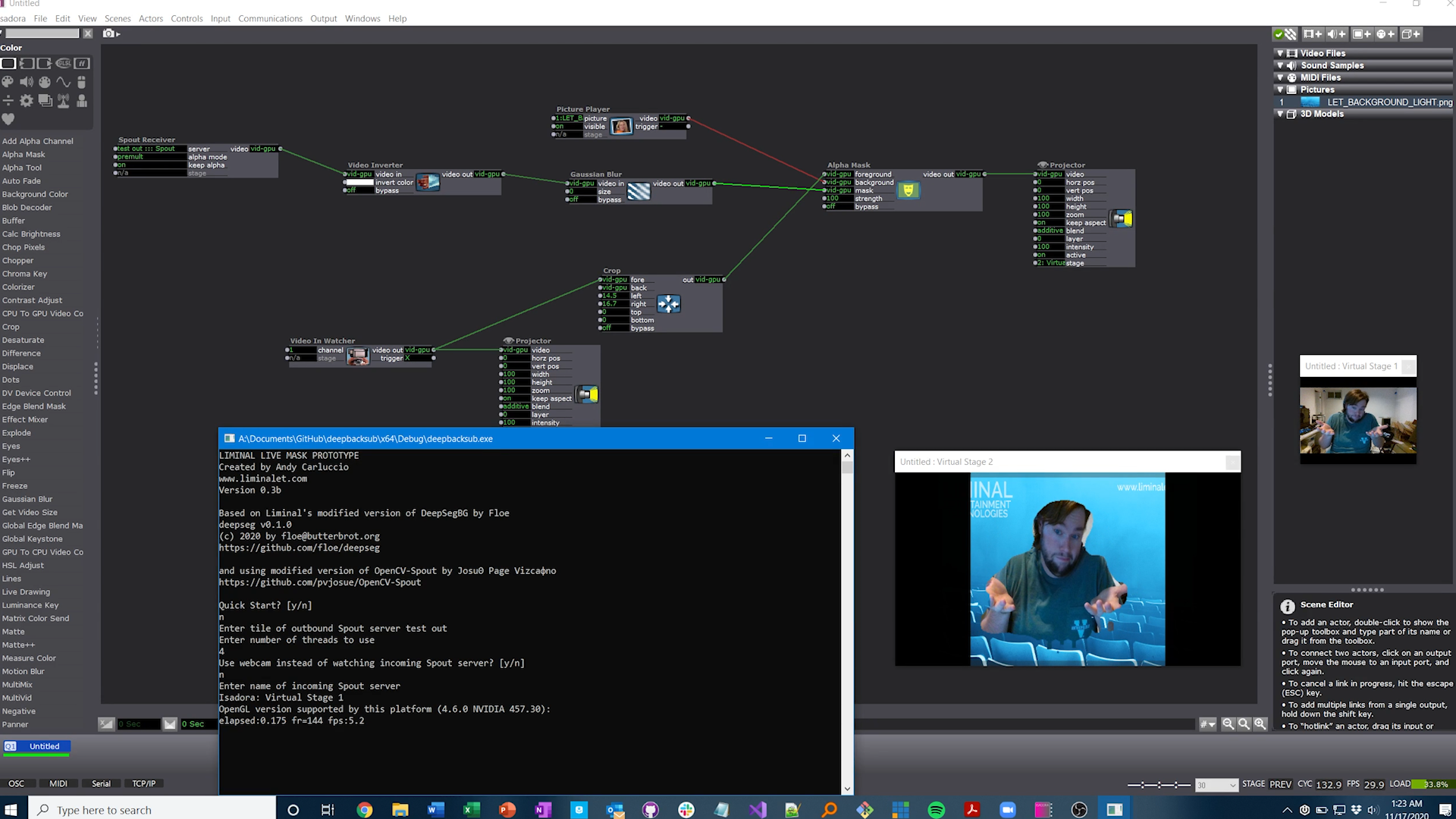

So the following is purely for fun, in response to @mark's post imagining how this would be done. I did follow up on this over the weekend and got something "working".

I heavily modified the project I mentioned earlier: I manually rolled it over to the TensorFlow Lite c_api (a real pain!), ported it to Windows, and fed it the deeplabv3_257_mv_gpu.tflite model. To make it useful to Isadora, I dusted off and updated an OpenCV-to-Spout pipeline in C++ that I used a few years ago for some of my live projection-masking programs. Now my prototype can receive an Isadora stage, run it through the model, and output the resulting mask to Spout again for Isadora to use with the Alpha Mask actor.
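The step that turns the model's output into something the Alpha Mask actor can use can be sketched as a per-pixel argmax followed by an upscale. This assumes (it is not stated in the post) that the model returns a 257x257 grid of 21 class scores in PASCAL VOC label order, where index 15 is "person"; the function names are hypothetical, and the prototype itself does this in C++ on the raw tensor buffer rather than on Python lists.

```python
from typing import List, Sequence

# Assumption: class order follows the 21-label PASCAL VOC list,
# where 0 is "background" and 15 is "person".
PERSON_CLASS = 15

Mask = List[List[int]]


def scores_to_alpha_mask(scores: Sequence[Sequence[Sequence[float]]],
                         person_class: int = PERSON_CLASS) -> Mask:
    """scores[y][x] holds one score per class for pixel (x, y).
    Returns 255 where the highest-scoring class is person_class, else 0."""
    mask = []
    for row in scores:
        mask_row = []
        for class_scores in row:
            # Per-pixel argmax over the class dimension.
            best = max(range(len(class_scores)), key=class_scores.__getitem__)
            mask_row.append(255 if best == person_class else 0)
        mask.append(mask_row)
    return mask


def resize_nearest(mask: Mask, out_w: int, out_h: int) -> Mask:
    """Nearest-neighbour upscale from the model's grid (e.g. 257x257)
    to the stage resolution before handing the mask to Isadora."""
    in_h, in_w = len(mask), len(mask[0])
    return [[mask[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]
```

Nearest-neighbour upscaling gives hard, blocky edges; slightly blurring the mask (or resizing bilinearly) before the Alpha Mask actor would soften them. Both are suggestions, not what the prototype is known to do.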

My results:

Now, obviously, it would be insane to attempt to use this for production in its current form. I'm getting about 5 fps (granted, with no GPU acceleration and running in debug mode). I could improve things slightly by bouncing the original Isadora stage back on its own Spout server, but this is just a proof of concept. In this state, it should be relatively easy to port to Mac/Syphon and add GPU acceleration on compatible systems for a higher frame rate and/or multiple instances for many performers.

Again, just a fun weekend project but I found it very educational.