[ANSWERED] Isadora, body mapping and Kinect 2 camera

-

Basically you need two things:

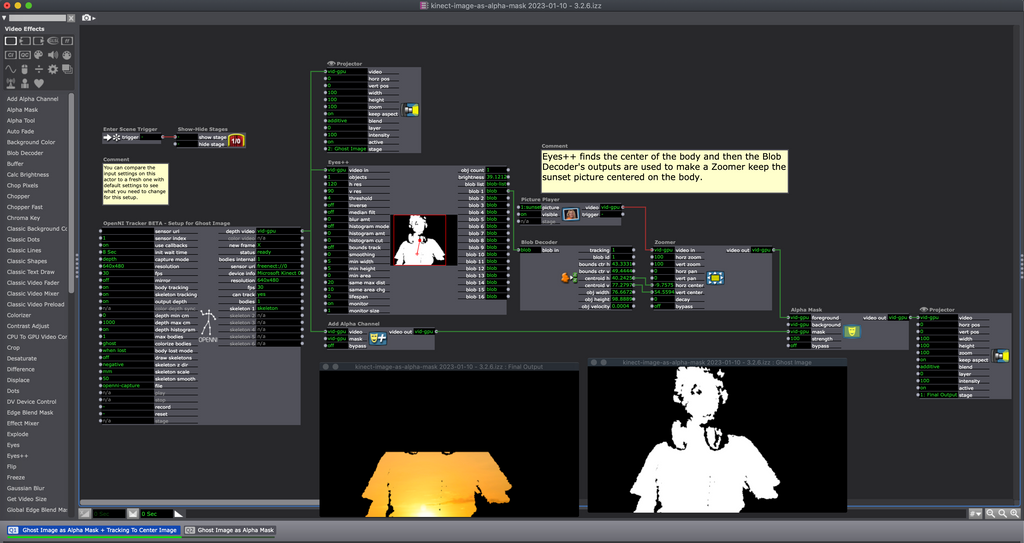

- An OpenNI actor in Isadora with a chain of actors after it that are some variation of this: kinect-image-as-alpha-mask-2023-01-09-3.2.6.izz

- You need to set the Kinect on top of the projector/beamer you are using because you need to minimize the difference in physical space between the location of the projector/beamer lens and the Kinect lens. The greater the difference between the lens locations, the more warping/correction you'll have to do to make the projector throw land accurately on what the camera lens is seeing.
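To get a feel for why the lens offset matters, here is a rough Python sketch. It assumes a simplified model: the camera and projector look in the same direction, their lenses are `offset_m` apart, and the image was lined up for a performer standing at `calibrated_depth_m`. The function name and the example numbers are illustrative only; nothing here comes from Isadora or the Kinect SDK.

```python
def misalignment_m(offset_m: float, calibrated_depth_m: float, depth_m: float) -> float:
    """Approximate sideways error (metres) on the body at depth_m.

    Assumed model: parallel camera and projector, lenses offset_m apart,
    projection aligned with the camera view at calibrated_depth_m only.
    """
    return offset_m * abs(1.0 - depth_m / calibrated_depth_m)


if __name__ == "__main__":
    # Hypothetical numbers: rig aligned at 2.0 m, performer steps back to 2.5 m.
    for offset in (0.05, 0.30):
        err = misalignment_m(offset, calibrated_depth_m=2.0, depth_m=2.5)
        print(f"lens offset {offset:.2f} m -> roughly {err * 100:.1f} cm of drift")
```

In this model the error grows in proportion to the lens offset, which is why stacking the Kinect directly on the projector helps.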

Also keep in mind that the faster the performer moves, the more likely you are to have the body mapping system lag behind. Slow movements are good. Light colored costumes and a dark background will also let you hide the bleed better.
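A back-of-the-envelope way to think about the lag, sketched in Python: the projected image trails the body by roughly the performer's speed multiplied by the total system delay. The latency figure below is a made-up placeholder, not a measured value for the Kinect or Isadora, so measure your own setup before relying on it.

```python
def trailing_distance_m(speed_m_per_s: float, latency_s: float) -> float:
    """Distance the body has moved by the time the delayed image is projected."""
    return speed_m_per_s * latency_s


if __name__ == "__main__":
    assumed_latency_s = 0.1  # hypothetical end-to-end delay, for illustration only
    for label, speed in (("slow reach", 0.3), ("fast arm swing", 3.0)):
        bleed_cm = trailing_distance_m(speed, assumed_latency_s) * 100
        print(f"{label}: image trails by about {bleed_cm:.0f} cm")
```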

Best wishes,

Woland

-

I spent a good deal of time working with this kind of setup last year. Beyond the tech needs (nicely summarized by @Woland), it's worth actively exploring the possibilities and constraints it affords with the choreographer before they get set on the kind of movement they want to show, or even on a concept for that movement. The tech won't be a purely transparent medium; it will be something of a dance partner. That is, the dancer will have to negotiate spatial constrictions (such as staying within eight feet of the camera and not doing a great deal of floor work) and the choreographer will have to think about how to work with the delay. How can those constraints not be constraints, but opportunities to try something new? Good luck!

-

Hi both!!

These are so precious and useful for me to know. I will definitely pass these ideas to the choreographer.

I tried to open the file you kindly shared. It says the OpenNI actors are missing. Where can I find them? I tried the file from the tutorial and it's the same.

I'm also thinking about another problem: how should I build the content? Can I create something within Isadora, or can I build the patch in another program like Processing or TouchDesigner and send it into Isadora? The idea is to keep the projection on the body, so can I use an alpha mask and insert the image into the body? I would love to hear what you have done with the program and get inspired! Thanks so so so much!!!

Cheng

-

-

@woland said:

@ckeng said:

Where can I find them?

Within Isadora, you can insert videos using masks (black and white images/videos) or by layering with alpha channels.

Putting an image into an area is the easy part, ensuring the area lands on the body/canvas is the more complicated part (as mentioned above).
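As a conceptual illustration of what masking does (not how Isadora implements it internally), here is a minimal Python sketch. It assumes a tiny greyscale "frame" stored as nested lists and a mask where 1 means "body" and 0 means "background"; `apply_mask` is a hypothetical helper name.

```python
def apply_mask(content, mask):
    """Multiply each content pixel by the matching mask value (0 hides, 1 shows)."""
    return [
        [pixel * alpha for pixel, alpha in zip(content_row, mask_row)]
        for content_row, mask_row in zip(content, mask)
    ]


if __name__ == "__main__":
    # Stand-in for a video frame (brightness values) and a body-shaped mask.
    content = [
        [200, 200, 200, 200],
        [180, 180, 180, 180],
        [160, 160, 160, 160],
    ]
    mask = [
        [0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 0, 1, 0],
    ]
    for row in apply_mask(content, mask):
        print(row)
```

Wherever the mask is 0 the output is black, so the projector throws no light there; the content only survives inside the body shape.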

The content you create and how you create it will depend on the effect you are trying to achieve. If, for example, you wanted to create an army of marching ants that walk around the perimeter of the body form, that would require some additional programming and might be suited to Processing. On the other hand, if you wanted to place a time-lapse sunset on the body, a video file layered inside Isadora could easily provide the desired effect.

-

I think additional programming is too much for the project, so we are looking at using some stock video and images as resources. I don't quite understand the last bit. If I want to put the sunset video on the body, how can I achieve that? Will the image follow the movement of the body?

-

@dusx said:

On the other hand, if you wanted to place a time-lapse sunset on the body, a video file layered inside Isadora could easily provide the desired effect.

@ckeng This file shows basically what Dusx was saying here. You use either the Skeleton Decoder or an Eyes++ actor to find the center of the body and then use another actor like a Zoomer to keep the image of the sunset centered on the body. Here's a (very choppy) gif of this method in action: https://recordit.co/0fqcrD8fsE
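To illustrate the idea of "find the center of the body, then move the image there", here is a small Python sketch. It is only a conceptual stand-in: inside Isadora the Skeleton Decoder or Eyes++ actor provides the position, and this code does not describe how those actors work. The helper names `body_center` and `image_offset` are hypothetical.

```python
def body_center(mask):
    """Return the (x, y) centroid of all non-zero mask pixels, or None if empty."""
    xs, ys = [], []
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)


def image_offset(mask, frame_width, frame_height):
    """Shift (dx, dy) in pixels that moves an image from the frame centre onto the body."""
    center = body_center(mask)
    if center is None:
        return 0.0, 0.0  # nobody in view: leave the image where it is
    cx, cy = center
    return cx - (frame_width - 1) / 2, cy - (frame_height - 1) / 2


if __name__ == "__main__":
    # Body occupies the right-hand side of a 5x3 frame.
    mask = [
        [0, 0, 0, 0, 0],
        [0, 0, 0, 1, 1],
        [0, 0, 0, 1, 1],
    ]
    print(image_offset(mask, frame_width=5, frame_height=3))
```

Recomputing the offset every frame is what makes the image follow the performer.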

-

I tried to install the plugin and learn from the tutorial, but it kept saying that it's not installed. I followed all the instructions for Windows and Kinect 2. I have installed the SDK and read the text file, but it's still not working.

I got these codes when I opened the tutorial file:

OpenNI Tracker BETA (ID = '5F4F4E42')

Skeleton Decoder (ID = '5F736B6C')

Skeleton Visualizer (ID = '5F736B76')

-

You have not installed the OpenNI Plugins then.

- Go here: https://troikatronix.com/add-o...

- Get the download for Windows

- Unzip the file

- Open the folder "OpenNI Tracker Windows"

- Double left-click the file "__Double Click To Install Plugins__.bat"

- If you're still having trouble open the file "__READ ME__ Installing the Tracker Plugins.rtf" and read the steps in the section "Installing OpenNI Tracker and Associated Plugins"

-

I tried a few times and the plugin folders are in the Isadora actor plugin folder, but I still can't find an OpenNI actor. Not sure if I did anything wrong. I have tried this with Kinect v1 on another device and it worked well.

-

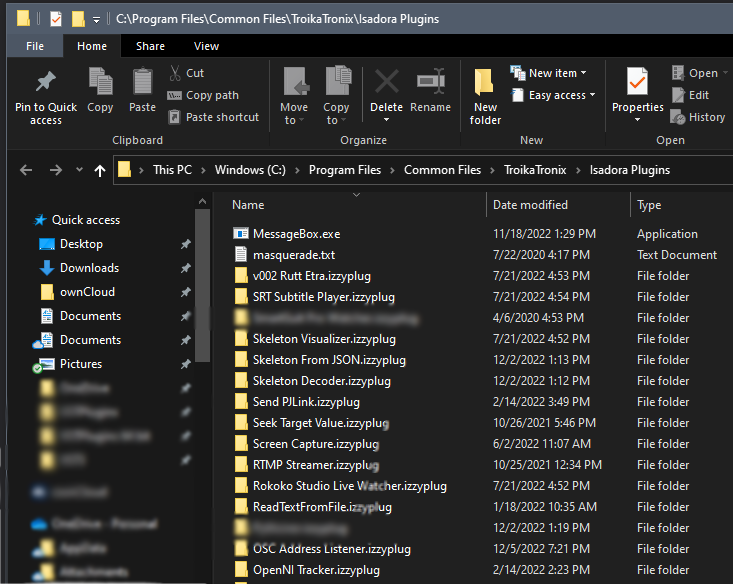

Please ensure your plugins look like the plugins in my plugins folder.

The .izzyplug folders need to be at: C:\Program Files\Common Files\TroikaTronix\Isadora Plugins

I have had a few users place these within subfolders and this does not work.
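If you want to double-check the folder layout, a small Python sketch like the following can list which `.izzyplug` entries sit directly in the plugins folder and which are buried in subfolders. The path is the one given above; the script itself and the `find_plugins` helper are my own illustration, not a TroikaTronix tool.

```python
from pathlib import Path

# Path taken from the post above; adjust it if your install differs.
PLUGIN_DIR = Path(r"C:\Program Files\Common Files\TroikaTronix\Isadora Plugins")


def find_plugins(plugin_dir):
    """Split .izzyplug entries into those directly in plugin_dir and those nested deeper."""
    plugin_dir = Path(plugin_dir)
    top_level, nested = [], []
    for entry in plugin_dir.rglob("*.izzyplug"):
        if entry.parent == plugin_dir:
            top_level.append(entry)
        else:
            nested.append(entry)
    return sorted(top_level), sorted(nested)


if __name__ == "__main__":
    if not PLUGIN_DIR.is_dir():
        print(f"Folder not found: {PLUGIN_DIR}")
    else:
        ok, misplaced = find_plugins(PLUGIN_DIR)
        print("Directly in the plugins folder:")
        for path in ok:
            print("  ", path.name)
        print("Inside subfolders (move these up a level):")
        for path in misplaced:
            print("  ", path.relative_to(PLUGIN_DIR))
```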

-

Thank you, it finally works!!! Now I have to figure out how to play with the sensor.

-

Hi,

I tried to copy your network but it turned out a bit different. The photo is still rectangular, which means the body is not masking it. Also, I can still see the background behind the body (my room). Is there a way to filter it out?

I suppose this is only for rendering in the projector and not for body mapping? Do I have to do calibration in Isadora?

-

How is your NI watcher set up? Maybe you have it set to 'show background'? This would output a rectangle of video. If you set it to 'hide background' or 'ghost', then you only get pixels output from the human shapes.

-