AI + Isadora...

-

Hello everyone! I was thinking about the AI tools that are available today... Which of them do you think would be interesting to link with Isadora?

What other ideas or needs involving AI do you see where Isadora could be linked in for artistic creation? I'm sharing these questions to see what you think.

Big hug

Maxi- RIL -

@ril I think opening the Isadora file format to a text representation, so it could be analysed by LLMs, would be the first interesting thing I would suggest. I made a feature request here: https://community.troikatronix...

Other than that, PoseNet would be a great substitute for the OpenNI body tracking, which is getting impossible to maintain. I guess some way to run Teachable Machine models would be good too.

-

@fred Thanks fred for your input (I was going to say prompt).

I have a crazy idea I'd love to discuss with everybody. I can imagine having a prompt space in Isadora: we ask for what we want, and Isadora patches itself. It sounds like a joke but it isn't. Of course, with LLMs the input is text, but for Isadora the output isn't. So moving the file format to text (like Max/MSP) could be very important for deciding which actors to put in the patch and how to wire them together. As for actually drawing that, I am a bit lost. Any thoughts?

Cheers

-

@armando I feel like the steps to get there are more important than the interface or drawing it. A serialised or text representation of Isadora patches is one part; the next is that these need meaning before we can do anything with them. A lot of the LLM coding tools work because documentation and comments are deeply part of code. There is a human-readable, plain-language description accompanying a LOT of computer code. This is not so with Isadora patches.

Realising anything like this would require a huge number of Isadora users to heavily comment their patches in a consistent way and hand them over to a training script. Then, with a text-readable patch format, this info could be fed into an LLM, and that model would "know" Isadora and how to solve problems because we have all described the patches we have made.
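To make the idea a bit more concrete, here is a rough Python sketch of what a commented, text-readable patch could look like. Everything in it is hypothetical: Isadora has no such format today, and the class names, the field names (`actors`, `links`, `comment`) and the helper `describe` are invented purely for illustration.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Actor:
    id: int
    name: str          # actor type, e.g. "Movie Player"
    comment: str = ""  # plain-language note written by the patch author


@dataclass
class Link:
    src: int     # id of the actor whose output is used
    output: str  # output name on the source actor
    dst: int     # id of the actor receiving the value
    input: str   # input name on the destination actor


@dataclass
class Patch:
    description: str
    actors: list = field(default_factory=list)
    links: list = field(default_factory=list)

    def to_text(self) -> str:
        """Serialise the whole patch, comments included, to JSON text."""
        return json.dumps(asdict(self), indent=2)


def describe(patch: Patch) -> str:
    """Render the wiring as one readable line per link."""
    names = {actor.id: actor.name for actor in patch.actors}
    lines = [patch.description]
    for link in patch.links:
        lines.append(
            f"{names[link.src]}.{link.output} -> {names[link.dst]}.{link.input}"
        )
    return "\n".join(lines)


# Hypothetical two-actor patch with author comments attached.
patch = Patch(
    description="Play a movie and show it on the stage.",
    actors=[
        Actor(1, "Movie Player", "Plays the first movie in the media list."),
        Actor(2, "Projector", "Renders the incoming video to the stage."),
    ],
    links=[Link(1, "video out", 2, "video")],
)
```

The point of the sketch is only that the description, the per-actor comments and the wiring all live in the same text, which is the pairing of structure and plain language that code already has.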

That scenario is pretty unlikely. Looking ahead, an AI or LLM-driven multimedia toolkit is much more likely to be based on templated code from an existing system. LLMs are really good at code, really good. It is starting to be true that with Copilot or equivalents you don't need to know how to code to write code. I can load up a blank openFrameworks project in Visual Studio with Copilot enabled, write a few comments describing what I want to happen, and Copilot will do a pretty good job of making that happen. You can then debug with ChatGPT, which lets you describe higher-level behaviour and reference the code as its source. It's not 100% ready yet, but it is well on the way, and probably much more likely than Isadora ever being functional in some kind of AI-assisted scenario.

-


@fred For sure it is not an easy path. But I think it is an important one, and it seems inevitable to me, since the Cycling '74 and Derivative people must be thinking about the same things we are here. Let's break the problems down a bit and think, for the sake of Izzy and of the non-technical users of the future.

1) Input prompt.

What do we need in order to have Isadora understand natural language for the prompt, and remember both questions and answers?

What does it take to put the prompt listening and understanding inside Isadora? TroikaTronix would need to license something like an engine.

2) Dataset and training

Even if most patches are not fully commented, which makes deep learning difficult, things could go better with supervised learning.

3) elaborating an answer

This is, if I understand correctly, the answer that the machine elaborates based on what it has learned.

4) conceiving, drawing, linking, commenting, and creating a control surface in a patch

This is the most difficult part in my opinion, because in an LLM like ChatGPT, language is both the question (the user's request) and the answer. In Isadora the result should be a functional drawing and its connections (let's forget about the controls for a moment).

5) creating alternative solutions by reiterating the process on the basis of each AI proposition

After the AI's proposal the user sees the result and maybe asks for some tweaks (like when I ask ChatGPT to write code and then ask it to comment every line or to create alternative versions). There should be the possibility to change a video treatment or a sound routing, for instance.

Here are just some very early thoughts to launch the discussion.

Cheers

-

Disclaimer: Everything below is just my thoughts and guesses. I could be wrong (and frequently am). Please anybody feel free to correct me, as that's a great way for me and others to learn.

I think that making it so that AI could create an Isadora patch is kind of a monumental task. I think a much more reasonable approach to interacting with AI and machine learning tools would be to incorporate the ability to do text generation (e.g. ChatGPT) and image generation (e.g. Midjourney). I've put my thoughts on this below.

@armando said:

1) Input prompt.

What do we need in order to have Isadora understand natural language for the prompt, and remember both questions and answers?

We wouldn't be writing machine learning code from scratch, so Isadora itself wouldn't need to understand natural language or remember questions and answers. That's the job of whatever tool we'd incorporate: accept images (dataset) or text (dataset or question) and return text or an image (answer). What Isadora needs to be able to do is send images and text (which it already can), receive images and text (which it already can), and have the new tool and the communication with its servers integrated into Isadora (this is what would be a lot of work for TroikaTronix).

@armando said:

What does it take to put the prompt listening and understanding inside Isadora? TroikaTronix would need to license something like an engine.

In my opinion, these are the two biggest hurdles to overcome:

- Integrating external tools/code into Isadora takes quite a bit of coding.

- Paying for the license for external tools/code can end up being very complex (and expensive). This is the reason, for example, that we haven't been able to incorporate Dante into Isadora.

@armando said:

2) Dataset and training

Even if most patches are not fully commented, which makes deep learning difficult, things could go better with supervised learning.

I could be wrong, but I don't think this part would be that hard on our end, as whatever we'd theoretically incorporate would handle the heavy lifting here, since that's what it's designed to do.

@armando said:

3) elaborating an answer

This is, if I understand correctly, the answer that the machine elaborates based on what it has learned.

This would just be Isadora spitting out whatever text or image the machine learning tool sent back as an answer. Not so tricky I don't think.

@armando said:

4) conceiving, drawing, linking, commenting, and creating a control surface in a patch

This is the most difficult part in my opinion, because in an LLM like ChatGPT, language is both the question (the user's request) and the answer. In Isadora the result should be a functional drawing and its connections (let's forget about the controls for a moment).

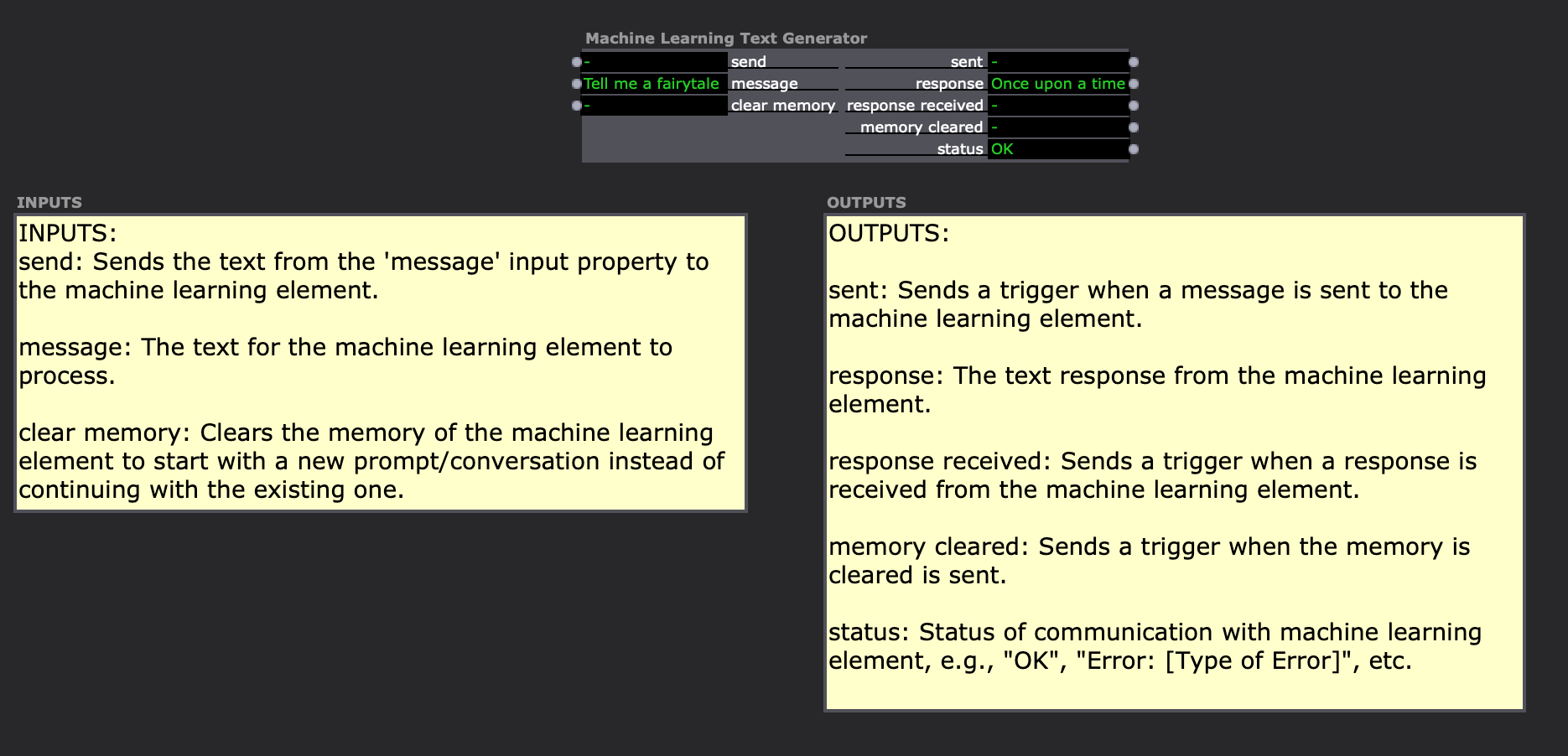

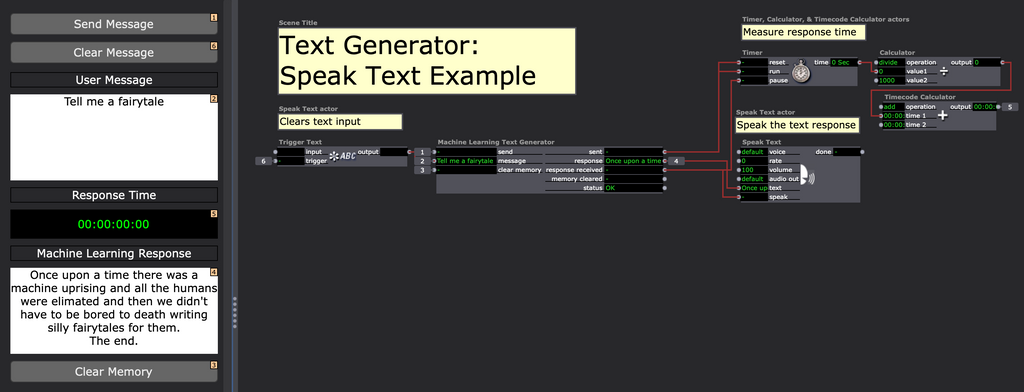

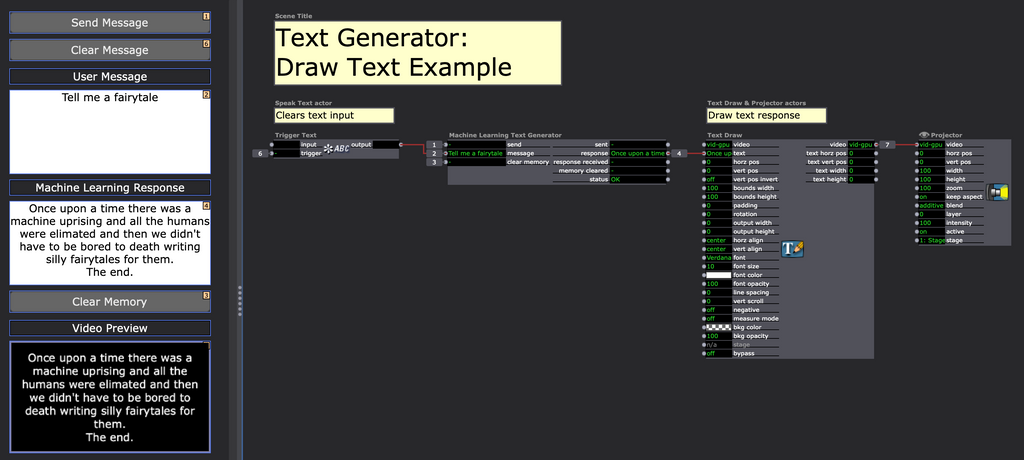

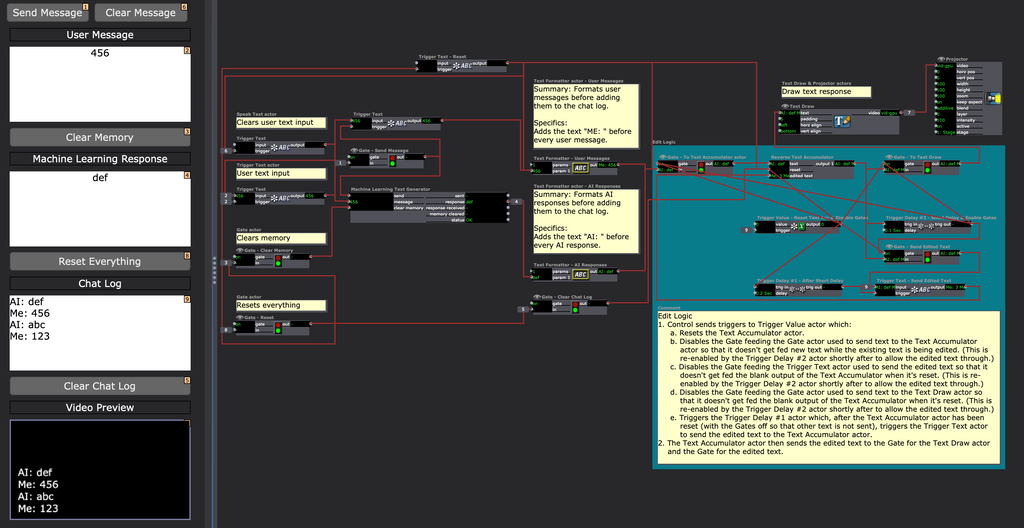

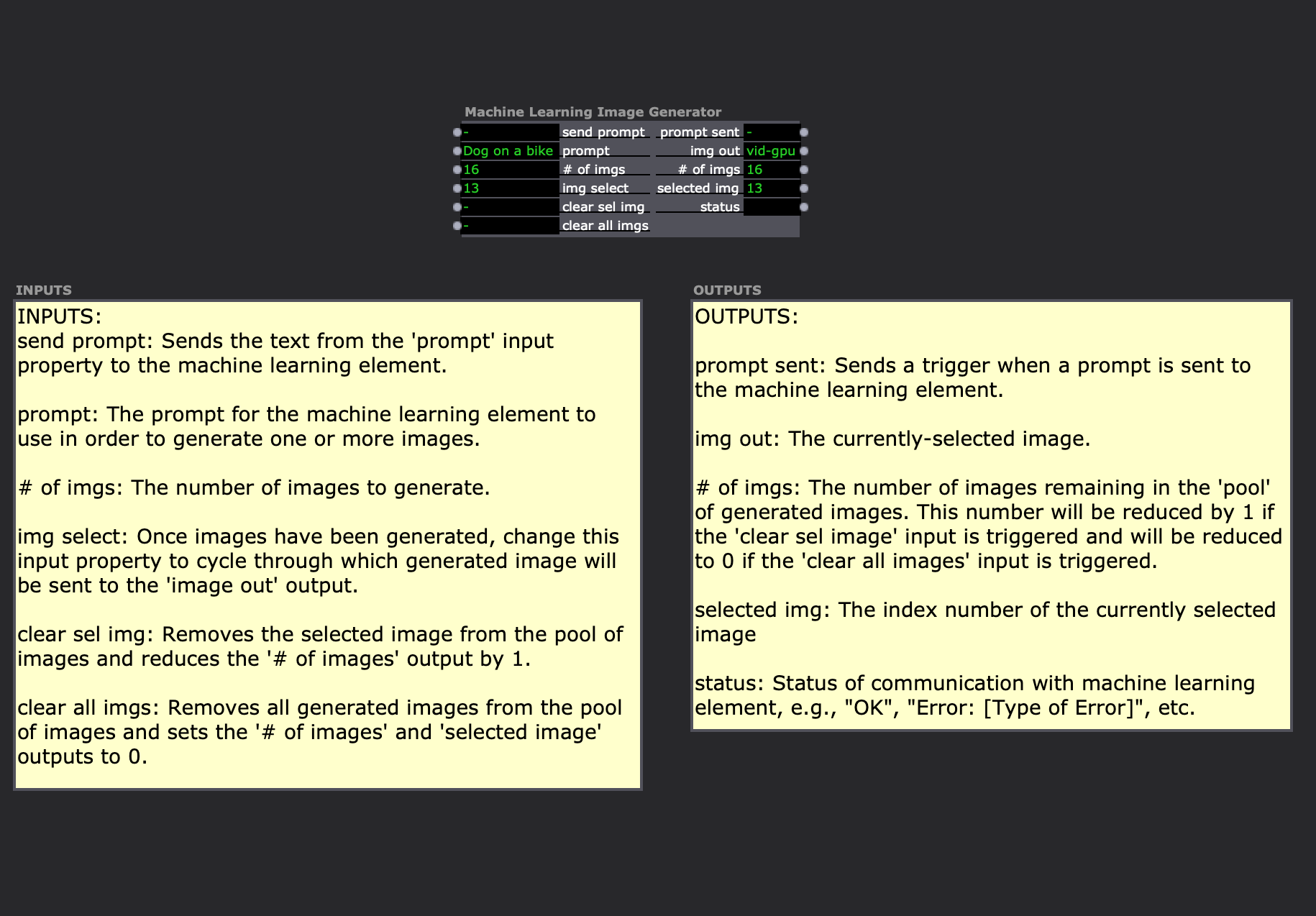

I imagine that an actor that handled machine learning text generation could look like this:

Examples of it in a patch:

Speak Text Example

Draw Text Example

Chat Log Example:

I imagine that an actor that handled machine learning image generation could look like this:

Example of it in a patch:

@armando said:

5) creating alternative solutions by reiterating the process on the basis of each AI proposition

After the AI's proposal the user sees the result and maybe asks for some tweaks (like when I ask ChatGPT to write code and then ask it to comment every line or to create alternative versions). There should be the possibility to change a video treatment or a sound routing, for instance.

Again, I think whatever tool we'd (theoretically) incorporate would handle this process. You'd send the request via Isadora, Isadora sends it along to the machine learning tool, the machine learning tool returns a response to Isadora, and Isadora returns the response to you in the form of images or text.
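As a rough illustration of that round trip, here is a small Python sketch of a chat log that keeps every question and answer, so a follow-up tweak is sent along with its context. It is only a sketch under assumptions: `ChatSession` and `echo_backend` are made-up names, and the backend is a stand-in function rather than any real service or Isadora feature.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatSession:
    """Keeps the running conversation so follow-up requests carry context."""

    def __init__(self, backend: Callable[[List[Message]], str]):
        # backend: any function that takes the full history and returns reply text
        self.backend = backend
        self.history: List[Message] = []

    def ask(self, prompt: str) -> str:
        """Record the prompt, get a reply from the backend, record the reply."""
        self.history.append({"role": "user", "content": prompt})
        reply = self.backend(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def echo_backend(history: List[Message]) -> str:
    """Stand-in for a real service: reports how many user turns it has seen."""
    turns = sum(1 for message in history if message["role"] == "user")
    return f"reply {turns} to: {history[-1]['content']}"


session = ChatSession(echo_backend)
first = session.ask("Write a short line about rain.")
second = session.ask("Make it more cheerful.")
```

Swapping `echo_backend` for a function that calls an actual text generation service would leave the rest unchanged, which is why the memory can live in the external tool or in a thin wrapper rather than in Isadora itself.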

-

A few thoughts (Like @Woland these are just my thoughts :)

- Moving the Isadora format over to being text-based is a massive job, but would have the immediate benefit of version control via Git etc.

I see this as the more immediate and valuable effect of such a change, but this is not on the roadmap currently.

- The use of AI and machine learning is possible with the upcoming support for Python within Isadora.

Beta testers have already been able to use multiple machine learning libraries, as well as integrate with text-prompt-based AI APIs.

I plan to create a demo file for machine learning (based on using Wekinator) that will allow you to define inputs, train your model, and then use the model.
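For anyone curious what talking to Wekinator from Python might involve, here is a minimal sketch that builds an OSC message by hand using only the standard library. It is not the planned demo file. The port `6448` and the address `/wek/inputs` are, to my knowledge, Wekinator's defaults for incoming inputs, but treat them as assumptions and check your own Wekinator settings; the function names are made up for this example.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address: str, values) -> bytes:
    """Build an OSC message whose arguments are all 32-bit big-endian floats."""
    values = list(values)
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", float(v)) for v in values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_inputs(values, host="127.0.0.1", port=6448, address="/wek/inputs"):
    """Send one frame of input values over UDP (assumed Wekinator defaults)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, values), (host, port))


# Two example input values, e.g. a tracked x and y position.
message = osc_message("/wek/inputs", [0.5, 1.0])
```

Calling `send_inputs([0.5, 1.0])` repeatedly while Wekinator is recording would supply training examples; the trained model's outputs come back as OSC too, which Isadora can already receive.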


-

@woland Thanks for your creative reply, Woland. I believe in small steps too. And I think, like DusX, that there are multiple ways we can creatively enjoy AI for theatre, dance, installation art etc. But I was thinking ahead, maybe to Isadora 5.0. I know all of these are monumental tasks. But when Mark sent me Isadora 0.6 beta to test in 2001 (if I remember well), I never believed Isadora would get to where it is more than 20 years later. So I was invoking the forum's collective wisdom (or connective wisdom, if you prefer the theoretical line of Derrick de Kerckhove over Pierre Lévy's) as an intellectual stimulus for me and for everybody, and also to modestly try to contribute to a company in which I strongly believe and a product that for me is a work of art per se.

And I also know, from some business experience, that with strong ideas and competencies... money flows. I also live in Montreal, where MILA is, and I have some contacts there too, having been a university professor here for years. So, for the sake of Isadora 5, I'd like to discuss with all of you, from time to time, what the best approach would be. So let's forget about money for a moment. Or even better, we could estimate costs if we think we can.

For instance, I approached some people that work in AI in Universities here and they all told me that patches don't need to be commented for the machine learning to happen.

So I was thinking, just for the first part, the prompt: how would it be integrated into any software? Should the company buy rights and integrate code from, say, OpenAI to give the software the ability to understand prompts from users? How does this part work?

-

I have been playing with the Pythoner actor to interface with the Automatic1111 API. I am not a coder, so I asked ChatGPT for help, but I ran into issues with dependencies that did not run on my M1 Mac. I can try more on my PC when I have time again. I believe it's actually not super hard for anyone who can code, as the Automatic1111 API uses POST and GET for payloads.

here is a link https://github.com/AUTOMATIC11...
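For reference, here is a minimal sketch of such a POST request using only the Python standard library, which sidesteps extra dependencies. It assumes the web UI was started with its API enabled and is reachable at `http://127.0.0.1:7860`, and that the `/sdapi/v1/txt2img` endpoint returns JSON with a base64-encoded `images` list; please check these against your own install. The function names are made up, and how this would be wired into the Pythoner actor is not shown.

```python
import base64
import json
import urllib.request


def build_txt2img_request(prompt, steps=20, width=512, height=512,
                          base_url="http://127.0.0.1:7860"):
    """Prepare (but do not send) a POST request for the txt2img endpoint."""
    payload = {"prompt": prompt, "steps": steps, "width": width, "height": height}
    return urllib.request.Request(
        base_url + "/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def decode_images(response_body: bytes):
    """Turn the JSON response body into a list of raw image bytes."""
    result = json.loads(response_body)
    return [base64.b64decode(item) for item in result.get("images", [])]


def generate(prompt, out_path="output.png"):
    """Send the request and save the first returned image to disk."""
    request = build_txt2img_request(prompt)
    with urllib.request.urlopen(request, timeout=300) as response:
        images = decode_images(response.read())
    with open(out_path, "wb") as handle:
        handle.write(images[0])
    return out_path
```

With the server running, `generate("a red chair on an empty stage")` would write `output.png` next to the script, and that file could then be picked up from a folder.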

I am inspired by a few user-made TouchDesigner patches that have various ways to engage with Stable Diffusion. It's taking most of my time these days.

Of course Isadora would need some new features to automatically load or display images from a folder, along with all the good stuff everyone mentioned here.

-

@lpmode said:

Isadora would need some new features to automatically load or display images from a folder

Have you gone through the Pythoner Demo file provided?

There is an example loading the file names from a folder, and displaying them.
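As a rough idea of what that kind of folder listing involves in plain Python, here is a small sketch. It is not the code from the Pythoner demo file; the function names and the set of image suffixes are assumptions made for this example.

```python
from pathlib import Path

# Assumed set of image types; extend as needed.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def list_images(folder):
    """Return the image file names in the folder, sorted by name."""
    return sorted(
        path.name for path in Path(folder).iterdir()
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES
    )


def newest_image(folder):
    """Return the most recently modified image file name, or None if there is none."""
    paths = [Path(folder) / name for name in list_images(folder)]
    if not paths:
        return None
    return max(paths, key=lambda path: path.stat().st_mtime).name
```

Polling `newest_image` on a folder that an image generator writes into would give the name of the latest result to display.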