Extended Video (and Audio) Delay

-

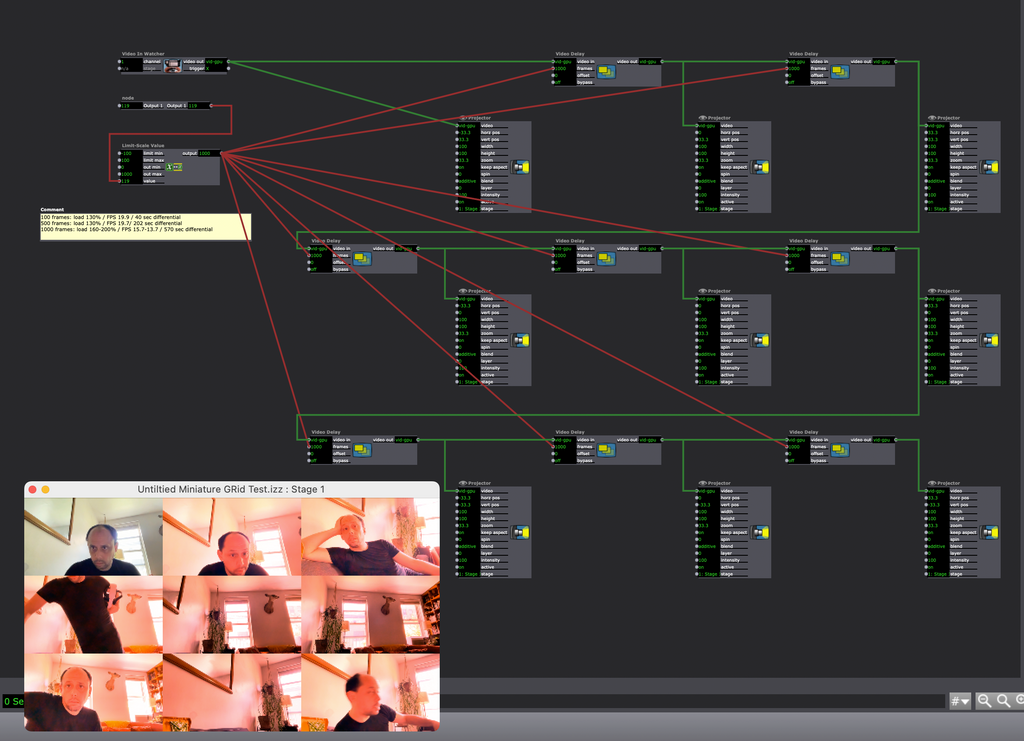

Oh! And just to clarify: I've done a little stress testing on my show computer, a fully decked-out 2021 MacBook Pro M1 Max. I daisy-chained 8 video delays feeding 9 projectors and maxed out each delay at 1000 frames, which I guess puts the last projector at a delay of 8000 frames.

At that setting, the scene runs at a variable load of 160-200%, with a variable frame rate of 13.7-15.7 fps, and a difference between the live feed and the last projector of 570 seconds, or 9.5 minutes!
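These numbers fit together once you remember that a Video Delay actor holds a fixed number of frames, not a fixed amount of time: 8000 frames at roughly 14 fps is about 570 seconds. A minimal bash sketch of that conversion (`frames_to_seconds` is a hypothetical helper, not an Isadora feature):

```bash
#!/usr/bin/env bash
# Hypothetical helper: how many seconds behind live a chain of frame-based
# delays is, given the frame rate the scene actually achieves.
frames_to_seconds() {
  local frames=$1 fps=$2
  # awk handles fractional frame rates (e.g. 13.7) that bash arithmetic cannot
  awk -v f="$frames" -v r="$fps" 'BEGIN { printf "%.1f\n", f / r }'
}

# 8 chained Video Delay actors x 1000 frames each
total_frames=$((8 * 1000))
for fps in 13.7 14 15.7; do
  echo "$fps fps -> $(frames_to_seconds "$total_frames" "$fps") s behind live"
done
```

If the delay really is counted in frames, as these measurements suggest, the lag in seconds drifts with the frame rate: the lower the frame rate, the further the last projector falls behind the live feed.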

Surprisingly, the image remained rather smooth, with no stuttering, despite the reduced frame rate. So this seems to be within the realm of possibility. I'm just wondering if there's a way to reduce the strain on the machine and maybe spread out the load.

-

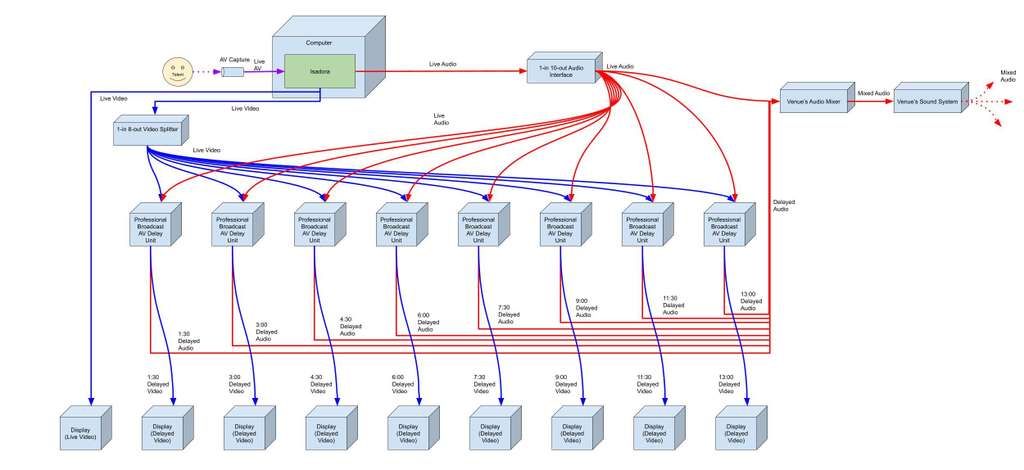

My first thought involves a lot of probably expensive hardware.

I started writing it out, but it got too complex, so I just made a diagram. The major drawback with this is that you do not have immediate, individual control of any of the delayed audio and video streams, only the live one. You also cannot apply audio or video effects to the delayed content before it's sent out. This means you'd have to rehearse everything really tightly, because you wouldn't be able to control when any particular video or audio stream is on or off.

I have another idea that involves additional computers. It would let you add effects to, or otherwise modify, the delayed video and audio feeds in Isadora or other software before sending them out to their respective displays (video) or the mixer (audio), and it would give you immediate control of each video and audio stream, programmatically or interactively.

I also have a few other ideas on how to do this, but many involve the AV delay units used in professional broadcast. (I haven't worked with a device like this; I'm just sure that there's either an AV delay unit out there, or that you could get separate delay units for the video and audio if need be.)

-

@woland Thanks so much! This is incredible. Right now I'm pretty sure I won't need individual control over the delayed videos, but of course you never know how things might shake out. I'll definitely look into the AV delay units. Excited to think this can be done without having to get hold of multiple computers.

—J

-

@joshuawg said:

Excited to think this can be done without having to get hold of multiple computers.

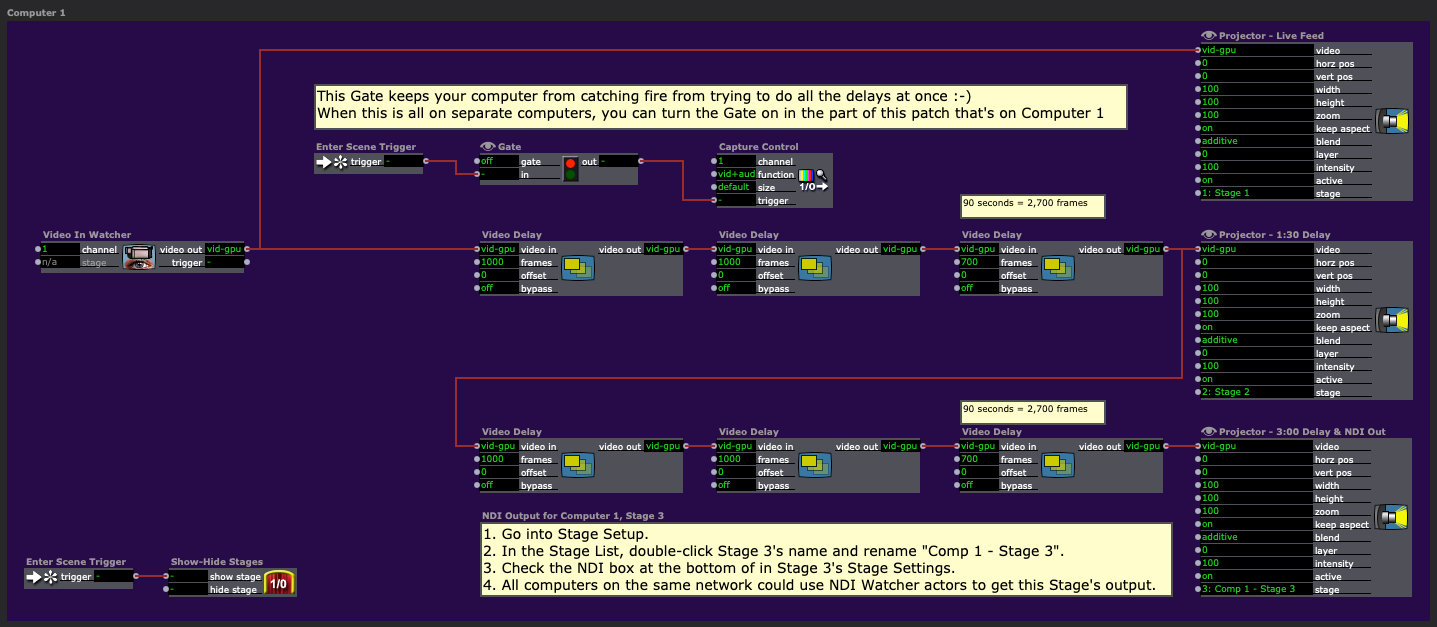

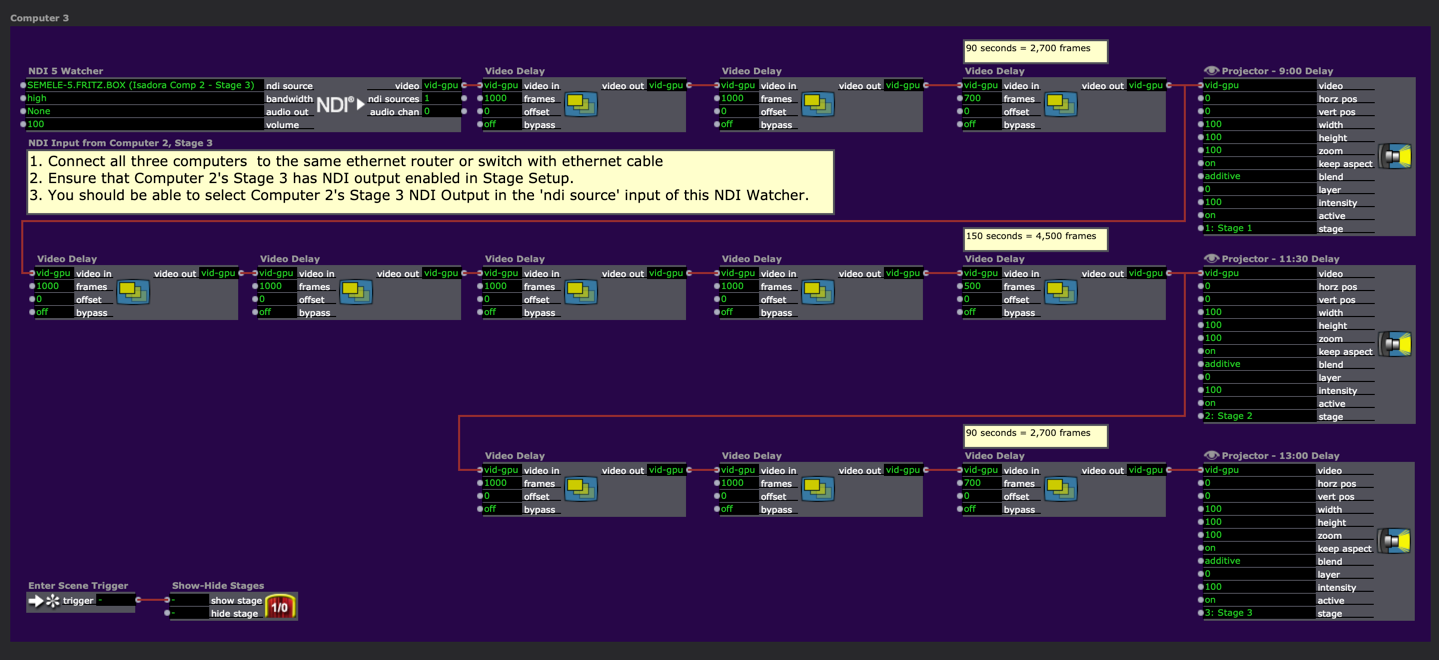

Using multiple computers would probably be cheaper, though. You could:

- Connect three computers to the same beefy ethernet router/switch

- Have computer 1 make the first three delay times and send them to three Stages

- Have computer 1 output the 3:00 delayed video feed over NDI by enabling NDI for Stage 3 in Stage Setup

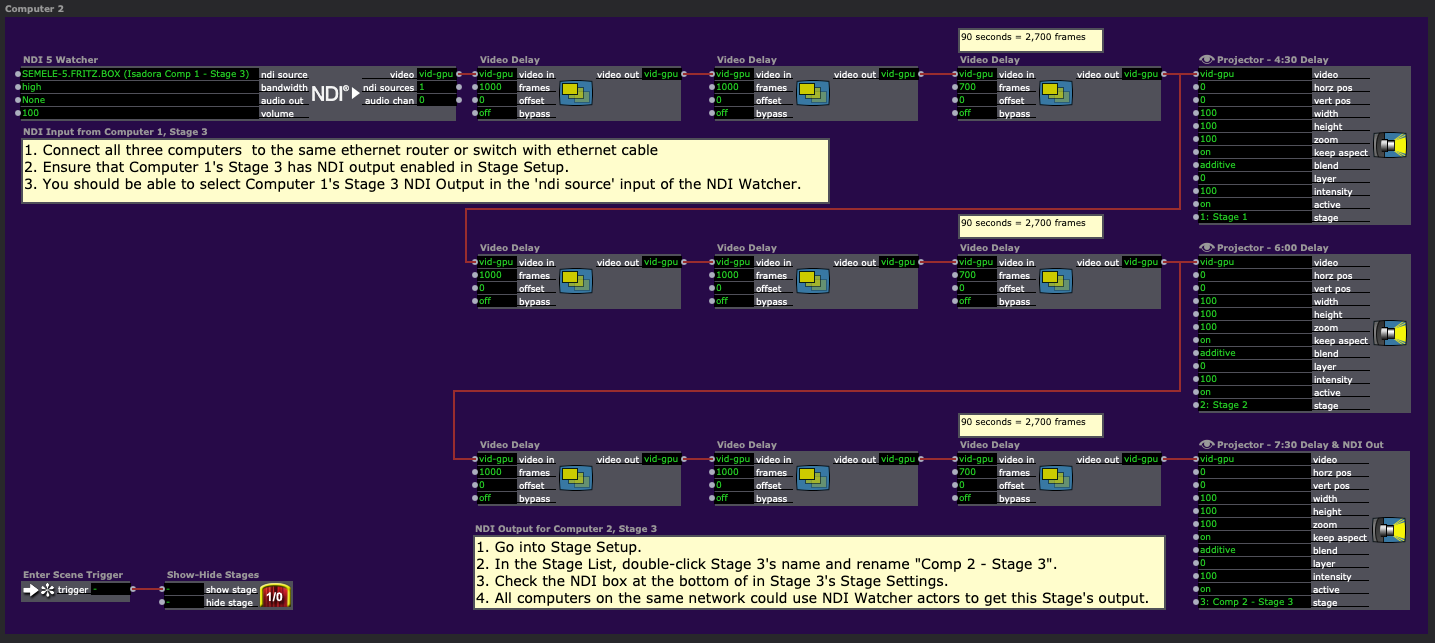

- Have computer 2 pick up the 3:00 delayed video feed over NDI with an NDI Watcher actor

- On computer 2, use Video Delay actors to make the next three delay times for three more Stages

- Have computer 2 output the 7:30 delayed video feed over NDI by enabling NDI for Stage 3 in Stage Setup

- Have computer 3 pick up the 7:30 delayed video feed over NDI with an NDI Watcher actor

- On computer 3, use Video Delay actors to make the last three delay times for the final three Stages
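With this chain, each computer only has to buffer the gap between the delay it receives and the longest delay it produces. A small bash sketch of that bookkeeping, assuming hand-off points of 0:00 (live), 3:00 and 7:30, and a final delay of 13:00 (7:30 plus the 5:30 that Computer 3 holds); `buffer_plan` and `mmss` are hypothetical helper names:

```bash
#!/usr/bin/env bash
# Format a number of seconds as M:SS
mmss() {
  printf '%d:%02d\n' $(($1 / 60)) $(($1 % 60))
}

# Given the cumulative delays at each hand-off (seconds), print how much
# video each computer has to keep in its Video Delay actors.
buffer_plan() {
  local points=("$@") i
  for ((i = 1; i < ${#points[@]}; i++)); do
    echo "Computer $i buffers $(mmss $((points[i] - points[i - 1]))) (input delayed $(mmss "${points[i - 1]}"))"
  done
}

buffer_plan 0 180 450 780
```

This prints 3:00, 4:30 and 5:30 for Computers 1, 2 and 3, which is where the per-computer figures below come from.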

Computer 1 has to handle the live feed and keep 3:00 of the camera's live feed in Video Delay actors at all times.

Computer 2 has to keep 4:30 of the feed from Computer 1's Stage 3 NDI output (already 3:00 behind live) in Video Delay actors at all times.

Computer 3 has to keep 5:30 of the feed from Computer 2's Stage 3 NDI output (already 7:30 behind live) in Video Delay actors at all times. (I'm not sure if the 2 minute 30 second jump from 9:00 to 11:30 was intentionally breaking the pattern of just adding 90 seconds to the previous time or not.)

Computer 1: 3:00 = 180 seconds. 180 seconds x 30 fps = 5400 frames. Each Video Delay actor can hold a max of 1000 frames, so 5400 frames / 1000 frames per Video Delay actor = 5.4 Video Delay actors, rounded up to 6. So computer 1 would need to be running 5 Video Delay actors at max capacity and 1 at 4/10ths max capacity.

Computer 2: 4:30 = 270 seconds. 270 seconds x 30 fps = 8100 frames. 8100 frames / 1000 frames per Video Delay actor = 8.1 Video Delay actors, rounded up to 9. So computer 2 would need to be running 8 Video Delay actors at max capacity and 1 at 1/10th max capacity.

Computer 3: 5:30 = 330 seconds. 330 seconds x 30 fps = 9900 frames. 9900 frames / 1000 frames per Video Delay actor = 9.9 Video Delay actors, rounded up to 10. So computer 3 would need to be running 9 Video Delay actors at full capacity and 1 at 9/10ths capacity.
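The same arithmetic as a bash sketch, assuming 30 fps and the 1000-frame limit per Video Delay actor mentioned above; `actors_for` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# How many Video Delay actors are needed to hold a buffer of the given length.
# Defaults: 30 fps, 1000 frames per actor (both can be overridden).
actors_for() {
  local seconds=$1 fps=${2:-30} max_frames=${3:-1000}
  local frames=$((seconds * fps))
  local actors=$(( (frames + max_frames - 1) / max_frames ))  # integer ceiling
  local last=$(( frames - (actors - 1) * max_frames ))        # frames in the last actor
  echo "$frames frames -> $actors actors ($((actors - 1)) full, last holds $last/$max_frames)"
}

actors_for 180   # Computer 1
actors_for 270   # Computer 2
actors_for 330   # Computer 3
```

This prints 6, 9 and 10 actors respectively, with the last actor holding 400, 100 and 900 frames, matching the figures above.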

-

@woland said:

Connect three computers to the same beefy ethernet router/switch

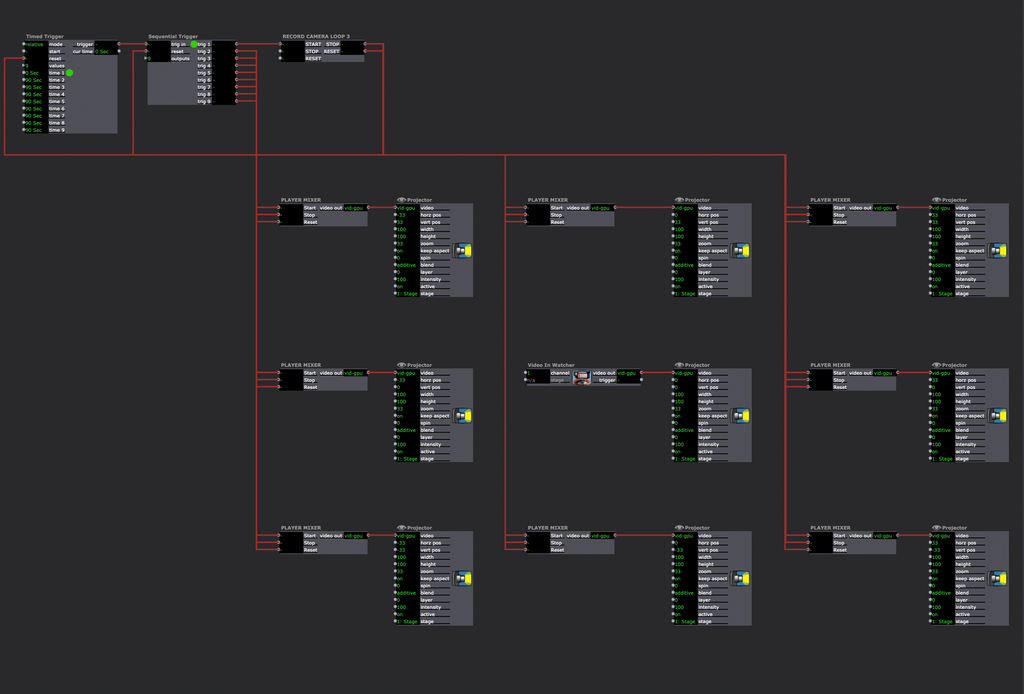

@joshuawg Here's a template Isadora Patch that shows the actors and steps needed for each of the three computers when using this method: patch-triple-computer-video-delay-2024-05-29-3.2.6.zip

Computer 1

Computer 2

Computer 3

-

Thanks so much for teasing out the less expensive option. This seems very doable! (Also you were right to catch my bad simple addition, sorry!)

Just for comparison's sake, what sort of load are you getting on your Mac Pro when running the 9 video delays? Any recommendations for minimum system requirements?

Thanks again!

-

@joshuawg you can also do this another way. I'm not sure if it's easily possible in Isadora, but if you can precisely record and play back the right-sized sections of video, this should be doable on a single computer.

The issues to solve would be precisely starting and stopping the recording with no gaps, and seamlessly loading and playing back the chunks of video. On a modern computer, the limits will come not from the hardware but from how much control the software gives you over these processes. I would use another platform with more precise control to do the recording and playback of smaller chunks. GStreamer is a good option because it can do this, it's free, and it supports NDI, so you can get the streams back into Isadora.

GStreamer is an open-source library that lets you do video processing controlled from the command line. It has a lot of features, like forking and branching video streams as well as recording and joining them. It is very fast with low overhead, and many functions are threaded.

You can ask it to record a stream into chunks and also to rebuild those chunks into streams. You can add chunks to a stream in real time. You can also stream playback with NDI.
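The core of this chunked approach is deciding which chunk each delayed stream should be playing at any moment. A minimal bash sketch, assuming chunks are numbered from 0 and are all exactly the same length (in practice a recorder that splits on keyframes produces slightly uneven chunks); `chunk_for` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Which chunk (numbered from 0) a stream delayed by `delay` seconds should be
# playing `elapsed` seconds after recording began, for chunks of `chunk_len` s.
chunk_for() {
  local elapsed=$1 delay=$2 chunk_len=$3
  if ((elapsed < delay)); then
    echo "none"   # this delayed stream has not started yet
  else
    echo $(( (elapsed - delay) / chunk_len ))
  fi
}

# Ten minutes in, with 10-second chunks:
for delay in 60 300 540; do
  echo "${delay}s delay -> chunk $(chunk_for 600 "$delay" 10)"
done
```

It also shows how long chunks must be kept: anything older than the longest delay plus one chunk length is no longer needed by any stream and can be deleted.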

Here is a bash script that outlines how this might work. You can use ChatGPT or Copilot to help you debug it on your hardware.

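```bash
#!/bin/bash
# Outline: record a live camera feed into short segments with GStreamer,
# then play the same segments back over NDI at several fixed delays.

# Parameters
CAMERA_SOURCE="/dev/video0"                  # Linux V4L2 device; on macOS, replace v4l2src below with avfvideosrc
SEGMENT_DURATION=10                          # Duration in seconds of each segment
MAX_DELAY=780                                # Keep segments this long, in seconds (13 minutes)
SEGMENT_DIR="/path/to/segments"              # Directory to store video segments
NDI_OUTPUT_BASE_NAME="NDI_Delayed_Stream"    # Base name for the NDI output streams
DELAYS=(60 120 180 240 300 360 420 480 540)  # Delays in seconds for the NDI streams

# Ensure the segment directory exists
mkdir -p "$SEGMENT_DIR"

# Record the live video into numbered segments.
# MPEG-TS segments are used because they can be read back-to-back as one
# continuous stream; separate MP4 files cannot simply be concatenated.
# splitmuxsink can only cut on keyframes, so key-int-max=30 (one keyframe
# per second, assuming ~30 fps) keeps segments close to SEGMENT_DURATION.
segment_live_video() {
  gst-launch-1.0 -e \
    v4l2src device="$CAMERA_SOURCE" ! videoconvert ! \
    x264enc tune=zerolatency key-int-max=30 ! h264parse ! \
    splitmuxsink muxer-factory=mpegtsmux \
      location="$SEGMENT_DIR/segment_%05d.ts" \
      max-size-time=$((SEGMENT_DURATION * 1000000000))
}

# Delete segments older than MAX_DELAY
cleanup_old_segments() {
  while true; do
    current_time=$(date +%s)
    for segment in "$SEGMENT_DIR"/segment_*.ts; do
      [ -e "$segment" ] || continue   # the glob matched nothing yet
      # GNU stat (Linux) first, BSD stat (macOS) as a fallback
      segment_time=$(stat -c %Y "$segment" 2>/dev/null || stat -f %m "$segment")
      segment_age=$((current_time - segment_time))
      if ((segment_age > MAX_DELAY)); then
        rm -f "$segment"
      fi
    done
    sleep 10   # Check every 10 seconds
  done
}

# Play the segments back from the first one, starting delay_seconds after
# recording began, and publish the result as an NDI source.
# ndisink comes from the GStreamer NDI plugin (gst-plugins-rs), which also
# needs the NDI runtime installed.
create_delayed_stream() {
  local delay_seconds=$1
  local ndi_output_name=$2
  local segment_pattern="$SEGMENT_DIR/segment_%05d.ts"

  sleep "$delay_seconds"   # wait until this stream's delay has elapsed
  gst-launch-1.0 -e \
    multifilesrc location="$segment_pattern" index=0 ! decodebin ! \
    videoconvert ! queue ! ndisink ndi-name="$ndi_output_name"
}

# Start segmenting live video in the background
segment_live_video &

# Start cleanup of old segments in the background
cleanup_old_segments &

# Create the delayed streams
for delay in "${DELAYS[@]}"; do
  ndi_output_name="${NDI_OUTPUT_BASE_NAME}_${delay}s"
  create_delayed_stream "$delay" "$ndi_output_name" &
done

# Wait for all background processes to finish
wait
```

-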

@fred I hadn't heard of GStreamer; really excited to look into it! Will let you know if I can get it working!

-

@fred said:

GStreamer is an open-source library that lets you do video processing controlled from the command line. It has a lot of features, like forking and branching video streams as well as recording and joining them. It is very fast with low overhead, and many functions are threaded.

You always know about the coolest things <3

-

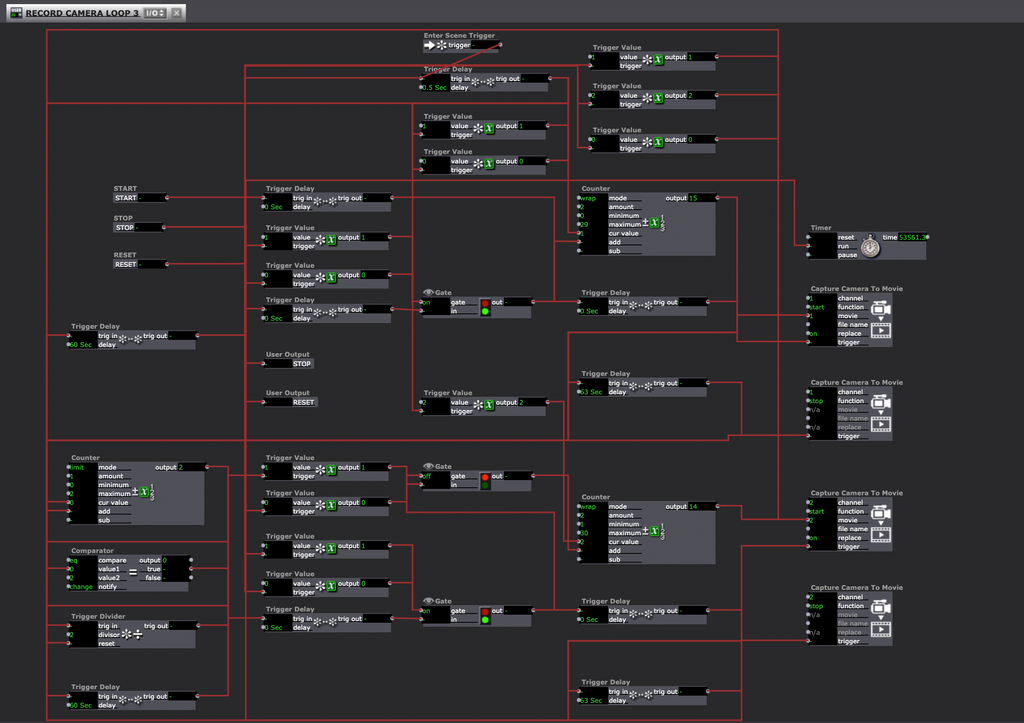

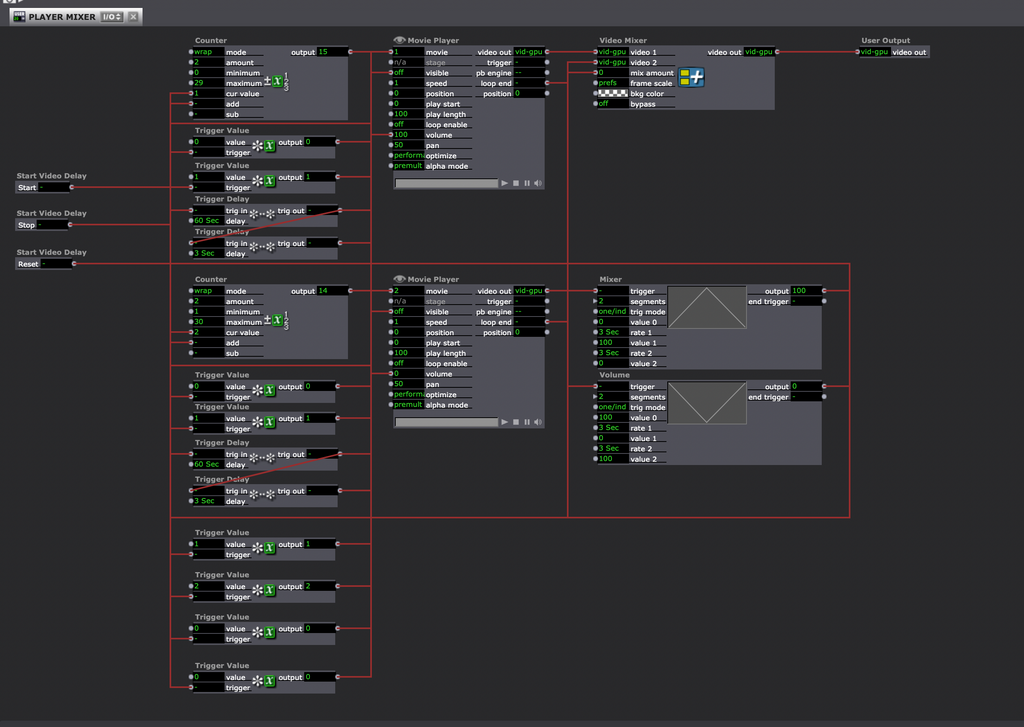

Just wanted to send a quick update, since I seem to have gotten this to work! Sadly I wasn't able to go the GStreamer route with my limited coding abilities, but with a vague idea of the underlying theory, I was able to get it working in Isadora. As @fred suggested, it's a rolling capture of videos that are then played back on a trigger delay to create the illusion of a video delay. Of course, the blip between videos when using a single capture-camera-to-movie actor was a bit of a giveaway, so I ended up using an HDMI splitter to split my camera signal across two camera inputs (using loopback to split the audio signal as well). That way I can alternately capture the two feeds with a bit of overlap and crossfade between them, which has the added benefit of letting me capture consistent audio! Anyway, thanks again for all of your suggestions. The load is super low and I'm excited to start playing with it.
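The two-input rolling capture described above can be thought of as a simple schedule. A bash sketch of that timing, using hypothetical values (60-second clips, 2 seconds of overlap, a 180-second delay) rather than the settings in the actual patch: clips alternate between the two inputs, each clip starts recording OVERLAP seconds before the previous one stops, and each is triggered for playback DELAY seconds after it started recording.

```bash
#!/usr/bin/env bash
CLIP_LEN=60   # hypothetical clip length in seconds
OVERLAP=2     # hypothetical overlap between consecutive clips, in seconds
DELAY=180     # hypothetical delay between recording and playback, in seconds

# Print when each of the first n clips is recorded and when it is played back
schedule() {
  local n=$1 k start input
  local step=$((CLIP_LEN - OVERLAP))   # time between successive clip starts
  for ((k = 0; k < n; k++)); do
    start=$((k * step))
    if ((k % 2)); then input=B; else input=A; fi   # alternate the two inputs
    echo "clip $k: input $input, record ${start}-$((start + CLIP_LEN))s, play at $((start + DELAY))s"
  done
}

schedule 4
```

Because clip k+1 starts playing OVERLAP seconds before clip k ends, that overlap is the window for the crossfade. Presumably DELAY also has to be at least CLIP_LEN, so that each movie file has finished recording before it is played back.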

-

@joshuawg said:

Just wanted to send a quick update, since I seem to have gotten this to work! Sadly I wasn't able to go the GStreamer route with my limited coding abilities, but with a vague idea of the underlying theory, I was able to get it working in Isadora. As @fred suggested, it's a rolling capture of videos that are then played back on a trigger delay to create the illusion of a video delay. Of course, the blip between videos when using a single capture-camera-to-movie actor was a bit of a giveaway, so I ended up using an HDMI splitter to split my camera signal across two camera inputs (using loopback to split the audio signal as well). That way I can alternately capture the two feeds with a bit of overlap and crossfade between them, which has the added benefit of letting me capture consistent audio! Anyway, thanks again for all of your suggestions. The load is super low and I'm excited to start playing with it.

Wow that sounds fantastic! Did the performance already happen, if so, is there a recording available to watch? If not, where and when is it?

-

It doesn't happen until April, actually, but I'm heading out on a retreat tomorrow, so I'll be working with the new patch there!

-

@joshuawg Wow, I would not have tried to do all this in Isadora. That's amazing, and so much more efficient than saving frames in memory.

-

Very nice patch! As a minimalist alternative to that "probably expensive hardware" route, this seems like a wonderful solution!

Hope you don't mind if I take some ideas from it ;)

-

Absolutely! Go for it.

Getting the crossfade between videos takes a little finessing, and sometimes there's a little ghosting/misalignment, but in general I'm finding it really effective!