help with Eyes (++)

-

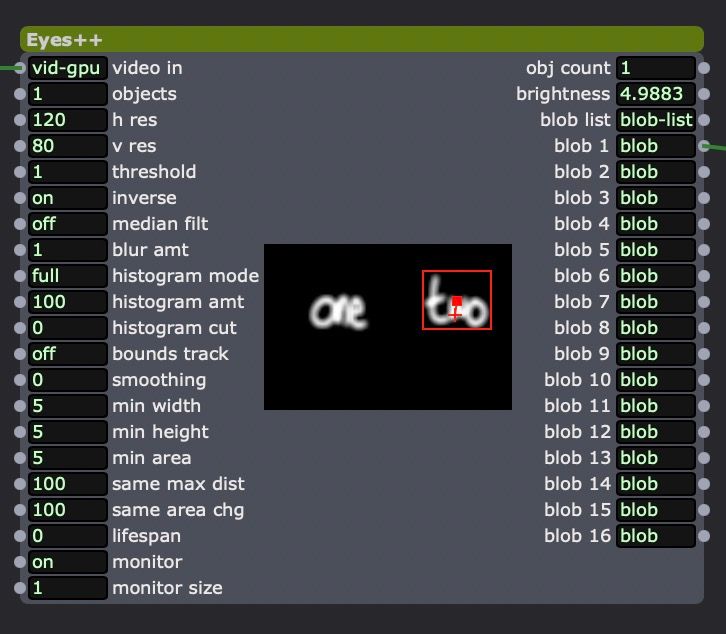

I would like to track a group of objects as if they were one object (the objects are not moving). Sounds complicated? Basically, I'm running an iPad screen into Izzy, with people drawing on the screen. I then capture the images as JPGs and file them away for animating. But I'm trying to dynamically scale the drawings so that if someone uses the full screen, the drawing shrinks to fit the area I am using, and if someone draws something really small, it is enlarged to fill the area.

But.... if someone draws something small on one side of the screen and something small on the other side, Eyes++ only selects and tracks the largest blob, so my system ignores the smaller blobs.

Is there a setting that will create a bounding box around all pixels that are above the threshold?

-

@dbini off the top of my head - Get media or screen size? Maybe send the output to a virtual screen and then use the Get Stage Size actor? Obviously some maths on the width and height? Sorry it's a short reply.... will check back later on!

-

What happens if you use more objects and also bound tracks?

Best regards,

Jean-François

-

using more objects creates boundaries for each object, but I need to add these together into one object and measure the overall size

-

@skulpture

the screen size is always the same - I'm Airplaying from the iPad to an Apple TV box, then HDMI out to a capture card, so the image coming in is always a 1920x1080 white screen - what I need to do is measure the size of the black shapes that are drawn on this screen

-

@dbini this is kind of annoying to do, mostly as we do not really get an expanding list from the blob tracker.

In principle though you can calculate a bounding box for all the bounding boxes of the blobs.

You would have to do some fancy footwork, but Python is likely the best bet. First you need to connect what you expect to be the maximum number of objects to the blobs output.

Then we need to know if there is actually a blob on that blob output (the blob decoder has a tracking output that is a boolean for whether there is a blob at position 0, 1, 2, etc.). We then use that to determine whether the blob's width, height, and horizontal and vertical positions should be taken into account when calculating the meta bounding box.

Then in python you can write a relatively simple script that will use the positions of any active blobs to get a meta bounding box - you can also get a centroid and area pretty easily.
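As a rough sketch of what such a script could look like (plain Python only, not wired into Isadora's Python actor): the `Blob` and `Box` types, the function names, and the `fit_scale` helper are all made up for illustration. It also assumes each blob reports its position as the centre of its bounding box, in the same units as its width and height; if your positions mean something else, the edge calculation needs adjusting.

```python
from typing import Iterable, NamedTuple, Optional


class Blob(NamedTuple):
    """One blob slot (hypothetical type; values as read from one blob output)."""
    active: bool    # the per-blob "tracking" boolean
    x: float        # horizontal position, ASSUMED to be the box centre
    y: float        # vertical position, ASSUMED to be the box centre
    width: float
    height: float


class Box(NamedTuple):
    """Axis-aligned box given by its four edges (hypothetical type)."""
    left: float
    top: float
    right: float
    bottom: float

    @property
    def width(self) -> float:
        return self.right - self.left

    @property
    def height(self) -> float:
        return self.bottom - self.top

    @property
    def center(self) -> tuple:
        # Midpoint of the outer edges, not the average of the blob centres.
        return ((self.left + self.right) / 2, (self.top + self.bottom) / 2)

    @property
    def area(self) -> float:
        return self.width * self.height


def meta_bounding_box(blobs: Iterable[Blob]) -> Optional[Box]:
    """Smallest box enclosing every active blob, or None if none are active."""
    active = [b for b in blobs if b.active]
    if not active:
        return None
    return Box(
        left=min(b.x - b.width / 2 for b in active),
        top=min(b.y - b.height / 2 for b in active),
        right=max(b.x + b.width / 2 for b in active),
        bottom=max(b.y + b.height / 2 for b in active),
    )


def fit_scale(box: Box, target_w: float, target_h: float) -> float:
    """Uniform scale that makes the box just fit inside a target area."""
    if box.width <= 0 or box.height <= 0:
        return 1.0  # degenerate box: leave the image unscaled
    return min(target_w / box.width, target_h / box.height)


# Two small drawings on opposite sides, plus one inactive slot that is ignored.
blobs = [
    Blob(True, 10, 10, 4, 4),
    Blob(True, 90, 50, 10, 20),
    Blob(False, 500, 500, 100, 100),
]
box = meta_bounding_box(blobs)
```

Inactive slots are skipped so that stale values on an unused blob output cannot stretch the box. A scale below 1 from `fit_scale` shrinks a large drawing and a scale above 1 enlarges a small one, which is the behaviour described in the original question.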

-

@fred

Thanks Fred. I've been developing my patch to try to accommodate separate blobs, and realised there is an amount of maths involved. Rather than go down the Python route, I'm combining calculators and have found a way to determine the maximum size of the input, but am struggling with the average position. I think it just needs more maths. It's the kind of thing that my brain needs time to process. : )

I will be testing the system in the real world at the end of next week, so will find out then if my logic is logical....

-

@dbini You could also play with the parameters below smoothing, for area surface and same area max distance. But it depends on how what you are tracking moves.

-

thanks to everyone for advice, I managed to tweak Eyes to make the most of Histogram mode and blurring and captured most of the inputs accurately.

Here are the results:

This was made at a STEAM event for children, so I couldn't shoot any footage of the system in action.

-

Could it be that you're missing something very simple?

You should define the maximum number of objects to track (second input of the Eyes++ actor). Then Eyes++ will look for more objects.

You might face a different problem if the objects are close together, but you can play with the other parameters to catch those cases.

I hope this helped,

Cheers

-

@gaspar said:

Ouch.... just saw my reply is off. You intended to track everything as one blob... and you solved it.

My bad.

Looks great by the way.

-

very nice!

-

Nice project. Glad it was a success.