Projecting onto a mannequin head

-

Last year we had this installation at the train station where I live; it was installed in a waiting room: http://glaserkunz.net/

It seems you only see it if you go to "selected works".

Best, Michel

-

Thanks all.

-

There are a bunch of live facial mapping tools around, and most operate on a similar principle. They start by using a standardised mesh to describe a facial structure; a corresponding mesh is extracted from the source face and the destination face through feature tracking. They then interpolate between the source and destination meshes, and the live video is bound to the mesh. This gives a pretty accurate result, and it then does not matter what position the source and destination videos are in: you can switch between them because they are locked (as long as the features are being tracked). The next part, to make it all nice, is to feather the edges of the mesh to make a smooth blend on the destination.

As there is no face tracker in Isadora, it is a little difficult to implement without making a custom plugin, and at the moment the SDK is well behind the current release. Here is an example made with OF that you could convert to inputting and outputting Syphon and remote controlling through OSC. Here is an example of the output that uses the above code; in fact there is an example there that does everything in the video.
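
To make the interpolate-and-feather idea a bit more concrete, here is a minimal sketch in Python. It is not the OF code: the function names and the idea of passing a "distance to the mesh edge" are assumptions made up for illustration, and a real tracker would supply the vertex lists.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def interpolate_mesh(source: List[Point], destination: List[Point], t: float) -> List[Point]:
    """Linearly blend two meshes that share the same vertex order.

    t = 0.0 returns the source mesh, t = 1.0 returns the destination mesh.
    """
    if len(source) != len(destination):
        raise ValueError("meshes must have the same number of vertices")
    return [
        (sx + (dx - sx) * t, sy + (dy - sy) * t)
        for (sx, sy), (dx, dy) in zip(source, destination)
    ]


def feather_alpha(distance_to_edge: float, feather_width: float) -> float:
    """Opacity ramp for a pixel: 0.0 at the mesh edge, 1.0 once it is
    feather_width (same units as the distance) inside the mesh."""
    if feather_width <= 0:
        raise ValueError("feather_width must be positive")
    return max(0.0, min(1.0, distance_to_edge / feather_width))
```

For example, `interpolate_mesh(src, dst, 0.5)` gives the halfway mesh, and multiplying each pixel's opacity by `feather_alpha(...)` fades the face out towards the mesh boundary.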

Having said that, if you have a very still recording of the source head (which looks quite unnatural; it seems this is the odd thing about the way it was done in the link Michel posted) and the destination does not move, then it is a pretty simple exercise of just tweaking the mask and mapping of the image.

-

Hmmm.... Faceshift would be the perfect commercial tool for the job.

-

I masked out a basic face and projected it onto the head - worked pretty well with hardly any effort.

-

I have wanted to try this since I have a number of mannequins here, and I saw the Gaultier exhibit; it was very inspiring. Haven't had a chance yet. Glad to hear it's working well for you without too much trouble.

-

Faceshift Studio is NOT available anymore; rumors say Apple has bought the company. They extended our license one more time and it will end in April 2016.

-

How would the OF example work if projecting onto a smooth (egg) mannequin head/face? I suppose it would require some form of registration... perhaps it could be input manually as a single XYZ.

-

All I have done is grab a picture, mask out the face and warp it onto the mannequin head. Nothing special at all. It all lined up pretty well, but I needed a picture that was perfectly front on.

I just grabbed a picture of my wife off her Facebook page - she wasn't impressed! haha.

Something *really* obvious but simple that I hadn't thought about: the mannequin's lips are closed. So when I replace the picture with a video of someone talking, the physical 3D head won't move its lips, and this could look strange. I may need a mannequin with no lips - just a flat surface. Which is exactly what they have done here @Michel http://glaserkunz.net/ so thanks for that link.

I also published the X and Y for two warp points on her lips and linked them to a sound level watcher haha - made her mouth move. It wasn't perfect but looked funny.

-
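
The lip trick above (sound level driving two warp points) can be sketched outside Isadora roughly like this. Everything here is an assumption for illustration: the function names, the 0.0 to 1.0 level range, the pixel amounts and the upper/lower split are invented, only the vertical offset is modelled, and y is assumed to grow downwards as in image coordinates.

```python
def lip_offsets(sound_level, max_open=12.0, level_floor=0.05):
    """Map a normalised sound level (0.0-1.0) to vertical offsets, in pixels,
    for the upper-lip and lower-lip warp points.

    Levels below level_floor are treated as silence so background noise
    does not make the mouth twitch.
    """
    level = max(0.0, min(1.0, sound_level))
    if level < level_floor:
        return (0.0, 0.0)
    opening = max_open * ((level - level_floor) / (1.0 - level_floor))
    # Upper lip moves up a little, lower lip moves down more,
    # since the jaw does most of the work when talking.
    return (-0.25 * opening, 0.75 * opening)


def smooth(previous, target, amount=0.5):
    """Ease from the previous offset towards the new one to reduce jitter."""
    return previous + (target - previous) * amount
```

Calling `lip_offsets(level)` once per frame and passing each result through `smooth` before adding it to the resting Y of each warp point gives a mouth that opens with loudness instead of snapping.

-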

@DusX I would start with a still of a head and map it to the mannequin. I would then use a video of a talking head and map it to the still image that was used for mapping. This means the face from the live or recorded video (where the head can move and the tracking can follow it) would be extracted and then matched to the features of the head used for the basis of the mapping to the mannequin. This would mean that pretty much any video of a head (live or recorded) could be used and it would map directly to the mannequin correctly every time.
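
As a rough sketch of that two-stage idea: the still-to-mannequin mapping is set up once, the video-to-still mapping changes every frame, and the two are chained. Note the simplification: a real face tracker works with a mesh of feature points, whereas this Python sketch assumes each stage can be approximated by a single 2D affine transform, and all names are hypothetical.

```python
from typing import Tuple

# 2D affine transform stored as (a, b, c, d, tx, ty):
#   x' = a*x + b*y + tx
#   y' = c*x + d*y + ty
Affine = Tuple[float, float, float, float, float, float]
Point = Tuple[float, float]


def apply(m: Affine, p: Point) -> Point:
    """Transform one point."""
    a, b, c, d, tx, ty = m
    x, y = p
    return (a * x + b * y + tx, c * x + d * y + ty)


def compose(second: Affine, first: Affine) -> Affine:
    """Return the transform equivalent to applying `first`, then `second`."""
    a2, b2, c2, d2, tx2, ty2 = second
    a1, b1, c1, d1, tx1, ty1 = first
    return (
        a2 * a1 + b2 * c1, a2 * b1 + b2 * d1,
        c2 * a1 + d2 * c1, c2 * b1 + d2 * d1,
        a2 * tx1 + b2 * ty1 + tx2, c2 * tx1 + d2 * ty1 + ty2,
    )


# Fixed: calibrated once by lining the still image up on the mannequin.
still_to_mannequin: Affine = (2.0, 0.0, 0.0, 2.0, 100.0, 50.0)

# Per frame: where the tracked face in the video sits relative to the still.
video_to_still: Affine = (1.0, 0.0, 0.0, 1.0, -10.0, -20.0)

video_to_mannequin = compose(still_to_mannequin, video_to_still)
```

Only `video_to_still` needs updating as the head moves; the calibration to the mannequin is never touched again, which is why any video of a head should land in the right place.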

-

Good call, that makes perfect sense.

-

That's what my plan was/is. :) Still image and then think of video.

-

Hi @Fred.

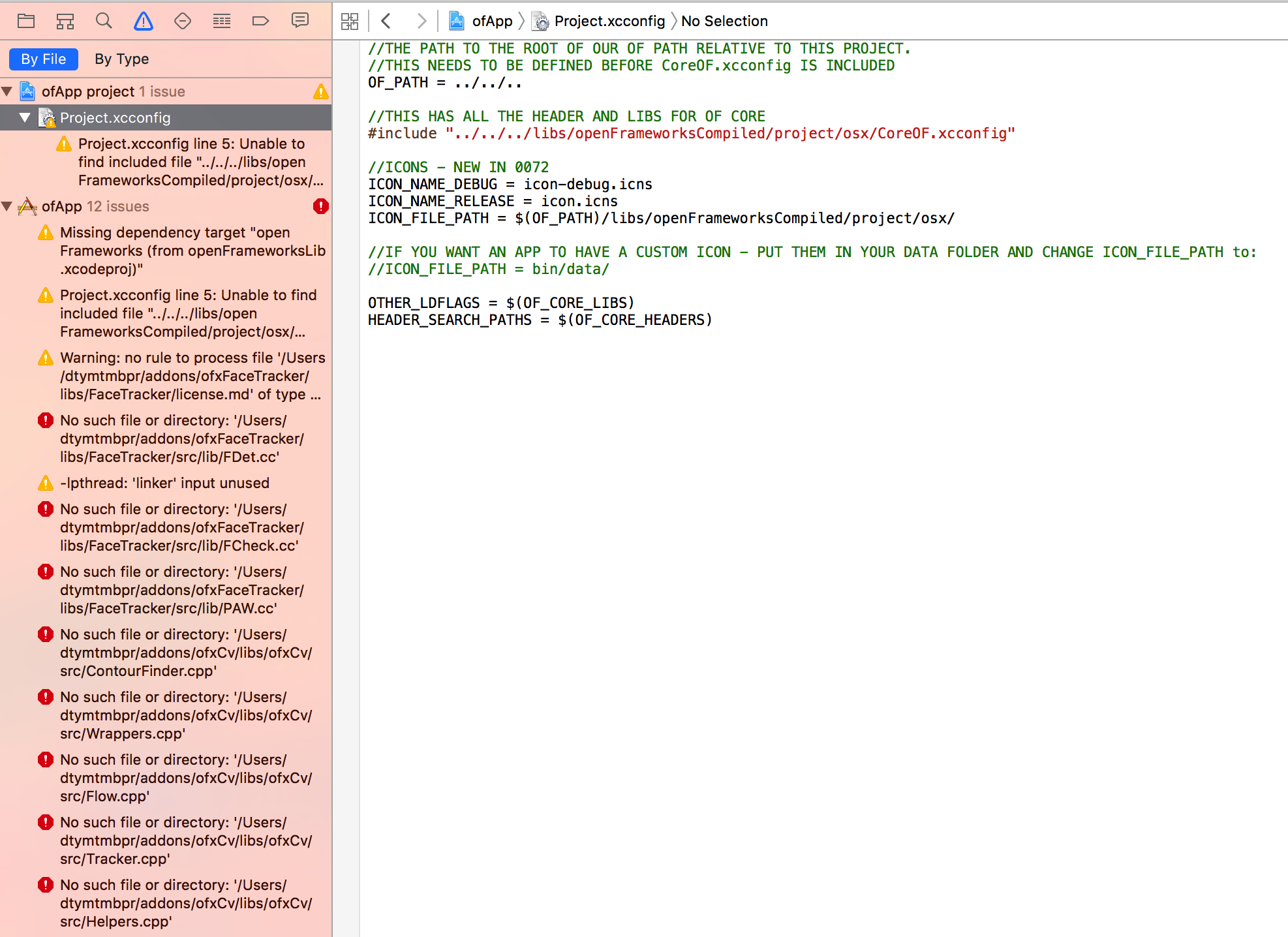

Been looking at the link above. Downloaded all the files but then got lost in Xcode. I just get tons of errors. I literally have no idea how to use Xcode. Where can I learn to solve these errors? I can't even think what to google... apart from paying to go on a course, maybe?

-

OK, there are some good instructions for running OF, but briefly: you need to download openFrameworks from here https://github.com/openframeworks/openFrameworks and download the ofxCv addon from here https://github.com/kylemcdonald/ofxCv/tree/develop (note it is the develop branch, so it matches the branch of openFrameworks). The addon needs to go in the addons folder; make sure it has a clean name, without master or develop in the folder name. You then get an error from not following this instruction:

After downloading or cloning ofxFaceTracker, you need to make a copy of the `/libs/Tracker/model/` directory in `bin/data/model/` of each example. You can do this by hand, or `python update-projects.py` will take care of this for you.

The OF setup guide here http://openframeworks.cc/setup/xcode/ gives some good starters, and there are some basic tutorials that are really good here http://openframeworks.cc/tutorials/

Fred

-
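
If you would rather see what the "by hand" copy amounts to, here is a minimal Python sketch (Python 3.8+). It is not the addon's `update-projects.py`; the function name is made up, and the assumption that example folders match `example*` inside the addon folder is mine, so adjust the pattern to whatever your copy of ofxFaceTracker actually contains.

```python
import shutil
from pathlib import Path


def copy_model_to_examples(addon_root, example_glob="example*"):
    """Copy libs/Tracker/model into bin/data/model of every example folder.

    addon_root   -- path to the ofxFaceTracker folder inside addons
    example_glob -- ASSUMED naming pattern of the example folders
    Returns the list of model folders that were written.
    """
    addon_root = Path(addon_root)
    model_src = addon_root / "libs" / "Tracker" / "model"
    if not model_src.is_dir():
        raise FileNotFoundError(f"model directory not found: {model_src}")

    updated = []
    for example in sorted(addon_root.glob(example_glob)):
        if not example.is_dir():
            continue
        target = example / "bin" / "data" / "model"
        # dirs_exist_ok lets the copy be re-run over an existing folder.
        shutil.copytree(model_src, target, dirs_exist_ok=True)
        updated.append(target)
    return updated
```

Run it as `copy_model_to_examples("path/to/addons/ofxFaceTracker")` and check the returned list to see which examples were updated.

-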

@Fred I could kiss you.

Thank you for taking the time to explain that. I appreciate it.