Motion Tracking through Isadora

-

Thanks a lot for that, @Marci! I'm gonna have a serious look at it tomorrow!

Glad you're not sick anymore! :)

-

If you look at how sketch 7 works, it creates a PGraphics element with a white background and renders the text onto that rather than displaying it on screen. We then iterate over x and y, checking each pixel and looking for a black one (or possibly red; I can't remember what state I left the patch in). Wherever a matching pixel is found, a particle is added to an array with that x/y position. The particle is then released from 0,-100 with acceleration and velocity, and is attracted towards its predestined x,y coordinates. It overshoots and spirals back towards them, losing velocity as it goes, until it flocks around its spot.
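A minimal plain-Java sketch of the sketch 7 idea, assuming the offscreen buffer has already been read into an `int[]` in the layout of Processing's `pixels[]` array (0xAARRGGBB, index `y * width + x`). This is not the original sketch code: the class and method names, the darkness test (used instead of an exact black match so anti-aliased text edges still count), the stiffness/damping values and the sideways starting kick are all hypothetical. Each frame the particle accelerates towards its target and its velocity is then damped, so it overshoots and spirals in before settling.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of the sketch 7 idea in plain Java; not the original Processing code.
public class ParticleText {

    static class Particle {
        float x = 0, y = -100;  // every particle is released from (0, -100), above the screen
        float vx, vy;           // current velocity
        final float tx, ty;     // the pixel this particle is destined for

        Particle(float tx, float ty, float vx, float vy) {
            this.tx = tx;
            this.ty = ty;
            this.vx = vx;
            this.vy = vy;
        }

        // One frame: accelerate towards the target (spring), then damp the velocity (friction).
        // The spring overshoots before the damping wins, so the particle spirals in and settles.
        void update(float stiffness, float damping) {
            vx = (vx + (tx - x) * stiffness) * damping;
            vy = (vy + (ty - y) * stiffness) * damping;
            x += vx;
            y += vy;
        }
    }

    // True for dark pixels in a 0xAARRGGBB int (the format of Processing's pixels[] array).
    static boolean isDark(int argb) {
        int r = (argb >> 16) & 0xFF, g = (argb >> 8) & 0xFF, b = argb & 0xFF;
        return (r + g + b) / 3 < 128;
    }

    // Scan the buffer row by row (index = y * width + x) and add a particle per dark pixel.
    static List<Particle> particlesFromPixels(int[] pixels, int width, int height) {
        List<Particle> particles = new ArrayList<>();
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                if (isDark(pixels[y * width + x])) {
                    // Hypothetical sideways kick so the approach curves instead of running straight.
                    particles.add(new Particle(x, y, 3f, 0f));
                }
            }
        }
        return particles;
    }

    public static void main(String[] args) {
        int width = 4, height = 3;
        int[] pixels = new int[width * height];
        Arrays.fill(pixels, 0xFFFFFFFF);       // white background
        pixels[1 * width + 2] = 0xFF000000;    // a single black "text" pixel at (2, 1)

        List<Particle> particles = particlesFromPixels(pixels, width, height);
        for (int frame = 0; frame < 300; frame++) {
            for (Particle p : particles) p.update(0.05f, 0.9f);
        }
        Particle p = particles.get(0);
        System.out.printf("%d particle(s); first settled near (%.2f, %.2f)%n", particles.size(), p.x, p.y);
    }
}
```

A `damping` value closer to 1 means less friction per frame, so the particles swing around their spots for longer before they settle.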

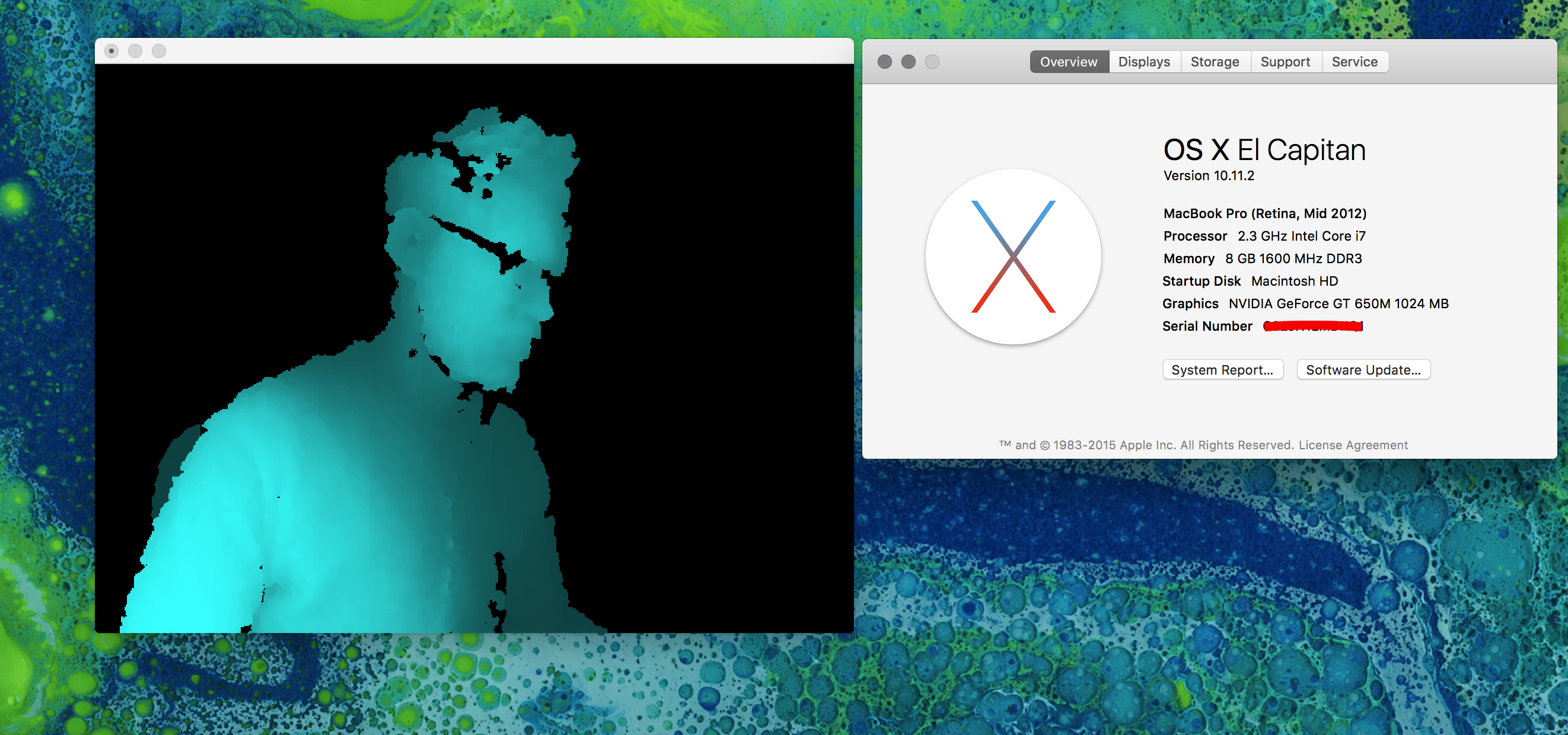

Exactly the same principle can be used on the raw depth image from a Kinect (sketch 8). While going over x and y, we check the raw depth of each pixel, and if it falls within a defined range (our minimum and maximum depth thresholds) we colour it red. Using the same technique as for the particle text, we add a particle to an array for every matching red pixel and launch them from 0,-100.

The trick is keeping the particles in check. When we're dealing with large blobs, such as in sketch 8, we have a skip variable so that we only look at every 3rd pixel, essentially lowering the resolution. Otherwise you'll find the CPU gets stressed and all your fans spin up. We also give particles a lifespan, and once their counter (in frames) reaches 0, they're marked to be removed the next time they exit the boundaries of the screen. Again, this helps keep the load in check.

There are all sorts of optimisations that could be done; these two sketches demonstrate depth layer tracking to emulate motion tracking in a not-really-efficient way. Patch 6 is the multi-blob sketch that I need to work with next.

At the moment this is all limited to using a single Kinect feed (i.e. just the depth feed), mainly because I'm on a Retina MacBook with USB3. The USB library used by SimpleOpenNI and OpenKinect has issues with isochronous packets and tends to crash out if you try using more than one feed. For example, to do a coloured particle cloud textured from the RGB camera image you'd need both the RGB and depth feeds, and on a USB3 MacBook this is likely to crash out rapidly. This is why a lot of other Kinect middleware out there can be flaky for some users (indeed, many of them are actually Processing sketches compiled out as standalone executables).

If you have an older MacBook WITHOUT USB3 (a non-Retina 17" MacBook Pro with an AMD GPU, for instance), then the sketches above could all have the RGB feed enabled and the particles textured from that, or we could pull in the user feed and handle skeleton alongside blob detection etc. A decent powered USB2 hub may get round the issues for Retina MacBook Pro users, but I haven't got one so can't confirm that.

-
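The depth-band scan, skip step and lifespan culling described in Marci's post can be sketched in plain Java. This assumes the raw depth frame is already available as an `int[]` (in Processing it would come from something like SimpleOpenNI's `depthMap()` or OpenKinect's `getRawDepth()`, whose units differ), with `minDepth`/`maxDepth` in those same units. The class names and the example threshold values are hypothetical; this is not the original sketch 8 code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Hypothetical plain-Java sketch of depth-band scanning with a skip step and particle lifespans.
public class DepthParticles {

    static class Particle {
        float x, y, vx, vy;
        int life;          // frames left before the particle expires (0 = never set to expire)
        boolean expired;   // once true, the particle is removed the next time it is off screen

        Particle(float x, float y, int life) {
            this.x = x;
            this.y = y;
            this.life = life;
        }
    }

    // Return (x, y) for every pixel on a skip-by-skip grid whose raw depth lies in [minDepth, maxDepth].
    // skip = 3 visits every 3rd column of every 3rd row.
    static List<int[]> pixelsInRange(int[] depth, int width, int height,
                                     int minDepth, int maxDepth, int skip) {
        List<int[]> hits = new ArrayList<>();
        for (int y = 0; y < height; y += skip) {
            for (int x = 0; x < width; x += skip) {
                int d = depth[y * width + x];
                if (d >= minDepth && d <= maxDepth) {
                    hits.add(new int[] {x, y});
                }
            }
        }
        return hits;
    }

    // Move every particle one frame, count down its lifespan, and cull expired ones once off screen.
    static void step(List<Particle> particles, int width, int height) {
        Iterator<Particle> it = particles.iterator();
        while (it.hasNext()) {
            Particle p = it.next();
            p.x += p.vx;
            p.y += p.vy;
            if (p.life > 0) {
                p.life--;
                if (p.life == 0) p.expired = true;
            }
            boolean offScreen = p.x < 0 || p.x >= width || p.y < 0 || p.y >= height;
            if (p.expired && offScreen) it.remove();
        }
    }

    public static void main(String[] args) {
        int width = 6, height = 3;
        int[] depth = new int[width * height];
        Arrays.fill(depth, 800);   // pretend everything is inside the depth band...
        depth[3] = 2000;           // ...except the pixel at (3, 0), which is too far away

        System.out.println(pixelsInRange(depth, width, height, 500, 1000, 1).size()); // prints 17
        System.out.println(pixelsInRange(depth, width, height, 500, 1000, 3).size()); // prints 1
    }
}
```

Because `skip` applies to both axes, a skip of 3 samples roughly one pixel in nine, which is where most of the CPU saving comes from.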

Thank you, Marci. It's going to take some time for me to get up to speed on this, but I am thankful for the path you've provided.

-

@Marci, thanks for all this; it's going to take me a while to go through these sketches...

But it's very close to something I want to do, and I feel like it's a really good hint for me. I didn't know about that USB3 issue, since I (now gladly) own an old MBP with USB2. Thanks again!

-

Hi Marci.

Thank you for sharing your tutorial with code examples. It's so informative, and it's something I've been trying to work out for a while. I'm having some stability issues: I sometimes get errors running Processing, which occasionally result in a crash. I wonder if it could have to do with versions. I'm curious as to which Kinect sensor you are using. Do you think that could have anything to do with it? Mine is the first generation. Thanks again! I appreciate it.

-

Me? Kinect for Xbox 360... model 1417 (x2). The only issue with these is when running over USB3 and using multiple feeds, as noted above.

-

@Marci

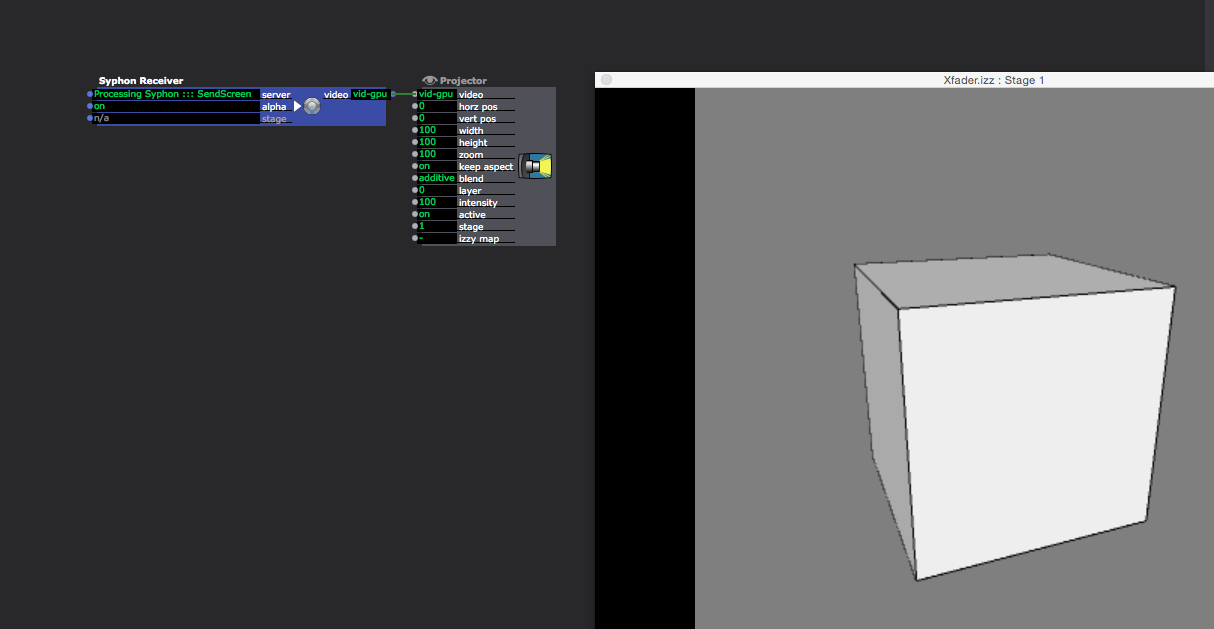

Finally got it working, but I can't get it into Isadora :( Processing does not appear in the Syphon Receiver.

-

@skulpture

Finally getting to motion tracking/Kinect etc. I looked into your tutorials today. I even downloaded Synapse, but all I get is a black preview image. Could it be that it's not working in Yosemite? Or is it the Max patch that is missing (also in your tutorial)? Thanks for any further information.

-

Hi. Yes, this is an old tutorial, I'm afraid. I will have another look into it for you as soon as I can.

-

"finally got it working but i can't get it into isadora :( Processing does not appear in the syphon receiver."

Hit Cmd-Shift-O in Processing and it should open the Examples browser. Expand Contributed Libraries, find the Syphon library's examples, run them and check that they show up in Isadora.

-

@skulpture Yes, after moving (more like jumping) in front of the Kinect, it woke up after all. I even managed to get the skeleton working, but when I went over to my laptop (meaning closer to the sensor) it really freaked out :( so I might end up using NI mate...

-

Anything based on SimpleOpenNI (including NI mate) takes an age to initialise the skeleton properly unless it can see your full height (feet to head) in shot. When you get too close, the skeleton's legs frequently start to go haywire: the skeleton doesn't like it when it can't work out where a limb is, so it just makes something up, which often freaks out whatever the Kinect is driving. That's one of many reasons I avoid using skeleton tracking and prefer depth blobs. Otherwise, you have to add your own checking routines between your middleware and output to remove limb markers, or to disable whatever a limb is driving when its coordinates exceed the screen / stage / world boundaries or just vanish.
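One way to do that checking, sketched in plain Java under stated assumptions: each joint's position is passed through a small gate before it reaches the output. Out-of-bounds or missing (null/NaN) positions are rejected, and after a short run of bad frames the gate returns `null` so the caller can disable whatever that limb drives. The class name, the 2D screen bounds and the grace period (holding the last good position for a few frames rather than dropping it immediately) are hypothetical choices, not part of SimpleOpenNI or NI mate.

```java
// Hypothetical guard between skeleton middleware and whatever a joint drives.
public class JointGate {
    private final float minX, maxX, minY, maxY;
    private final int graceFrames;   // how many bad frames to ride out on the last good position
    private int badFrames = 0;
    private float[] lastGood = null;

    public JointGate(float minX, float maxX, float minY, float maxY, int graceFrames) {
        this.minX = minX;
        this.maxX = maxX;
        this.minY = minY;
        this.maxY = maxY;
        this.graceFrames = graceFrames;
    }

    // Feed one frame's joint position {x, y}, or null if the middleware reported nothing.
    // Returns the position to send on, or null when whatever this joint drives should be disabled.
    public float[] filter(float[] joint) {
        boolean valid = joint != null && joint.length >= 2
                && !Float.isNaN(joint[0]) && !Float.isNaN(joint[1])
                && joint[0] >= minX && joint[0] <= maxX
                && joint[1] >= minY && joint[1] <= maxY;
        if (valid) {
            badFrames = 0;
            lastGood = new float[] {joint[0], joint[1]};
            return lastGood.clone();
        }
        badFrames++;
        if (badFrames <= graceFrames && lastGood != null) return lastGood.clone();
        return null;   // vanished or out of bounds for too long: disable the output
    }

    public static void main(String[] args) {
        JointGate leftFoot = new JointGate(0, 640, 0, 480, 2);
        // A good frame, an out-of-bounds frame, two missing frames, then a good frame again.
        float[][] frames = { {100, 400}, {100, 900}, null, null, {120, 410} };
        for (float[] f : frames) {
            float[] out = leftFoot.filter(f);
            System.out.println(out == null ? "disabled" : out[0] + ", " + out[1]);
        }
        // prints: 100.0, 400.0 / 100.0, 400.0 / 100.0, 400.0 / disabled / 120.0, 410.0 (one per line)
    }
}
```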