Motion Tracking through Isadora

-

Hi Marci.

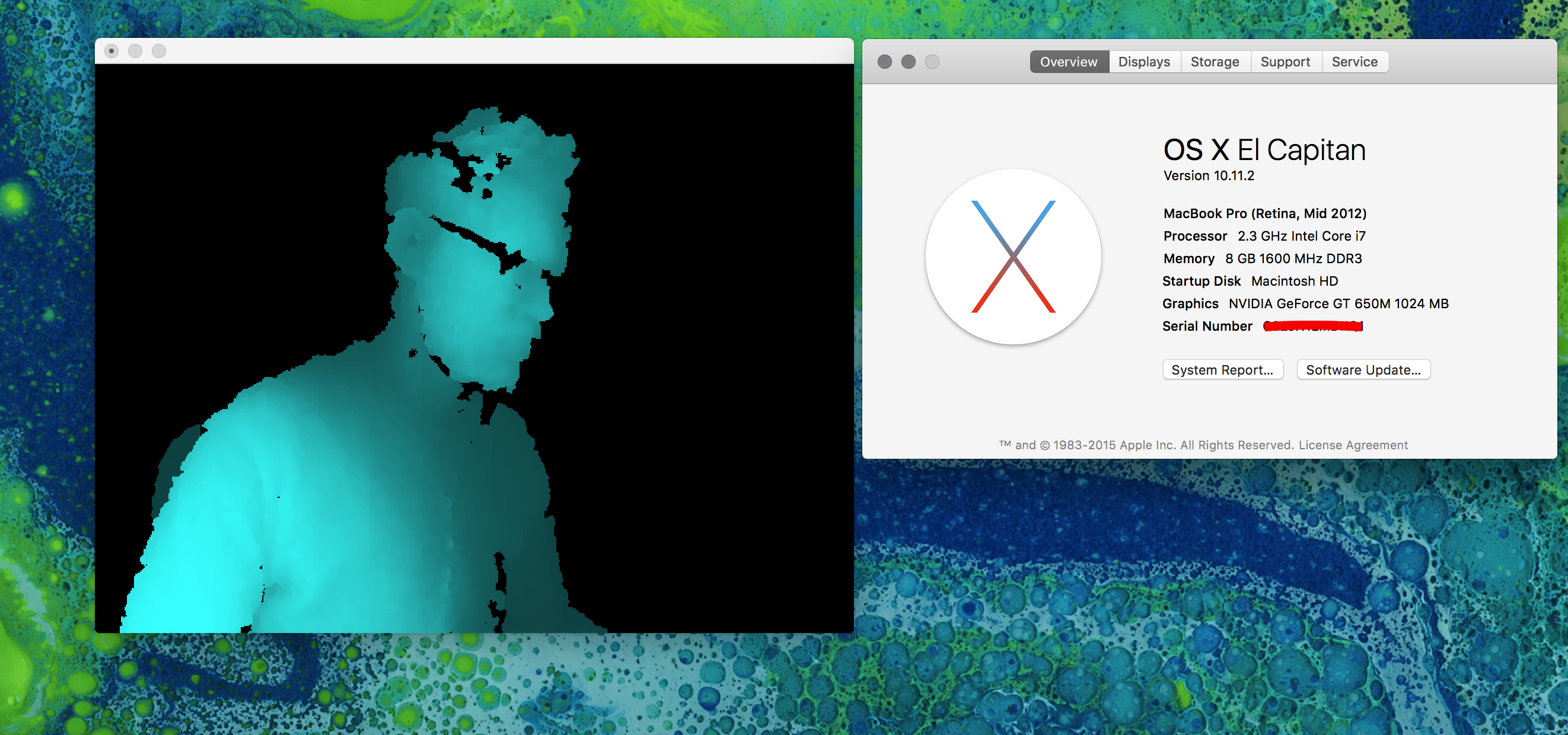

Thank you for sharing your tutorial with code examples. It's very informative and covers something I've been trying to work out for a while. I'm having some stability issues, and I sometimes get errors running Processing that occasionally result in a crash. I wonder if it could have to do with versions. I'm curious which Kinect sensor you are using. Do you think that could have anything to do with it? Mine is the first generation. Thanks again! I appreciate it.

-

Me? Kinect for Xbox 360, model 1417 (x2). The only issue with these is when running over USB 3 and using multiple feeds, as noted above.

-

@ Marci

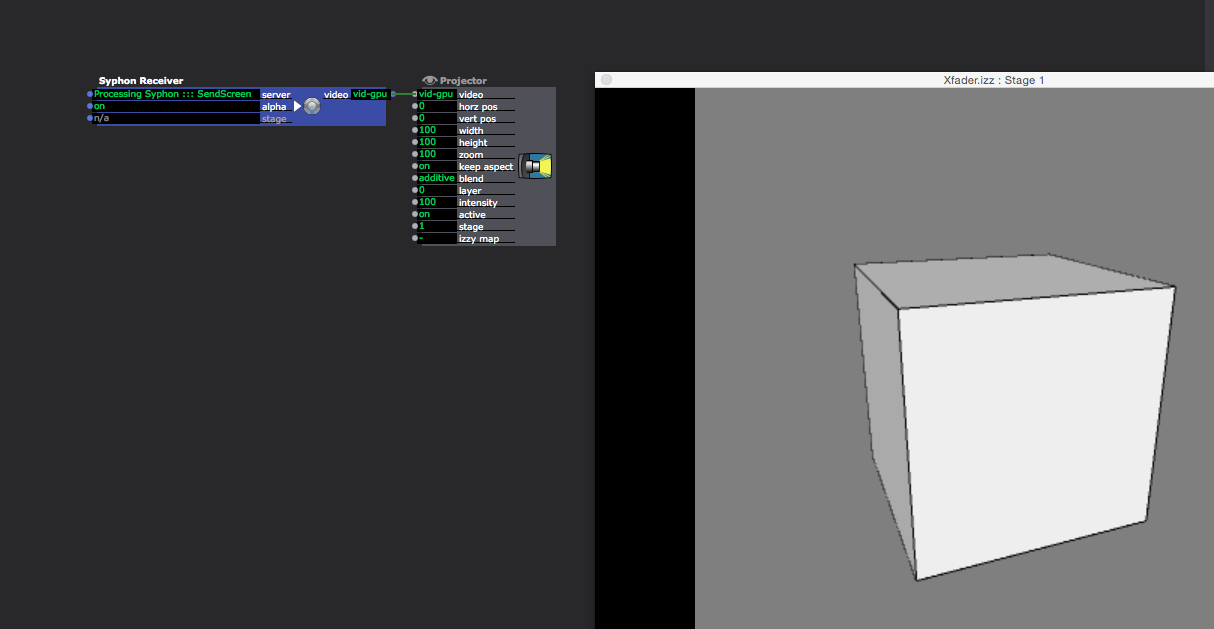

Finally got it working, but I can't get it into Isadora :( Processing does not appear in the Syphon Receiver.

-

@ skulpture

Finally getting to motion tracking / Kinect etc. I looked into your tutorials today. I even downloaded Synapse, but all I get is a black preview image. Could it be that it is not working in Yosemite? Or is it the Max patch that is missing (also in your tutorial)? Thanks for any further information. p.

-

Hi. Yes, this is an old tutorial, I'm afraid. I will have another look into it for you as soon as I can.

-

"finally got it working but i can't get it into isadora :( Processing does not appear in the syphon receiver."

Hit Cmd-Shift-O in Processing and it should open the Examples browser. Expand Contributed Libraries, find the Syphon library's examples, run them, and check that they show up in Isadora.

-

@skulpture Yes, after moving (more like jumping) in front of the Kinect, it woke up after all. I even managed to get the skeleton working, but when I went to my laptop (meaning closer to the sensor) it really freaked out :( so I might end up using NI mate...

-

Anything based on SimpleOpenNI (including NI mate) takes ages to initialise the skeleton properly unless it can see your full height (feet to head) in shot. When you get too close, the skeleton's legs frequently start to go haywire: the skeleton tracker doesn't like it when it can't work out where a limb is, so it just makes something up, which often freaks out whatever the Kinect is driving. That's one of many reasons I avoid using skeleton tracking and prefer depth blobs. Otherwise, you have to add your own checking routines between your middleware and output to remove limb markers, or disable whatever a limb is driving when its coordinates exceed the screen / stage / world boundaries or just vanish.
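A minimal sketch of such a checking routine, written in Python purely for illustration (it is not part of SimpleOpenNI, NI mate or Isadora). It assumes your middleware delivers one 3D position per joint per frame, or nothing when the joint is lost. The `Bounds` box, the joint names and the `hold_frames` grace period are all hypothetical values you would tune to your own stage.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class Bounds:
    """Hypothetical stage box, in whatever units your middleware reports."""
    lo: Point
    hi: Point

    def contains(self, p: Point) -> bool:
        # NaN coordinates fail every comparison, so they count as "outside".
        return all(l <= v <= h for v, l, h in zip(p, self.lo, self.hi))


class JointGate:
    """Drops joints that vanish or leave the bounds.

    The last good position is held for up to `hold_frames` bad frames
    (an assumed tuning knob), after which the joint is reported as None.
    """

    def __init__(self, bounds: Bounds, hold_frames: int = 3):
        self.bounds = bounds
        self.hold_frames = hold_frames
        self._last: Dict[str, Point] = {}
        self._misses: Dict[str, int] = {}

    def update(self, joint: str, pos: Optional[Point]) -> Optional[Point]:
        if pos is not None and self.bounds.contains(pos):
            # Valid reading: remember it and reset the miss counter.
            self._last[joint] = pos
            self._misses[joint] = 0
            return pos
        # Joint vanished or went out of bounds this frame.
        misses = self._misses.get(joint, 0) + 1
        self._misses[joint] = misses
        if joint in self._last and misses <= self.hold_frames:
            return self._last[joint]  # brief dropout: hold last good value
        self._last.pop(joint, None)
        return None  # caller should disable whatever this joint drives
```

Call `update()` once per joint per frame before forwarding the value on; a `None` result is the cue to mute whatever that joint drives. Holding the last good position for a couple of frames is a design choice to ride out single-frame dropouts; set `hold_frames=0` to drop a joint the moment it goes bad.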