Audio Features for Isadora: What Do You Want?

-

hi,

perhaps the Sound Player and Movie Player could have an option to display the audio waveform in the progress bar. That way the module could be expanded to see more waveform detail and create more accurate loops etc.

-

very good idea! And timecode control, start, stop, pause, jump, loop function, input and output routing, output devices, sound output of videos for manipulation etc

greetings Matthias

-

Yes! yes! yes!

-

So for all of you passionate about, as Juriaan put it, "making audio a first class citizen": as I said, implementing any kind of plugin structure where audio is routed through the program is a major undertaking. Are all of you saying you'd prefer to not have the new audio routing features (which I believe we can give you in relatively short order) but instead that you'd prefer to wait until the fall of 2020 to get fully patchable audio added to Isadora?

On macOS, adding this kind of functionality would require far less effort because Core Audio gives it to you as what is essentially a built-in feature. I have searched for an open-source, ASIO-based VST host for Windows that emulates the behavior of what macOS offers -- but I have never come across one. That means we would have to build the whole thing from nothing, and then test it and make sure it is reliable in mission-critical situations. This effort would pretty much consume the resources of the company, and aside from bug fixes, I don't think you'd see many other major improvements if we were to take on such a project.

Why do I say fall 2020? I've become far more cautious about estimating how long it takes for us to do something this big. I am guessing it would take roughly four to five months to implement the core features we need. I would then add two to three months of internal testing plus at least two more months of beta testing. This comes out to a development time of approximately 9 to 10 months.

Thoughts?

Best Wishes,

Mark

P.S. I am not saying I'm going to take this on. There is a constellation of concerns that will determine what features we add when. I am trying to:

1) hear what you want most, and

2) give you a real-world time frame for accomplishing the features you're requesting.

-

I prefer to have the new audio routing features as soon as possible and that you keep time to correct bugs or optimise existing actors.

Thanks

best

Jean-François

-

Hi,

Perhaps you could release the OpenNi tracker suite of plugins, push for increased sales based on its awesomeness, fill the coffers/get cashed up, and outsource the audio module architecture for a major release in 12-18 months as ‘Isadora 4 Audio’. All the while, in the short term, providing a multichannel MoviePlayer for PC.

Sounds like a plan - but ?

-

@mark For me VST is something that can wait. I would really like audio treated the same way as any other signal: node-based routing, connecting outputs to inputs for all audio channels (audio from videos, audio inputs and audio playback), and having audio outputs as an actor. But I understand this is a big task and will need to be cut into chunks and worked on progressively. I am sure you have checked out all of these open-source projects, but JACK, OpenAL, dr_wav, dr_mp3, dr_flac and stb_vorbis are some useful libraries I have come across, though my requirements for licensing are much less strict than yours.

I am not sure about timing. IMHO it is better to wait for a complete interface (if the node-based approach is what will slow you down) rather than expand on the current audio routing setup or make another intermediate interface.

-

@bonemap said:

Perhaps you could release the OpenNi tracker suite of plugins push for increased sales based on its awesomeness

I think we're quite close to being ready for public beta on those plugins and we will see if it affects sales. But, frankly, no theater designer who does your standard sort of production is probably going to care in the slightest about real-time skeleton tracking -- though I know supporting these cameras is definitely going to help us stand out.

Best Wishes,

Mark

-

@mark said:

no theater designer who does your standard sort of production is probably going to care in the slightest about real-time skeleton tracking

When you say it like that, it sounds like a really dull market you are pushing into. Let’s hope there is an excited and expanding market for your vision. For one thing, the power of OpenNi is not just skeleton tracking; there are many awesome techniques possible with the depth sensing capabilities. All kinds of incredible scenographic projection masks are going to be possible with the extended depth range of the new cameras. You just need to own it and get it out there for designers and artists to explore the potential. I really wish you the best for your efforts.

-

Great to hear audio thoughts are on the horizon!

Seconding @jhoepffner that the most important function for me is stable input / output routing.

seconding @Juriaan on being able to treat audio as another signal type -- esp. with regards to being able to easily loop audio from a video output back around, and basic analysis.

For both of these use cases better support for Dante and NDI would be helpful. Dante is becoming my go-to replacement for Soundflower, but it's not really composer friendly.

seconding @bonemap on the desire for more reliable multi channel discrete audio channels from the movie player.

seconding @michel on the usefulness of 24 bit audio file playback. Most files I get these days are 24 bit, and while it's easy to recompress them -- I've twice experienced sound designer demands for an entire separate machine, solely to play a 24 bit aif file because of concerns over the 16 bit reduction...

VST plugins: While it would be lots of fun for small projects to be able to use VST plugins inside Izzy and do more analysis without going to Max, realistically for major projects it would be hard to get past the need for Max and Ableton running on a separate machine.

Ian

-

Thank you for reinforcing the points that mean the most to you. For others that may not yet have commented, giving a +1 as Ian just did is useful for me.

But one technical point for all of you considering this to understand:

There is no real difference between adding 'audio' as a signal path and a structure that supports VST plugins. As soon as 'audio' is a signal, you'll need an audio version of the Projector actor -- i.e., an actor that pulls the audio data from earlier audio providers like the Movie Player or Sound Player so that it can be sent to the audio output device. Once you have this structure, inserting an actor between those two -- whether it is to do sound frequency analysis or audio effects processing -- is not really a significant effort.

One source of complexity comes in when you've got multiple instances of our imaginary "Audio Projector" actor. Consider the situation where one is receiving audio at 48K and another at 44.1K, which means that you've got to start doing re-sampling, and you've also got to manage audio volumes to ensure the output doesn't get overloaded, etc. There is also the issue of channels: what happens when you connect an eight channel audio stream to a two channel one? Do you just drop the extra six? Do you mix the eight channels down to two?

But mostly, the biggest issue with these kinds of "audio graphs" -- going from audio source actor to audio effect to audio output actor -- is that they need to function with super precise timing. An "Audio Projector" actor is going to be asking for blocks of something like 256 samples (roughly a 5 millisecond chunk at 48K) at a rate of nearly two hundred times per second. If you are late by even a fraction of a millisecond, the buffer doesn't show up at the right time and you've got a big fat click.

That's my big hesitation on this front; getting such a system to work perfectly, so that not even one of those buffers is missed, is something that will require serious effort and considerable testing.
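To put rough numbers on that deadline, here is a minimal Python sketch of the arithmetic. The function name `buffer_timing` is made up for illustration, and the 256-sample block size is only the "something like" figure from above, not a confirmed Isadora setting.

```python
from typing import Tuple


def buffer_timing(block_size: int, sample_rate: int) -> Tuple[float, float]:
    """Return (block duration in milliseconds, blocks requested per second)."""
    duration_ms = 1000.0 * block_size / sample_rate
    blocks_per_second = sample_rate / block_size
    return duration_ms, blocks_per_second


# Compare the two common sample rates mentioned in the thread.
for rate in (44_100, 48_000):
    ms, per_second = buffer_timing(256, rate)
    print(f"{rate} Hz: {ms:.2f} ms per block, {per_second:.1f} blocks/s")
```

At 48K a 256-sample block lasts about 5.33 ms, so the output asks for one 187.5 times per second, and every one of those requests has to be answered on time.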

Best Wishes,

Mark

-

@mark I'm not sure if it makes a difference, as it seems you have evaluated the work involved already. But a fixed sample rate per patch seems like a fine limitation, with a pop-up explaining why. This could solve some of the other issues you mentioned. To deal with summing mixers there are a few approaches, from a very basic "divide the amplitude by the number of inputs" to even just letting overloads overload. How does this work now when you have multiple scenes with audio at full volume and activate them at once? Whatever behaviour you have implemented there would make sense to transfer.

As for patching, single or paired audio streams per connection would answer the "what if there was a 6 channel movie and a stereo output" question; taking the first tracks of a multi-track output is also logical.
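The approaches above can be sketched in a few lines of Python. This is only an illustration with hypothetical function names, working on plain lists of float samples; it says nothing about how Isadora mixes audio today.

```python
def mix_average(streams):
    """Sum equal-length mono streams, dividing by the number of inputs."""
    if not streams:
        return []
    count = len(streams)
    return [sum(samples) / count for samples in zip(*streams)]


def mix_clip(streams, limit=1.0):
    """Sum streams at full level and hard-clip the result to [-limit, limit]."""
    return [max(-limit, min(limit, sum(samples))) for samples in zip(*streams)]


def take_first_channels(frames, out_channels):
    """Keep only the first `out_channels` channels of each multichannel frame."""
    return [frame[:out_channels] for frame in frames]


print(mix_average([[1.0, 0.5], [0.0, -0.5]]))
print(mix_clip([[0.8, -0.9], [0.7, -0.6]]))
print(take_first_channels([[1, 2, 3, 4, 5, 6]], 2))
```

Averaging never overloads but gets quieter with every added input; clipping keeps full level and simply lets overloads overload.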

Having said that as much as I would like to see this, it does seem like quite a rabbit hole to go down. As well as our proposals, would you be willing to share what you have thought of doing in the intermediate term for audio?

-

Hi all! First of all, thanks to @mark for all his work and dedication, his punctual and obsessive work. Thank you!

Secondly, I think we should pay attention to Mark's knowledge, his experience and his instincts. I have the feeling that insisting on the ASIO question may not be the best way, but that is just my impression. Perhaps first move forward with what Mark knows will take less time to implement, instead of delaying the update for another 2 months.

What I do think would be great is to first solve the issue of depth cameras and skeleton tracking. It is also a highly anticipated announcement, and has been for some time now. And I think it will be another step in the positioning of Isadora among the rest of the software.

A big hug to all!

Maxi Wille - Isadora Latin Network. RIL

-

Visual/graph based equalizer

The ability for MP3s and other compressed audio file types to sit in the audio bin along with .WAV and .AIFF

-

First of all, before we start this I would like to say that we love the fact that you include us in this talk and make us part of the design phase of how audio should flow in Izzy.

I get that we aren't easy and that our wishlist might be a big undertaking, so like @fred said I would also love to hear what your plans are with audio and how you envision it in the program in short-term and long-term (so let's say in the coming version of Izzy and Izzy in 1-2 years from now on)

Regarding your questions

Q: Resampling issue

A: I would be fine with setting the program to a certain sample rate so that all files have to be in that ballpark. It is not strange for us as Izzy programmers to do this already with video to ensure that it has great playback for the situation that is required.

Q: Overloading

A: If you overload a channel then honestly it is your issue. If you add a quick toggle in the HUD of the program to allow us to silence all channels, it will be fine, together with a little volume slider that multiplies and acts as a grandmaster. (And yes, this can be global; it doesn't have to be scene-based.)

Q: Channels

A: Just drop the extra channels; if you try to connect 8 streams of audio to a 2 channel interface you will lose the extra 6. Just limit the amount of 'Virtual Audio renders' to what the program can handle. (Just as an example, let's say we have 16 channels in Izzy through which you can output sound: render the sound to the 'containers' and only output the sound if there is a physical device connected to that channel.)

Q: Timing

A: Roger that, I agree that we can't have the super-precise timing of the audio channels on the same thread as the video renderer / the UI thread. So that means we need a separate thread for that. (Not sure if this is feasible, just sharing ideas here.)

A few things that I would like to point out.

As an artist, I'm fine with simple audio plugins / routing options inside the 'logic' of the program. So, like you noticed before, I'm a big fan of just connecting the signal to a 'Speaker' actor to get my sound to the channel, and telling Isadora that Channel #1 should go to Output #2 on my soundcard. It also gives me a lot of options in the future for my job as an installation artist. (And I know that some features that you are working on now, for example the Kinect actors / OpenNi integration, are more for that 'new' market (we are here ;) than for the normal 'scenography' student or the theater maker that is afraid of a beamer.)

As a teacher, it makes perfect sense for me to explain how audio flows if it follows the same pattern as video.

As a community member, I see a future, if we now lay the groundwork for this to happen, in which we can share some amazing patches with the community / fellow artists and create powerful patches while still having the easy flow of Isadora. And that is a huge advantage if you compare it to the steep learning curve of, for example, Max MSP. (And yes, we will have issues where the answer might be: go work with Max MSP to get this done and not with Isadora, and here are some amazing tools to get you started / get the data back into Isadora and work with it.)

-

Dear Community and @Fred, @Juriaan, @RIL, @bonemap @ian @jfg @kirschkematthias @tomthebom @kdobbe @Michel @DusX @Woland @eight @mark_m @anibalzorrilla @knowtheatre @soniccanvas @jhoepffner @Maximortal @deflost @Bootzilla

Thank you all for your contributions to this thread so far. We are listening.

But, as I said at the outset, making audio a "first class citizen" in Isadora (i.e., audio travels through patchcords) for Isadora 3.1 is not something we will take on, because we want to get 3.1 out in the next few months. Instead, such a feature is what I would consider to be an Isadora 4 feature. Now, you should not assume that making a major release (i.e., from 3.x to 4) will take years. But what I can say is this: the effort to program such a feature, test it and ensure it will be reliable, and to deal with the subsequent tech support for this feature will be costly; it means we need to release it as Isadora 4 so that we can ask those with perpetual licenses to pay an upgrade fee.

With that in mind, here is what we are proposing for Isadora 3.1.

Isadora 3.1: Sound Routing For all Movie Players and Sound Players

1) You will have an 'audio device' input that allows you to choose the hardware or virtual output (if possible) to which the audio is sent.

2) You will have an 'audio routing' input that allows you to route the source channels to whatever output on the audio device you wish.

3) While it is yet to be proven I can do this, if it is feasible, you will be able to control the volume of every source channel being sent to an audio output.

4) The audio output will be delivered to the hardware using the default audio system for the given platform -- that means HAL (hardware abstraction layer) on macOS with AVFoundation, and WASAPI (Windows Audio Session API) on Windows.

For the movie player, I already have routing working under AVFoundation on macOS. I am close to having a working version using Windows Media Foundation on Windows.

For the sound player, I have modified the sound library we use (SoLoud) to allow this routing, and my initial tests show it works. That covers both macOS and Windows.

In other words, I believe we are not terribly far away from having these features in a working beta.

That said, I want to dig in to point #2: the 'audio routing' will be represented by a string that indicates the source channel to output channel routing. Right now, I imagine something in the form:

1 > 2; 2 > 5; 4 > 6

The first number represents the source channel in the movie or sound file. The second number, after the greater than sign, indicates the destination audio output. So the above string would send source channel 1 to output 2, source channel 2 to output 5, source channel 4 to output 6. I could also imagine a notation like this:

1-4 > 5

where source channels 1 through 4 would be assigned sequentially to output channels 5, 6, 7 and 8.

The reason to use this string representation is interactive control: you could generate such strings on the fly or by using a "Trigger Text" actor and send them into this input to control the routing.
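To make the proposed notation concrete, here is a minimal Python sketch of how such a routing string could be parsed. The function name `parse_routing` and the error handling are assumptions for illustration only; this is not Isadora's implementation, and the final syntax may differ.

```python
def parse_routing(text):
    """Parse '1 > 2; 2 > 5' or '1-4 > 5' into a list of (source, output) pairs."""
    routes = []
    for entry in text.split(";"):
        entry = entry.strip()
        if not entry:
            continue  # tolerate a trailing semicolon
        source_part, separator, output_part = entry.partition(">")
        if not separator:
            raise ValueError(f"missing '>' in routing entry {entry!r}")
        first_output = int(output_part)
        if "-" in source_part:
            low, high = (int(piece) for piece in source_part.split("-"))
        else:
            low = high = int(source_part)
        if low > high:
            raise ValueError(f"descending source range in {entry!r}")
        # A range of sources is assigned to sequential outputs.
        for offset, source in enumerate(range(low, high + 1)):
            routes.append((source, first_output + offset))
    return routes


print(parse_routing("1 > 2; 2 > 5; 4 > 6"))
print(parse_routing("1-4 > 5"))
```

Because the result is just a list of pairs, a string generated on the fly can list the same source more than once to reach several outputs.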

In addition, I can imagine a volume parameter along with these, assuming it is actually possible to control the volume -- something I have not yet proved on both macOS and Windows.

1 > 2 @ 50%

or

1 > 2 @ -3db

which would route source channel 1 to output 2 and have a volume of 50% (in the first example) or a volume reduction of 3 dB (in the second example).
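A minimal Python sketch of reading that optional volume suffix follows. The names `parse_volume` and `split_route_and_volume` are hypothetical, and treating a percentage as a linear amplitude factor is an assumption; the proposal does not say how percentages would map to gain.

```python
import re

# Accepts forms like "50%", "-3db" or "-3 dB" (case-insensitive unit).
_VOLUME = re.compile(r"^\s*(-?\d+(?:\.\d+)?)\s*(%|db)\s*$", re.IGNORECASE)


def parse_volume(text):
    """Convert a volume like '50%' or '-3db' to a linear gain factor."""
    match = _VOLUME.match(text)
    if match is None:
        raise ValueError(f"unrecognised volume {text!r}")
    value = float(match.group(1))
    if match.group(2) == "%":
        return value / 100.0        # assumption: percent is linear amplitude
    return 10.0 ** (value / 20.0)   # standard dB-to-amplitude conversion


def split_route_and_volume(entry):
    """Split '1 > 2 @ 50%' into ('1 > 2', 0.5); the gain defaults to 1.0."""
    route, separator, volume = entry.partition("@")
    gain = parse_volume(volume) if separator else 1.0
    return route.strip(), gain


print(split_route_and_volume("1 > 2 @ 50%"))
print(split_route_and_volume("1 > 2 @ -3db"))
```

Under that assumption the two examples are not equivalent: 50% halves the amplitude (about -6 dB), while -3 dB is a gain of roughly 0.71.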

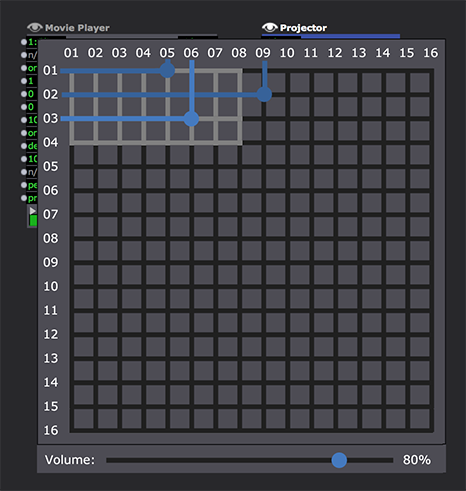

Of course, no one wants to write out these values as a text string to define a fixed routing. So if you click on this input, you would be presented with something like this:

(Note: this is just a rough drawing I whipped up as an example!)

The source channels are listed along the left edge, and the output channels are listed along the top. To route a source channel to an output channel, you would simply click on the intersection between the two. Again, if it's possible, you could then set the volume for the currently selected routing at the bottom.

Note that the lighter grey lines would indicate the valid source channels for the currently playing file as well as the valid output channels for the currently selected device. (In the example above, there are four channels in the source media, and eight channels on the output device.)

Because media files or (in theory) the output device can be chosen interactively, one might have more or fewer channels than were present when the routing was made. If a routing is made that is invalid for the currently playing media or current audio output device, it would be ignored. This would be the case in the example above, where channel 2 is routed to channel 9 of the output device; as indicated by the darkened lines, the audio output device only has eight outputs. So the routing from source channel 2 to output 9 would be ignored.
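The "ignore invalid routings" rule could look something like this in Python. It is a sketch under stated assumptions: the function name `valid_routes` is made up, routings are (source, output) pairs with 1-based channel numbers, and apart from the 2-to-9 routing mentioned above the example routes are hypothetical.

```python
def valid_routes(routes, source_channels, output_channels):
    """Keep only routings that exist on both the current media and the device."""
    return [
        (source, output)
        for source, output in routes
        if 1 <= source <= source_channels and 1 <= output <= output_channels
    ]


# Four source channels, eight device outputs, as in the example above.
routes = [(1, 1), (2, 9), (3, 4)]
print(valid_routes(routes, source_channels=4, output_channels=8))
```

Filtering at playback time rather than editing the stored routing means the 2-to-9 entry would become active again if a device with more outputs were selected.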

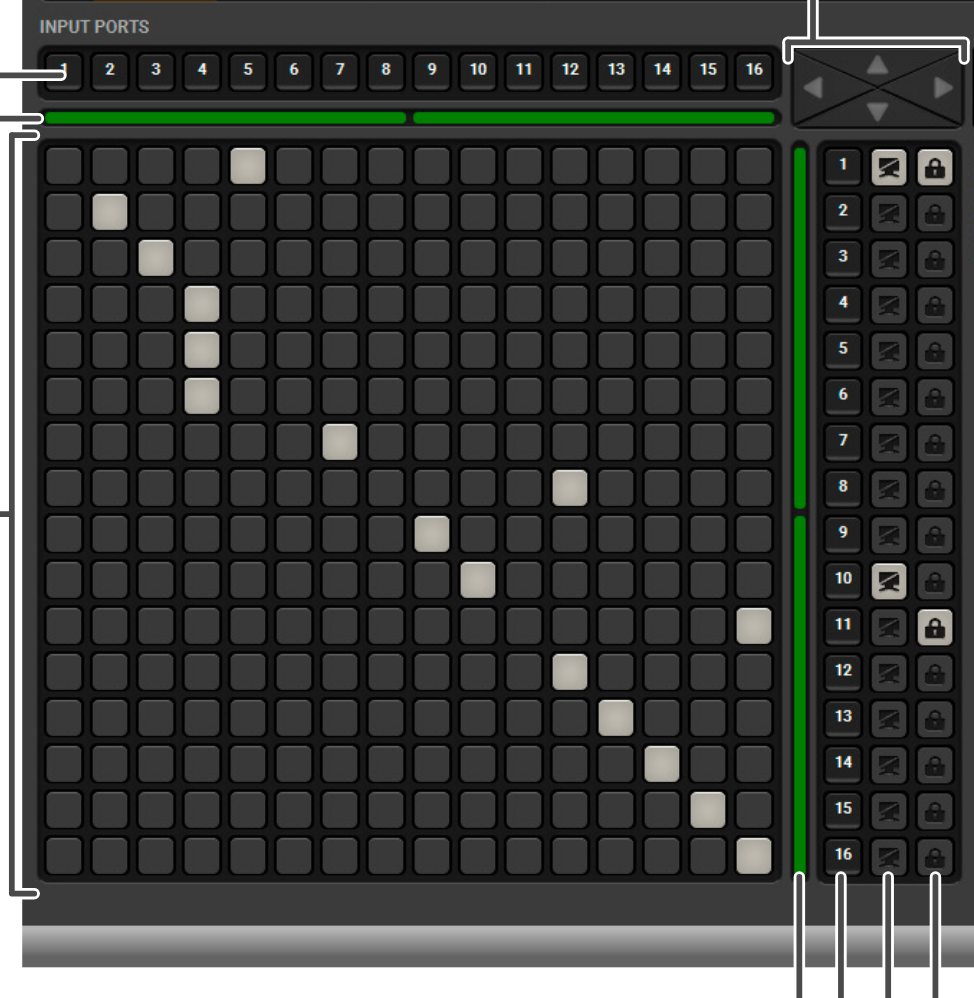

Isadora 3.1: Audio Matrix actor

We would also offer a new Audio Matrix actor (not sure of the name yet, but for now we'll call it that). It would essentially give you the same interface shown above, allow you to click the actor itself to change the routing or volume, and offer multiple inputs that would allow you to interactively change the volume of the routings you've created. The output would be a routing string as described above. You would then feed that output into the 'audio routing' input of the Sound Player or Movie Player.

Isadora 3.1: Sound Frequency Bands for all Movie Players and Sound Players

While I know you'd love to be able to do this in a separate module, for the moment I would simply offer this.

1) We get the existing 'freq band' output working for all flavors of the movie player on macOS and Windows.

2) We would add a matching feature to the Sound Player.

In that way, you could get the frequency band outputs we had in QuickTime in previous versions and you would not need to route the sound output to Soundflower and then back in to analyze the sound.

Isadora 3.1: Sound Player Interface Matches Movie Player

Several of you mentioned this. I can safely say that we can implement the 'hit loop end' and 'position' outputs, as well as offering the same controls we added to the Movie Player in Isadora 3.

Isadora 3.1: Option to Express Volumes as dB instead of percentages

This is just a numeric conversion from one form to another, so I feel I can promise this for 3.1.
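For reference, a minimal Python sketch of that conversion, assuming the percentage is a linear amplitude factor (100% is unity gain). That mapping is an assumption, as are the function names; Isadora's volume curve may well be different.

```python
import math


def percent_to_db(percent):
    """Convert a linear amplitude percentage to decibels (0% maps to -infinity)."""
    if percent <= 0:
        return float("-inf")
    return 20.0 * math.log10(percent / 100.0)


def db_to_percent(db):
    """Convert decibels back to a linear amplitude percentage."""
    return 100.0 * 10.0 ** (db / 20.0)


print(round(percent_to_db(50), 2))
print(round(db_to_percent(-6.0), 1))
```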

Isadora 3.1: Sound Player reads 24 bit Files

Yes.

Isadora 3.1: Signal Generator

A few of you brought up the idea of a "signal generator" system to check the configuration of the speakers. I don't see this as a big deal and I feel we can promise it for Isadora 3.1. I would say that you would be able to choose a) a tone, b) a voice saying "one one one..." for audio output 1, or "two two two..." for audio output 2, etc., or c) a combination of both.

Now I would also discuss a few "maybe" features that seem doable to me, but I do not feel confident yet to say how quickly they can be accomplished. If they are doable and turn out to be easy and not prone to make Isadora crash, maybe we could get them into 3.1. However, these might be far more difficult than I imagine, in which case they would have to be moved to another interim release like Isadora 3.2. For purposes of discussing them, I will call them Isadora 3.2 features and list them in terms of what I see as their importance.

Isadora 3.2: ASIO output from the Movie Player on Windows

For the Movie Player, it is conceivable to write a Windows Media Foundation "transform" (i.e., module) that would accept raw audio samples and send them to ASIO instead of WASAPI. I have no real idea about the difficulty in achieving this, but in theory, it seems entirely doable.

Isadora 3.2: ASIO output from the Sound Player on Windows

For the Sound Player, we use an open source audio system called SoLoud, which @jhoepffner has criticized but which I still think is a solid option for Isadora, especially because the source code is clear and compact enough that I can easily modify it. It offers a number of "backends" including HAL on macOS, and Windows Multimedia and WASAPI on Windows. These backends are extensible, meaning you can "roll your own" and so add a new backend to send audio to ASIO. I would think this is quite doable, but again, I need to really dig in to this to find out if I'm right or not.

Isadora 3.2: Global Volume and Mute in the Main User Interface

This should not be too difficult to do and I feel 90% sure we can have it in Isadora 3.1. But until I think it through more carefully, I'm listing it here instead of in the "promised" features above.

Isadora 3.2: A "vamp" loop for the Sound Player

Adding the possibility of a looping system where the sound plays from the start of the sound to Point A, then loops indefinitely from Point A to Point B, and finally plays from Point B to the end of the file when triggered is not too difficult to imagine... it's just a variation on the existing looping structure. I can see the usefulness of this and feel like it should be rather easy to do for 3.1, but again, no promises yet.
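The playhead logic of such a vamp can be sketched in Python as below. The class `VampLoop` and its methods are hypothetical, positions are in sample frames, and one design choice is assumed: after the trigger, the current pass continues to Point B and then runs on to the end, rather than jumping straight to Point B.

```python
class VampLoop:
    """Play 0..A once, repeat A..B until released, then play through to the end."""

    def __init__(self, length, point_a, point_b):
        if not 0 <= point_a < point_b <= length:
            raise ValueError("need 0 <= point_a < point_b <= length")
        self.length = length
        self.point_a = point_a
        self.point_b = point_b
        self.position = 0
        self.released = False

    def release(self):
        """Trigger the exit: stop wrapping at Point B."""
        self.released = True

    def advance(self, frames):
        """Move the playhead forward by `frames` and return the new position."""
        self.position += frames
        if not self.released and self.position >= self.point_b:
            loop_length = self.point_b - self.point_a
            overshoot = self.position - self.point_b
            self.position = self.point_a + overshoot % loop_length
        self.position = min(self.position, self.length)
        return self.position


loop = VampLoop(length=100, point_a=20, point_b=60)
print(loop.advance(50), loop.advance(20))  # second call wraps past Point B
loop.release()
print(loop.advance(40), loop.advance(40))  # now runs on to the end
```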

Isadora 3.2: Audio Delay Function

@Michel suggested adding an audio delay to the Movie Player and Sound Player. This might be possible, but such a feature starts to move into the realm of audio as a first class citizen. I cannot say more right now than that I see the reason why this would be important, and I'll keep it in mind.

Isadora 3.2: VU Meter for the Control Panel

This is really just a simple re-write of the Slider Control. It seems doable to me, but I won't promise it; I wouldn't want to delay 3.1 because of this.

So, this is what I'm proposing for an Isadora 3.1 release. Please read this over and offer your reactions.

Best Wishes,

Mark

-

hello,

1mil+ thanks to mark and the whole team for having the courage to explain the difficulties, and a little bit of your company structure, to the people.

we are happy about all the audio improvements you promise and all of those you will make in the future. our biggest problem is not having to use other software for audio in our productions; the main thing is that our workshop participants, and the people who see how far our set is able to go, are always a little bit sad about the fact that they have to buy another piece of software to route and minimally manipulate sound. everything else they dream of doing as interactive art newbies, isadora is able to do, and absolutely great at it.

once again, thank you for getting us on the development screen.

r.h.

-

Dear Mark and the crew,

it sounds fantastic. I cannot wait to hear them

thank you very much

Jean-François

-

I silently followed this thread, as I have always been waiting for extended audio features to give Isadora a more complete feeling, but I haven't had a specific feature request.

This all sounds very promising!

But I now just wanted to extend this a little bit, even if I risk telling you something you already have in mind because it is too obvious. Better safe than sorry!

For the audio routings you mention routing multiple inputs to outputs in a 'sequential' manner. But shouldn't it be even more important to have the possibility to route one source to several outputs?

This is especially important to keep in mind when 'building' the graphical matrix 'cross point view', as it needs the possibility to put multiple crosspoints in one output row.

I guess this is the reason why most remote control software for video matrix switchers that visualizes cross points (like Lightware, see below) shows inputs and outputs the other way around (inputs on top, outputs on the right).

As I said, maybe you have this in mind already, and it might be just a small thing, but I thought it would be worth mentioning.

kindly

Dill