3D objects in Isadora

-

Hi,

This post is about using 3D Player objects in Isadora, particularly the quirks associated with the UV texture map input and the 3D lighting actors. A UV texture image must be assigned to the 3D model in a 3D software program before it is used in Isadora, otherwise you cannot replace the texture using the 3D Player 'texture map' input (see the Isadora manual for more info). The texture image file name assigned in the 3D modelling software (I am using Blender) needs to be short, as longer names appear not to be supported and cause an error message in Isadora. The 3ds format supports 65000 vertices; I have had 3D models of 45000 vertices working well in Isadora 2.2.2.

I have been working with Kinect skeleton tracking through Ni Mate and OSC, together with the 3D actors: 3D Player, 3D Light Orientation and 3D Renderer (update: the Virtual Stage actor has replaced the '3D Renderer' in version V2.x).

The workflow has been: work with simple objects (3D rock crystal forms) in the modelling software Blender (http://www.blender.org/about/), then apply a 'material' and a 'texture' before exporting single objects in .3ds format for use in Isadora. The 'texture', a bitmap image (.jpg) assigned to the object in Blender, needs to be saved to the same folder as the exported .3ds file, or Isadora will not be able to link the two files and will show an error message. The file name needs to be kept very short. A couple of times Isadora produced an error message asking for a truncated file name. For example, a texture image file I created in Photoshop called 'texture_01.jpg' generated an error message asking for the file 'texture_01jp' or similar, and there is no way to relink the file within Isadora. Keeping the image file name very short helped to avoid the error.

It is useful that Isadora can override the texture image file linked to the .3ds object in the 3D software. When Isadora recognises an externally linked 'texture' image (the one assigned in the 3D software before import to Isadora), the 3D Player's 'texture map' input can replace it, so an image file or a video stream from another actor becomes the new texture on the object's surface. You can also use transparency in a .psd image file. I created image files using the gradient tools in Photoshop, giving a gradient opacity with feathered transparent areas. This translated to a translucent, see-through 3D Player object in Isadora.

If the .3ds object does not have a 'texture' assigned in the 3D editing software it will display with a base material in Isadora, but it will not display the video and images assigned through the 3D Player 'texture map' input.
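
For anyone who wants to check a texture before exporting, here is a rough Python sketch of the two conditions described above: a short file name and a texture sitting in the same folder as the .3ds file. The 8.3 limit (eight characters, a dot, three-character extension) is an assumption based on how the 3ds format is commonly described, not something confirmed in the Isadora manual, and `check_texture` is a hypothetical helper name.

```python
from pathlib import Path

# Assumption: texture names inside a .3ds file follow the DOS 8.3 pattern,
# i.e. at most 8 characters, a dot, and an extension of at most 3 characters.
MAX_STEM = 8
MAX_EXT = 3


def check_texture(model_path, texture_name):
    """Return a list of problems that may stop Isadora linking the texture."""
    problems = []
    texture = Path(texture_name)
    stem = texture.stem
    ext = texture.suffix.lstrip(".")
    # Condition 1: the file name must be short enough to survive export.
    if len(stem) > MAX_STEM or len(ext) > MAX_EXT:
        problems.append(f"'{texture_name}' is longer than an 8.3 file name")
    # Condition 2: the texture must sit next to the exported .3ds file.
    if not (Path(model_path).parent / texture.name).is_file():
        problems.append(f"'{texture.name}' is not in the same folder as the model")
    return problems
```

Calling `check_texture("crystals/rock.3ds", "texture_01.jpg")` would flag the long name, while a name such as `tex01.jpg` saved beside the model should come back with an empty list.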

Both the 'texture' and the base material can be coloured using the 3D Light Orientation actor. The 3D Player, 3D Light Orientation and the '3D Renderer' (Virtual Stage) all need to be set to the same 'stage/chan' number through their inputs. To use the '3D Renderer' (Virtual Stage), set the 'destination' input of the 3D Player to 'renderer' ('stage' in V3). Update for Isadora 3: use Stage Setup to create a new Virtual Stage and then assign it through the 'stage' parameter on the 3D actor in the patch. You can then assign a number of 3D objects to the same '3D Renderer' (Virtual Stage) and move them as a group.

If you want to apply individual colour and light control, you would have a number of '3D Renderers' (Virtual Stages) linked to the 'video' input of a number of Projectors, with each Projector assigned to the appropriate 'stage'. A number of 3D Light Orientation actors can be assigned corresponding channel numbers, allowing each .3ds object to be coloured independently. This worked for my purpose because the lighting options appear very limited and I have been unable to generate good specular or shading effects with the 3D Light Orientation actor. The most flexibility came from using a separate '3D Renderer' (Virtual Stage) and 3D Light Orientation for each object. As a result, frame rates dropped once more than three 3D Player objects were rendering to the stage. (Update: the move to the Virtual Stage in Isadora V2.x and V3.x, with its GPU output, has improved this frame rate dramatically.)

At times the 'texture map' input of the 3D Player has not 'held' the assigned image. For me, after navigating to another scene and coming back, the 3D Player object reverted to displaying the base material, even though the 3D Player input showed an assigned 'texture map'. This appeared to be prevalent for images assigned to the 'texture map' input using the Picture Player actor; video streams didn't have this issue. Toggling the 'on/off' input on the Picture Player actor flicked over to the intended texture map. I have had to include a scene-enter trigger sequence to force the texture map onto the 3D Player object.

There is a processing overhead using these actors to build a scene. Any sophistication - particularly if your expectation is any advanced specular rendering, shading or multi element 3d scenes - will require careful planning, culling and compromising. Sometimes it is the limitations that push us in new directions.

cheers,

Bonemap

-

Thanks for the in-depth report. This is a perfect blog post actually!

Do you have any screen recordings of the output? I am interested in the image on the right; it looks like a skeleton tracking/Kinect body?

-

Thanks Skulpture,

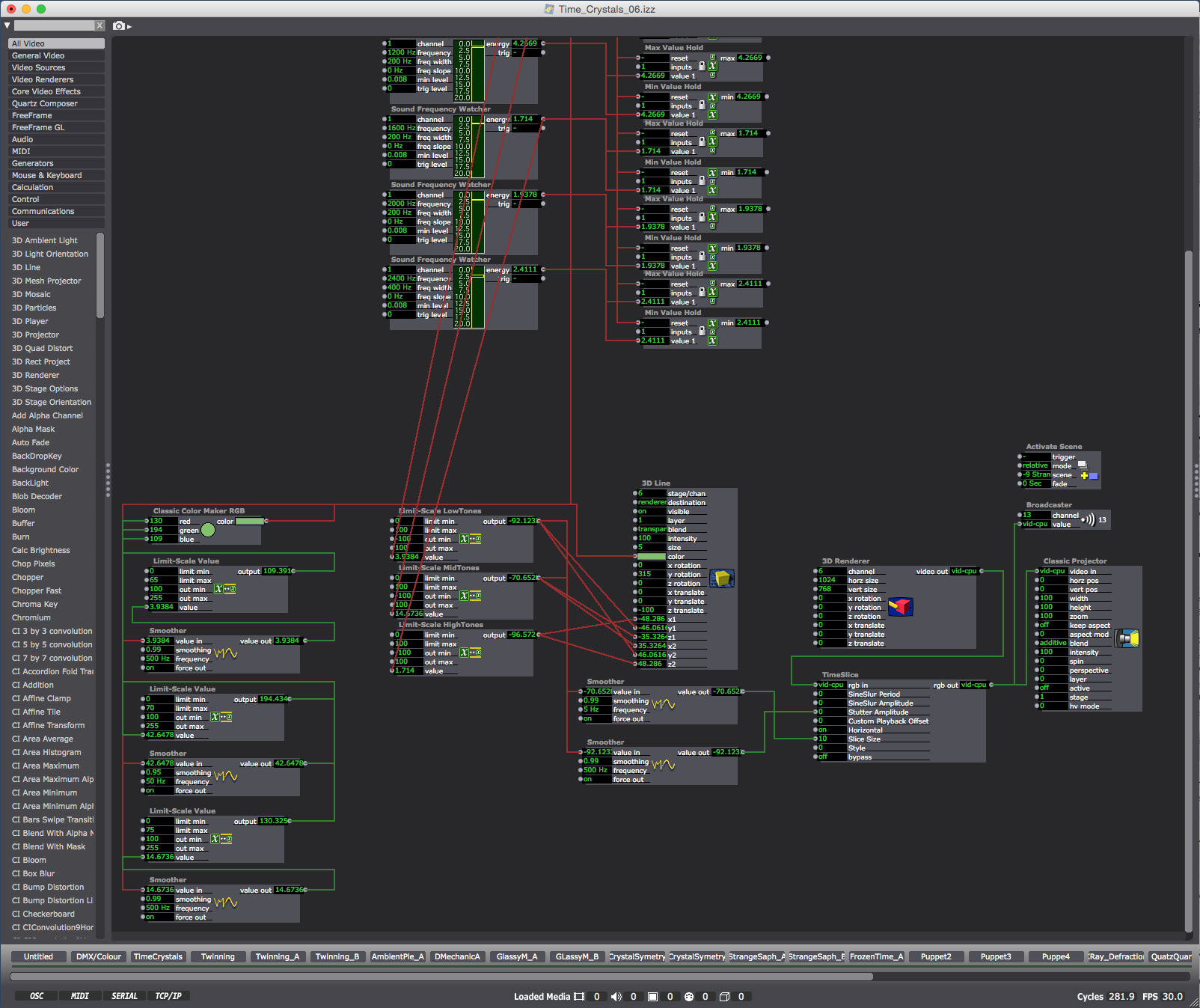

The line figure is modelled in Isadora using the '3D line' actor - the idea was a figure that appeared formed from a continuous line. The points of the figure correspond to the skeleton tracking data from Kinect via Ni Mate and OSC. There are 24 '3D line' actors that make up the drawing. Each '3D line' has a total of six x,y,z inputs (three for each end of the line segment). The 'limit scale value' actor has been used extensively to transpose the figure to the Isadora stage. A user actor was built to deal with a single line segment, and it was then duplicated enough times to generate the outline of the figure. Each data stream has a 'smoother' actor embedded in the user actor, but an input for the smoothing has been routed back to a single scale value actor, linking the smoothing for all 24 line segments.

A series of .3ds files of 'crystals' were treated in the same way; however, the '3D Player' does not have inputs for a segment, so a 'Calculate 3D Angle' actor was used to convert the proximity data into rotation. The x and y data from the Kinect was then linked directly to the x and y of each '3D Player'. The effect is that the .3ds objects appear to tumble around the skeleton, slightly in continuity with the motion capture.

I have made a screen recording of some sections of the overall project. I will be capturing a dancer and two musicians live, so the patch scenes are variously motion capture, tracking and sound frequency segmentation. The projection will be mapped into a performance space. Some of the projection will be specific for 'solid light' forms that appear suspended in the performance space haze, in the style of Anthony McCall - 'Line Describing a Cone' etc.

Vimeo link follows: Motion capture with Kinect Xbox 360, Ni Mate skeleton tracking and Isadora, from Bonemap on Vimeo.
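
For readers who think better in code than in patch cords, the per-coordinate treatment described above (limit and scale, then smooth, with one shared smoothing amount for every segment) can be sketched roughly in Python. This is only an illustration: `limit_scale` and `Smoother` are hypothetical stand-ins, and the exponential smoothing used here is an assumption, not necessarily what Isadora's 'smoother' actor does internally.

```python
def limit_scale(value, in_min, in_max, out_min, out_max):
    """Clamp value to the input range, then map it linearly to the output range."""
    clamped = max(in_min, min(in_max, value))
    return out_min + (clamped - in_min) * (out_max - out_min) / (in_max - in_min)


class Smoother:
    """Exponential smoothing: amount near 1 smooths heavily, 0 passes data through."""

    def __init__(self, amount):
        self.amount = amount
        self.value = None

    def update(self, sample):
        if self.value is None:
            # The first sample has nothing to blend with, so use it directly.
            self.value = sample
        else:
            self.value = self.amount * self.value + (1 - self.amount) * sample
        return self.value


# One shared smoothing amount for all six coordinates of a line segment,
# mirroring the single control routed to every user actor.
SHARED_AMOUNT = 0.8
segment_smoothers = [Smoother(SHARED_AMOUNT) for _ in range(6)]
```

Each incoming skeleton coordinate would pass through `limit_scale` to land in stage units and then through its own `Smoother`, so changing `SHARED_AMOUNT` changes the feel of the whole figure at once.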

-

Wow that video is incredible. I didn't want it to end!

Very impressive. Love the circular audio responsive stuff.

Once again - thanks for sharing.

-

Nice work... the single line drawing is elegant.

And it's nice to see more use of '3D particles'... that's such a powerful actor.

If you can get away from using the renderer at all, you will see an FPS increase. CPU vid really slows down the pipeline when applied in this way.

-

Very nice work!

And thanks for sharing your experience with 3D actors; this is a part I intend to explore and your Blender tips are useful.

All the best.

Clem

-

Thanks for the encouragement!

@DusX - I will look at avoiding the cpu-vid elements, but I don't think I can achieve these effects without the '3D Renderer'. Perhaps if the 'projector' actor had a video output and could be routed away from a stage?

I am open to suggestions.

Regards,

Bonemap

-

@Skulpture - see the screen grab here for the missing piece! It's the 'timeslice' actor on the output of the '3D line' actor. The '3D line' actor has colour and position parameters linked to sound frequency analysis.

Regards,

Bonemap

-

Very clever. You are giving all your secrets away here you know ;)

I love seeing how people make patches and use effects, etc.

I often think that Isadora patches themselves are just as impressive as the final outcome.

-

@ Skulpture - Ha ha!

That particular arrangement of components came about from working with the '3D line' actor and the '3D particle' actor. It was inspired by 'generative art' and by working out a way to segment audio frequency input. I will be capturing sound from analogue musical instruments using up to eight microphones (percussion, vibes, piano) through a Focusrite Clarett 8Pre audio interface. The idea is to clearly visualize each instrument as a pattern and range of colour - intentional synesthesia.

Regards

Bonemap

-

Very inspiring! Thanx for sharing your research!

-

+1 very inspiring stuff.

-

@bonemap

I tried to follow your description above, but I am losing the 3ds object material info from Blender and I wasn't able to colourize the object with the '3D Light Orientation' actor ...

If I use a 3ds object found on the web, everything is OK. I tried different Blender render engines, with no difference ... The objects look fine on re-import to Blender. I am using Blender 2.76. Do you have an idea how to solve this? Any hints are more than welcome ....

Diether

-

The '3D Renderer' has been replaced by the 'Virtual Stage' in version 2.1 of Isadora. The texture image file name needs to be short, as longer names appear not to be supported by Isadora. The 3ds format supports 65000 vertices; I have had 3D models of 45000 vertices working well in Isadora 2.2.2.

https://vimeo.com/170840419

-

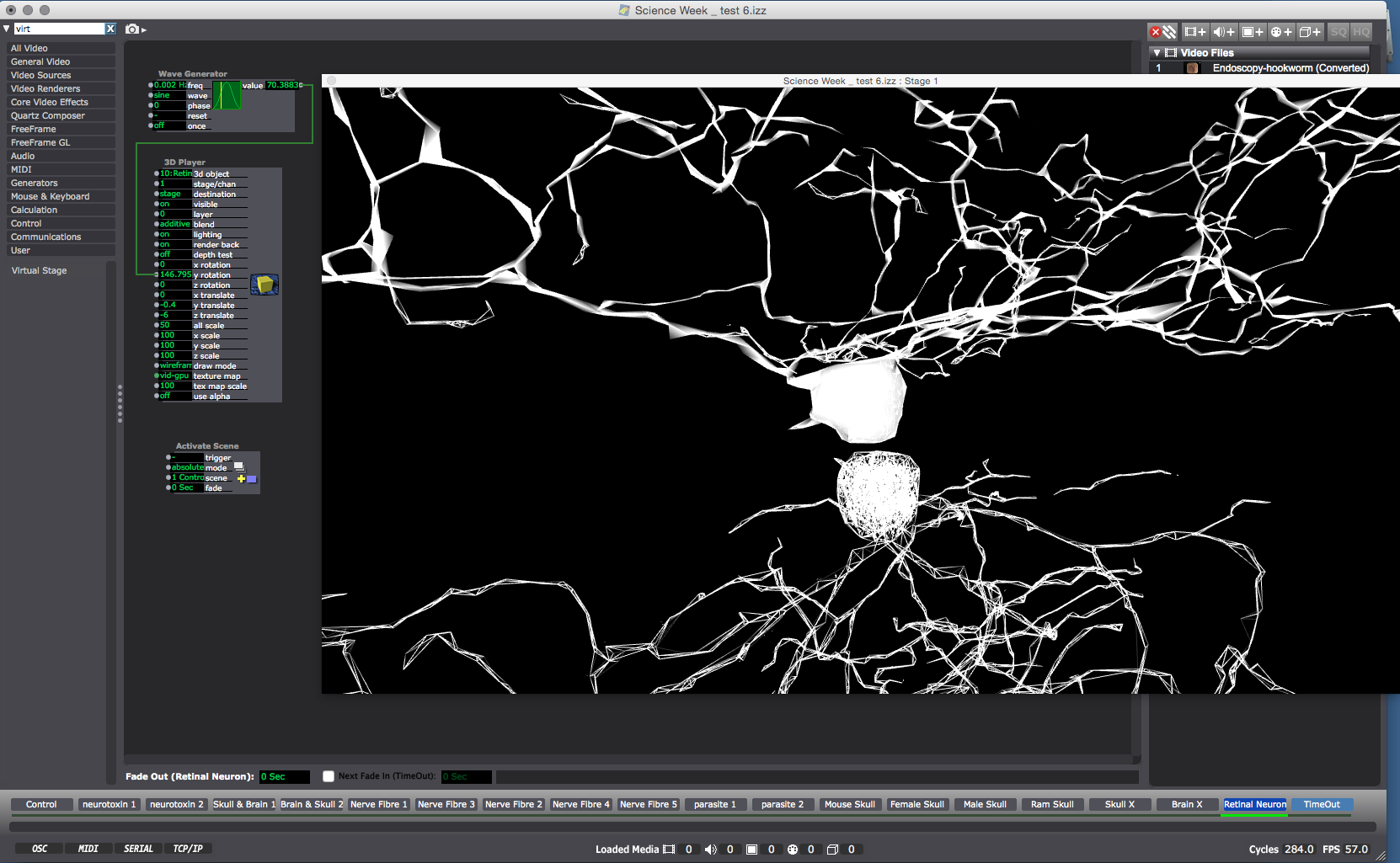

Here is a link to a render of a 3D object from Isadora. The 3D Player has been set to 'wireframe'; however, only about 50% of the model has actually rendered as a wireframe.

https://vimeo.com/171600234

-

I like the effect, but I imagine it was not what was intended.

Could there be anything unusual about the makeup of the file that would separate the 2 sections?

-

Hi @DusX,

I don't know - the effect has only appeared with the 3D model neurons. These models are from the eyewire.org project to map the human brain. It could be something associated with them.

Sometimes it is the mistakes and anomalies that lead us in new directions or produce results that are unexpected and ultimately more interesting than those of our initial intentions.

cheers,

bonemap

-

Looks amazing!

-

@bonemap Hi!! Thanks so much for this great topic!! I experimented with the 3D Player a while ago and wasn't able to have my video stream or texture cover the 3D object entirely, at least in the case of a more complex object. The texture only covered group meshes, like in this video:

The green spots are actually a line actor that reacts to sound frequencies.

It only does it on these segments. I did this piece but would like to go much further

or

https://goo.gl/iaDhvV

The result can be interesting, but it is too obvious and synchronised. Any suggestions?

I want to use transparent video files or .psd files.

Thank you and again great post and vimeo profile!!

-

Hi David,

Can you give me a bit more information about your 3D model? It is hard to tell how complex it is. Is it the same model and Isadora patch in both videos?

The presentation setting in the second video looks great!

I assume there are limitations to applying textures with the '3D Player' actor. The more complex the model, the more issues arise with rendering surfaces and applying textures.

Regards,

bonemap