Gear porn

-

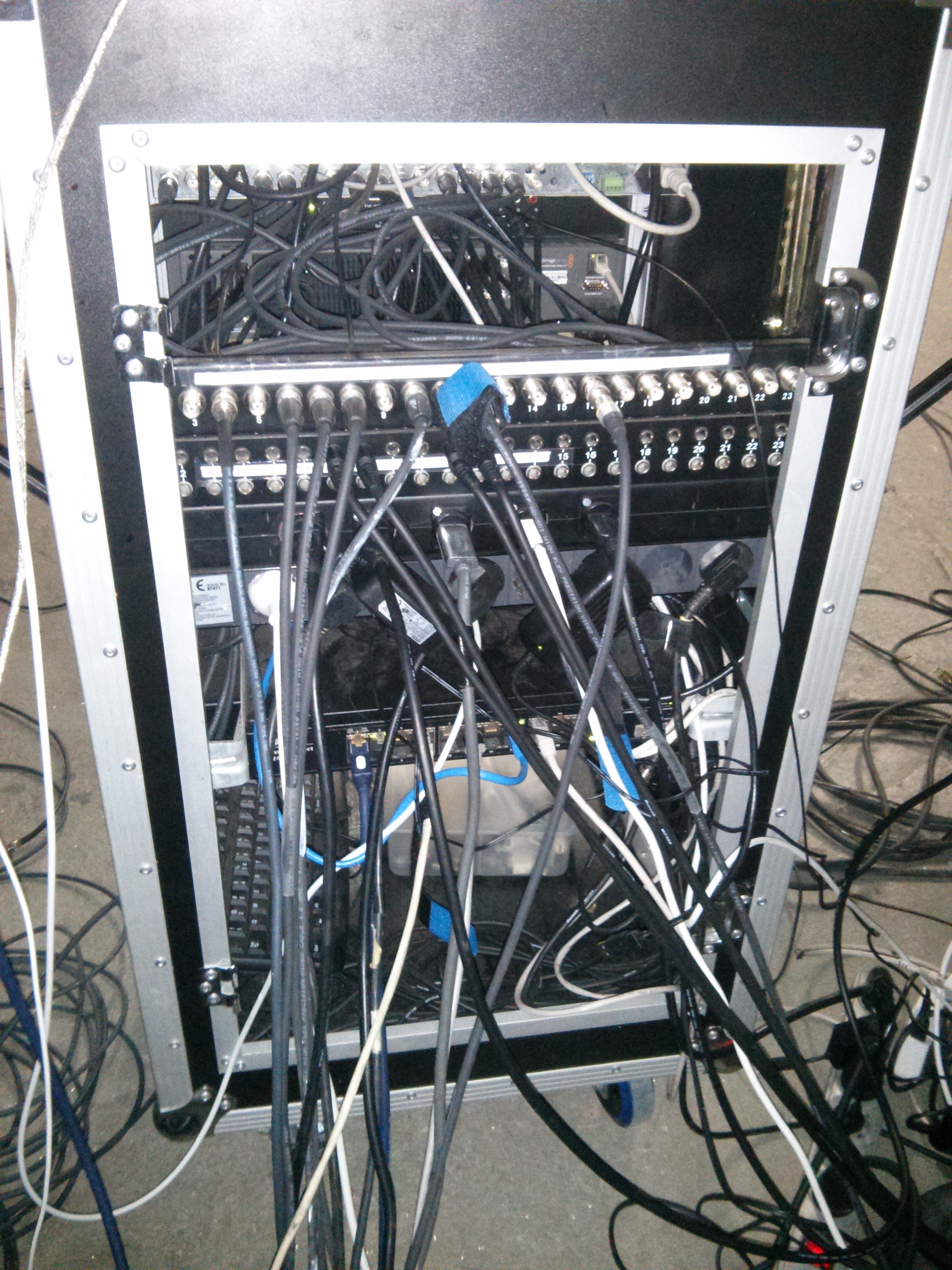

We have been working on a new show, so I thought I would show a little of our setup: end-to-end digital HD video and audio. We are using SDI everywhere (even to the beamers) except from our computers, with an ATEM video mixer and a touch screen built into a rack. The ATEM also works as a matrix, sending any input to any of the 3 video processing machines. The video laptop does live camera mapping (2x HD outputs to 6 custom screens). All systems are linked with a gigabit network for file transfers and OSC. All capture cards are HD-SDI and all video runs at 1080i 50. We are using a Kinect calibrated with an HD video camera, and I made a proper 25 m extension for it (no power supply hanging off the Kinect; it is in the rack). All our audio is on digital snakes (ADAT over Cat 5). The ATEM takes care of all our blending, keying and alpha masks (we generate live alpha masks from the HDMI output of the Retina MacBook Pros; the Mini DisplayPort provides the image). We are also using a full motion capture system (NaturalPoint with Motive and 8 S250e GigE cameras at 200 fps) for live augmented reality. All systems have full access to each other and to the lighting system, so lights, sound, video and live events are in tight sync.
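For a rough sense of why the video runs on SDI while the gigabit network only carries file transfers and OSC, here is the arithmetic for one uncompressed HD-SDI stream. It assumes standard SMPTE 274M timing for 1080i 50 (2640 total samples per line including blanking, 1125 total lines, 25 frames/s) and 4:2:2 10-bit sampling, i.e. 20 bits per sample clock; these figures come from the broadcast standard, not from the post.

$$
R_{\text{SDI}} = 2640 \times 1125 \times 25\,\text{s}^{-1} \times 20\,\text{bit} = 1.485\,\text{Gbit/s}
$$

$$
R_{\text{active}} = 1920 \times 1080 \times 25\,\text{s}^{-1} \times 20\,\text{bit} \approx 1.037\,\text{Gbit/s}
$$

Even the active picture alone exceeds a 1 Gbit/s Ethernet link before any protocol overhead, which is consistent with keeping uncompressed video on SDI and using the network for files and control data.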

The rig is super reliable and not really that expensive. It is a mess right now because I have been building it as we go; I'll rip it apart and make it neat before we start our run (we have 40 shows in a row, so I want it all to be tight before we take it out of the studio; I'll also replace the German post crates with a real keyboard stand). We toured with a similar setup last year and the rolling racks are great: we just push the systems from the truck to the venue, patch them together and we are away. I get much longer life from the gear as well. If anyone has any questions, feel free to ask. Oh, and video of the Kinect extension is here: http://www.youtube.com/watch?v=J_ltN66BNcM&feature=youtu.be

Fred

-

Uh, WOW. ;-)

-- M -

Hi Fred, could you expand on "Natural point with Motive and 8 s250e gig e cameras at 200fps"? Thanks.

--8 -

"Traditional" motion capture systems use multiple cameras pointing into a space. They can track markers as individual points, but better, they can track arrays of markers as rigid bodies. With rigid bodies you cannot mistake one marker for another, as each rigid body's marker arrangement is unique. To be correctly identified, a marker (part of a rigid body; they use at least 3 markers per rigid body) has to be seen by at least 3 cameras. From this the system can triangulate the exact position of the marker (to millimetre accuracy); for a rigid body this means a precise position and orientation (something you cannot get with normal tracking or even a Kinect). We have 2 motion capture systems, both from NaturalPoint (the cheapest option for motion capture, but still not cheap). The one I mentioned here has 8 wide-angle, high-resolution cameras (GigE with PoE on Cat 6 lines) placed around the space; the system is calibrated and then we tell it where we want the zero point to be (in the centre of the room). This system runs autonomously from our other rigs and sends a live TCP stream of the position and orientation of all our rigid bodies. You can also use suits with 30 markers to track humans in space at very high speed and accuracy; this is what is used to capture the motion of dancers and apply it to 3D models in Hollywood animations. Here is a cheesy sample of the system at a trade show:

https://www.youtube.com/watch?v=s2oIBUO4mY8

More info on the system we use is here: http://www.naturalpoint.com/optitrack/products/motive/

Here are some of the things we have been doing with it: http://vimeo.com/61531402

We made this so we can draw augmented reality live (using a camera as the input source for drawing). -
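The triangulation step described above can be illustrated with a minimal least-squares sketch. It assumes each calibrated camera has already turned its 2D marker detection into a 3D ray (an origin and a direction in room coordinates); the function names are hypothetical and this is not NaturalPoint's actual solver. It finds the point with the smallest summed squared distance to all rays by solving (sum of P_i) x = sum of P_i o_i, where P_i = I - d_i d_i^T projects onto the plane perpendicular to ray i.

```python
"""Least-squares triangulation of one marker from several camera rays (sketch)."""
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _normalize(v: Sequence[float]) -> Vec3:
    n = (v[0] ** 2 + v[1] ** 2 + v[2] ** 2) ** 0.5
    return (v[0] / n, v[1] / n, v[2] / n)


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def triangulate(origins: Sequence[Vec3], directions: Sequence[Vec3]) -> Vec3:
    """Point closest, in the least-squares sense, to all rays origin + t * direction."""
    if len(origins) < 2 or len(origins) != len(directions):
        raise ValueError("need at least two rays, one direction per origin")
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0, 0.0, 0.0]
    for o, d in zip(origins, directions):
        d = _normalize(d)
        for i in range(3):
            for j in range(3):
                # P = I - d d^T projects onto the plane perpendicular to this ray
                p_ij = (1.0 if i == j else 0.0) - d[i] * d[j]
                a[i][j] += p_ij
                b[i] += p_ij * o[j]
    det = _det3(a)
    if abs(det) < 1e-12:
        raise ValueError("rays are (nearly) parallel; position is undetermined")
    # Solve the 3x3 system a @ x = b with Cramer's rule
    x = []
    for k in range(3):
        m = [row[:] for row in a]
        for i in range(3):
            m[i][k] = b[i]
        x.append(_det3(m) / det)
    return (x[0], x[1], x[2])


cameras = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
marker = (1.0, 2.0, 3.0)
rays = [tuple(m - c for m, c in zip(marker, cam)) for cam in cameras]
print(triangulate(cameras, rays))  # close to (1.0, 2.0, 3.0)
```

Mathematically two non-parallel rays are enough to solve for a point; additional rays from more cameras over-determine the system, so noise on any single camera is averaged out.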

jaw dropping

-

I'm not even jealous or anything.... ;-)

-

-

Ooooo, Ooooo, I have some questions: Are you using Isadora at all in this setup?

If you are, how are you connecting your MoCap data with Isadora? How do you map the rotations of your MoCap data to your Isadora actors? If you are not using Isadora, then what software are you using to integrate your live video feeds with MoCap data?

Kind regards,
Alex -

No, we don't use Isadora; we make everything in openFrameworks. This stuff is not possible in Isadora. I have used Izzy with the mocap, as I wrote some software to get the position data into it via OSC. We do the video overlay in hardware (using the ATEM switcher from Blackmagic and generating a live alpha mask out of one of the HDMI outputs in real time). The ATEM can do the blending in HD with less than a frame of delay. I rarely use Izzy these days but sometimes give workshops on it. However, my hardware is Izzy compatible, and I worked a long time to get very high quality image workflows, so I shared it here.
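The bridge software itself isn't shown in the thread, but the OSC side of such a bridge is small. This is a minimal sketch, assuming OSC 1.0 encoding with float32 arguments sent over UDP; the address pattern /rigidbody/<id>/position and any host/port are hypothetical placeholders to be matched to whatever the receiving patch listens for.

```python
import socket
import struct


def _osc_string(s: str) -> bytes:
    """OSC strings are ASCII, NUL-terminated and padded with NULs to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args: float) -> bytes:
    """Encode an OSC 1.0 message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(args)
    payload = b"".join(struct.pack(">f", float(a)) for a in args)  # big-endian float32
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_position(sock: socket.socket, host: str, port: int,
                  body_id: int, x: float, y: float, z: float) -> None:
    """Send one rigid body's position to an OSC listener (hypothetical address scheme)."""
    sock.sendto(osc_message(f"/rigidbody/{body_id}/position", x, y, z), (host, port))
```

A caller would create `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` once and call `send_position` for every tracking frame. Each message is independent, so a lost UDP packet is simply superseded by the next frame.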

Fred -

Fred,

I love what you are doing, and thank you for explaining the video overlay signal path. I am currently trying to integrate a VICON system with Isadora and am having some strange problems calculating rotation data from the position data I get via OSC, hence my question about how you might map rotations. Basically, I am getting XYZ data and am trying to calculate the rotations by comparing the 5-6 points I am tracking on a prop. At the moment I am struggling with a strange kind of flipping that occurs when I rotate the prop more than 180 degrees or so... -
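One common cause of a flip near 180 degrees (an assumption here, since the patch itself isn't shown) is deriving an angle with acos or a plain arctangent, which only covers half a circle, so two different orientations produce the same number. A minimal sketch, using two hypothetical markers on the prop and measuring its heading in the horizontal X/Z plane: atan2 keeps the sign, and a small unwrap step keeps a continuous spin from jumping between +180 and -180.

```python
import math
from typing import Sequence, Tuple


def heading_acos(front: Sequence[float], back: Sequence[float]) -> float:
    """Naive heading from acos: the result is always 0..180, so the sign is lost."""
    dx, dz = front[0] - back[0], front[2] - back[2]
    cos_angle = max(-1.0, min(1.0, dx / math.hypot(dx, dz)))  # clamp rounding error
    return math.degrees(math.acos(cos_angle))


def heading_atan2(front: Sequence[float], back: Sequence[float]) -> float:
    """Signed heading in -180..180 degrees from the X/Z components."""
    dx, dz = front[0] - back[0], front[2] - back[2]
    return math.degrees(math.atan2(dz, dx))


def unwrap(previous: float, current: float) -> float:
    """Shift `current` by multiples of 360 so it is within 180 degrees of `previous`."""
    while current - previous > 180.0:
        current -= 360.0
    while current - previous < -180.0:
        current += 360.0
    return current


def marker_at(deg: float) -> Tuple[float, float, float]:
    """Hypothetical front marker 1 unit from the origin at the given heading."""
    r = math.radians(deg)
    return (math.cos(r), 0.0, math.sin(r))


origin = (0.0, 0.0, 0.0)
print(heading_acos(marker_at(90), origin), heading_acos(marker_at(270), origin))  # both ~90
print(heading_atan2(marker_at(270), origin))  # ~-90

angle = heading_atan2(marker_at(0), origin)
for deg in range(30, 721, 30):  # spin the prop through two full turns
    angle = unwrap(angle, heading_atan2(marker_at(deg), origin))
print(angle)  # ~720
```

This only fixes a single heading angle; a full 3D orientation built from three angles still runs into gimbal lock.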

When we get data from the system, we use only quaternions; together with the position data they provide the correct 3D location and rotation information. Without being able to use quaternions and rotation matrices, getting anything useful from a full motion capture system is pretty much impossible. I tried to do this kind of precise work with Isadora but found too many inconsistencies and limitations in Isadora's 3D environment. Quartz Composer may be of more use to you, as it has a more advanced set of 3D tools, but honestly, without rotation matrices, viewports and other standard 3D tools you will be very limited. What exactly are you trying to do with rotation? Maybe I can get you started with some code?
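Given a position and an orientation quaternion per rigid body, placing a virtual object on the physical one is a rotate-then-translate. A minimal sketch, assuming (w, x, y, z) component order and a right-handed frame; tracking systems and SDKs differ on ordering and axis conventions, so check what your stream actually sends. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Quat = Tuple[float, float, float, float]  # assumed (w, x, y, z) order
Vec3 = Tuple[float, float, float]


def quat_to_matrix(q: Quat) -> List[List[float]]:
    """3x3 rotation matrix for a quaternion (normalised first to absorb rounding)."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def local_to_world(point: Vec3, q: Quat, position: Vec3) -> Vec3:
    """Rotate a point from the rigid body's local frame, then translate it to world space."""
    r = quat_to_matrix(q)
    return (
        r[0][0] * point[0] + r[0][1] * point[1] + r[0][2] * point[2] + position[0],
        r[1][0] * point[0] + r[1][1] * point[1] + r[1][2] * point[2] + position[1],
        r[2][0] * point[0] + r[2][1] * point[1] + r[2][2] * point[2] + position[2],
    )


h = math.sqrt(0.5)
quarter_turn_z = (h, 0.0, 0.0, h)  # 90 degrees about the z axis
print(local_to_world((1.0, 0.0, 0.0), quarter_turn_z, (10.0, 0.0, 0.0)))  # about (10.0, 1.0, 0.0)
```

Note that q and -q describe the same rotation: the matrix above is identical for both, but naively smoothing or interpolating quaternion components across such a sign change would not be.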

-

Wow, thank you! I have been attempting to map a 3D asset to a physical object via VICON > OSC > Isadora. One possible goal in this current research is to produce a 1:1 relationship, with properly distorted video projected onto a physical object that is being manipulated in real time. Quartz may well be the way to go, as I am at least superficially familiar with it, although I am unfamiliar with what it offers in the way of 3D tools and interaction via OSC. I will need to spend some time researching the 3D tools available in/for Quartz Composer...

I've gotten to the point where Isadora can see what I think is the raw XYZ information produced by VICON. Each point VICON tracks sends its XYZ coordinates in something like a -5000 to +5000 mm three-axis coordinate space. I have been mucking about with the math and trying to use the Calc Angle 3D actor to map steady rotation between a digital prop and a physical prop. At the moment the best I have been able to do is get the 3D asset to follow a very limited range of motion; my current calculation causes the digital asset to flip and spin when I cross certain thresholds of rotation. I'm guessing this might be the kind of problem you ran into? A colleague suggested today that I am dealing with "gimbal lock", which "is when two rotation axes overlap". They also mentioned that I should investigate "quaternions"...

This is a bit of a false task, though. In response to your question "What exactly are you trying to do?": I am trying to create a curriculum for a new class that leverages Isadora in conjunction with VICON. We will also be looking at Quartz, Max/Jitter, MotionBuilder, and a number of other theatre-centric performance media technologies such as DMX, LANBox, etc. What I am really looking for is a good challenge AND a practical reason to integrate Isadora with a VICON system. Why Isadora?
I am primarily teaching this class from the perspective of Isadora as a media design tool for rapid prototyping of interactive media systems, using it as a kind of glue for various media design techniques and technologies. Oh, and there is a VICON system in the EMMA lab I have the good luck to be teaching in. Perhaps a simpler relationship with the XYZ data that does not include accurate rotations can still offer a theatrically viable interaction? Thank you! -
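The colleague's description of gimbal lock can be checked numerically. A minimal sketch, assuming a Z-Y-X (yaw, pitch, roll) Euler convention; other orders lock at a different angle, but every three-angle convention has such a singularity. At 90 degrees of pitch the yaw and roll axes line up, so only their difference affects the result, and any code converting a measured orientation back into three angles has to choose arbitrarily between equivalent answers, which is one source of sudden jumps. Quaternions avoid this singularity.

```python
import math
from typing import List

Mat3 = List[List[float]]


def rot_x(a: float) -> Mat3:
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a: float) -> Mat3:
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a: float) -> Mat3:
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def euler_zyx(yaw_deg: float, pitch_deg: float, roll_deg: float) -> Mat3:
    """R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in degrees."""
    y, p, r = (math.radians(v) for v in (yaw_deg, pitch_deg, roll_deg))
    return matmul(rot_z(y), matmul(rot_y(p), rot_x(r)))


def max_diff(a: Mat3, b: Mat3) -> float:
    return max(abs(a[i][j] - b[i][j]) for i in range(3) for j in range(3))


# At 90 degrees pitch only (roll - yaw) matters: two different triples, one rotation.
print(max_diff(euler_zyx(30, 90, 10), euler_zyx(40, 90, 20)))  # ~0
# Away from 90 degrees pitch the same change in yaw and roll is a different rotation.
print(max_diff(euler_zyx(30, 45, 10), euler_zyx(40, 45, 20)))  # clearly non-zero
```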

The maths involved in calculating rotation from position is difficult and problematic (it is never exact, and depending on the method you use you can end up with gimbal lock). Isadora is not able to make these calculations easily. If you can switch to using real rotation data from the VICON (quaternions), Max/MSP has some objects to handle them: http://cycling74.com/forums/topic/ann-quaternion-objects-for-max/ That said, dealing with this in any node-based media tool will be pretty difficult and inefficient. You can also try looking inside this tool; it is about as good as you can get for rotation from XYZ position, and it is quite limited (to reduce wildly wrong rotations): https://code.google.com/p/tryplex/ Somewhere in there is a halfway conversion from XYZ to rotation. I know the folks who made it, and this was as good as they could get it to work. Using something like openFrameworks is actually quite easy; I have been teaching it to young students and they pick it up quickly. It is free, open source, cross-platform, almost unlimited and much more powerful. The learning curve is no steeper than Isadora's, and it gives students especially an environment they can use well into the future. I wish I had found it earlier!
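For anyone who does have to derive rotation from raw positions, one way to avoid Euler angles entirely is to build an orthonormal frame from three markers and compare it with a recorded reference pose. This minimal sketch assumes three of the prop's markers can be told apart consistently from frame to frame and are not collinear; the function names are hypothetical, and it is not the method used in tryplex. The matrix-to-quaternion step uses the standard trace-based branches.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def sub(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(v: Sequence[float]) -> Vec3:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if n < 1e-9:
        raise ValueError("markers are coincident or collinear")
    return (v[0] / n, v[1] / n, v[2] / n)


def marker_frame(a: Vec3, b: Vec3, c: Vec3) -> Mat3:
    """Orthonormal frame from three non-collinear markers; its columns are the x, y, z axes."""
    x = normalize(sub(b, a))            # x axis points from marker a to marker b
    z = normalize(cross(x, sub(c, a)))  # z axis is perpendicular to the marker plane
    y = cross(z, x)                     # y completes a right-handed frame
    return [[x[i], y[i], z[i]] for i in range(3)]


def relative_rotation(ref: Sequence[Vec3], cur: Sequence[Vec3]) -> Mat3:
    """Rotation taking the reference pose to the current pose: R = F_cur * F_ref^T."""
    f0, f1 = marker_frame(*ref), marker_frame(*cur)
    return [[sum(f1[i][k] * f0[j][k] for k in range(3)) for j in range(3)] for i in range(3)]


def matrix_to_quat(m: Mat3) -> Tuple[float, float, float, float]:
    """Unit quaternion (w, x, y, z) for a proper rotation matrix."""
    tr = m[0][0] + m[1][1] + m[2][2]
    if tr > 0:
        s = math.sqrt(tr + 1.0) * 2  # s = 4w
        return (0.25 * s, (m[2][1] - m[1][2]) / s,
                (m[0][2] - m[2][0]) / s, (m[1][0] - m[0][1]) / s)
    if m[0][0] > m[1][1] and m[0][0] > m[2][2]:
        s = math.sqrt(1.0 + m[0][0] - m[1][1] - m[2][2]) * 2  # s = 4x
        return ((m[2][1] - m[1][2]) / s, 0.25 * s,
                (m[0][1] + m[1][0]) / s, (m[0][2] + m[2][0]) / s)
    if m[1][1] > m[2][2]:
        s = math.sqrt(1.0 + m[1][1] - m[0][0] - m[2][2]) * 2  # s = 4y
        return ((m[0][2] - m[2][0]) / s, (m[0][1] + m[1][0]) / s,
                0.25 * s, (m[1][2] + m[2][1]) / s)
    s = math.sqrt(1.0 + m[2][2] - m[0][0] - m[1][1]) * 2  # s = 4z
    return ((m[1][0] - m[0][1]) / s, (m[0][2] + m[2][0]) / s,
            (m[1][2] + m[2][1]) / s, 0.25 * s)


reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]  # prop at rest
current = [(5.0, 5.0, 5.0), (5.0, 6.0, 5.0), (4.0, 5.0, 5.0)]    # turned 90 degrees about z, moved
print(matrix_to_quat(relative_rotation(reference, current)))     # about (0.7071, 0, 0, 0.7071)
```

Because only three markers are used, noise on any one of them feeds straight into the rotation; a least-squares fit over all 5-6 markers (for example the Kabsch algorithm) would be steadier, at the cost of an SVD.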

-

Hi, nice gear. We used Organic Motion and the jMonkey Engine for networked mocap, with dancers in Japan, NY, Florida and Vancouver sharing the same space. We used OSC for the bone rotations and collisions. This is a very impressive setup!

-

PS: as for MotionBuilder, I ended up getting incomplete data streams and corrupted .BVH files, so beware!

-

I have used MotionBuilder a little but have not had these problems. Is it a problem with the data stream from Organic Motion?