New iPhone streaming / Syphon software?

-

Does anyone out there have tips on newer software with lower latency and better stability for streaming video from an iPhone? I've gone through all the usual suspects (EpocCam, Syphoner, AirPlay), but none of them gives me the fluidity, stability, and near-zero latency that I need. I'd be grateful to hear about any new toys out there!

-

This sounds promising:

-

I've been using ManyCam (standard version) to stream the iPhone camera into Isadora. It's almost lagless over a dedicated WiFi network, pretty good over a public one, and not bad across the web.

ManyCam is seen as a live input in Isadora.

NB: I am on PC, but ManyCam apparently works OK on Mac.

-

Hi,

I have used ManyCam and AirBeam (http://appologics.com/airbeam); both have Syphon functionality and drivers for input capture. AirBeam claims "Latency below 200ms on good Wi-Fi connections."

I have worked with an iOS programmer colleague to develop a plugin for Isadora and an iOS app that streams video into Isadora using UDP packets. It was developed with the SDK before Isadora v2.x and is available here: https://github.com/bonemap ... It was built for use as a tracking input: many iOS devices could be set up quickly above performers to cover a larger area than a single camera, with each device streaming video to its own instance of the Isadora plugin in a patch. At the time of development, I had a gaggle of iPods streaming video into Isadora. This is not a feature-rich plugin, and it was not focused on image resolution or quality, as it was used primarily to track movement.
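The thread doesn't describe the packet format that plugin and app use. Purely as an illustration of the general idea of sending video frames as UDP packets, here is a minimal Python sketch of one common approach: split each encoded frame into datagrams small enough for a typical network, tag each with a header, and reassemble on the receiver. The 8-byte header layout (frame id, chunk index, chunk count) and the 1400-byte payload limit are assumptions for this example, not the bonemap plugin's protocol, and frame-id wraparound is ignored.

```python
import struct

# Assumed header: frame id (uint32), chunk index (uint16), chunk count (uint16),
# packed in network byte order. Not taken from any real plugin.
HEADER = struct.Struct("!IHH")
MAX_PAYLOAD = 1400  # assumption: keeps each datagram under a typical 1500-byte MTU


def split_frame(frame_id: int, data: bytes, max_payload: int = MAX_PAYLOAD) -> list[bytes]:
    """Split one encoded frame into datagrams, each prefixed with HEADER."""
    chunks = [data[i:i + max_payload] for i in range(0, len(data), max_payload)] or [b""]
    count = len(chunks)
    return [HEADER.pack(frame_id, idx, count) + chunk for idx, chunk in enumerate(chunks)]


class Reassembler:
    """Collects datagrams and returns a frame only once all of its chunks have arrived.

    Older partial frames are discarded when a newer frame completes: for live
    tracking, a fresh frame is more useful than a late one.
    """

    def __init__(self) -> None:
        self.pending: dict[int, dict[int, bytes]] = {}
        self.last_complete = -1

    def feed(self, datagram: bytes):
        frame_id, idx, count = HEADER.unpack_from(datagram)
        if frame_id <= self.last_complete:
            return None  # stale or duplicate packet
        parts = self.pending.setdefault(frame_id, {})
        parts[idx] = datagram[HEADER.size:]
        if len(parts) < count:
            return None  # still waiting for chunks
        self.last_complete = frame_id
        # Drop this frame's buffer and any older, never-completed frames.
        for fid in [f for f in self.pending if f <= frame_id]:
            del self.pending[fid]
        return b"".join(parts[i] for i in range(count))


if __name__ == "__main__":
    frame = bytes(range(256)) * 20  # 5120-byte stand-in for an encoded frame
    packets = split_frame(7, frame)
    rx = Reassembler()
    out = None
    for p in reversed(packets):  # UDP does not guarantee delivery order
        out = rx.feed(p) or out
    print(len(packets), out == frame)  # 4 True
```

In a real sender, each element of `split_frame(...)` would be passed to `socket.sendto` on a `SOCK_DGRAM` socket. Dropping incomplete frames instead of retransmitting them is the usual trade-off when low latency matters more than image quality, which matches the plugin's tracking use case.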

I am waiting for the new GPU-ready SDK, like some other plugin developers on this forum: https://community.troikatronix... I would be interested in investing in further development of the Isadora plugin and iOS app when the GPU version of the Isadora SDK is finally made available, and if I can inspire my iOS programmer colleague again!

regards,

bonemap

-

There are also now the NDI tools. This protocol is UDP-based as well, and it seems to be getting some traction in the market.

-

I often use TCPSyphon from Techlife, which has three resolution settings. Depending on your WiFi, you may have to go down to the lowest resolution. http://techlife.sg/TCPSyphon/

Best, Michel

-

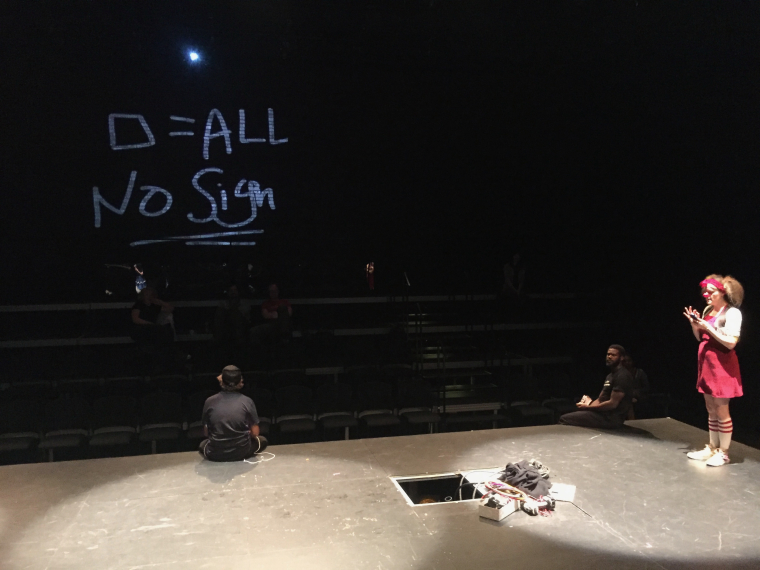

I just opened a show here in Toronto where we make extensive use of wireless, live camera and drawing work from an iPhone.

These are images from the tech week and previews, where the clown duo Morro and Jasp use an iPhone to interactively create "new rules" with the audience, which are projected around the space. Later in the performance, a live camera feed from the iPhone is also used.

The performers needed to be able to use a wireless touchscreen device from the stage, to create original drawings. Canned assets could only be used as a “backup” since the audience would actively participate in generating the material.

Here are a few things we found along the way:

Building your own solid, high-performance wireless network around the stage, with a hard-wired connection between the access points and the show control machine, has a massive impact on reliability. I recommend reserving the network for this purpose only: ours had no internet access and wasn't open to the rest of the production team. I can't stress enough the difference this makes. We used a router in the middle of the stage with two additional access points on either side. That was overkill, but it was needed because of the amount of physical movement during the show.

We used AirServer to wirelessly send the iPhone's display to the computer. AirServer is fast, easy to set up, and reliable; it uses Apple’s existing AirPlay technology and already outputs to Syphon.

I used an iPhone running the Paper drawing app, which is fun and fluid. I used Apple’s Guided Access mode, which keeps the phone from leaving the current app and lets you disable the lock, home, and volume buttons, as well as parts of the screen, to limit the app's functionality (mainly so that the app isn't accidentally exited on stage and popups don't appear during the show).

If possible, train the performers on how to troubleshoot the connection. The advantage of AirPlay + AirServer is that if the connection drops, it takes two quick swipes to re-establish it.

Fully charge the phone, disable notifications and cellular data, and have a backup show file with canned assets or alternative effects. I built an entirely canned version of the show that the running crew can switch to if the connection drops or the phone breaks.

Finally, expect that at some point during tech or a run, the phone is going to break somehow (even if you get a case!). We had our phone go flying out of an actor's hands and shatter partway through tech week, and we had to scramble to get it replaced. The producers weren't happy that afford to break a few iPhones.

In Isadora, I modified the Syphon feed using the Video Invert and Chop Pixels actors to create a better-looking projection and to remove Paper's UI elements. This created the live drawing effect seen in the photos.

I then created a system which sequentially displays the Syphon feed, captures it, then displays all previously captured drawings over the course of three scenes, which were repeated eight times. Each capture scene was isolated, so I could capture the phone input independently of everything else on the stage.
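The capture cycle above was built from Isadora scenes, not code. Just to make its logic explicit, here is a hypothetical Python model of it: each round shows the live phone feed, captures a still of the isolated phone input, then shows every drawing captured so far, with three scenes per round and eight rounds. The class, method, and scene names are invented for illustration and do not correspond to any Isadora API.

```python
from dataclasses import dataclass, field

SCENES = ("live", "capture", "gallery")  # assumed: the three scenes in each round
ROUNDS = 8


@dataclass
class CaptureShow:
    captures: list = field(default_factory=list)

    def run_round(self, round_no: int, grab_frame) -> list[str]:
        """Run one live -> capture -> gallery round and return what was displayed."""
        shown = []
        for scene in SCENES:
            if scene == "live":
                shown.append(f"round {round_no}: live phone feed")
            elif scene == "capture":
                # Only the phone input is grabbed, isolated from the rest of the stage.
                self.captures.append(grab_frame(round_no))
                shown.append(f"round {round_no}: captured drawing {len(self.captures)}")
            else:
                # Gallery scene: every drawing captured so far, including this round's.
                shown.append(f"round {round_no}: showing {len(self.captures)} drawings")
        return shown


if __name__ == "__main__":
    show = CaptureShow()
    for r in range(1, ROUNDS + 1):
        log = show.run_round(r, grab_frame=lambda n: f"drawing-{n}")
    print(log[-1])  # round 8: showing 8 drawings
```

Keeping the captures in one growing list is what lets each gallery scene show the whole history rather than only the latest drawing.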

Later in the show, we used a live camera feed. I also found EpocCam lacking in reliability, so we ended up using the basic iPhone camera app, with its UI elements cropped out of the frame. As a result, the "autofocus box" became a gag in the show.

-

Great insights on using AirServer in a show context; thanks for sharing that. I looked at AirServer last year for a production but found a few issues with it for what I was doing. Here is the thread: https://community.troikatronix...

One was AirServer going full screen and taking over the control screen; perhaps it has better options now. The other was Isadora crashing when another instance of Isadora on a second or third machine was used as a source; perhaps that has also been fixed.

-

@jakeswit We just did a theater play with three iPhone 5s over one 5 GHz WiFi channel using EpocCam Multiviewer, and that worked out very well. The trouble is that when one of the phones disconnects (usually through human error), the order of the streams changes, which is very annoying during a play. We built a mini 3-in/3-out matrix in front of the patch to deal with that. The WiFi connection is crucial. People often think you need super-high throughput, but that will only make the connection less stable. We ended up on the N setting, not AC, but it even works with 802.11g on 2.4 GHz.
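The 3-in/3-out matrix described above was built as part of an Isadora patch. The routing idea behind it is small enough to show as a hypothetical Python sketch (not an EpocCam or Isadora API): downstream effects always read from fixed outputs, and after a reconnect reshuffles the incoming streams, the operator only re-points outputs at the right input slots. The `RoutingMatrix` class and its methods are invented for illustration.

```python
class RoutingMatrix:
    """N-in/N-out video router: output k shows input route[k]."""

    def __init__(self, n: int = 3) -> None:
        self.route = list(range(n))  # identity patch: in0->out0, in1->out1, ...

    def patch(self, output: int, source: int) -> None:
        """Point one output at a different input slot (e.g. after a reconnect)."""
        if not 0 <= source < len(self.route):
            raise ValueError(f"no input {source}")
        self.route[output] = source

    def swap_outputs(self, a: int, b: int) -> None:
        """Swap the inputs feeding two outputs: the usual fix when two streams trade places."""
        self.route[a], self.route[b] = self.route[b], self.route[a]

    def apply(self, inputs: list) -> list:
        """Map the current input frames onto the fixed outputs the rest of the patch uses."""
        return [inputs[src] for src in self.route]


if __name__ == "__main__":
    m = RoutingMatrix()
    # After a dropout, the second and third phones arrive in swapped slots.
    incoming = ["phoneA", "phoneC", "phoneB"]
    m.swap_outputs(1, 2)
    print(m.apply(incoming))  # ['phoneA', 'phoneB', 'phoneC']
```

Putting the remap in front of the patch means a mid-show reconnect needs one swap rather than rewiring every effect that uses a given camera.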

-

@bonemap -- I haven't read your thread yet, but we figured out that AirServer takes over the computer's screen whenever the iDevice's screen is rotated. The fix is to lock the screen orientation on the device.

@barneybroomer -- Setting the iPhones into Guided Access mode is essential to avoid the screen getting locked out or the app getting closed by touching the Lock or Home buttons. Guided Access also allows you to disable parts of the screen on the device which the performer might accidentally touch and cause the app to open a display you don't want to appear.

Regardless, if possible I recommend training performers on how to use AirPlay and Guided Access. In our process the performers were quite overwhelmed by it, so that burden fell to our diligent ASM. However, if your actors are comfortable with how Guided Access and AirPlay mirroring work, they can easily re-establish a lost connection.