Kinect + Isadora Tutorials Now Available

-

@Marci --

Thank you so much for your AMAZING contributions to the Kinect project!

-

No probs! It's all work I'd already done in Sensadora, an open-source, CC0-licensed Processing sketch specifically for getting all the various Kinect use cases into Isadora. It's not finished yet. It's a single sketch with options for blobs, skeleton, depth thresholds, point clouds, gestures, 2D & 3D physics engines, and multiple Kinects. It also has added Leap Motion support. The idea is to allow the obvious Minority Report-style display interaction with a media library of audio, video, stills and live streams, along with generic OSC, serial and HTTP outputs for controlling PTZ cameras (VISCA / IP / Galileo), plus a link to Domoticz home automation for physical control over mains sockets, inputs from contact sensors etc. and other smart devices.

I got sidetracked by homebridge-edomoticz development, but that's launched and support requests are slowing down now, so I should be able to get back onto it in a week or two. It's one of those ideas that just keeps expanding! Joys of a severely autistic son who demands LOTS of sensory input! The playlist_sketch at https://www.dropbox.com/sh/lyxj60kf0k6jke7/AAAF5MszGamDSuiSP4fgH9t5a?dl=0 is the experimental playground for a lot of it.

-

So there is an issue with downloading individual files from GitHub.

If you save a .izz from a link, it will not open. Individual files must be downloaded via the 'view raw' option; otherwise, downloading the ZIP package is also fine. For example, right-clicking to save the file from this link:

https://github.com/PatchworkBoy/isadora-kinect-tutorial-files/tree/master/Isadora_Kinect_Tracking_Mac

will deliver an unusable Isadora file. To get a working file, you need to click the filename and then 'view raw'.

-

Ah, yeah. That's standard / by design. GitHub is a version manager / online source viewer, not designed for pulling individual files. Download via the Download ZIP button at the top right of the repo front page, via the 'view raw' / open binary link on individual files, or use any git client (https://desktop.github.com) to properly clone the repo. The client will then handle incremental updating etc., along with the ability to roll back to previous versions, switch to alternative branches (e.g. master, beta, stable), let end users submit proposed changes back to the repo, issue tracking, etc. At a guess, any right-click > Save As action will just download the JS/HTML source of the file viewer page (open it in Notepad to verify).
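For single binary files such as .izz patches, the 'view raw' link points at GitHub's raw-content host rather than the HTML viewer page. A minimal sketch of that URL mapping, assuming the usual https://raw.githubusercontent.com/{owner}/{repo}/{branch}/{path} layout and a branch name without slashes; the class name and the Example.izz file name are hypothetical.

```java
import java.net.URI;

// Hypothetical helper: turn a GitHub "blob" (file viewer) URL into the
// direct raw-file URL, which downloads the actual bytes of the file.
// Assumes the branch name contains no slashes.
class GitHubRawUrl {
    static String toRaw(String blobUrl) {
        URI uri = URI.create(blobUrl);
        if (!"github.com".equals(uri.getHost())) {
            throw new IllegalArgumentException("not a github.com URL: " + blobUrl);
        }
        // Raw path looks like /{owner}/{repo}/blob/{branch}/{path...}
        String[] parts = uri.getRawPath().split("/", 6);
        if (parts.length < 6 || !"blob".equals(parts[3])) {
            // Folder ("tree") URLs have no single raw file behind them.
            throw new IllegalArgumentException("expected a /blob/ file URL: " + blobUrl);
        }
        return "https://raw.githubusercontent.com/" + parts[1] + "/" + parts[2]
                + "/" + parts[4] + "/" + parts[5];
    }

    public static void main(String[] args) {
        String page = "https://github.com/PatchworkBoy/isadora-kinect-tutorial-files"
                + "/blob/master/Isadora_Kinect_Tracking_Mac/Example.izz";
        System.out.println(toRaw(page));
    }
}
```

Folder links like the one above (with /tree/ in the path) are rejected on purpose: there is no raw file behind them, which is why the ZIP download or a git clone is the way to grab a whole folder.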

-

I'm sending here an updated version of my very old Isadora Kinect Skeleton Part user actor. (For history: I posted it in the NI mate forum a long time ago, while I was still using their public beta before 1.0. They liked it so much that they offered me a licence for version 1 when it came out.)

It is essentially what Mark has done for the new tutorial files, but you can see the actual name of the body part in the actor's output, which I find useful, and there is an additional input I called osc offset. I added it because the Kinect might not be your ONLY OSC input: if you have fed Izzy with another device (like an iPad running TouchOSC) BEFORE you send the Kinect data in... you're screwed. The Kinect point actor won't work unless you change your OSC input inside the actor or in the Stream Setup window. With my actor, you just give the osc offset input the last OSC port number you already have, and the actor will automatically shift all three OSC listener channels inside so you get the right value for the right body part.

-

Hey!

Great discussion here. Have the files in the tutorial been updated?

-

The updated files are at https://github.com/PatchworkBoy/isadora-kinect-tutorial-files - follow the link, click the “Download Zip” button top right corner.

-

Am working my way through this on Windows 10 with a Kinect that I previously had working with the original Tutorial files.

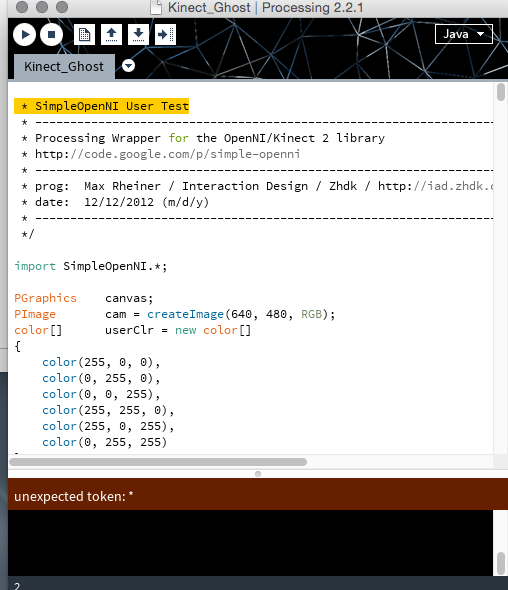

I'm trying to run the latest updated files, downloaded from GitHub. When I run the Processing sketch Isadora_Kinect_Tracking_Win.pde, I get the error "unexpected token: =" on the line

`if (userList.length !== 0) {`

Can anyone proffer any help?

Thanks

Mark

-

Try changing

`if (userList.length !== 0) {` to `if (userList.length != 0) {`

-

Thanks, Marci. Yes, I did try that, and it gave another error further down:

Cannot find anything named kCameraInitMode

with this line highlighted: `kCameraInitMode = 1;`

Thanks

Mark

-

@Marci thank you for your added ghost image on Kinect! One more question: is there a way to smooth the ghost image? As it is, let's call it quite nervous. Apart from this it works great.

But I had to delete the first couple of lines (all the ones up until "import...") because they created an error. That info might also be useful to others here in this forum.

Best

PS: If I press 1 (case 1) on my laptop, it crashes my computer completely!! But I don't need it, so I just avoid it.

-

The opening line should begin with /*. If it doesn't, it'll cause errors: /* denotes the start of a comment block, and */ denotes the end of a comment block. Looks like a case of clumsy fingers your end, I'm afraid, as the files in the repo all have it correctly in place. Pressing 1 will try to init RGB mode. If the sketch wasn't launched in RGB mode, then this is expected behaviour... like I said, you can't switch between IR & RGB once launched. Smoothing: add a Blur actor in Isadora after the Syphon Receiver, before however you're converting it to a mask (assuming that's what you're doing).

-

@Marci thank you for the quick response. Blur in Isadora was my solution to the "nervousness". I was just curious whether there was an option within Processing.

Best

-

@mark_m: add `int kCameraInitMode = 6;` at line 40. Looks like I missed defining a global variable when I did the Windows file. Soz! I presume that means you're the first to actually use the Windows files, as no-one else has picked up on it! Will fix it in the repo when I get home later.
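Both errors Mark hit come from Processing compiling sketches as Java: Java has no `!==` operator (that is JavaScript), and a variable assigned inside a function must be declared somewhere, typically at the top of the sketch, where it becomes a field visible to every function. A plain-Java sketch of the structure after both fixes; the class name, the `onKey` handler and the `int[]` stand-in for the user list are hypothetical, and only the value 6 comes from this thread.

```java
// Plain-Java stand-in for a Processing sketch: top-level declarations in a
// .pde file become fields of the sketch class, visible from every function.
class SketchFixesDemo {
    // The missing global declaration (value taken from the thread).
    int kCameraInitMode = 6;

    // Hypothetical key handler standing in for the sketch code that sets it.
    void onKey(char key) {
        if (key == '1') {
            kCameraInitMode = 1; // compiles because the field is declared above
        }
    }

    // Java only has != for "not equal"; !== is a syntax error.
    boolean hasUsers(int[] userList) {
        return userList.length != 0;
    }

    public static void main(String[] args) {
        SketchFixesDemo sketch = new SketchFixesDemo();
        System.out.println("initial mode: " + sketch.kCameraInitMode);
        sketch.onKey('1');
        System.out.println("after key 1: " + sketch.kCameraInitMode);
        System.out.println("users present: " + sketch.hasUsers(new int[] {1}));
    }
}
```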

-

@gapworks: lots of options in Processing, e.g. `canvas.filter(BLUR, 6);` after line 44, or something similar. See https://processing.org/reference/filter_.html or https://processing.org/examples/blur.html for details. Ultimately, doing it in Isadora is simpler for you to maintain, tho.
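Processing's `filter(BLUR, level)` applies a Gaussian blur to the whole canvas. To show what a blur pass does to a jittery mask edge, here is a simpler box blur (a swapped-in technique, not what Processing uses internally) over a grayscale image stored as a flat row-major `int[]`; the class name and data layout are assumptions.

```java
import java.util.Arrays;

// Box blur for a grayscale image (values 0-255) stored row-major in an int[].
// Each output pixel is the integer mean of the in-bounds pixels within
// `radius` of it; pixels outside the image are simply left out of the mean.
class BoxBlur {
    static int[] blur(int[] src, int width, int height, int radius) {
        if (src.length != width * height || radius < 0) {
            throw new IllegalArgumentException("bad image size or radius");
        }
        int[] out = new int[src.length];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int sum = 0;
                int count = 0;
                for (int dy = -radius; dy <= radius; dy++) {
                    int yy = y + dy;
                    if (yy < 0 || yy >= height) {
                        continue;
                    }
                    for (int dx = -radius; dx <= radius; dx++) {
                        int xx = x + dx;
                        if (xx < 0 || xx >= width) {
                            continue;
                        }
                        sum += src[yy * width + xx];
                        count++;
                    }
                }
                out[y * width + x] = sum / count; // count >= 1: the pixel itself
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // One bright pixel in a 3x3 mask gets spread over its neighbours.
        int[] mask = {0, 0, 0, 0, 255, 0, 0, 0, 0};
        System.out.println(Arrays.toString(blur(mask, 3, 3, 1)));
    }
}
```

Note that a spatial blur like this softens the mask's edges within each frame; it does not average across frames.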

-

Hi Marci,

Maybe I am the only person using this on Windows! I just downloaded the updated file you posted at about 18:00 on 01 March.

This still has the !== issue (see above). I fixed that, but now I get an error further down when I try to run the Processing file. I don't know if this is user error or what (my programming skills are sadly lacking), but when you have a mo' I'd be grateful if you could have a look.

Cheers

Mark M - lone Windows user!

When I run the Processing file, the error says:

cannot find anything named "inUserId"

Line 187 says: `sendOSCSkeletonPosition("/skeleton/"+inUserId+"/head", inUserID, SimpleOpenNI.SKEL_HEAD);`

-

Oops - grab the latest zip from the repo... I have corrected both issues. Any mention of inUserId should be inUserID.
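The error comes from Java (and therefore Processing) identifiers being case-sensitive: inUserId and inUserID are two different names, and only one of them is declared. A small plain-Java illustration; the method is a hypothetical, simplified stand-in that only builds the OSC address from line 187, whereas the sketch's sendOSCSkeletonPosition also takes a SimpleOpenNI joint constant.

```java
// Java (and therefore Processing) identifiers are case-sensitive:
// inUserId and inUserID are two different names.
class CaseSensitiveIds {
    // Hypothetical stand-in for the address part of sendOSCSkeletonPosition.
    static String skeletonAddress(int inUserID, String joint) {
        // Using inUserId here would fail with "cannot find anything named".
        return "/skeleton/" + inUserID + "/" + joint;
    }

    public static void main(String[] args) {
        System.out.println(skeletonAddress(1, "head"));
    }
}
```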

(Or you could just do a find and replace in Processing / any text editor.)

-

Thanks for all the work that has gone in here, both for the Kinect tutorials, which all worked for me, and also to Marci here. Great stuff.

I also had to correct the following on the Mac (Retina), and change lower-case Id to ID, but then it seems to work, as in the sample pic below:

`if (userList.length != 0) {`

-

The 5 & S keys solve the ghost riddle. Brilliant!

Now, on ghost without skeleton, the IR image keeps cutting out and then reappearing. Any ideas why this is? I'm struggling to reboot it. Is Alpha Mask the best way to turn this into a mask, along with an Izzy Blur, to project into? I'll check the follow mask tutorial too. Sorry for the sketchy posts; I'm mid-production with this, unfortunately not getting the time it deserves to implement. Ghost working in Processing is great. Now to turn that into a mask and I am a happy bunny for now.

Many thanks for any and all help

G

-

On ghost without skeleton there should be no IR image, just the ghost...? Do you mean the ghost keeps vanishing and reappearing? This will be down to OpenNI not being able to detect a user... ghost / skeleton needs as much of the entire person from feet to head in the shot as possible, as OpenNI works out that a person is stood there by recognising an entire stick figure. It can’t do a tight shot where the user is only there from the waist upwards, for instance... or if 50% of the person’s stick figure moves out of shot to the side.

Easier way to diagnose: video your screen on your mobile phone or summat whilst it’s happening, bash it on YouTube and post me the link so I can see what’s going on...

Could also be USB bandwidth, which would cause OpenNI to drop and have to time out & reinitialise the sensor... which sometimes it can’t do without a complete shutdown of Processing / reopening the sketch. I’d only expect this to be an issue if you were using external USB disks or USB cameras that’d start saturating your total available USB bus bandwidth.

And yes, alpha mask and Izzy to blur... At some point I’ll add a blur option into the Processing sketch, but I can’t give any indication as to when that may be; at the moment the bill-paying work’s having to take priority!