Audio Features for Isadora: What Do You Want?

-

First of all, before we start this I would like to say that we love the fact that you include us in this talk and make us part of the design phase of how audio should flow in Izzy.

I get that we aren't easy and that our wishlist might be a big undertaking, so like @fred said, I would also love to hear what your plans are with audio and how you envision it in the program in the short term and the long term (so let's say in the coming version of Izzy and in Izzy 1-2 years from now).

Regarding your questions

Q: Resampling issue

A: I would be fine with setting the program to a certain sample rate so that all files have to be in that ballpark. It is not strange for us as Izzy programmers to do this already with video to ensure that it has great playback for the situation that is required.

Q: Overloading

A: If you overload a channel then honestly it is your issue. A quick toggle in the HUD of the program that lets us silence all channels, plus a little volume slider that multiplies every channel and acts as a grandmaster, would be fine. (And yes, this can be global; it doesn't have to be scene-based.)

Q: Channels

A: Just drop the extra channels; if you try to connect 8 streams of audio to a 2-channel interface you will lose the extra 6. Just limit the number of 'Virtual Audio renders' to what the program can handle. (Just as an example, let's say we have 16 channels in Izzy through which you can output sound: render the sound to the 'containers' and only output the sound if there is a physical device connected to that channel.)

Q: Timing

A: Roger that, I agree that we can't have the super-precise timing of the audio channels on the same thread as the video renderer / the UI thread. So that means we need a separate thread for that. (Not sure if this is feasible, just sharing ideas here.)

A few things that I would like to point out.

As an artist, I'm fine with simple audio plugins / routing options inside the 'logic' of the program. So, like you noticed before, I'm a big fan of just connecting the signal to a 'Speaker' actor to get my sound to the channel, and telling Isadora that Channel #1 should go to Output #2 on my soundcard. It also gives me a lot of options in the future for my job as an installation artist (and I know that some features you are working on now, for example the Kinect actors / OpenNI integration, are more for that 'new' market (we are here ;)) than for the normal 'scenography' student or the theater maker that is afraid of a beamer).

As a teacher, it makes perfect sense for me to explain how audio flows if it follows the same pattern as video.

As a community member, I see a future: if we lay the groundwork for this now, we can share some amazing patches with the community / fellow artists and create powerful patches while still having the easy flow of Isadora. And that is a huge advantage compared to the steep learning curve of, for example, Max MSP. (And yes, we will have issues where the answer might be: go work with Max MSP to get this done and not with Isadora, and here are some amazing tools to get you started / get the data back into Isadora and work with it.)

-

Dear Community and @Fred, @Juriaan, @RIL, @bonemap, @ian, @jfg, @kirschkematthias, @tomthebom, @kdobbe, @Michel, @DusX, @Woland, @eight, @mark_m, @anibalzorrilla, @knowtheatre, @soniccanvas, @jhoepffner, @Maximortal, @deflost, @Bootzilla

Thank you all for your contributions to this thread so far. We are listening.

But, as I said at the outset, making audio a "first class citizen" in Isadora (i.e., audio travels through patchcords) for Isadora 3.1 is not something we will take on, because we want to get 3.1 out in the next few months. Instead, such a feature is what I would consider to be an Isadora 4 feature. Now, you should not assume that making a major release (i.e., from 3.x to 4) will take years. But what I can say is this: the effort to program such a feature, test it, ensure it will be reliable, and deal with the subsequent tech support for it will be costly; it means we need to release it as Isadora 4 so that we can ask those with perpetual licenses to pay an upgrade fee.

With that in mind, here is what we are proposing for Isadora 3.1.

Isadora 3.1: Sound Routing For all Movie Players and Sound Players

1) You will have an 'audio device' input that allows you to choose the hardware or virtual output (if possible) to which the audio is sent.

2) You will have an 'audio routing' input that allows you to route the source channels to whatever output on the audio device you wish.

3) While it is yet to be proven I can do this, if it is feasible, you will be able to control the volume of every source channel being sent to an audio output.

4) The audio output will be delivered to the hardware using the default audio system for the given platform -- that means HAL (hardware abstraction layer) on macOS with AVFoundation, and WASAPI (Windows Audio Session API) on Windows.

For the movie player, I already have routing working under AVFoundation on macOS. I am close to having a working version using Windows Media Foundation on Windows.

For the sound player, I have modified the sound library we use (SoLoud) to allow this routing, and my initial tests show it works. That covers both macOS and Windows.

In other words, I believe we are not terribly far away from having these features in a working beta.

That said, I want to dig in to point #2: the 'audio routing' will be represented by a string that indicates the source channel to output channel routing. Right now, I imagine something in the form:

1 > 2; 2 > 5; 4 > 6

The first number represents the source channel in the movie or sound file. The second number, after the greater than sign, indicates the destination audio output. So the above string would send source channel 1 to output 2, source channel 2 to output 5, source channel 4 to output 6. I could also imagine a notation like this:

1-4 > 5

where source channels 1 through 4 would be assigned sequentially to output channels 5, 6, 7 and 8.

The reason to use this string representation is interactive control: you could generate such strings on the fly or by using a "Trigger Text" actor and send them into this input to control the routing.
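To make the proposed notation concrete, here is a minimal Python sketch of how such a routing string could be parsed. It is only an illustration of the format described above, not Isadora's code; the function name `parse_routing` and the list of `(source, output)` pairs it returns are assumptions made for this example.

```python
def parse_routing(text):
    """Parse '1 > 2; 1-4 > 5' into a list of (source, output) pairs."""
    routes = []
    for item in text.split(";"):
        item = item.strip()
        if not item:
            continue  # tolerate a trailing ';'
        source_part, output_part = (part.strip() for part in item.split(">"))
        first_output = int(output_part)
        if "-" in source_part:
            low, high = (int(n) for n in source_part.split("-"))
        else:
            low = high = int(source_part)
        # A range maps sequentially: source low+k goes to output first_output+k.
        for offset, source in enumerate(range(low, high + 1)):
            routes.append((source, first_output + offset))
    return routes


print(parse_routing("1 > 2; 2 > 5; 4 > 6"))  # [(1, 2), (2, 5), (4, 6)]
print(parse_routing("1-4 > 5"))              # [(1, 5), (2, 6), (3, 7), (4, 8)]
```

Because the routing is plain text, any actor that can build or emit a string could drive it.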

In addition, I can imagine a volume parameter along with these, assuming it is actually possible to control the volume -- something I have not yet proved on both macOS and Windows.

1 > 2 @ 50%

or

1 > 2 @ -3db

which would route source channel 1 to output 2 at a volume of 50% (in the first example) or with the volume reduced by 3 dB (in the second example).
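As a rough sketch of how the optional volume suffix might be recognised, the Python below splits one routing item at the `@` sign and reads the number and its unit. The regular expression, the function name `split_volume`, and the returned `(value, unit)` tuple are all assumptions for illustration; the real syntax is not settled yet.

```python
import re

# Hypothetical pattern for the optional volume suffix: '50%' or '-3db'.
VOLUME = re.compile(r"^\s*(-?\d+(?:\.\d+)?)\s*(%|db)\s*$", re.IGNORECASE)


def split_volume(item):
    """Split '1 > 2 @ 50%' into ('1 > 2', (50.0, '%')); volume is None if absent."""
    route, sep, volume_text = item.partition("@")
    if not sep:
        return route.strip(), None
    match = VOLUME.match(volume_text)
    if match is None:
        raise ValueError(f"unrecognised volume: {volume_text!r}")
    return route.strip(), (float(match.group(1)), match.group(2).lower())


print(split_volume("1 > 2 @ 50%"))   # ('1 > 2', (50.0, '%'))
print(split_volume("1 > 2 @ -3db"))  # ('1 > 2', (-3.0, 'db'))
```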

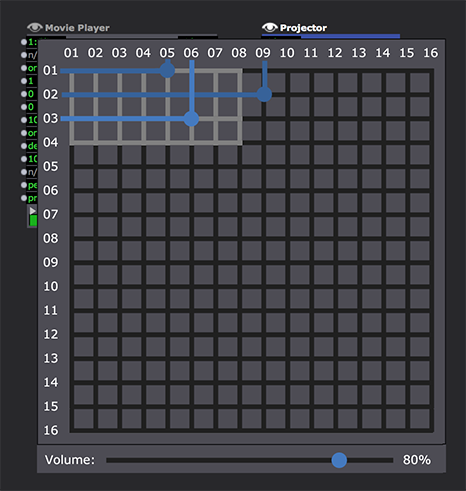

Of course, no one wants to write out these values as a text string to define a fixed routing. So if you click on this input, you would be presented with something like this:

(Note: this is just a rough drawing I whipped up as an example!)

The source channels are listed along the left edge, and the output channels are listed along the top. To route a source channel to an output channel, you would simply click on the intersection between the two. Again, if it's possible, you could then set the volume for the currently selected routing at the bottom.

Note that the lighter grey lines would indicate the valid source channels for the currently playing file as well as the valid output channels for the currently selected device. (In the example above, there are four channels in the source media, and eight channels on the output device.)

Media files or (in theory) the output device can be chosen interactively, so one might have more or fewer channels than were present when the routing was made. If a routing is invalid for the currently playing media or the current audio output device, it would be ignored. This would be the case in the example above, where channel 2 is routed to channel 9 of the output device; as indicated by the darkened lines, the audio output device only has eight outputs. So the routing from source channel 2 to output 9 would be ignored.
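The "ignore invalid routings" rule can be sketched in a few lines of Python. This is an illustration under assumptions, not the actual implementation: the function name `valid_routes` is made up, and apart from the 2 > 9 routing mentioned above, the routes in the example are invented.

```python
def valid_routes(routes, source_channels, output_channels):
    """Keep only the routes that exist for the current media and device."""
    return [
        (source, output)
        for source, output in routes
        if 1 <= source <= source_channels and 1 <= output <= output_channels
    ]


# Four source channels and eight device outputs, as in the drawing.
routes = [(1, 2), (2, 9), (4, 6)]
print(valid_routes(routes, source_channels=4, output_channels=8))
# [(1, 2), (4, 6)]  -- the route 2 > 9 is ignored
```

Filtering at playback time, rather than deleting the stored route, means the routing comes back if a device with more outputs is selected later.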

Isadora 3.1: Audio Matrix actor

We would also offer a new Audio Matrix actor (not sure of the name yet, but for now we'll call it that). It would essentially give you the same interface shown above, allowing you to click the actor itself to change the routing or volume, and would offer multiple inputs that allow you to interactively change the volume of the routings you've created. The output would be a routing string as described above. You would then feed that output into the 'audio routing' input of the Sound Player or Movie Player.
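To illustrate the idea of an actor whose output is a routing string, here is a small Python sketch that turns a set of crosspoints into that text form. The dictionary layout, the function name `routing_string`, and the choice to always write the volume as a percentage are assumptions for this example only.

```python
def routing_string(crosspoints):
    """Serialise {(source, output): percent} into '1 > 2 @ 50%; ...'."""
    parts = []
    for (source, output), percent in sorted(crosspoints.items()):
        parts.append(f"{source} > {output} @ {percent:g}%")
    return "; ".join(parts)


matrix = {(2, 5): 100, (1, 2): 50}
print(routing_string(matrix))  # 1 > 2 @ 50%; 2 > 5 @ 100%
```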

Isadora 3.1: Sound Frequency Bands for all Movie Players and Sound Players

While I know you'd love to be able to do this in a separate module, for the moment I would simply offer this.

1) We get the existing 'freq band' output working for all flavors of the movie player on macOS and Windows.

2) We would add a matching feature to the Sound Player.

In that way, you could get the frequency band outputs we had in QuickTime in previous versions and you would not need to route the sound output to Soundflower and then back in to analyze the sound.

Isadora 3.1: Sound Player Interface Matches Movie Player

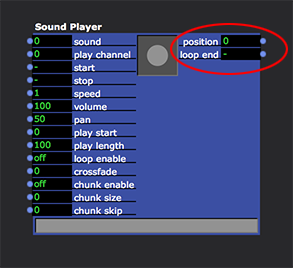

Several of you mentioned this. I can safely say that we can implement the 'hit loop end' and 'position' outputs, as well as offering the same controls we added to the Movie Player in Isadora 3.

Isadora 3.1: Option to Express Volumes as dB instead of percentages

This is just a numeric conversion from one form to another, so I feel I can promise this for 3.1.
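For reference, the conversion could look like the Python sketch below. It assumes the percentage is a linear amplitude where 100% corresponds to 0 dB; that mapping is an assumption of this example, and Isadora may define its volume scale differently.

```python
import math


def percent_to_db(percent):
    """Amplitude percentage to decibels; 100% is 0 dB, 0% is silence."""
    if percent <= 0:
        return float("-inf")
    return 20.0 * math.log10(percent / 100.0)


def db_to_percent(db):
    """Decibels back to an amplitude percentage."""
    return 100.0 * 10.0 ** (db / 20.0)


print(round(percent_to_db(50), 2))  # -6.02
print(round(db_to_percent(-3), 1))  # 70.8
```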

Isadora 3.1: Sound Player reads 24 bit Files

Yes.

Isadora 3.1: Signal Generator

A few of you brought up the idea of a "signal generator" system to check the configuration of the speakers. I don't see this as a big deal and I feel we can promise it for Isadora 3.1. I would say that you would be able to choose a) a tone, b) a voice saying "one one one..." for audio output 1, or "two two two..." for audio output 2, etc., or c) a combination of both.
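The tone option can be pictured with a short Python sketch that fills a multichannel buffer with a sine wave on one output and silence everywhere else. The function name `make_tone`, the 48 kHz sample rate, the 440 Hz frequency and the 0.5 level are all arbitrary assumptions for illustration.

```python
import math


def make_tone(output, outputs, frames, sample_rate=48000, frequency=440.0, level=0.5):
    """Return `frames` frames of audio with a sine tone on one output only.

    Each frame is a list with one float sample per output channel.
    """
    buffer = []
    for n in range(frames):
        sample = level * math.sin(2.0 * math.pi * frequency * n / sample_rate)
        frame = [0.0] * outputs
        frame[output - 1] = sample  # outputs are numbered from 1, as in this thread
        buffer.append(frame)
    return buffer


# One second of test tone on output 2 of an eight-output device.
tone = make_tone(output=2, outputs=8, frames=48000)
```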

Now I would also discuss a few "maybe" features that seem doable to me, but I do not feel confident yet to say how quickly they can be accomplished. If they are doable and turn out to be easy and not prone to make Isadora crash, maybe we could get them into 3.1. However, these might be far more difficult than I imagine, in which case they would have to be moved to another interim release like Isadora 3.2. For purposes of discussing them, I will call them Isadora 3.2 features and list them in terms of what I see as their importance.

Isadora 3.2: ASIO output from the Movie Player on Windows

For the Movie Player, it is conceivable to write a Windows Media Foundation "transform" (i.e., module) that would accept raw audio samples and send them to ASIO instead of WASAPI. I have no real idea about the difficulty in achieving this, but in theory, it seems entirely doable.

Isadora 3.2: ASIO output from the Sound Player on Windows

For the Sound Player, we use an open source audio system called SoLoud, which @jhoepffner has criticized but which I still think is a solid option for Isadora, especially because the source code is clear and compact enough that I can easily modify it. It offers a number of "backends" including HAL on macOS, and Windows Multimedia and WASAPI on Windows. These backends are extensible, meaning you can "roll your own", so we could add a new backend to send audio to ASIO. I would think this is quite doable, but again, I need to really dig in to this to find out if I'm right or not.

Isadora 3.2: Global Volume and Mute in the Main User Interface

This should not be too difficult to do and I feel 90% sure we can have it in Isadora 3.1. But until I think it through more carefully, I'm listing it here instead of in the "promised" features above.

Isadora 3.2: A "vamp" loop for the Sound Player

Adding the possibility of a looping system where the sound plays from the start of the sound to Point A, then loops indefinitely from Point A to Point B, and finally, when triggered, plays from Point B to the end of the file is not too difficult to imagine... it's just a variation on the existing looping structure. I can see the usefulness of this and feel like it should be rather easy to do for 3.1, but again, no promises yet.
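The vamp behaviour described here can be sketched as a tiny playhead function in Python. This is only one possible reading of the idea: the name `next_position`, the one-step-per-call model and the `released` flag (the "trigger" that lets playback leave the loop) are assumptions for illustration.

```python
def next_position(position, point_a, point_b, end, released):
    """Advance a playhead by one step through a 'vamp' loop.

    Plays start -> A, repeats A -> B until `released` is True,
    then continues B -> end. Returns None once the end is reached.
    """
    position += 1
    if not released and position >= point_b:
        return point_a  # still vamping: jump back to the loop start
    if position >= end:
        return None     # finished
    return position


# Loop between 2 and 5 until the release trigger arrives at step 7.
position, trace = 0, []
for step in range(20):
    position = next_position(position, point_a=2, point_b=5, end=8, released=step >= 7)
    trace.append(position)
    if position is None:
        break
print(trace)  # [1, 2, 3, 4, 2, 3, 4, 5, 6, 7, None]
```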

Isadora 3.2: Audio Delay Function

@Michel suggested adding an audio delay to the Movie Player and Sound Player. This might be possible, but such a feature starts to move into the realm of audio as a first class citizen. I cannot say more right now than that I see why this would be important, and I'll keep it in mind.
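For readers unfamiliar with the idea, an audio delay is usually a buffer that hands back each sample a fixed number of samples later. The Python sketch below shows that general technique only; the class name `DelayLine` is made up, and nothing here reflects how Isadora would implement it.

```python
from collections import deque


class DelayLine:
    """Delay a stream of samples by a fixed number of samples."""

    def __init__(self, delay_samples):
        # Pre-fill with silence so the first outputs are zeros.
        self.buffer = deque([0.0] * delay_samples)

    def process(self, sample):
        self.buffer.append(sample)
        return self.buffer.popleft()


delay = DelayLine(3)
print([delay.process(x) for x in [1.0, 2.0, 3.0, 4.0, 5.0]])
# [0.0, 0.0, 0.0, 1.0, 2.0]
```

At a 48 kHz sample rate, a delay of 480 samples corresponds to 10 ms.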

Isadora 3.2: VU Meter for the Control Panel

This is really just a simple re-write of the Slider Control. It seems doable to me, but I won't promise it, as I wouldn't want to delay 3.1 because of this.

So, this is what I'm proposing for an Isadora 3.1 release. Please read this over and offer your reactions.

Best Wishes,

Mark

-

hello,

1mil+ thanks to mark and the whole team for having the courage to explain the difficulties and a little bit of your company structure to the people.

we are happy about all the audio improvements you promise and all those you will make in the future. our biggest problem is not having to use other software for audio in our productions; the main thing is that our workshop participants, and the people who see how far our setup is able to go, are always a little bit sad about the fact that they have to buy another piece of software to route and minimally manipulate sound. but everything else they dream of doing as interactive art newbies, isadora is able to do, and it is absolutely great for it.

another time thank you for getting us on the development screen.

r.h.

-

Dear Mark and the crew,

it sounds fantastic. I cannot wait to hear them

thank you very much

Jean-François

-

I silently followed this thread, as I have always been waiting for extended audio features to give Isadora a more complete feeling, but I haven't had a specific feature request.

This all sounds very promising!

But I just wanted to extend this a little bit, even at the risk of telling you something you already have in mind because it is too obvious; better safe than sorry!

For the audio routings you mention multiple inputs to single output in a 'sequential' manner. But shouldn't it be even more important to have the possibility to route one source to several outputs?

This is especially important to keep in mind when 'building' the graphical matrix 'cross point view', as it needs to allow multiple crosspoints in one output row.

I guess this is the reason why most video matrix switcher remote software that visualizes cross points (like Lightware, see below) shows inputs and outputs the other way around (inputs on top, outputs on the right).

As I said, maybe you have this in mind already, and it might be just a small thing, but I thought it would be worth mentioning.
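To show what "one source to several outputs" means in practice, here is a minimal Python sketch that mixes one frame of source samples through a set of crosspoints. The function name `mix_frame` and the dictionary of linear gains are assumptions for illustration; this is the general crosspoint-matrix idea, not Isadora's routing code.

```python
def mix_frame(source_frame, crosspoints, outputs):
    """Mix one frame of source samples to `outputs` channels.

    `crosspoints` maps (source, output) to a linear gain; channels count from 1.
    """
    out = [0.0] * outputs
    for (source, output), gain in crosspoints.items():
        out[output - 1] += gain * source_frame[source - 1]
    return out


# Source 1 fans out to outputs 1, 2 and 3; sources 1 and 2 are summed on output 3.
crosspoints = {(1, 1): 1.0, (1, 2): 1.0, (1, 3): 0.5, (2, 3): 0.5}
print(mix_frame([0.5, 0.25], crosspoints, outputs=4))
# [0.5, 0.5, 0.375, 0.0]
```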

kindly

Dill

-

What I really "miss" at the moment is a "basic" player with the following specs:

- Different formats WAV/AIFF/FLAC

- Different bit depths / sample rates

- Multichannel output, but with volume for each routing

- Also multichannel output as one tie line (so going from 8 channels out to 8 channels in on another plugin is just one tie line)

Beyond that, using audio as a control element would be great in different plugins:

- Configurable multichannel/crosspoint mixer (Like Qlab)

- Analyzer

I also have an idea about a recurring "problem" within show control environments. I just had a whole conversation with Allen and Heath about this, when I did a show control setup with the SQ 5. If you want to make a fade over time, you need an envelope generator / curvature / scale value, followed by a CC send, and you trigger that CC for every value. That is really not of this time any more. It is fine for a couple of fades, but what if you have, let's say, 12 channels of audio and 20 mic inputs on your desk that you want to control? You have to build this 32 times. And if at some moment you want to fade all channels out to zero, think about the massive overkill on the system and the MIDI commands.

I suggested to Allen and Heath a system that defines only the fade time and value and puts that in a MIDI string as a command. Then you only send commands and not CCs all the time. That was a great idea, but unfortunately the desks would need a more powerful CPU to do these kinds of controls. It would also save massive time if you want to go "from" a certain value "to" a certain value (in this case I always "update" the input of the envelope generator). A lot of work.

So, this conversation with Allen and Heath made me think about how you could have a set of controls within Izzy to deal with this, which would save a lot of communicating back and forth:

- A multichannel mixer with volume control but also fade in/out trigger and fade time for in and out, per channel

- Multichannel tie lines

For example, I have a 16-track WAV sound file running with continuous soundscapes that I'd like to fade in and out at certain moments on selected tracks. In this case you just have 1 player with a 16-channel WAV and 1 multi tie line to the 16-channel mixer, and there you can define the controls. Let's say channel 1 fades in over 0.5 seconds but fades out over 4 seconds. Just set the fade time and trigger it. This can be done with collapsible controls, because for each channel you have VOLUME / TRIG FADE IN / TRIG FADE OUT / FADE IN TIME / FADE OUT TIME. It would be awesome if the manual volume setting could be altered as well, so that a triggered fade runs from that value. For rehearsals it is also great.

That solves your playback fades, and unfortunately there are no mixing desks at this moment that will do this kind of processing. They are limited to scene lists, mutes or MIDI CC.
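A minimal Python sketch of one such mixer channel is below, to show how "set the fade time and trigger it" differs from sending a value for every step. Everything in it is an assumption for illustration: the class name `ChannelFader`, the linear ramp, the 0.0 to 1.0 volume range and the use of seconds for time.

```python
class ChannelFader:
    """One mixer channel whose fades are defined by a target and a time."""

    def __init__(self, volume=0.0, fade_in_time=0.5, fade_out_time=4.0):
        self.fade_in_time = fade_in_time
        self.fade_out_time = fade_out_time
        self._start_value = self._target = volume
        self._start_time = 0.0
        self._duration = 0.0

    def volume_at(self, now):
        """Current volume, computed from the running fade (linear ramp)."""
        if self._duration <= 0 or now >= self._start_time + self._duration:
            return self._target
        progress = (now - self._start_time) / self._duration
        return self._start_value + (self._target - self._start_value) * progress

    def _fade_to(self, target, duration, now):
        # Start from wherever the channel is right now, even mid-fade.
        self._start_value = self.volume_at(now)
        self._target, self._duration, self._start_time = target, duration, now

    def set_volume(self, value, now):
        """Manual volume change; a later fade runs from this value."""
        self._fade_to(value, 0.0, now)

    def trigger_fade_in(self, now):
        self._fade_to(1.0, self.fade_in_time, now)

    def trigger_fade_out(self, now):
        self._fade_to(0.0, self.fade_out_time, now)


channel = ChannelFader(fade_in_time=0.5, fade_out_time=4.0)
channel.trigger_fade_in(now=10.0)
print(channel.volume_at(10.25))  # 0.5, halfway through the fade in
channel.trigger_fade_out(now=20.0)
print(channel.volume_at(22.0))   # 0.5, halfway through the fade out
```

Only the trigger and the fade times cross the patch; the intermediate values are computed by the channel itself.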

-

@mark: It sounds very promising what you intend to program for the next level of Isadora! I like every single improvement in the sound features you plan. I also think that the priorities you sketched are very reasonable.

A little note on all the improvements to come: I tried to build a Sound Player that has the outputs "position" and "loop end". I know for sure this comes years too late, but anyway: here is a "Sound Player plus" actor with outputs! It is built on top of the original Sound Player actor, combined with a timer and a couple of lines of JavaScript. Of course it's not perfect, but it works. It has the same inputs as the original Sound Player, except for the "play length" input, which I changed to "play stop". That helped to make the JavaScript file simpler.

Maybe one of you has some need for it while Isadora-3.1 is on the way...

-


Your plans for 3.1 audio upgrade sound great. I am particularly interested to see the outcome of the interactive audio routing system - because I can already imagine implementing this in my projects.

You haven’t mentioned integrating timecode or a waveform monitor for the Sound Player. Are either of these features on the cards?

Best wishes

Russell

-

Dear All,

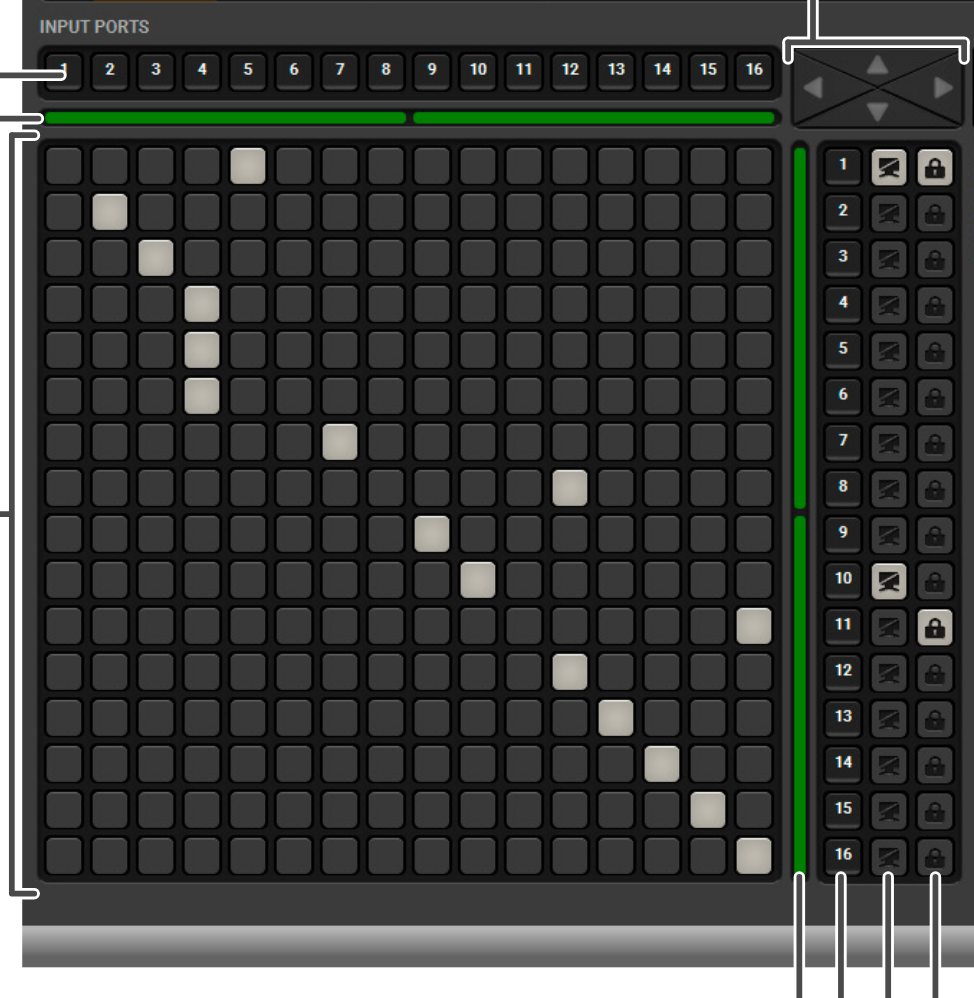

To whet your appetite, this is what I did over the holidays: 24, 16 and 8 bit AIFF or WAV files with any number of channels, and now the new Matrix Routing input shown below. Working well on macOS, now heading for Windows.

Happy New Year (almost)!

Mark

-

@mark that is great news !! Happy new year for you too (almost)

Maxi Wille

-

hello,

thank you for carrying on during christmastime.

do you think it´s possible to route the "speak text" actor also on windows?

thx.

r.h.

-

@mark looks fantastic.

happy new year

Jean-François

-

@deflost said:

do you think it´s possible to route the "speak text" actor also on windows?

Well, I would have to find a way to intercept the samples and then route them. I doubt that this is easy, which means I cannot assign a high priority to this given that it is a feature that not so many people use. Let's keep it in mind for a future version.

Best Wishes,

Mark

-

this would be great, because it´s one of the favorite actors by our kds workshops, they love it to let the machines speak!

thx.

r.h.

-

@deflost said:

this would be great, because it´s one of the favorite actors by our kds workshops, they love it to let the machines speak!

But do they really need to route it to a multichannel output?? Seems a bit sophisticated for kids.

Best Wishes,

Mark

-

not really, but sometimes it is a little bit depressing that there is no way to do left right or front back things with it!

thx.

r.h.

-

@deflost said:

not really, but sometimes it is a little bit depressing that there is no way to do left right or front back things with it!

Sorry... I read "kds workshops" as "kids workshops" -- I thought they were workshops for children.

-

you read absolutely right, children. most of them are around 8 to 12 or 16 to 20; we work a lot with them in cooperation with their schools.

we do every kind of "new media" stuff with them.

so in some situations, especially for theatre-like performance stuff, they are very particular about things having to be logical. so when they want to let the machine call out "come in", then please from the right side, and when the bad witch calls from outside at the back of the building, then of course they ask why we don´t use bluetooth or something like that... and so on.

but this way they learn a lot about physical audio mixing consoles and this kind of stuff.

so another thx for your work.

r.h.

-

Dear All,

I hope you're enjoying your jump into 2020.

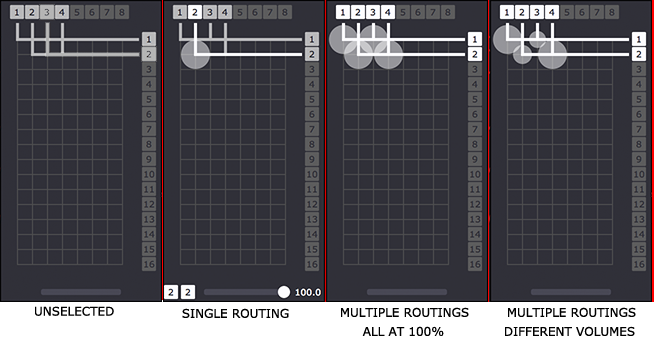

I'm exploring if and how the volume at the crosspoints of the Matrix Router should be displayed. On one hand, it's handy to see it, and for sure when you are adjusting the volume, the circle getting bigger/smaller gives you strong visual feedback about which item you're editing. For sure, when no routings are selected the volume circles should not be shown. But maybe it gets too cluttered when you make a multiple selection? I could make it so that the volume circle only shows when a single routing is selected.

I'd be curious to hear your reactions.

Also, as you can see, I've gotten this to be a bit more compact than you saw in the GIF I shared before. But I can also anticipate that having more than 16 channels of output is going to make for a very tall user interface element... do people use more than 16 channel output devices on a regular basis?

Best Wishes,

Mark