Output video frame data pixel by pixel

-

Thanks for the replies.

I am considering other options (Max, VVVV, etc.); however, I would prefer to stay in Isadora.

@Skulpture ... I started to play with Chopper late last night, and again I had trouble getting single pixels. It seemed that I always got YUV video out, even if I converted to RGB right before. As YUV, the smallest video out I could get was 1x2. Also, the pixel selection is based on a percentage of the video, right? I have been having a hard time getting a sharp selection of a single pixel using percentages.

Going to give it another try. -

Yes, I have just confirmed that I cannot get a 1x1 video stream from Chopper or Chop Pixels. The minimum is 1x2, and it converts to YUV in all cases. (I have YUV mode active, but I need this for other things.)

hmm. -

What about Chop pixels?

-

I need to test in non-YUV mode, but in my first test Chop Pixels with horz and vert set to 1 was still outputting a 1x2 video stream (YUV), even if I converted to RGB right before the chop.

Going to give it another quick try right now.

The results: yes, Chop Pixels will work rather well in combination with Measure Color for my purpose, but ONLY if I am not in YUV mode, which is currently a problem.

Maybe @Mark can shed some light on this. Is there any way to remain in RGB mode using Chop Pixels if the system is set to YUV? -

As you talk of vvvv, you are probably using Windows?

If not and you have the Core version of Isadora, you could explore the Image Pixel actor of Quartz Composer. -

Regarding the 1 x 2 limit: when using the YUV422 system (which is what Isadora uses when in YUV mode), there is no way to express an individual pixel. Instead, pixels are expressed in pairs as four 8-bit values: (u, y1, v, y2). Here y1 and y2 are the brightness of the two pixels, and u and v together express the color of both pixels. Thus, the YUV422 standard does not have the capability to express a single pixel.

Why YUV422? Because the whole point of YUV was to reduce the bandwidth as compared to RGB. YUV422 expresses two pixels in 32 bits; RGB requires 64 bits to do the same.

For those who are interested, you can read all about YUV [here](http://en.wikipedia.org/wiki/YUV#Converting_between_Y.27UV_and_RGB).

As mentioned above, when in RGB mode there is no problem cutting down to a 1 x 1 pixel.

Best Wishes,
Mark -
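To make the pairing described above concrete, here is a minimal Python sketch of packing two RGB pixels into one (u, y1, v, y2) group. The conversion coefficients are the common BT.601 full-range values, and averaging the chroma of the two pixels is one simple choice; both are assumptions for illustration, not Isadora's actual implementation, and the function names are hypothetical.

```python
def rgb_to_yuv(r, g, b):
    """Convert one 8-bit RGB pixel to (y, u, v).

    Assumption: BT.601 full-range coefficients, chroma offset by 128.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = -0.168736 * r - 0.331264 * g + 0.5 * b + 128
    v = 0.5 * r - 0.418688 * g - 0.081312 * b + 128
    return y, u, v


def clamp8(x):
    """Round to the nearest integer and clamp to the 0..255 range."""
    return max(0, min(255, int(round(x))))


def pack_pair(rgb1, rgb2):
    """Pack two neighbouring RGB pixels into 4 bytes: (u, y1, v, y2).

    Each pixel keeps its own brightness (y1, y2); the colour (u, v)
    is shared, here taken as the average of the two pixels (assumption).
    """
    y1, u1, v1 = rgb_to_yuv(*rgb1)
    y2, u2, v2 = rgb_to_yuv(*rgb2)
    u = (u1 + u2) / 2
    v = (v1 + v2) / 2
    return bytes([clamp8(u), clamp8(y1), clamp8(v), clamp8(y2)])


if __name__ == "__main__":
    packed = pack_pair((0, 0, 0), (255, 255, 255))
    print(list(packed), len(packed) * 8, "bits for two pixels")
```

Each call returns 4 bytes (32 bits) covering two pixels, so the smallest frame this layout can describe is two pixels wide.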

Mark, I fully understand the reasoning behind YUV and how it works. What surprised me is that converting the video stream to RGB directly before it enters the Chop Pixel actor didn't cause the actor to process the video as RGB; instead, it converted the video to YUV.

Perhaps it might be nice to have a behavior control on actors that support YUV to change their default behavior. -

So you're saying that the output of the YUV -> RGB actor was YUV? Or that the Chop Pixels actor converted it to YUV?

-

The Chop Pixel actor converted it. It accepted the RGB in but output YUV even when set to a size of 1x1.

-

Can't remember if I entered this as a bug?

-

Just confirmed that this is still the case in F33 on Windows.

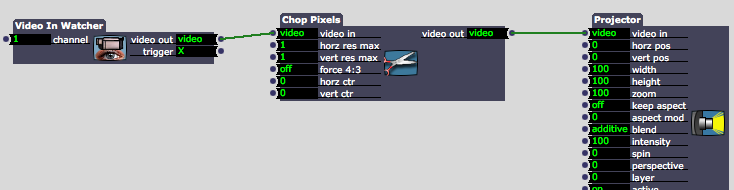

Attached is a simple patch that shows a 2x1 coming from Chop Pixels when in YUV mode, even though the stream is set to RGB right before. Will enter the bug now.

4ccad4-noisechop.izz -

Dear DusX,

Thanks for the bug report. Will follow up.

Best Wishes,
Mark -

I recently did this with the Measure Color actor with it set to 0% size in both directions. I wasn't trying to output each and every pixel though, and since its position is described by percentages, that might be a problem.

-

What does setting its size to 0% accomplish?

I suppose the other option would be to create a custom plugin.

@Mark, if making an actor (with the SDK in C++), is there a limit to the number of outputs I would be able to send per frame? (frame rate x cycle rate?)

Or would outputting another data format be faster, e.g. a comma-delimited list for each row of data?

I don't expect to work with large video files, even 80 x 60 px would be enough. -
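As a rough illustration of the comma-delimited idea, here is a minimal Python sketch that turns a frame into one string per row. It is format-only and says nothing about what the Isadora SDK allows; the frame representation (a list of rows of `(r, g, b)` tuples) and the function name are hypothetical.

```python
def rows_to_csv(frame):
    """Serialize a frame as one comma-delimited string per row.

    frame: list of rows, each row a list of (r, g, b) integer tuples.
    Returns a list of strings like "r,g,b,r,g,b,...".
    """
    return [
        ",".join(str(channel) for pixel in row for channel in pixel)
        for row in frame
    ]


if __name__ == "__main__":
    # An 80 x 60 black frame: 60 strings, each holding 80 * 3 = 240 values.
    frame = [[(0, 0, 0)] * 80 for _ in range(60)]
    lines = rows_to_csv(frame)
    print(len(lines), len(lines[0].split(",")))
```

At 80 x 60 this means 60 strings per frame instead of 14,400 separate values, which is the trade-off the question is asking about.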

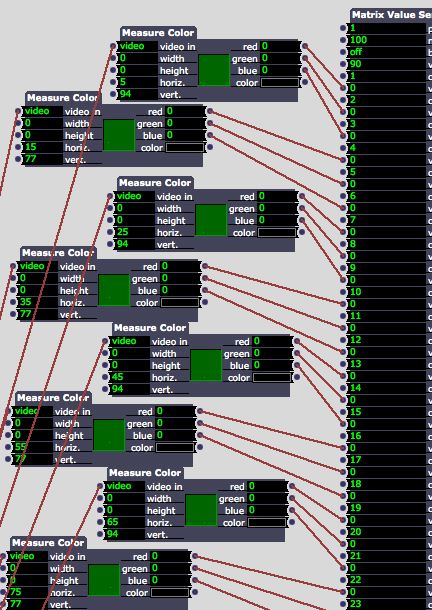

0% outputs a single pixel with the Measure Color Actor - I was using a grid of them to pixel map video to a grid of individual RGB LEDs.

-

Looking at the actor, I wonder if the help text for the input fields is correct?

It states that setting the hor and vert locations to 0 causes it to read data for a single pixel.

However, this should leave you stuck in the top left corner.

Seems that setting the width and height to zero should give the single pixel read, and then you can use the hor and vert positions to move your reader around.

The actor's display shows it working this way as well, so it seems the help text must be wrong. -

You're right - I forgot about that. Horiz and Vert are percentage positions starting in the upper-left corner; it samples one pixel when width and height are set to 0. You can see in the screen cap that the small feedback window shows one pixel being sampled in different positions, depending on the H and V settings. -Rick
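For anyone trying to land on an exact pixel with percentage inputs, one approach is to aim at the center of the pixel rather than its edge. A minimal Python sketch, assuming (not confirmed for Measure Color) that 0% to 100% spans the full frame width or height linearly; the function names are hypothetical.

```python
def pixel_center_percent(index, size):
    """Percentage position of the center of pixel `index` (0-based)
    along an axis that is `size` pixels long.

    Assumption: 0% is the left/top edge and 100% the right/bottom edge.
    """
    return (index + 0.5) / size * 100.0


def percent_to_pixel(percent, size):
    """Inverse mapping: which pixel a percentage position falls in."""
    return min(size - 1, int(percent / 100.0 * size))


if __name__ == "__main__":
    width, height = 80, 60
    # Percent positions that would scan the first three pixels of row 0.
    for x in range(3):
        print(x, pixel_center_percent(x, width), pixel_center_percent(0, height))
```

Aiming at the center leaves half a pixel of margin on either side, so small rounding differences in the percentage should still select the intended pixel.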

-

Thanks Rick. I was just pointing out the error in the help docs.

I once tried to use this method to read each pixel, but found the positioning rather difficult.

I have been (like you I guess) using it to read a matrix of values from video and map to DMX. It works well.

I just haven't got it down to scanning every pixel; I'm defining tiled regions.
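The tiled-region approach can be sketched outside Isadora as follows: split the frame into a grid, average each tile, and flatten the result into a list of 0..255 channel values. This is only an illustration of the idea in Python, not how Measure Color works internally; the frame representation and function name are hypothetical, and the grid is assumed to be no finer than the frame itself.

```python
def tile_averages(frame, cols, rows):
    """Average each tile of a frame and return a flat channel list.

    frame: list of rows, each row a list of (r, g, b) integer tuples.
    cols, rows: grid size; assumed cols <= width and rows <= height.
    Returns [r0, g0, b0, r1, g1, b1, ...] in row-major tile order.
    """
    height = len(frame)
    width = len(frame[0])
    out = []
    for ty in range(rows):
        y0 = ty * height // rows
        y1 = (ty + 1) * height // rows
        for tx in range(cols):
            x0 = tx * width // cols
            x1 = (tx + 1) * width // cols
            count = (y1 - y0) * (x1 - x0)
            sums = [0, 0, 0]
            for y in range(y0, y1):
                for x in range(x0, x1):
                    for c in range(3):
                        sums[c] += frame[y][x][c]
            # Integer average keeps each value in the 0..255 range.
            out.extend(s // count for s in sums)
    return out


if __name__ == "__main__":
    frame = [[(255, 0, 0)] * 80 for _ in range(60)]
    channels = tile_averages(frame, 8, 8)
    print(len(channels))  # 8 * 8 tiles * 3 channels
```

An 8 x 8 grid of RGB tiles needs 192 channels, which fits within a single 512-channel DMX universe. Setting the grid equal to the frame size (80 x 60) degenerates to reading every pixel.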