I'm not sure I understand exactly what you want, but if it is something like this:

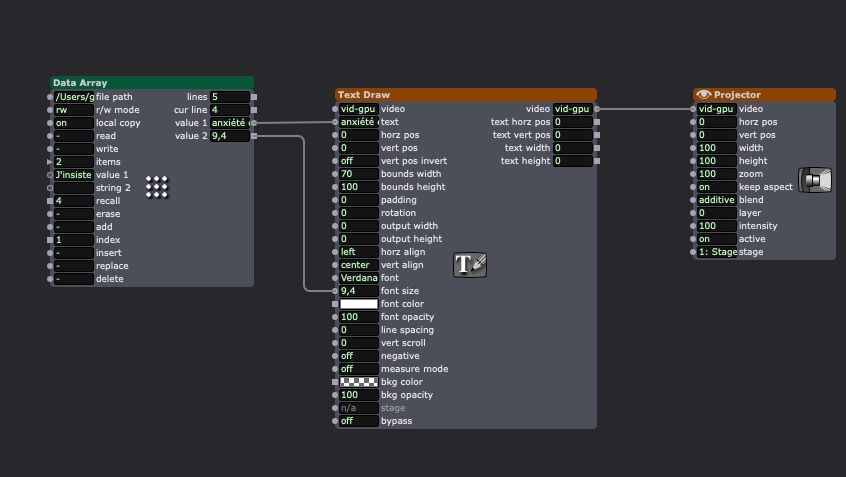

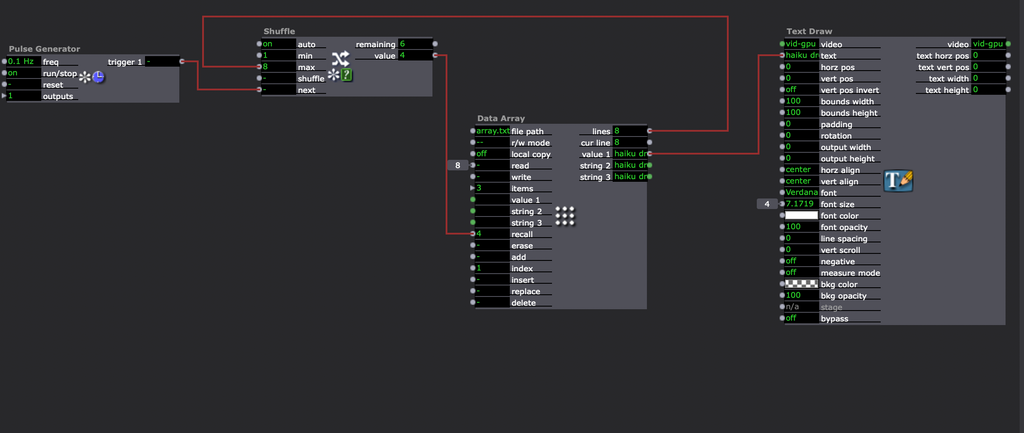

You only need to set "bounds width" and "bounds height" in the Text Draw actor to limit the text to a square inside the circle, and then choose the correct text size. If you want to change the text size for each new line of text, you can add a size for each line in your file, after the text and separated from it by a tab (from the actor description: "The data may be stored on disk in a standard, tab-separated text file"). In the Data Array actor, add a second value and link it to the font size input of the Text Draw actor. The size will then change with each new line.
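As a rough way to pre-compute that second column, here is a minimal Python sketch that writes each line of text followed by a tab and a font size. It assumes the text area is the largest square that fits inside the circle (side = diameter / √2) and estimates glyph width and line height with fixed ratios (`CHAR_WIDTH_RATIO`, `LINE_HEIGHT_RATIO`). Those ratios and all function names are hypothetical, not Isadora values, so tune them against what the Text Draw actor actually renders. The word wrapping here is only an estimate of how the actor will break the text inside its bounds.

```python
import io
import math
import textwrap

# Hypothetical heuristics (not Isadora settings): tune them by eye for your font.
CHAR_WIDTH_RATIO = 0.6   # average glyph width as a fraction of the font size
LINE_HEIGHT_RATIO = 1.2  # line spacing as a fraction of the font size


def square_side(circle_diameter: float) -> float:
    """Side of the largest square that fits inside a circle: d / sqrt(2)."""
    return circle_diameter / math.sqrt(2)


def fits(text: str, size: int, box: float) -> bool:
    """Estimate whether `text`, word-wrapped, fits in a box x box square at `size`."""
    chars_per_line = int(box // (size * CHAR_WIDTH_RATIO))
    if chars_per_line < 1:
        return False
    lines = textwrap.wrap(text, width=chars_per_line, break_long_words=False)
    # A single word longer than the line width cannot be wrapped, so check it too.
    widest = max((len(line) for line in lines), default=0)
    return widest <= chars_per_line and len(lines) * size * LINE_HEIGHT_RATIO <= box


def best_size(text: str, box: float, max_size: int = 72, min_size: int = 8) -> int:
    """Largest whole font size whose estimated layout fits the box."""
    for size in range(max_size, min_size - 1, -1):
        if fits(text, size, box):
            return size
    return min_size  # fall back to the smallest size if nothing fits


def write_tsv(lines, circle_diameter: float, out) -> None:
    """Write one 'text<TAB>size' row per line (assumes the text contains no tabs)."""
    box = square_side(circle_diameter)
    for text in lines:
        out.write(f"{text}\t{best_size(text, box)}\n")


haiku = [
    "An old silent pond",
    "A frog jumps into the pond, splash! Silence again.",
]
buf = io.StringIO()
write_tsv(haiku, 400, buf)  # 400 = circle diameter, in the same units as the bounds
print(buf.getvalue(), end="")
```

Writing to a file instead of `io.StringIO` gives a tab-separated .txt that the Data Array actor can read, with the size in the second column. Shorter lines get larger sizes and longer lines get smaller ones.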

Best regards,

Jean-François

Is there any way for Isadora to dynamically change the size and/or formatting/wrapping of text based on the length of a line of text?

I've built a simple patch that uses an array actor to send a line of haiku to 3 separate Text Draw actors. The aim is that the .txt document it is based on can be updated dynamically as new lines of text are entered. Of course, the number of characters will vary a bit.

I'm looking for a way to calculate the optimum text formatting for a size-limited "circular text zone", preferably by adding a 'carriage return', but it could also be done by reducing the text size.

Can this be done?

I am still on Izzy 3, using a Mac Studio and/or an early M1 MacBook Pro.

Thanks

J1M

@rwillats A dedicated wifi network that you control is the only way to go. I like UniFi products: they are designed to be ceiling-mounted and can easily be attached to a clamp to hang from a lighting bar or truss.

Get the biggest bandwidth you can - connect all computers via LAN and use the config software to scan for the best free frequencies.

The UniFi U7 comes in Pro/Max and Long-Range versions if you need extra range.

You will want a controller and router with that too; the Cloud Gateway Ultra is the smallest and cheapest.

Together these give the flexibility, control and throughput you need to have reliable wireless on stage.

BUT I also doubt you will run into subnet issues unless there are some really big config problems or the devices are connecting to different networks. Many theaters have a public wifi, an admin wifi and a tech wifi; on all the devices, make sure you forget all of them except the tech wifi you are using. Next, turn off SIM-based internet: iPhones have a feature (Wi-Fi Assist) that switches to the SIM card's data connection if it thinks the internet there is faster, and this will mess up your wifi network too. Depending on the config of the in-house system, you can set manual IP addresses on your devices and have them reserved in the in-house wifi against their MAC addresses (be sure to turn off the wandering MAC address feature on iOS/macOS).

Getting any settings changed on the in-house network infrastructure is often impossible; ergo, it is way easier to bring your own isolated network that you can tune to your needs.

Fred

Hi

I may not be able to answer your query regarding subnets; I am not 100% sure what you mean here.

My take on this is that you are using an existing installed wifi network not of your design. There may be many options in this set up that do not play well with your intentions.

Would it be possible for you to use your own router/access point, dedicated only to your needs? My thought would be to install the router/AP as near to the iPhone as necessary, with a Cat 6a cable run directly to the laptop or to a switch feeding the laptop. This way you could set all your IP/subnet ranges as you need.

I think you should reconsider using the theatre's wifi network and concentrate on creating your own. Depending on the actual items, it might not be that expensive.

There are plenty of users here who could offer advice on brands and setups. Some routers are better than others for connectivity and performance.

iPhones are painful because of their incessant need to dial up the mothership. This is one of the reasons I gave up on them. They will constantly hop off your bespoke network to find a way to dial home. It is very annoying, to be honest, and I have wasted so much time forcing the device back onto my own network.

It looks like you cannot edit/control the wifi source in the building, so you are better off controlling everything through your own network. It also sounds like you are connecting via DHCP and there are multiple subnets on the network, which is probably adding to the confusion...?

You should be giving yourself a static range for both devices. Without knowing any particulars, it might be a great deal easier to configure your own little WiFi set up here.
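To check whether two devices actually share a subnet, compare the IP address and subnet mask shown in each device's Wi-Fi details. The minimal Python sketch below does that comparison with the standard `ipaddress` module. The function name and the addresses are hypothetical examples, and it only checks the numbers you type in; it does not query the network.

```python
import ipaddress


def same_subnet(ip_a: str, ip_b: str, netmask: str) -> bool:
    """True if both IPv4 addresses fall in the same network for the given mask."""
    net_a = ipaddress.ip_interface(f"{ip_a}/{netmask}").network
    net_b = ipaddress.ip_interface(f"{ip_b}/{netmask}").network
    return net_a == net_b


# Hypothetical addresses: substitute what each device shows in its Wi-Fi settings.
laptop = "192.168.1.20"
phone = "192.168.1.35"
print(same_subnet(laptop, phone, "255.255.255.0"))           # True
print(same_subnet(laptop, "192.168.2.35", "255.255.255.0"))  # False
print(same_subnet(laptop, "192.168.2.35", "255.255.0.0"))    # True
```

The last two lines show why the mask matters: the same pair of addresses is split across two networks with a /24 mask but shares one with a /16 mask. With your own router, giving both devices static addresses inside one range makes the first case the only one you can end up in.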

Hope this helps.

eamon

I stopped using Isadora partly because of this unavailable option that I use in every project. I regularly check to see if the blur handling in mapping has been improved. And the years go by, and I'm surprised every time that it hasn't been implemented. It's sad; I love this software.

@bvg73 Thanks very much for the help. After trying it all on my iMac without success, I switched to my laptop with the M1 chip and it made all the difference. Since they both run the same operating system, I'm guessing the chip is what made the difference? I'm now hooked up and working, and I'm figuring out the Matrix Value Send actor and its relation to the channels on the Chauvet, which is a bit counterintuitive at this point, but I'm getting it sorted. Again, thanks!

Hi all,

I'm working on a project that requires live video to be sent from a small set element that moves on and off stage to the operating laptop at the back of the theatre. My plan has been to use NDI (from NDI HX on a phone) over the in-house WiFi reserved for the theatre's tech. The trouble is, sometimes the feed shows up and is stable, and sometimes I can't find the NDI source at all. I believe this might be happening because their WiFi has multiple subnets (?). Is there a way to guarantee the operating computer and this phone are on the same subnet? If it helps, they're both Apple products with the same Apple ID.

Best,

Rory

@woland Thanks - I'll find a work-around...

I will log this as a feature request though.

I don't believe this feature has been added yet. It was going to be added if there was immediate interest from many members of the community, but that didn't happen so we focused our development efforts elsewhere. It's probably possible to roll your own through the use of Pythoner or the Isadora SDK (as most things tend to be), but it's not a native feature at this time to the best of my knowledge.