@michel said:

This would be my approach: define the path to a file that will be automatically generated when a write operation is triggered. If the day changes, the counter will reset to zero and a new line will be added to the top of the text file.

I recommend this method as well. I've used it for many different things in the past.

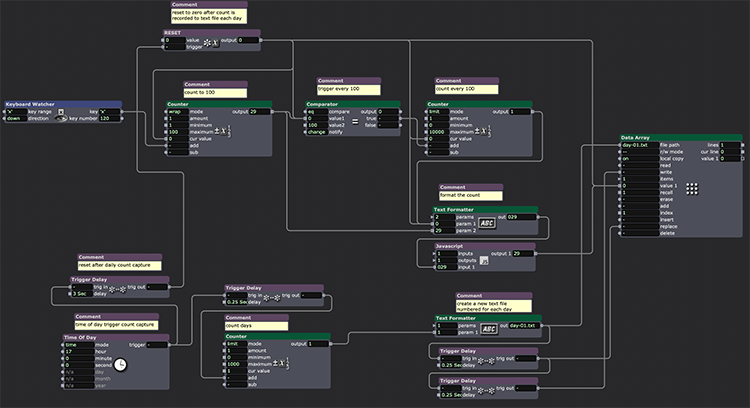

I suggest a similar approach to @Michel's. I looked at creating a daily text file: at a specified hour, the current count is recorded to the Data Array actor and the counter is then reset to zero. Have a look if it's useful.

Michel's approach of capturing sequentially to a single text file is perhaps a bit more efficient. I am not sure why I wanted to create separate text files for each day!

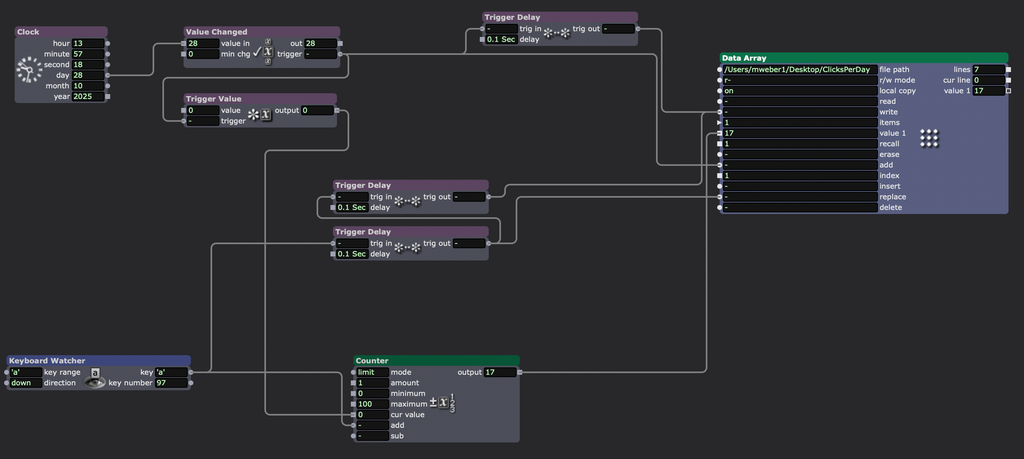
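For comparison, the per-day-file variant with a fixed cutoff hour could look like this in Python, outside Isadora. It is a sketch under assumptions, not the patch in the screenshot: the class name `HourlyCutoffCounter`, the 22:00 default and the `count-YYYY-MM-DD.txt` file naming are hypothetical, and `tick()` stands in for whatever clock trigger drives the recording.

```python
from datetime import datetime
from pathlib import Path
from typing import Optional


class HourlyCutoffCounter:
    """Save the count once a day at a set hour, then reset it to zero.

    Writes one file per day, e.g. "count-2024-05-01.txt" (hypothetical name).
    """

    def __init__(self, folder, cutoff_hour: int = 22) -> None:
        self.folder = Path(folder)
        self.cutoff_hour = cutoff_hour  # hypothetical default: 22 = 10 pm
        self.count = 0
        self.last_saved = None          # date of the most recent save

    def press(self) -> None:
        """Call once per key press."""
        self.count += 1

    def tick(self, now: datetime) -> Optional[Path]:
        """Call periodically; saves and resets once the cutoff hour is reached.

        Returns the path written, or None if nothing was saved this time.
        """
        today = now.date()
        # Too early, or today's count has already been saved.
        if now.hour < self.cutoff_hour or self.last_saved == today:
            return None
        self.folder.mkdir(parents=True, exist_ok=True)
        path = self.folder / f"count-{today.isoformat()}.txt"
        path.write_text(f"{self.count}\n", encoding="utf-8")
        # Reset for the next day.
        self.count = 0
        self.last_saved = today
        return path
```

One trade-off of this design: if the machine is switched off before the cutoff hour, that day's count is never saved, whereas writing the count to the file each time it changes keeps the file current at all times.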

This would be my approach: define the path to a file that will be automatically generated when a write operation is triggered. If the day changes, the counter will reset to zero and a new line will be added to the top of the text file.
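The same logic can be sketched in Python to show what the file ends up containing. This is a minimal sketch, not Michel's Isadora patch: the class name `DailyPressLog`, the file name and the `date<TAB>count` line format are hypothetical. Tab-separated lines have the side benefit that a spreadsheet app can import the file as two columns.

```python
from datetime import date
from pathlib import Path
from typing import Optional


class DailyPressLog:
    """Keep one line per day in a text file, newest day at the top.

    Each line is "YYYY-MM-DD<TAB>count" (hypothetical format).
    """

    def __init__(self, path) -> None:
        self.path = Path(path)

    def _read_lines(self) -> list:
        # No file yet: it is created by the first write in record_press().
        if not self.path.exists():
            return []
        return self.path.read_text(encoding="utf-8").splitlines()

    def record_press(self, today: Optional[date] = None) -> int:
        """Count one press for `today` and return that day's total so far."""
        if today is None:
            today = date.today()
        stamp = today.isoformat()
        lines = self._read_lines()
        if lines and lines[0].split("\t")[0] == stamp:
            # Same day: bump the count on the top line.
            count = int(lines[0].split("\t")[1]) + 1
            lines[0] = f"{stamp}\t{count}"
        else:
            # New day (or empty file): the count restarts from zero,
            # so this press makes it 1, and the new line goes on top.
            count = 1
            lines.insert(0, f"{stamp}\t{count}")
        self.path.write_text("\n".join(lines) + "\n", encoding="utf-8")
        return count
```

Calling `DailyPressLog("presses.txt").record_press()` once per key press is enough; the whole file is rewritten each time, which is fine at the press rates of a typical installation.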

If the file is saved in a folder that is synced with a cloud service, you can read it from anywhere.

Best Michel

Hopefully the previous steps help you get a successful request from metalpriceapi.com.

What do you mean by the notification process?

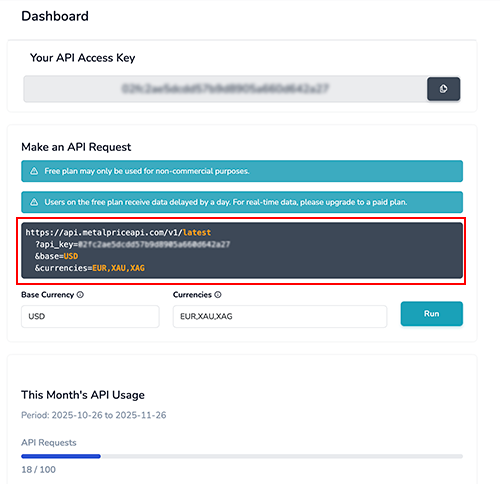

Sorry to hear you are having difficulty getting a successful data stream. I am going to assume you have signed up for the metalpriceapi.com subscription. When I signed up, the access URL was preconfigured in the notification process, and I had to copy and paste it into the ‘Get URL text’ actor module. BUT the URL only works if the address string contains no extra spaces, gaps or invisible characters. The following screen grabs show how unseen gaps and spaces may need to be deleted to 'tighten up' the URL after copying it from the metalpriceapi.com website.

1. Below is a screen grab of the metalpriceapi.com dashboard that shows my api_key. The grey box outlined in red is preconfigured with my api_key, so I can copy the address string directly from there.

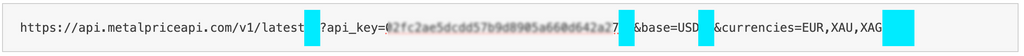

2. BUT when I copy and paste it, the copied text also includes spaces, gaps and hidden characters that appear to make the request fail in Isadora.

3. It will work once you clean up the address by deleting any spaces, including extra tabs or line returns at the end of the address string.
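The clean-up in step 3 can also be done with a short script before pasting the URL into Isadora. A minimal Python sketch, assuming you only need to strip unwanted characters and not validate the key: it removes all whitespace (safe, because a valid URL cannot contain literal spaces) plus invisible Unicode format characters such as zero-width spaces and byte-order marks. The function name `clean_pasted_url` and the example URL are hypothetical placeholders, not the real metalpriceapi.com endpoint.

```python
import unicodedata


def clean_pasted_url(raw: str) -> str:
    """Remove whitespace and invisible characters picked up when copying a URL.

    Drops every Unicode whitespace character (spaces, tabs, line breaks,
    non-breaking spaces) and every "format" character (category Cf),
    which covers zero-width spaces and the byte-order mark.
    """
    return "".join(
        ch for ch in raw
        if not ch.isspace() and unicodedata.category(ch) != "Cf"
    )
```

To see what was hiding in a pasted string, `print(repr(raw))` shows tabs, line breaks and characters like `\u200b` explicitly.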

I have tried to figure it out, but when I use this method I just get this message: "User did not supply an access key or supplied an invalid access key. Please sign up for a FREE API Key at metalpriceapi.com"

Hi all

I've created a patch for an installation where I want to extract the number of times a certain key is pressed, and ideally I want to store this info somewhere other than in Izzy. The key is currently linked to a Counter actor.

I'd appreciate thoughts on a very simple, light-touch way for someone to access just that information for each day the installation is open. It doesn't need to be accessed remotely (although that would be a bonus), but the machines running the install aren't currently on the internet or any sort of remote network.

The ideal scenario would be that someone opens a spreadsheet or Word doc on the install machine that has populated itself with how many times a particular button has been pressed each day.

Thanks in advance!

Simon